Given all the press surrounding artificial intelligence (AI), it's easy to be confused. After all, if you believe everything you read, you'd think we're practically in an AI-controlled world already, and it's only a matter of time before the machines take over.

Except, well, a quick reality check will easily show that that perspective is far from the truth. To be sure, AI has had a profound impact on many different aspects of our lives---from smart personal assistants to semi-autonomous cars to chatbot-based customer service agents and much more---but the overall real-world influence of AI is still very modest.

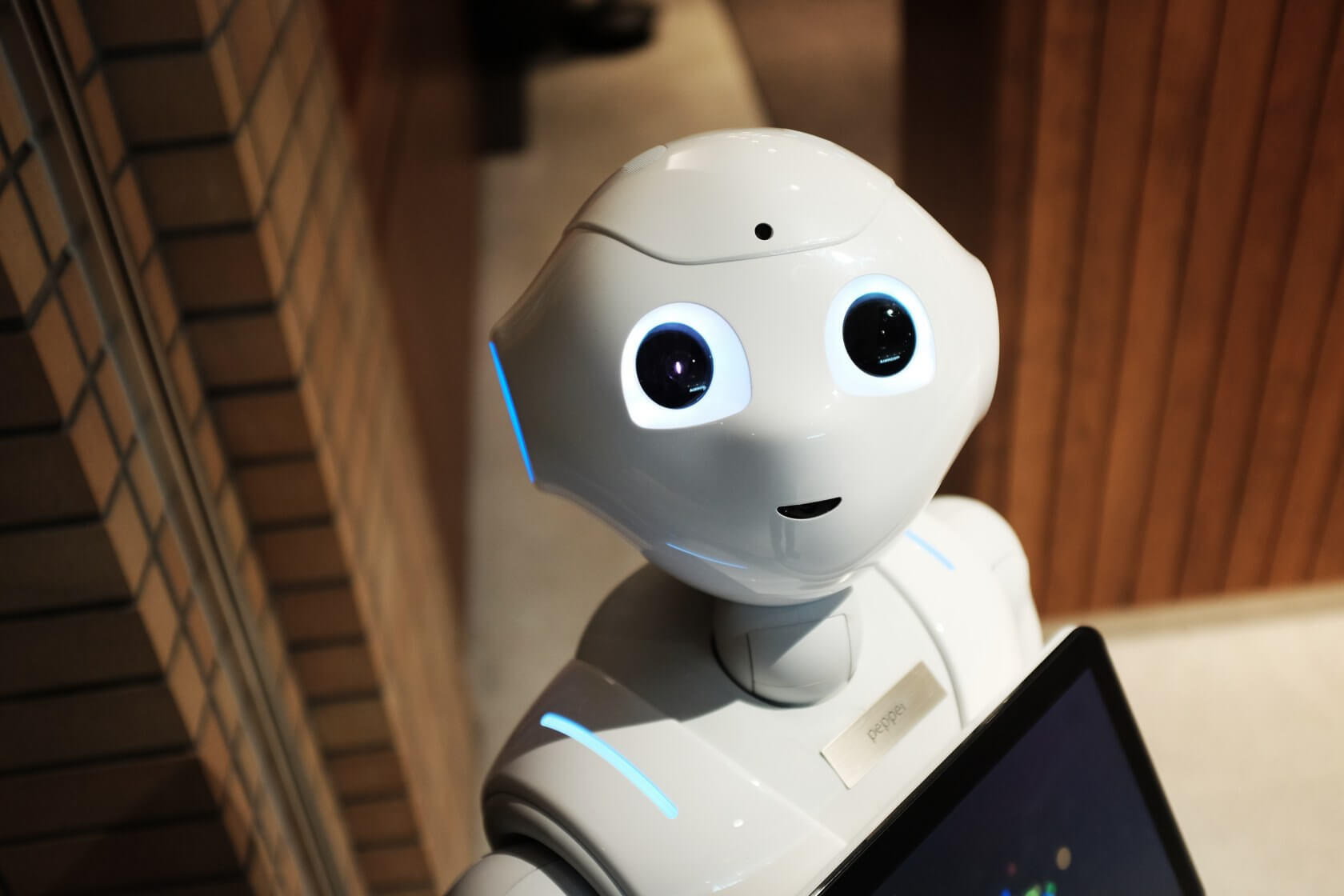

Part of the confusion stems from a misunderstanding of AI. Thanks to a number of popular, influential science fiction movies, many people associate AI with a smart, broad-based intelligence that can enable something like the nasty, people-hating world of Skynet from the Terminator movies. In reality, however, most AI applications of today and the near future are very practical---and, therefore, much less exciting.

Leveraging AI-based computer vision on a drone to notice a crack on an oil pipeline, for example, is a great real-world AI application, but it's hardly the stuff of AI-inspired nightmares. Similarly, there are many other examples of very practical applications that can leverage the pattern recognition-based capabilities of AI, but do so in a real-world way that not only isn't scary, but frankly, isn't that far advanced beyond other types of analytics-based applications.

Even the impressive Google Duplex demos from the company's recent I/O event may not be quite as awe-inspiring as they first appeared. Among many other issues, it turns out Duplex was specifically trained to just make haircut appointments and dinner reservations---not to book doctor's appointments, coordinate a night out with friends, or handle any of the multitude of other real-world scenarios that the voice assistant-driven phone calls in the Duplex demo implied were possible.

Most AI-based activities are still extraordinarily literal. So, if there's an AI-based app that can recognize dogs in photos, for example, that's all it can do. It can't recognize other animal species, let alone distinct varieties, or serve as a general object detection and identification service. While it's easy to presume that applications that can identify specific dog breeds offer similar intelligence across other objects, it's simply not the case. We're not dealing with a general intelligence when it comes to AI, but a very specific intelligence that's highly dependent on the data that it's been fed.
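To make that point concrete, here's a deliberately tiny sketch of a "dog recognizer." It isn't how any real product works: the two breeds, the made-up ear-length and weight measurements, and the `classify` helper are all assumptions chosen for illustration, and the nearest-centroid approach stands in for whatever model a real app would use. What it shows is that a model can only answer with the labels it was trained on.

```python
from math import dist

# Hypothetical training data: (ear_length_cm, weight_kg) per breed.
# The numbers are invented purely for illustration.
TRAINING_DATA = {
    "beagle": [(12.0, 10.0), (13.0, 11.0), (12.5, 9.5)],
    "great_dane": [(15.0, 60.0), (16.0, 65.0), (15.5, 70.0)],
}


def centroid(points):
    """Average each feature across the examples for one label."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


# "Training" here is just computing one average point per known label.
CENTROIDS = {label: centroid(pts) for label, pts in TRAINING_DATA.items()}


def classify(features):
    """Return the trained label whose centroid is closest to the input."""
    return min(CENTROIDS, key=lambda label: dist(CENTROIDS[label], features))


# A large dog lands on the expected label.
print(classify((15.0, 62.0)))

# A house cat (short ears, about 4 kg) is still forced into a dog label,
# because "cat" was never part of the data the model was fed.
print(classify((6.0, 4.0)))
```

Nothing in the sketch can ever say "that's not a dog." It has no concept outside the two labels it was given, which is the kind of literalness I'm describing.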

I point this out not to denigrate the incredible capabilities that AI has already delivered across a wide variety of applications, but simply to clarify that we can't think about artificial intelligence in the same way that we do about human-type intelligence. AI-based advances are amazing, but they needn't be feared as a near-term harbinger of crazy, terrible, scary things to come. While I'm certainly not going to deny the potential to create some very nasty outcomes from AI-based applications in a decade or two, in the near and medium-term future, they're not only not likely, they're not even technically possible.

Instead, what we should concentrate on in the near term is the opportunity to apply the very focused capabilities of AI to important (but not necessarily groundbreaking) real-world challenges. This means things like improving the efficiency or reducing the fault rate of manufacturing lines, or providing more intelligent answers to our smart speaker queries. There are also more important potential outcomes, such as more accurately recognizing cancer in X-rays and CAT scans, or helping to provide an unbiased decision about whether or not to extend a loan to a potential banking customer.

Along the way, it's also important to think about the tools that can help drive a faster, more efficient AI experience. For many organizations, that means a growing concentration on new types of compute architectures, such as GPUs, FPGAs, DSPs, and AI-specific chip implementations, all of which have been shown to offer advantages over traditional CPUs in certain types of AI training and inferencing-focused applications. At the same time, it's critically important to look at tools that can offer easier, more intelligible access to these new environments, whether that be software platforms like Nvidia's CUDA for GPUs, National Instruments' LabVIEW tool for programming FPGAs, or other similar tools.

Ultimately, we will see AI-based applications deliver an incredible amount of new capability, the most important of which, in the near term, will be to make smart devices actually "real-world" smart. Way too many people are frustrated by the lack of "intelligence" on many of their digital devices, and I expect many of the first key advances in AI to be focused on these basic applications. Eventually, we'll see a wide range of very advanced capabilities as well, but in the short term, it's important to remember that the phrase artificial intelligence actually implies much less than it first appears to.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting and market research firm. You can follow him on Twitter @bobodtech. This article was originally published on Tech.pinions.