What just happened? It's no secret that deepfake videos are becoming increasingly realistic, which can be a problem when it comes to politics and manipulated porn videos. To address the situation, the state of California has enacted two laws that push back against such clips.

Last week, California Gov. Gavin Newsom signed AB 730. The law makes it illegal to distribute manipulated videos designed to discredit a political candidate and deceive voters within 60 days of a general election.

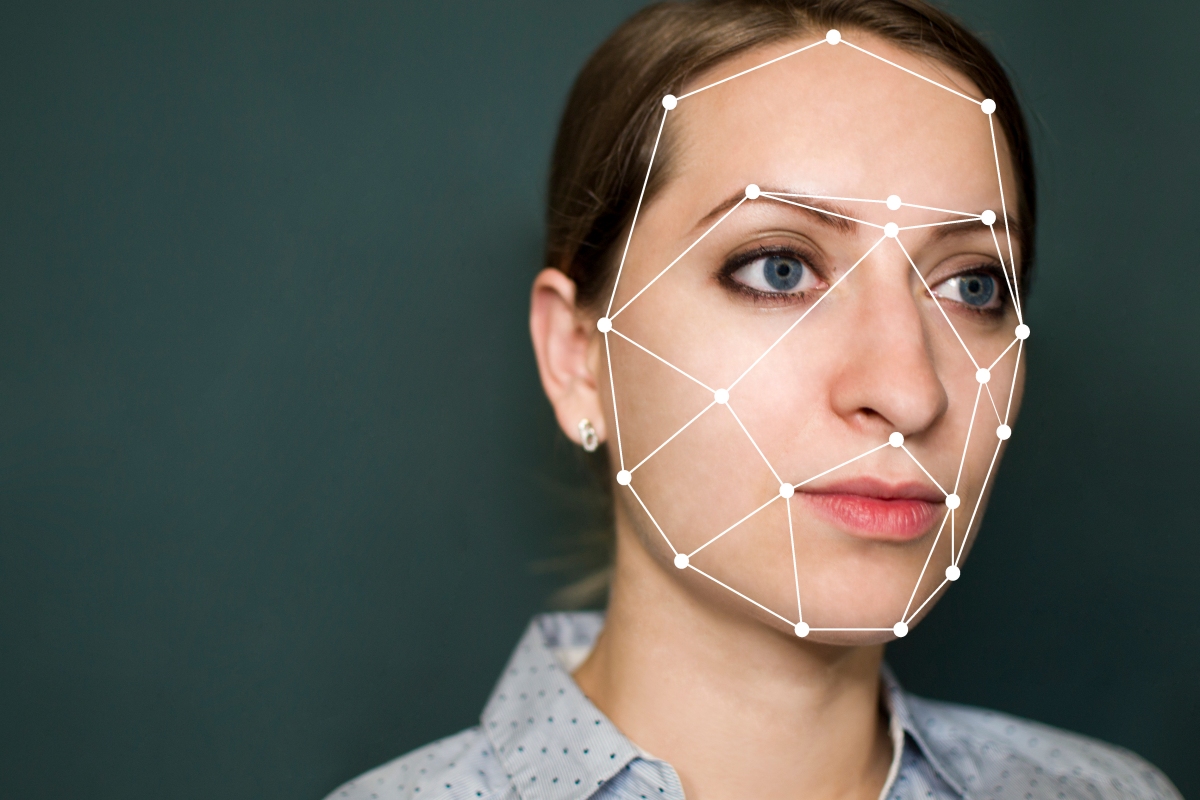

We've already seen how deepfakes, which involve manipulating images and videos using machine learning, can be used to put words in the mouths of politicians. The video of Barack Obama voiced by filmmaker Jordan Peele is quite convincing, and the clip in which House Speaker Nancy Pelosi was made to appear drunk and slurring her words caused controversy when Facebook refused to remove it.

Deepfakes originally rose to prominence when people started digitally replacing porn actresses' faces with those of Hollywood stars. With AB 602, also signed by Newsom, California residents will be able to sue anyone who puts their image into a pornographic clip without their consent.

"Voters have a right to know when video, audio, and images that they are being shown, to try to influence their vote in an upcoming election, have been manipulated and do not represent reality," said California Assembly member Marc Berman, author of AB 730 and AB 602. "In the context of elections, the ability to attribute speech or conduct to a candidate that is false - that never happened - makes deepfake technology a powerful and dangerous new tool in the arsenal of those who want to wage misinformation campaigns to confuse voters."

In an interview last month, Hao Li, a deepfakes pioneer and associate professor of computer science at the University of Southern California, said "perfectly real" deepfake videos are just six months away.