Why it matters: In an effort to balance encouraging innovation in artificial intelligence against preventing companies from misusing it, the European Commission will soon propose a set of regulations that will almost certainly raise tensions with the US, at a time when the region already lags behind the rest of the world in the AI race.

Last year, the European Commission revealed its plans for a "generational project" that's supposed to reduce the region's dependence on outside companies for digital products and services. Various proposals are still up for public debate, but most of them revolve around a new digital market policy that will have wide-ranging implications for tech giants like Google, Amazon, Facebook, and Microsoft, as well as all the other companies that collect enormous amounts of data to feed their AI systems.

An official announcement of the proposed rules is expected next week, but thanks to a leaked document obtained by Politico, we now have a preliminary look at what those rules might be. According to the 81-page draft, the European Commission is looking to outright ban "high risk" AI systems that don't meet certain criteria. For instance, the document specifies that "indiscriminate surveillance of natural persons should be prohibited when applied in a generalized manner to all persons without differentiation."

Companies that fail to comply with the criteria could be fined up to four percent of their annual turnover or €20 million ($23.95 million).

The Commission also wants to outlaw social scoring systems like the one used in China, a practice widely considered to violate basic human rights. Beyond that, any AI that can harm European citizens by manipulating their opinions or decisions, as well as any AI that can be used for mass surveillance, will be classified as "contravening to Union values" and banned. There are some exceptions, however, which would allow authorities to fight crime and terrorism using facial recognition systems.

Deepfakes are also in the Commission's crosshairs, but not to the same degree as other uses of artificial intelligence. The technology can make very convincing alterations to images and videos, and existing detection tools are nowhere near sophisticated enough to catch such content and flag or remove it before it goes viral.

Private companies such as Facebook and Microsoft have been partnering with universities to improve these tools. The European Commission, however, doesn't want to ban deepfakes altogether. Instead, it will allow them as long as they are marked as "artificially created or manipulated." Even that obligation "shall not apply where necessary for the purposes of safeguarding public security [and other prevailing public interests] or for the exercise of a legitimate right or freedom of a person and subject to appropriate safeguards for the rights and freedoms of third parties."

From the leaked draft, it appears that the EU doesn't think this issue warrants more than a string of tiny text accompanying almost every deepfake. Some may argue there are plenty of legitimate uses for the technology behind deepfakes, such as entertainment, bringing back long-dead personalities, or the protection of one's privacy online. However, the vagueness of the proposal does raise some questions about what will be done to prevent misuse, as well as abuse by government agencies.

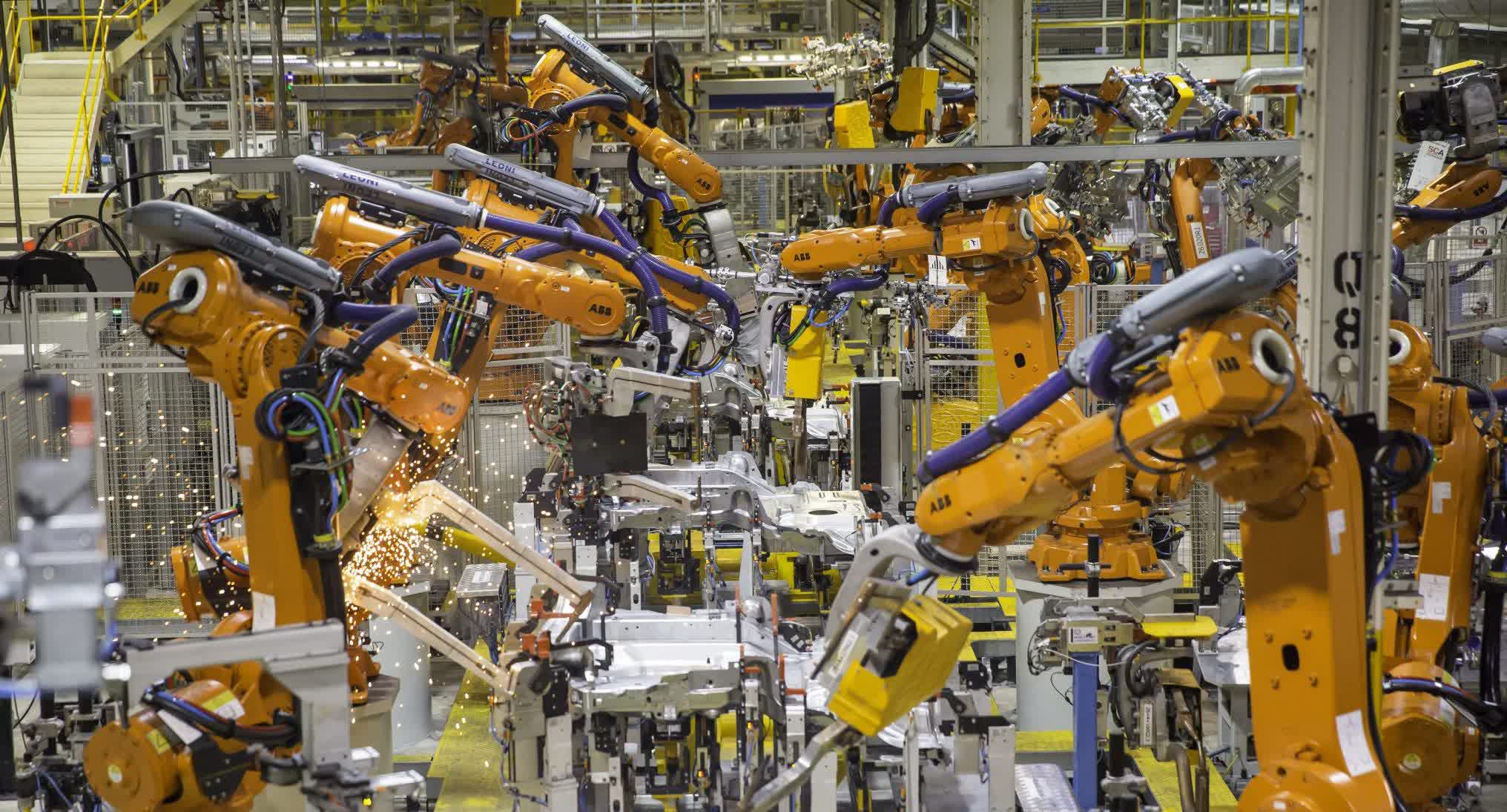

On the other hand, Europe intends to welcome the development of AI systems that aid manufacturing, make the energy grid more efficient, power self-driving cars, enable remote surgery, or model climate change and medical drugs. Additionally, the draft proposes the creation of a European Artificial Intelligence Board as a dedicated oversight mechanism for the new rules.

Not everyone believes the EU will be able to regulate AI so strictly. In an interview, former Google CEO Eric Schmidt, who now chairs the US National Security Commission on Artificial Intelligence (NSCAI), said that Europe's strategy on AI governance won't succeed because the region is "simply not big enough on the platform side to compete with China alone."

The European Center for Not-for-Profit Law, which is one of the contributors to the European Commission's White Paper on AI, believes "the EU's approach to binary-defining high versus low risk is sloppy at best and dangerous at worst, as it lacks context and nuances needed for the complex AI ecosystem already existing today."