FidelityFX Super Resolution 2.0 is AMD's second attempt at offering a broad game upscaling technology to compete with Nvidia's DLSS. In this article we'll be using Deathloop, a game that supports DLSS, FSR 1.0 and FSR 2.0, to benchmark and compare several GPUs from current and previous generations, including the GTX 1070 Ti, GTX 1650 Super, RTX 2060 and RTX 3080, and in the Radeon camp, the RX 570, Vega 64, RX 5700 XT and RX 6800 XT.

Since the release of DLSS 2.0 over two years ago, it has steadily become a key selling point for Nvidia's RTX GPUs. Lots of gamers have been buying GeForce GPUs specifically for this feature, and AMD has been working on something to counter that for its Radeon customers.

AMD's first attempt, now called FSR 1.0, was released about a year ago. While we found that FSR 1.0 was effective in some situations, particularly at 4K using the Ultra Quality mode, it ultimately wasn't as good as DLSS overall. The image quality at 1080p was unimpressive and the Performance mode looked worse than DLSS.

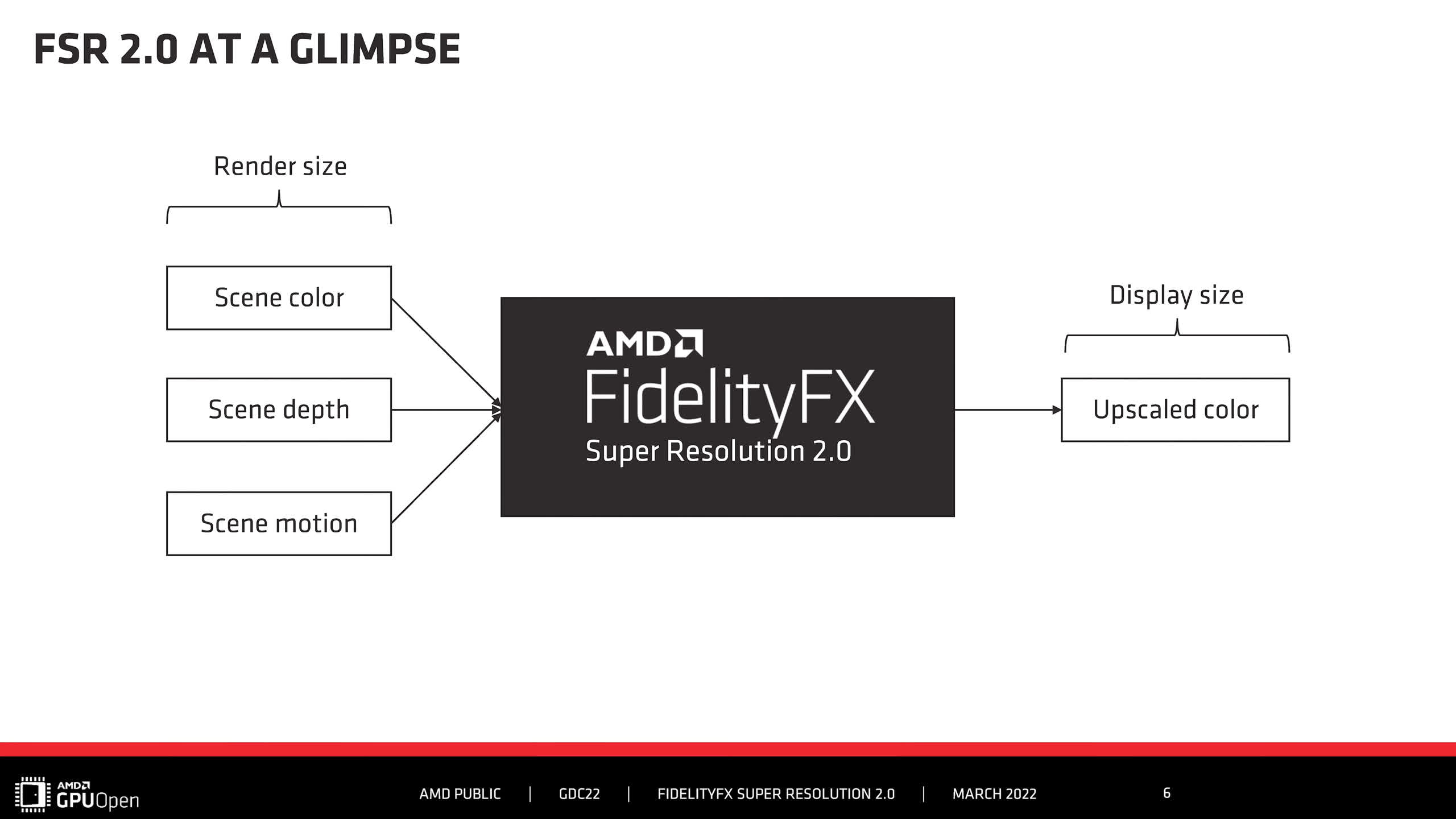

FSR 2.0 is a significant upgrade to the upscaling technology, moving from a spatial technique to temporal. If you want to learn more about how FSR 2.0 works, we covered a lot of information when it was first announced so it's worth revisiting that.

The shift to temporal scaling allows FSR 2.0 to combine current frame information with data from previous frames and motion vectors to reconstruct the final image, utilizing a lot more data than is possible with spatial scaling. By having access to all this data, it's possible for FSR 2.0 to produce "better than native" results, like Nvidia also claims for DLSS.
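To make the idea of combining the current frame with previous frames and motion vectors a bit more concrete, here's a minimal sketch of generic temporal accumulation on a one-dimensional "image". To be clear, this is not AMD's algorithm: the function names, the integer motion vectors and the `alpha` blend weight are all assumptions made for illustration.

```python
def reproject(history, motion):
    """For each pixel, fetch the history value from where that pixel was last frame."""
    out = []
    for x, mv in enumerate(motion):
        src = x - mv  # position of this pixel in the previous frame
        # No usable history if the source position falls off-screen.
        out.append(history[src] if 0 <= src < len(history) else None)
    return out


def accumulate(current, history, motion, alpha=0.1):
    """Blend the current frame with motion-compensated history (hypothetical weights)."""
    result = []
    for cur, hist in zip(current, reproject(history, motion)):
        if hist is None:
            result.append(cur)  # fall back to the current sample only
        else:
            result.append(alpha * cur + (1 - alpha) * hist)
    return result


if __name__ == "__main__":
    # Everything moved one pixel to the right since the previous frame.
    print(accumulate([4.0, 4.0, 4.0], [1.0, 2.0, 3.0], [1, 1, 1], alpha=0.5))
```

A spatial upscaler only ever sees `current`. A temporal one also gets `history`, which is where the extra data comes from, and deciding when that history can be trusted is the hard part each vendor solves differently.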

FSR 2.0 vs DLSS 2.0: How they work

Where FSR 2.0 and DLSS differ is in how they approach the puzzle of reconstruction and choosing which bits of data to use. DLSS takes a closed-source, AI-based approach, which requires specific instructions exclusive to Nvidia's Tensor core hardware.

FSR 2.0 doesn't use AI at all, instead using a hand-tuned algorithm that's open-source and supports a wide range of hardware, just like FSR 1.0. Tensor cores or other specialized AI processing blocks are not required. We were able to get FSR 2.0 working on 5-year-old hardware without any issue, more on that later.

The first opportunity to evaluate FSR 2.0 came with Deathloop, a game which also supports FSR 1.0 and DLSS, so we could do a nice comparison between today's main upscaling technologies from the two GPU vendors. Check out the video below for a lot of detail on how visual quality differs between FSR 2.0 and DLSS in Deathloop.

Now I wouldn't call this an FSR 2.0 "review" just because we're looking at a sample of one game. We'll need more than that to make a definitive call on FSR 2.0 vs DLSS, but at the very least it's a good preview of what FSR 2.0 can do.

Deathloop is also a good game to test with because DLSS is very effective in this title. It's one of the best examples of DLSS producing a "better than native" image, while FSR 1.0 looks less than amazing.

FSR 2.0 Settings

FSR 2.0, like DLSS, has three quality modes available in Deathloop: Quality, Balanced and Performance, which correspond to per-axis scale factors of 1.5x, 1.7x and 2.0x respectively, roughly in line with what Nvidia offers. At 4K, that means FSR 2.0 Quality upscales from 1440p to 4K, while the Performance mode gives us a 1080p to 4K upscale.
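If you want to work out the render resolution for other output resolutions, the arithmetic is simple enough to sketch. The scale factors below are the ones listed above; the function names and the rounding are our own assumptions, and the game may round slightly differently.

```python
# Per-axis scale factors for the three FSR 2.0 modes discussed above.
SCALE_FACTORS = {"Quality": 1.5, "Balanced": 1.7, "Performance": 2.0}


def render_resolution(output_width, output_height, mode):
    """Return the (width, height) the game renders at before upscaling."""
    factor = SCALE_FACTORS[mode]
    # Rounding to the nearest pixel is an assumption made for this sketch.
    return round(output_width / factor), round(output_height / factor)


def pixel_fraction(mode):
    """Fraction of the output pixel count that is actually rendered."""
    # The factor applies to each axis, so pixel count drops by factor squared.
    return 1.0 / SCALE_FACTORS[mode] ** 2


if __name__ == "__main__":
    for mode in SCALE_FACTORS:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of output pixels)")
```

Because the factor applies to both axes, Quality mode renders well under half of the output pixels, and Performance mode renders just a quarter of them.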

FSR 2.0 is a widely supported temporal upscaling solution, meaning it can work across a range of hardware, and that's exactly what we'll be testing today. We've tested GPUs from four generations of AMD and Nvidia releases to see how well FSR 2.0 scales on different architectures.

For today's testing, we're using a Ryzen 9 5950X test system equipped with 16GB of low latency DDR4-3200 memory, running the latest publicly available drivers for AMD and Nvidia. We'll be comparing FSR 2.0 to the next best available upscaling solution for the GPU at hand, so for RTX 20 series cards and newer we'll be comparing to DLSS, and everything else to FSR 1.0.

To prevent CPU bottlenecks, we've run Deathloop at the best quality playable settings for each GPU, which includes ray tracing enabled on higher end cards. Deathloop is a demanding game on GPU memory, and some of the cards we've tested have only 4 GB or 6 GB of VRAM, which causes issues on the highest settings and basically bottlenecks the card - meaning we don't see the real benefits of FSR 2.0 or other upscaling algorithms. For those GPUs, we've reduced the settings to an appropriate level that doesn't cause a bottleneck.

Benchmarks

Radeon RX 570: FSR 1 vs. FSR 2 vs. Native

We'll start here with the oldest and slowest card in the line-up, AMD's trusty Radeon RX 570 4GB, which was released way back in 2017. This card struggles to run Deathloop at 1440p using even Medium settings, but with upscaling we can get a performance uplift at a minimal cost to visuals.

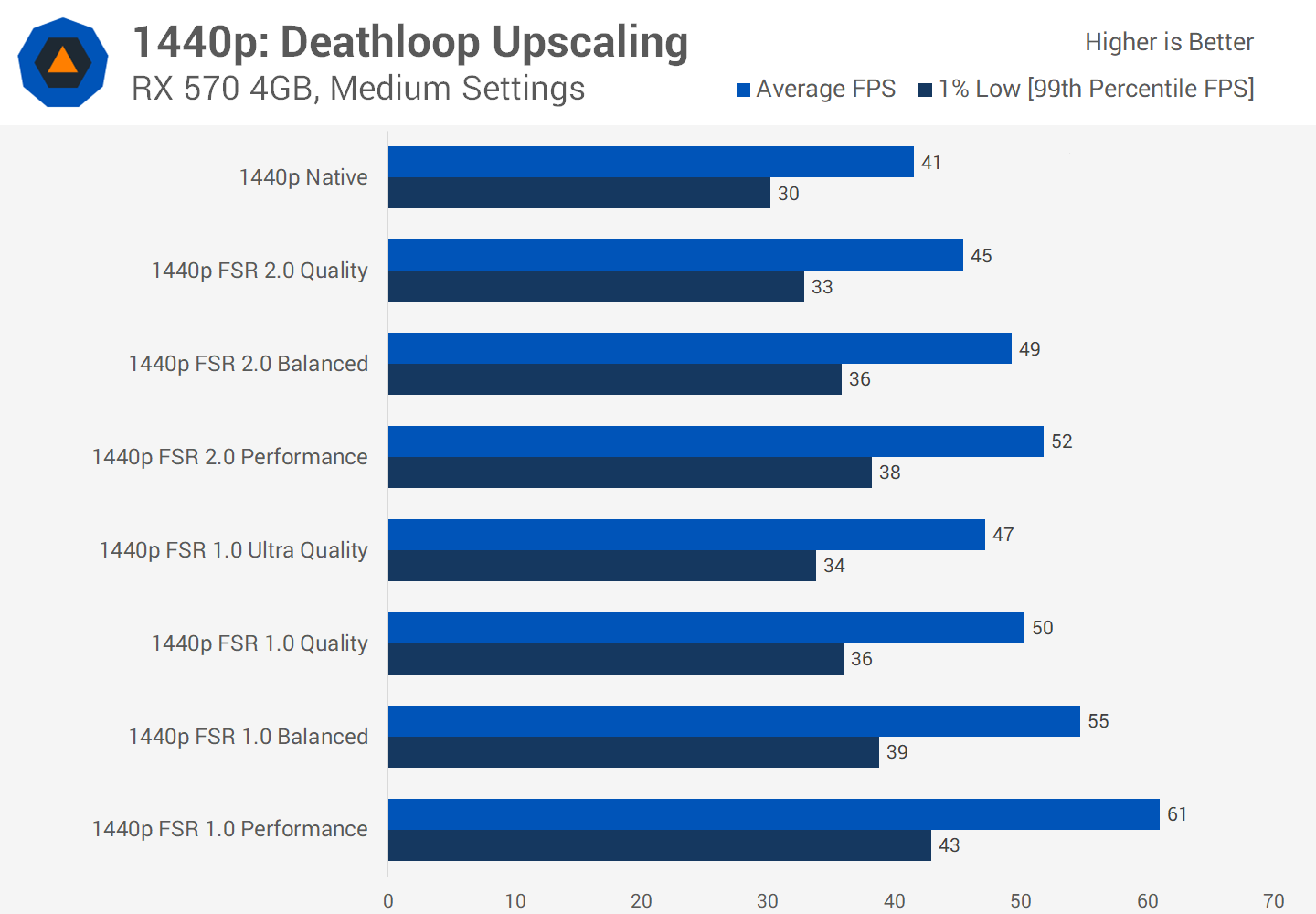

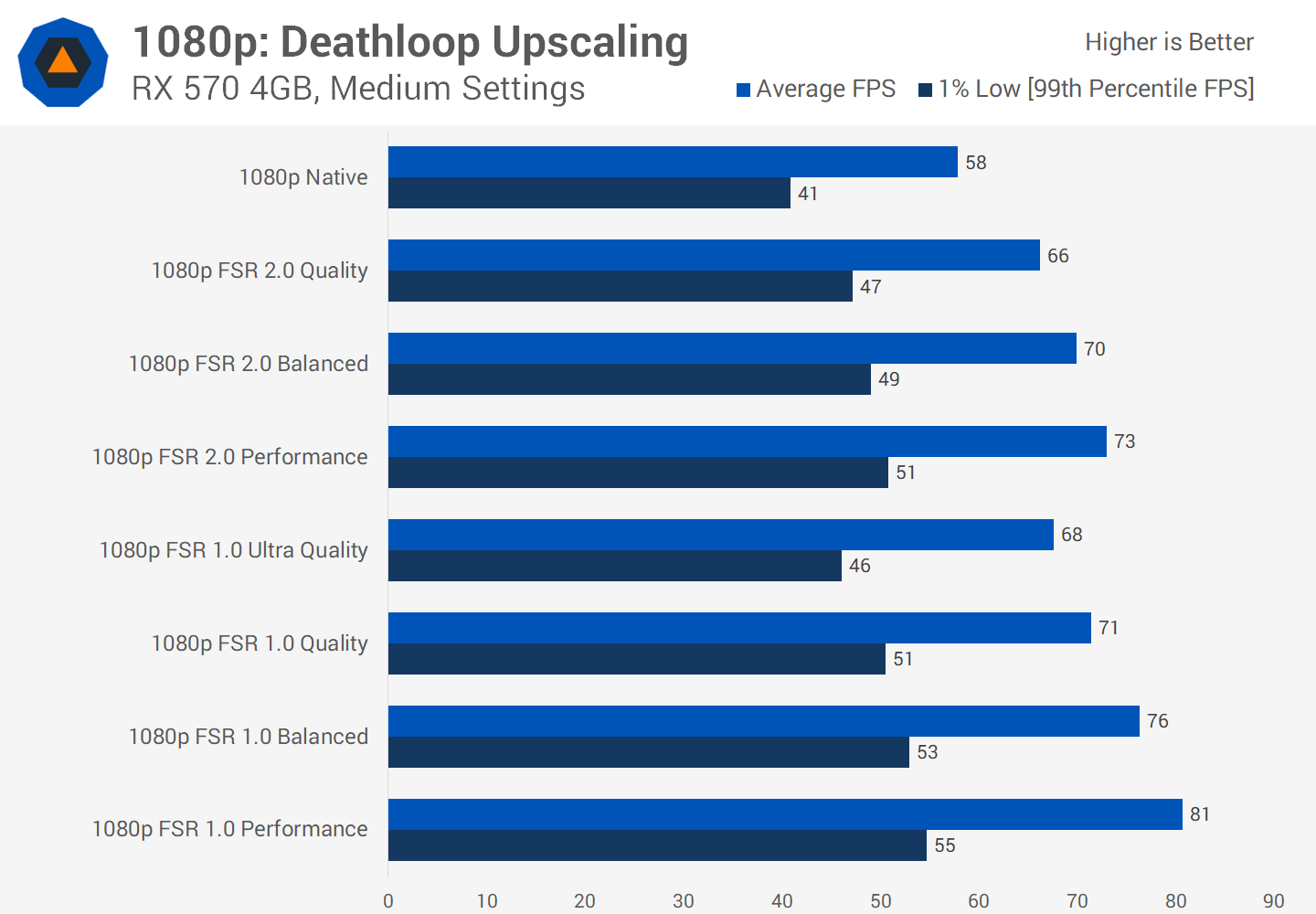

The RX 570 doesn't benefit from FSR 2.0 to nearly as significant a degree as the newer cards covered further down. We do get a performance uplift, but it's just a 10 percent gain from the FSR 2.0 Quality mode, and 27 percent from the Performance mode.

It's still worth using, but these aren't the instant 40%+ gains we saw from the latest architectures. You can also see that FSR 1.0 is faster here, which wasn't the case with RDNA2. With the 6700 XT, FSR 2.0 Quality mode typically ran better than FSR 1.0 Ultra Quality, but with the RX 570 it's the less taxing FSR 1.0 that runs a few frames better. However, I'd still recommend using FSR 2.0 here as the visual quality is significantly superior at 1440p.

At 1080p, FSR 2.0 was more capable of a performance uplift. The Quality mode was giving a 14 percent boost over native rendering, and the Performance mode (which we don't really recommend at this resolution) was 26 percent faster. This does help the RX 570 achieve an even more playable frame rate, but it's clear the gains on this old, mainstream GPU are limited.

GeForce GTX 1650 Super: FSR 1 vs. FSR 2 vs. Native

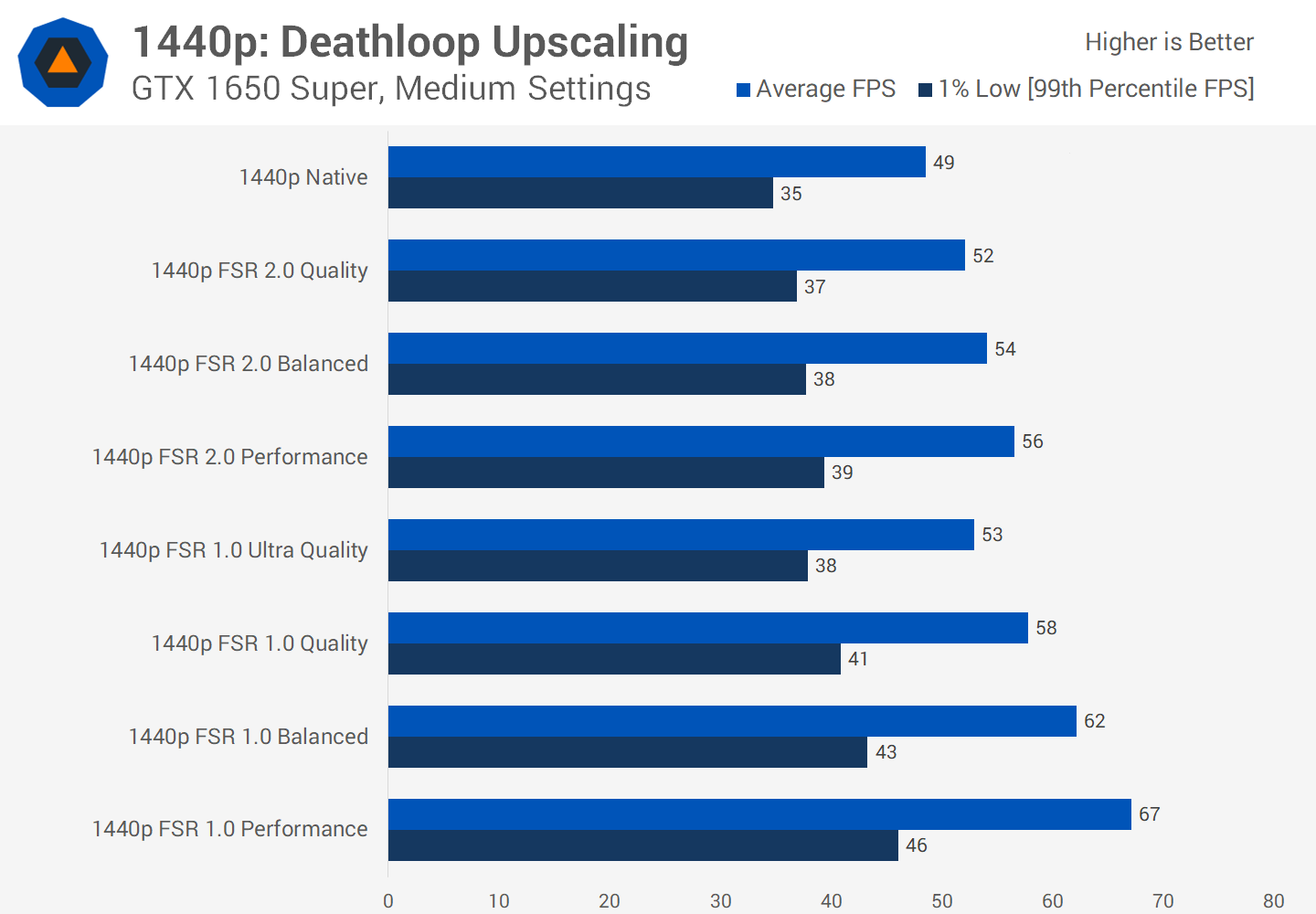

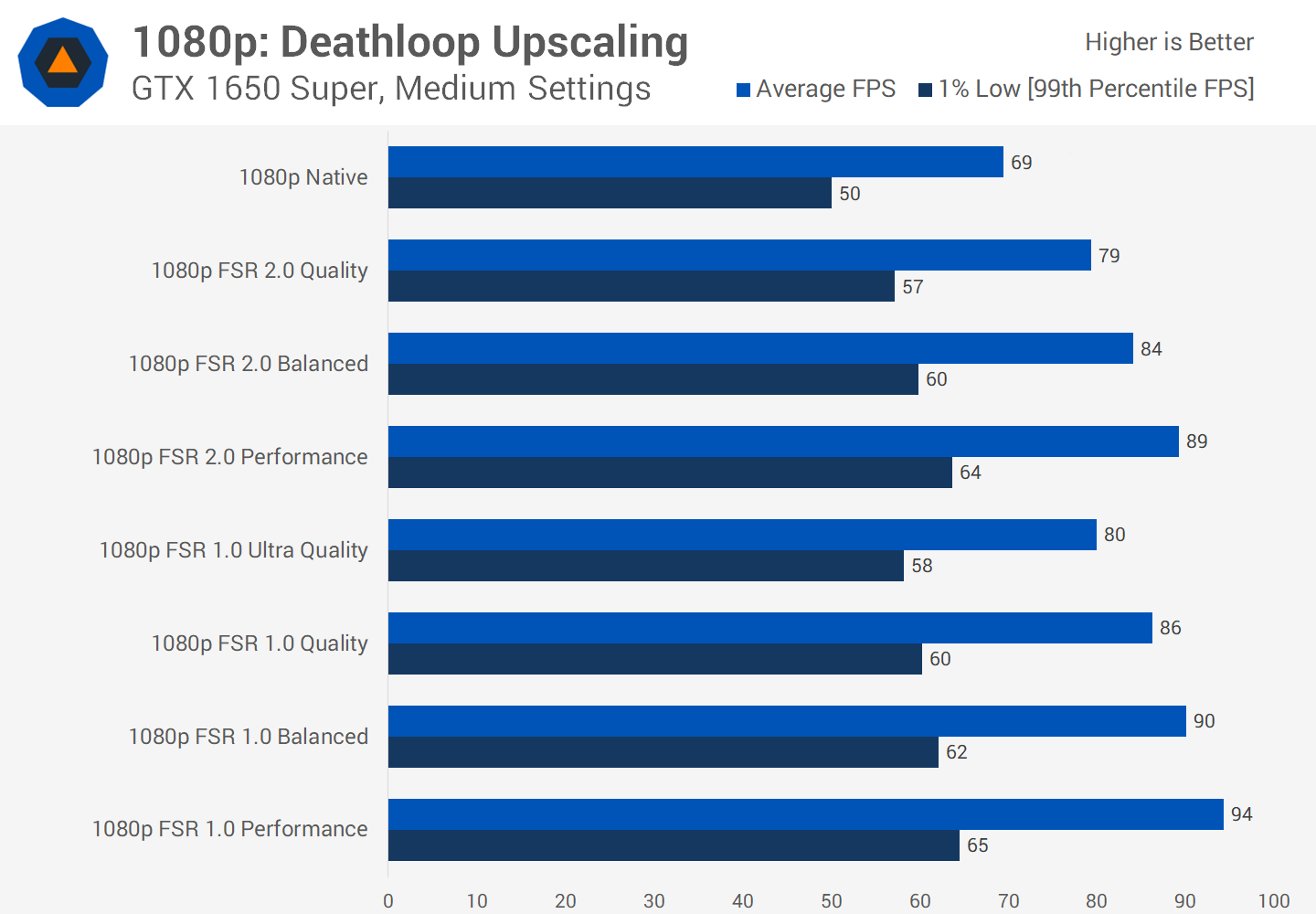

The GeForce GTX 1650 Super is also an entry-level GPU. It's a fair bit newer, but it doesn't support DLSS as it lacks Tensor cores. Like the RX 570, the GTX 1650 Super doesn't benefit hugely at 1440p with either FSR 2.0 or 1.0 in this title, and it seems that even Medium settings are a bit of a stretch here.

The FSR 2.0 Quality mode was only 6 percent faster than native, and the Performance mode barely improved upon that. In comparison, FSR 1.0 was able to deliver much better frame rates, with the Quality mode there delivering higher FPS than FSR 2.0 Performance.

However the gains at 1080p were more acceptable. The FSR 2.0 Quality mode was 15 percent faster than native, similar to what was seen on the RX 570, and the Performance mode increased that figure to 29 percent. Not earth shattering results - and FSR 1.0 is definitely faster on this entry-level GPU - but usable. I'll be interested to see how budget GPUs like this fare in other FSR 2.0 games to see if it's more of a game thing or more of an FSR 2.0 algorithm thing, but certainly it seems there just aren't that many GPU resources to go around to run something like FSR 2.0.

Radeon RX Vega 64: FSR 1 vs. FSR 2 vs. Native

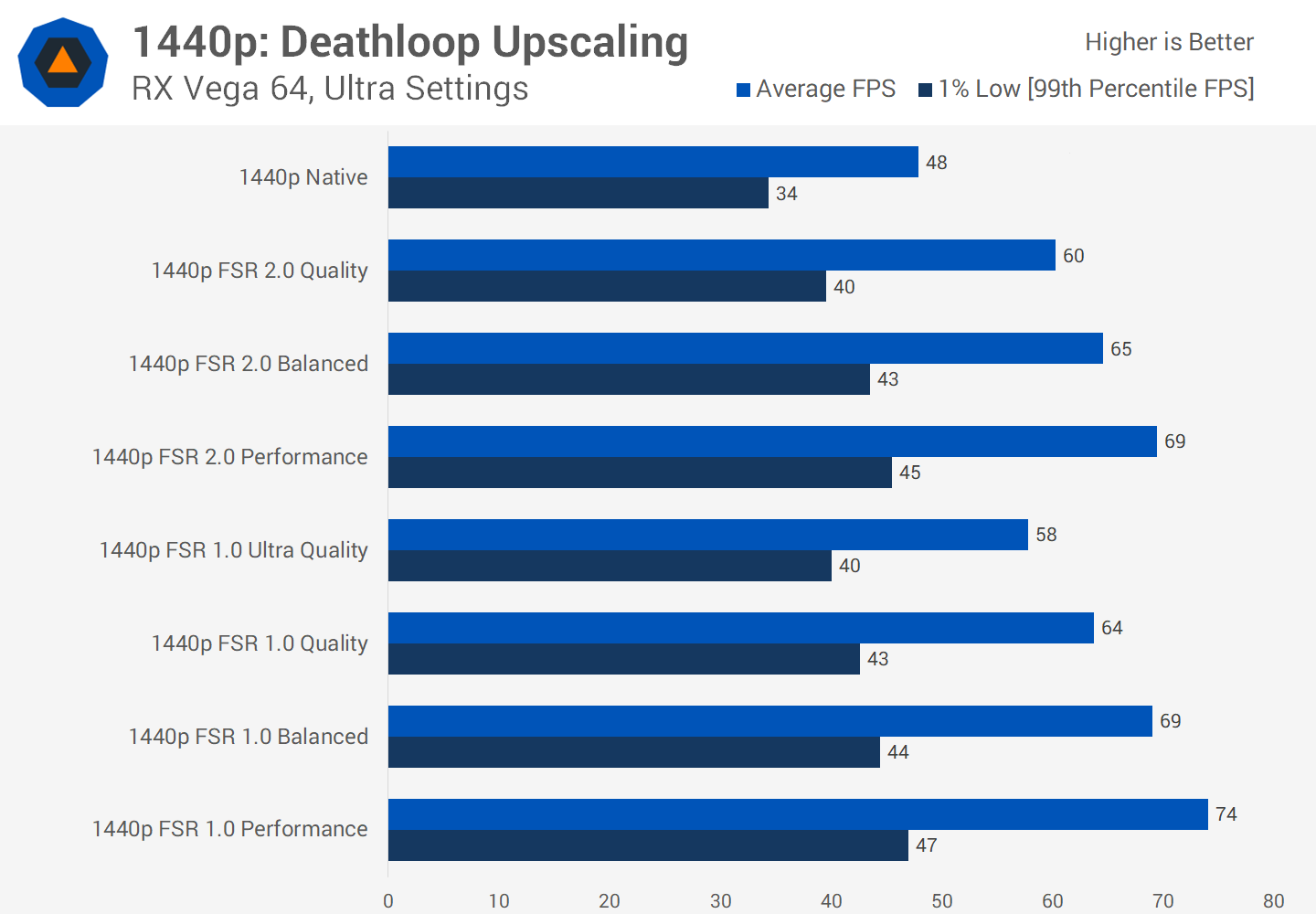

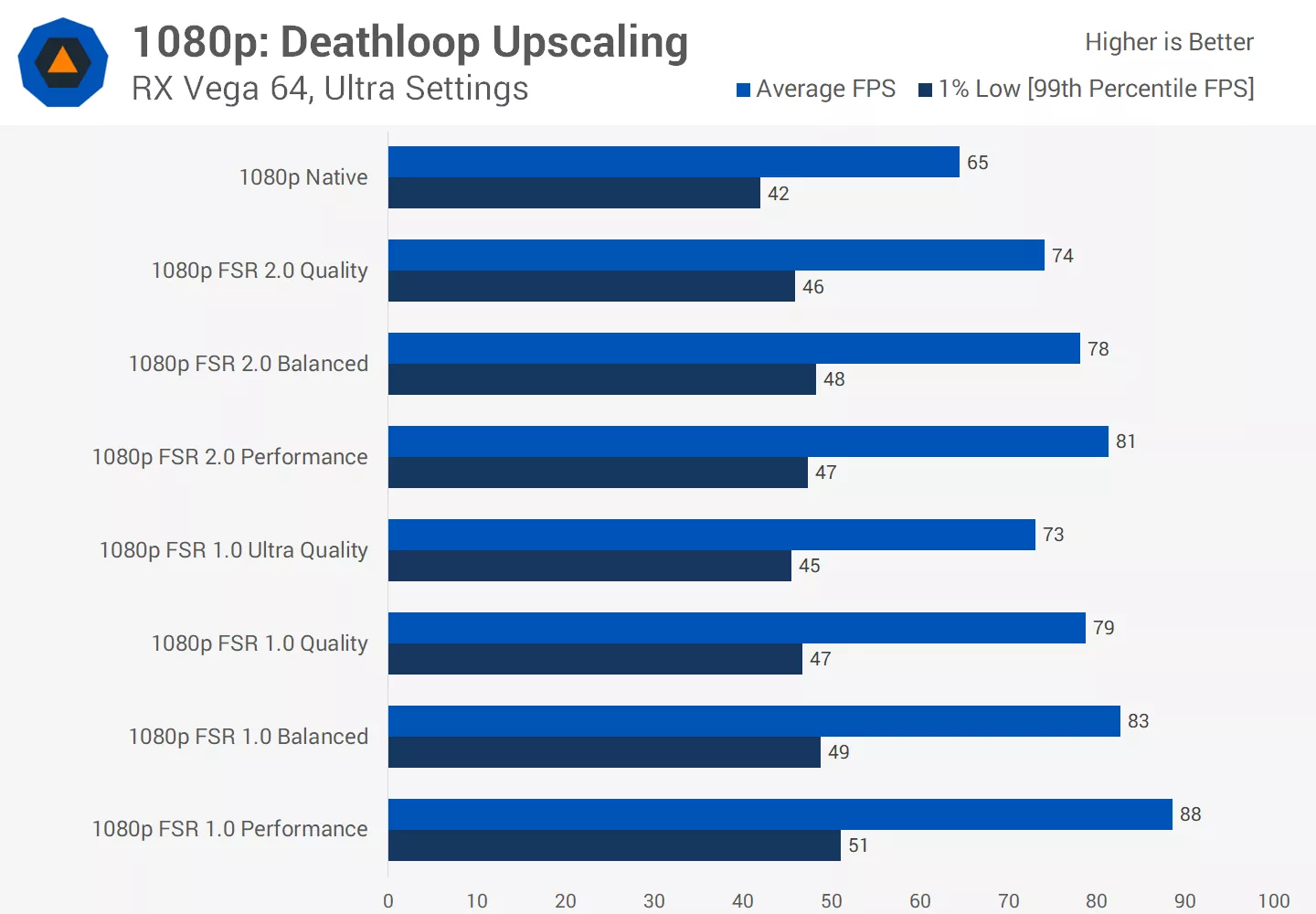

Let's go back in time to look at FSR 2.0 running on the Radeon RX Vega 64, another 5 year old product. Using Ultra settings at 1440p, FSR 2.0 did provide a modest performance uplift, 25 percent for the Quality mode compared to native rendering, which was enough to take the game up to a 60 FPS average.

Unlike with the entry-level cards we were just looking at, we're back into a situation where the FSR 2.0 Quality mode performs better than FSR 1.0 Ultra Quality, and for that reason FSR 2.0 is definitely the preferred option due to its much better image quality.

At 1080p, I actually saw less of a gain than at 1440p for FSR 2.0, as it seems like pure shading isn't necessarily the main limiting factor for performance on Vega 64. If shading performance isn't the main bottleneck, then lowering the render resolution may only bring limited gains, which seems to be happening here. Other aspects of performance, like memory or geometry, could be holding us back from further gains. But either way we still do get a performance uplift.
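The reasoning above can be illustrated with a simple back-of-the-envelope model: if only part of the frame time scales with the number of pixels rendered, the rest acts as a floor on how fast the frame can get. This is a sketch with made-up fractions, not measurements from Vega 64, and it ignores the cost of the upscaling pass itself.

```python
def uplift_with_fixed_work_pct(scaling_fraction, pixel_fraction):
    """Percent FPS gain when only part of the frame time scales with pixel count.

    scaling_fraction: share of native frame time that shrinks with resolution
                      (a hypothetical value; we did not measure this).
    pixel_fraction:   rendered pixels divided by output pixels.
    """
    # Native frame time is normalized to 1.0.
    new_time = (1 - scaling_fraction) + scaling_fraction * pixel_fraction
    return (1 / new_time - 1) * 100


if __name__ == "__main__":
    quality = 1 / 1.5**2  # Quality mode renders about 44% of the output pixels
    for share in (0.9, 0.6, 0.3):
        gain = uplift_with_fixed_work_pct(share, quality)
        print(f"{share:.0%} resolution-bound: {gain:.0f}% faster")
```

In this model the Quality mode cuts the pixel count by the same proportion at 1080p and 1440p, so a smaller gain at 1080p would point to a smaller share of the frame being resolution-bound at that resolution.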

GeForce GTX 1070 Ti: FSR 1 vs. FSR 2 vs. Native

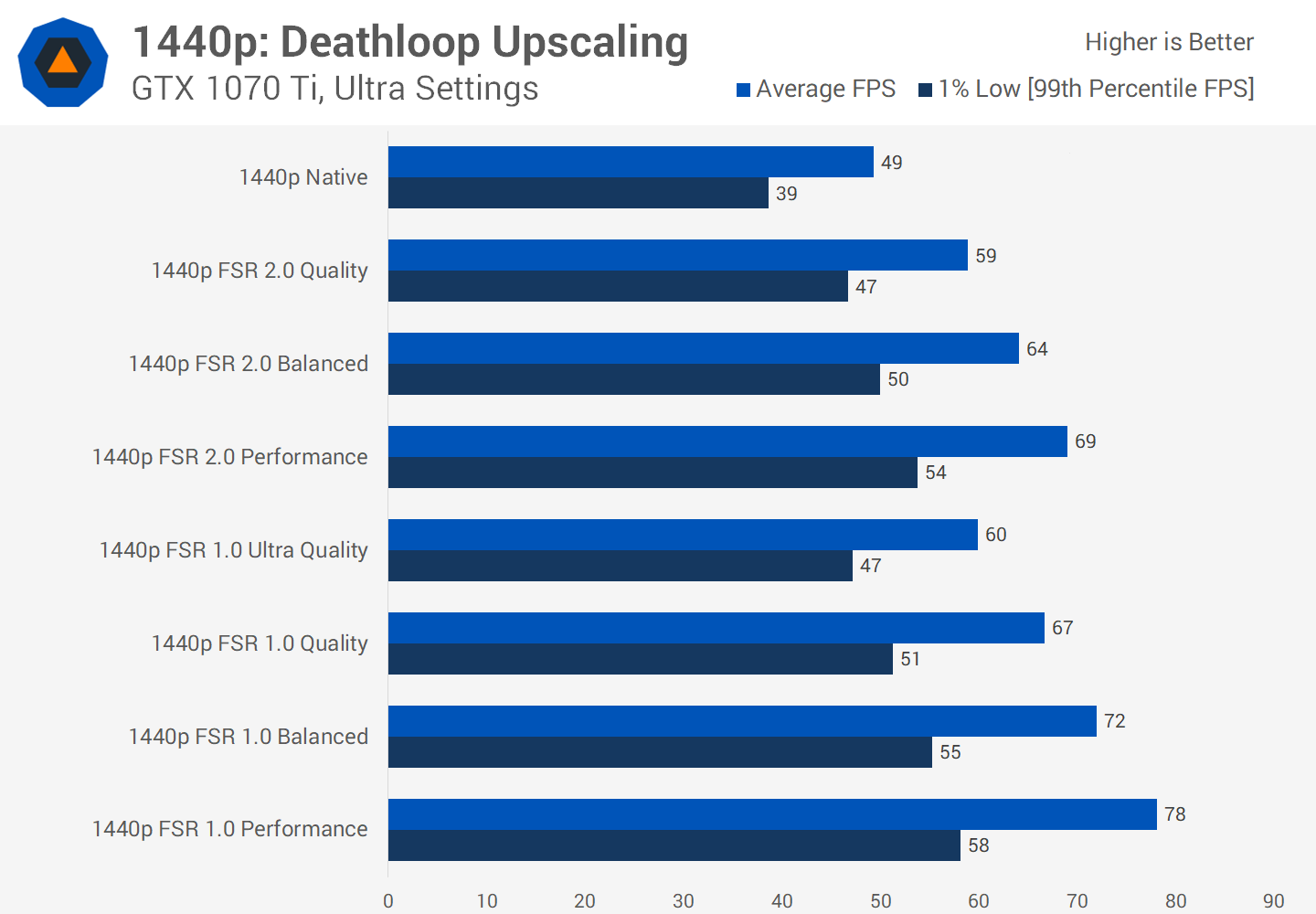

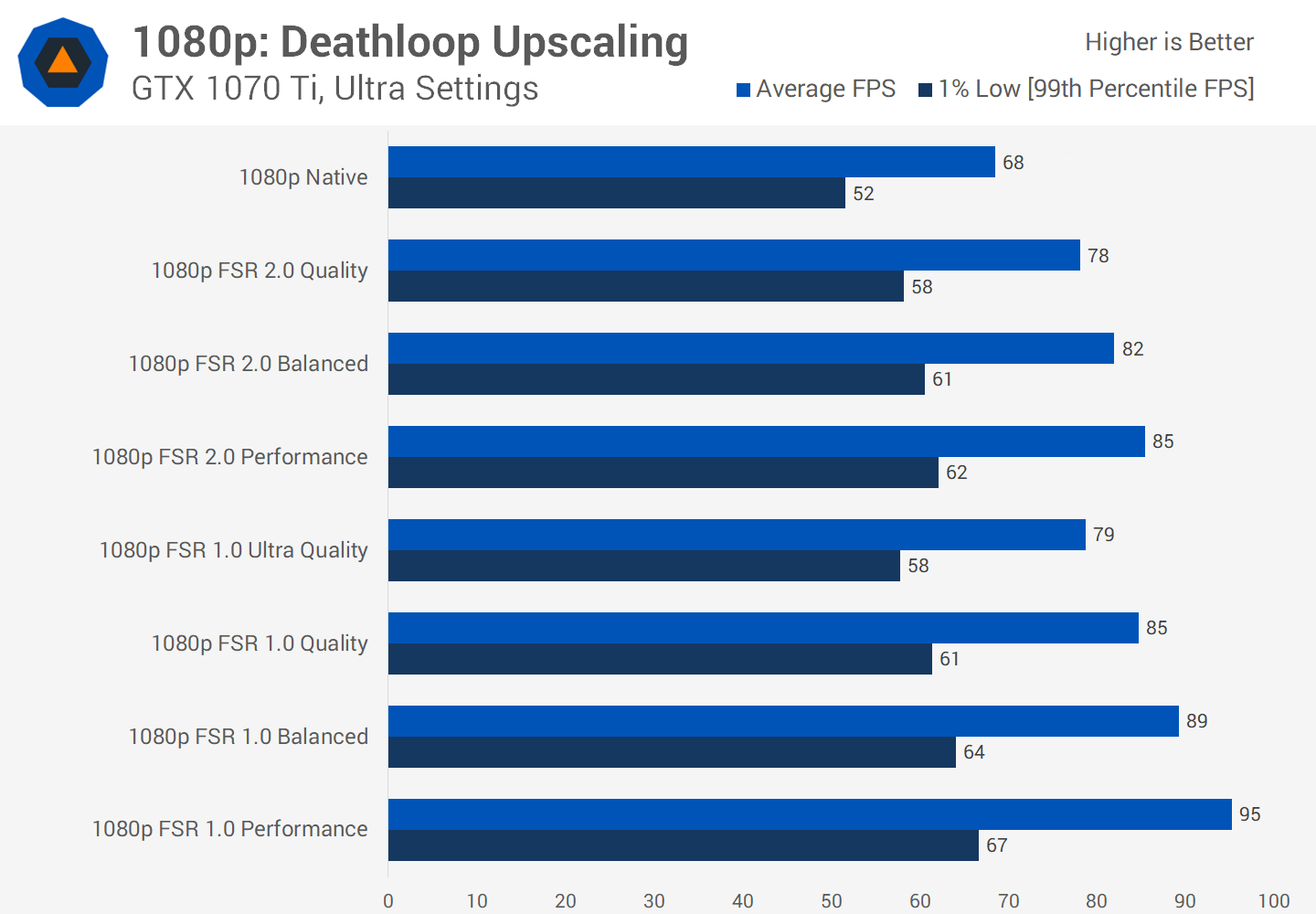

On Nvidia's Pascal architecture we see a similar situation to Vega 64. Using Ultra settings at 1440p, the GTX 1070 Ti was able to achieve 20 percent better performance going from native rendering to FSR 2.0 Quality, and 41 percent using FSR 2.0 Performance. FSR 1.0 performs better on this architecture, especially at lower render resolutions, but I'd still prefer to use FSR 2.0 due to its higher image quality.

At 1080p, once again more modest gains of 15 percent for the Quality mode, similar to Vega. It'll be interesting to see how this holds up in other titles, but FSR 1.0 wasn't exactly miles better for something like its Ultra Quality setting. So I'm still pleased that FSR 2.0 is usable here and a better option than AMD's older FSR version.

Radeon RX 5500 XT 8GB: FSR 1 vs. FSR 2 vs. Native

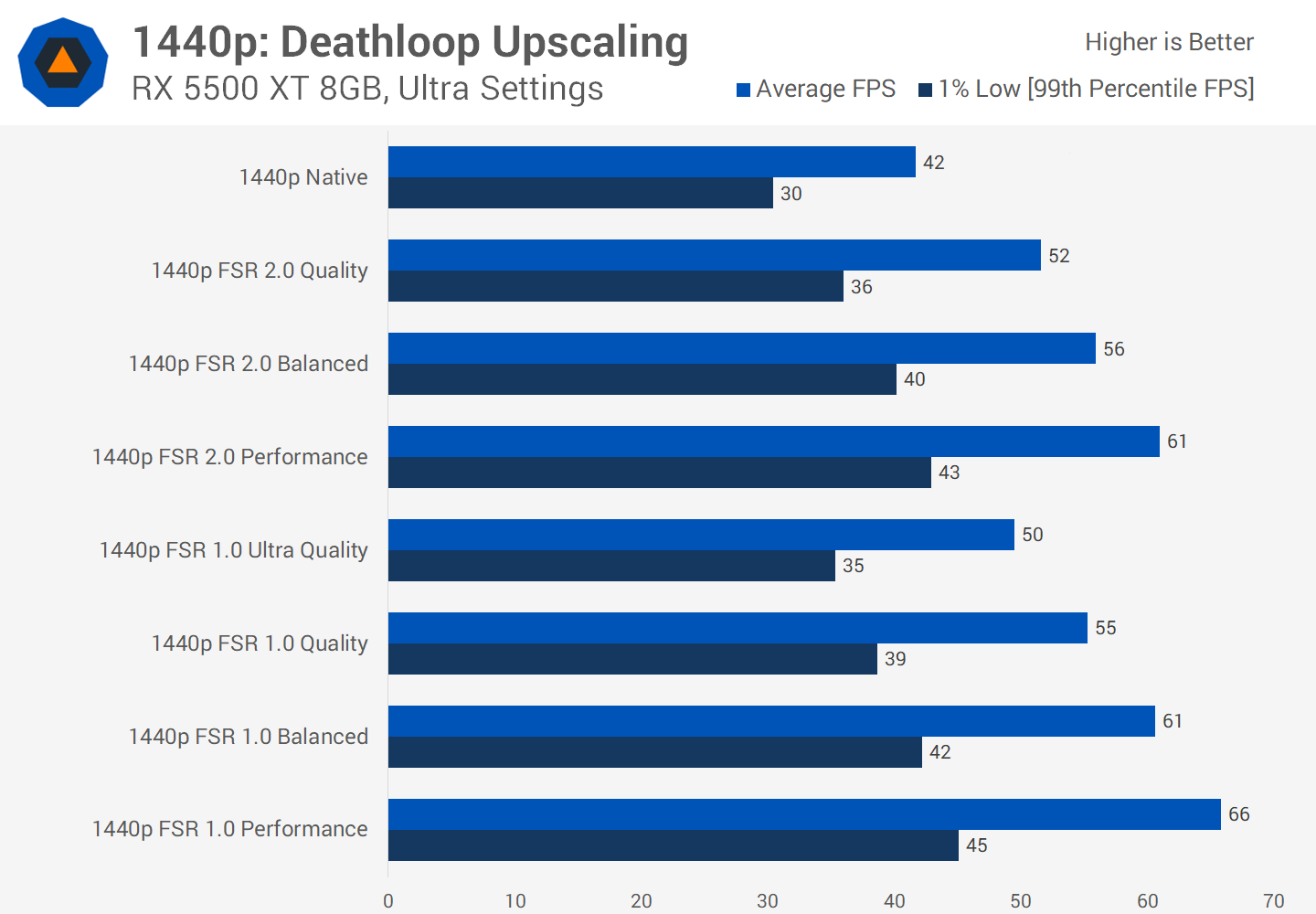

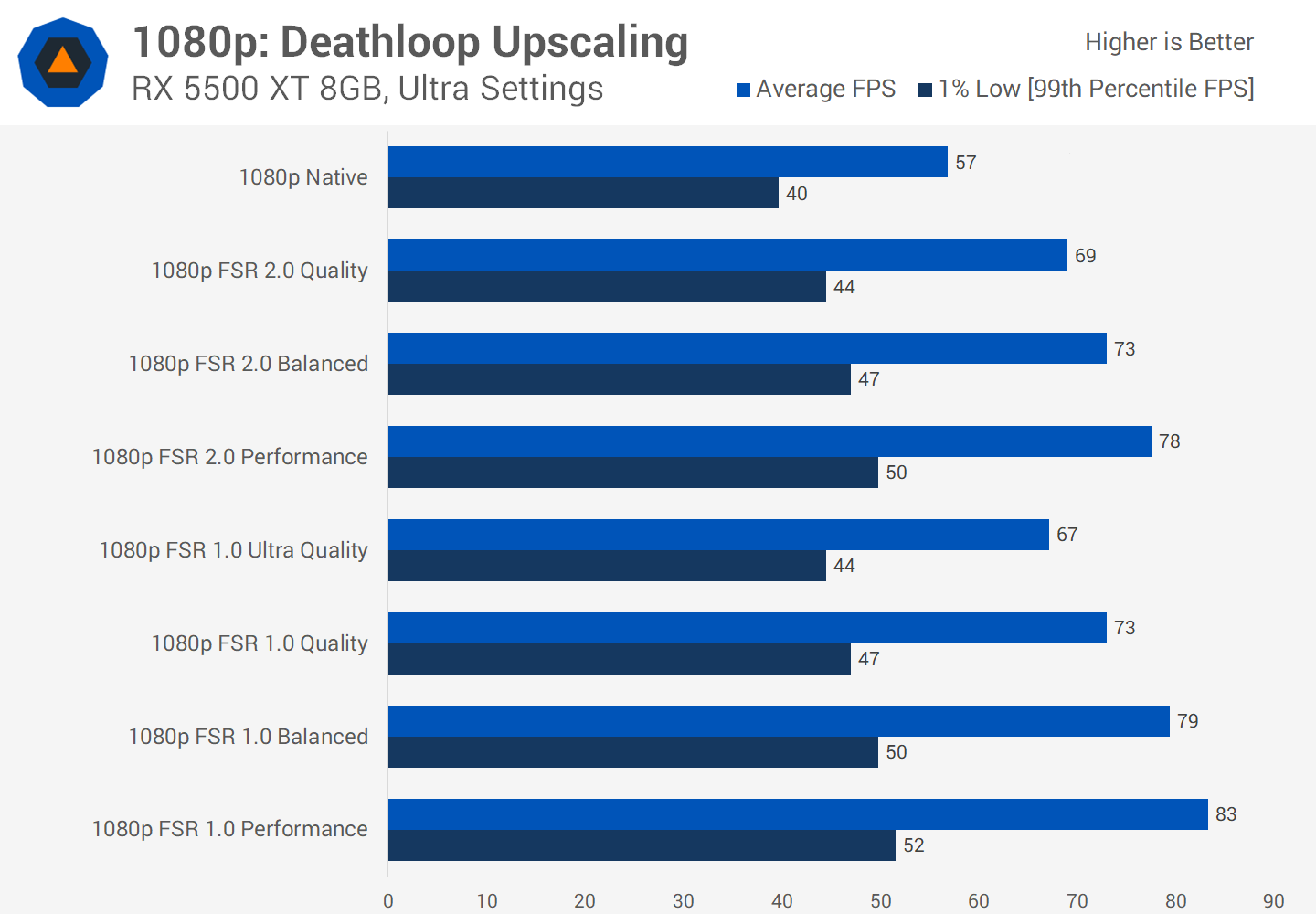

Let's take a look at a first-generation RDNA product, the RX 5500 XT 8GB. At 1440p, FSR 2.0 Quality mode was able to deliver 24 percent better performance than native rendering, a much larger uplift than with the RX 570 despite both cards running at approximately the same FPS natively under the conditions we tested. 45% better performance was also possible using the Performance mode, and overall this is preferable to FSR 1.0.

At 1080p I also saw respectable gains, 21 percent for FSR 2.0 Quality mode and 37 percent for FSR 2.0 Performance, again delivering results that are preferable to using FSR 1.0. Yes the FSR 1.0 Performance setting gives you a few extra FPS, but your eyes won't forgive you for using such a low quality setting.

Radeon RX 5700 XT: FSR 1 vs. FSR 2 vs. Native

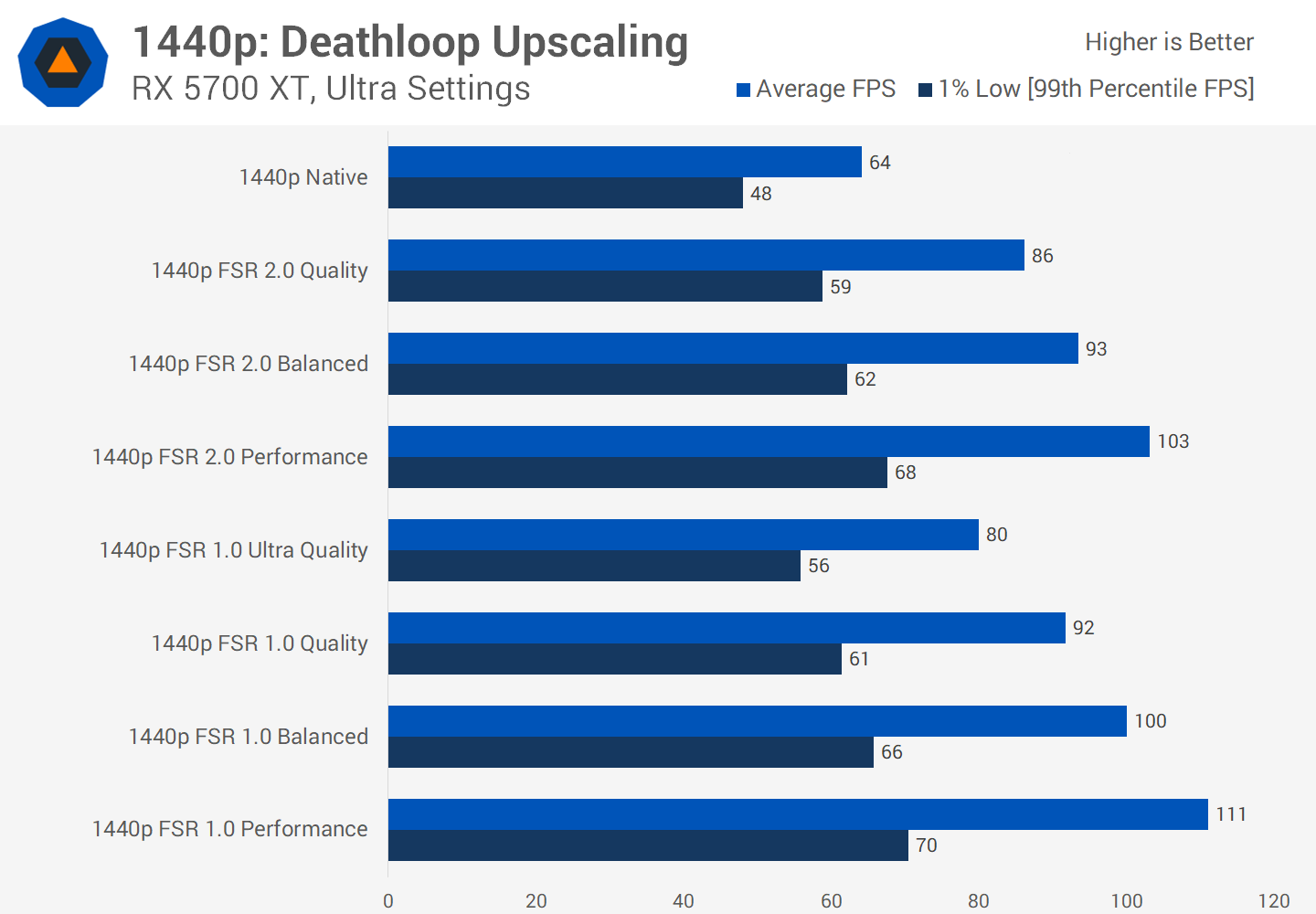

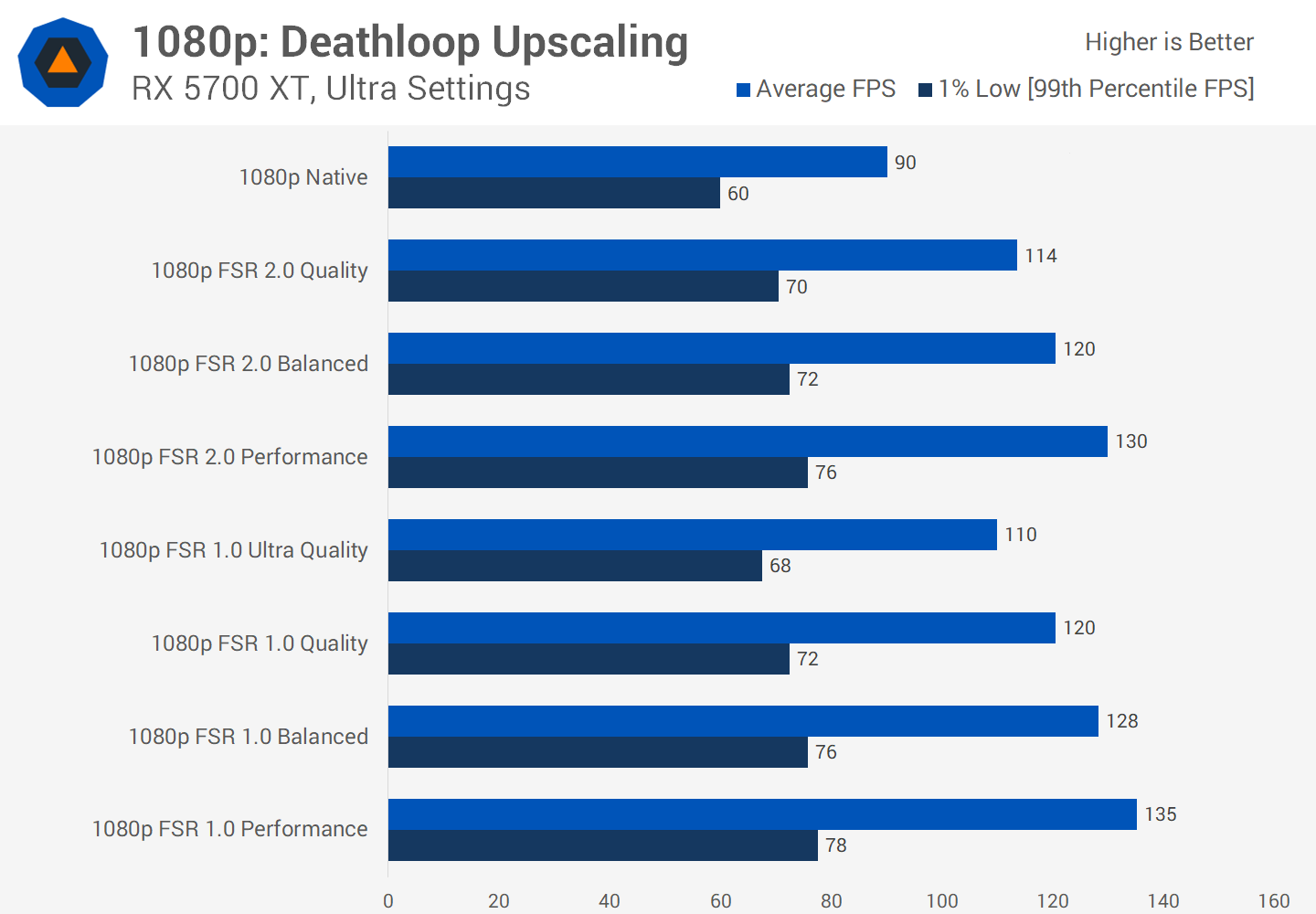

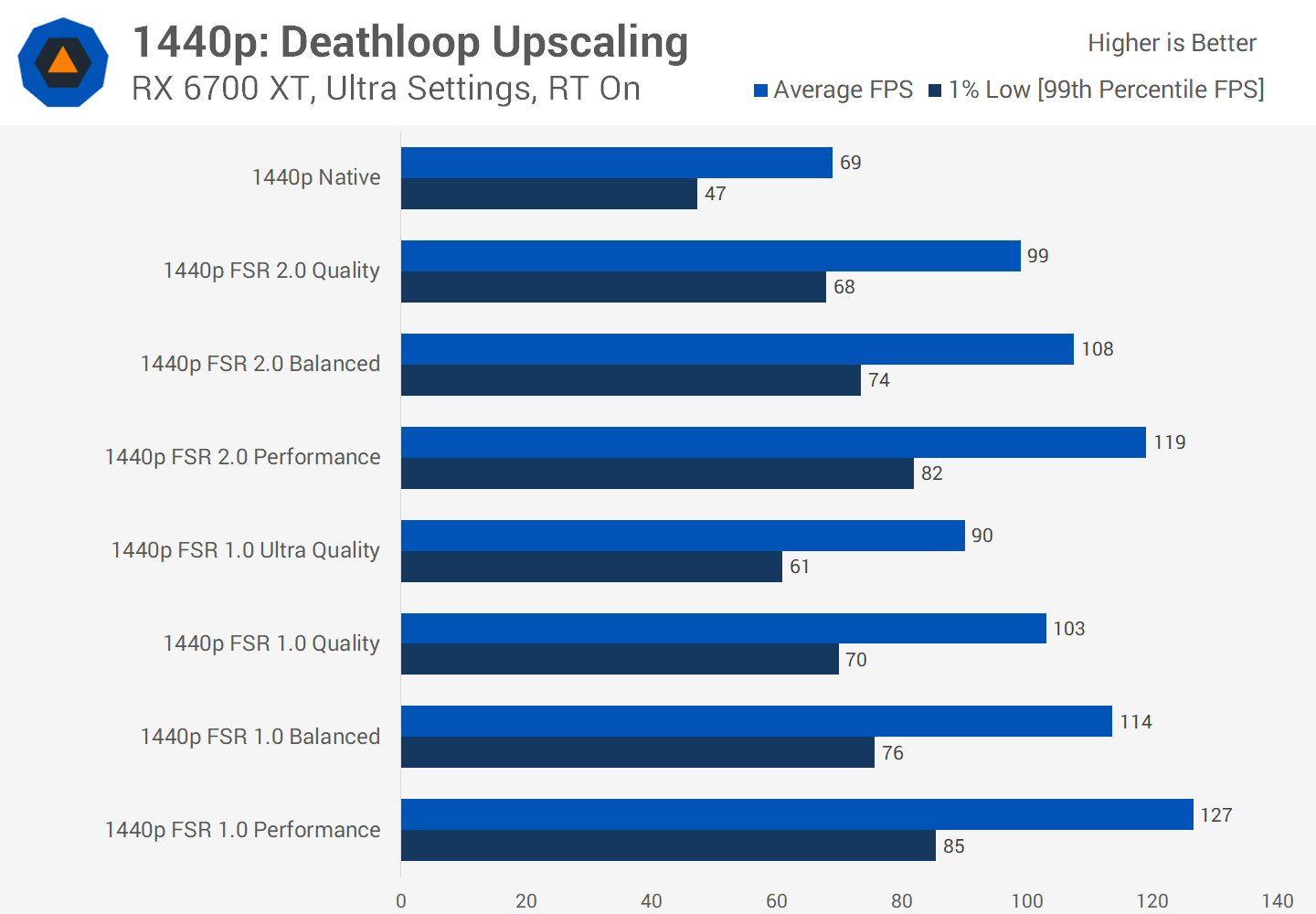

Under the same architecture family, let's now look at the much faster RX 5700 XT. With the same architecture and same memory capacity at hand, FSR 2.0 clearly does benefit from more GPU resources. At 1440p using FSR 2.0 Quality mode, we saw a 34 percent performance uplift, higher than the 24% we saw for the 5500 XT. A larger uplift was also possible for the Performance mode, 61 percent here compared to 45% for entry-level RDNA.

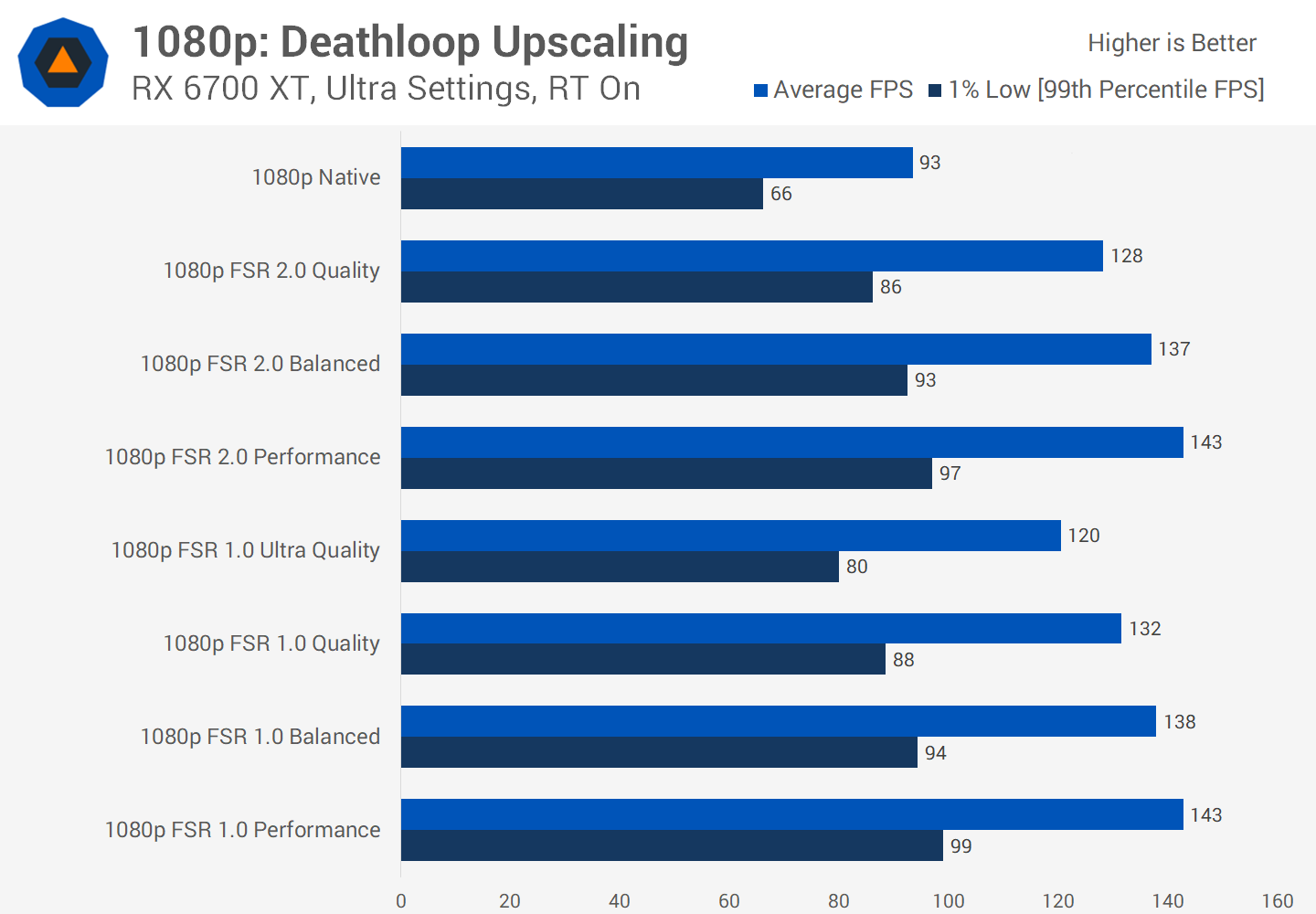

At 1080p we are able to get a 27 percent performance uplift compared to native rendering using FSR 2.0 Quality mode, and a 44 percent uplift using the Performance mode. With these more mid-range products it really doesn't make sense to use FSR 1.0, as its Ultra Quality mode runs slower than FSR 2.0 while, once again, delivering worse image quality.

GeForce RTX 2060: FSR 2 vs. DLSS vs. Native

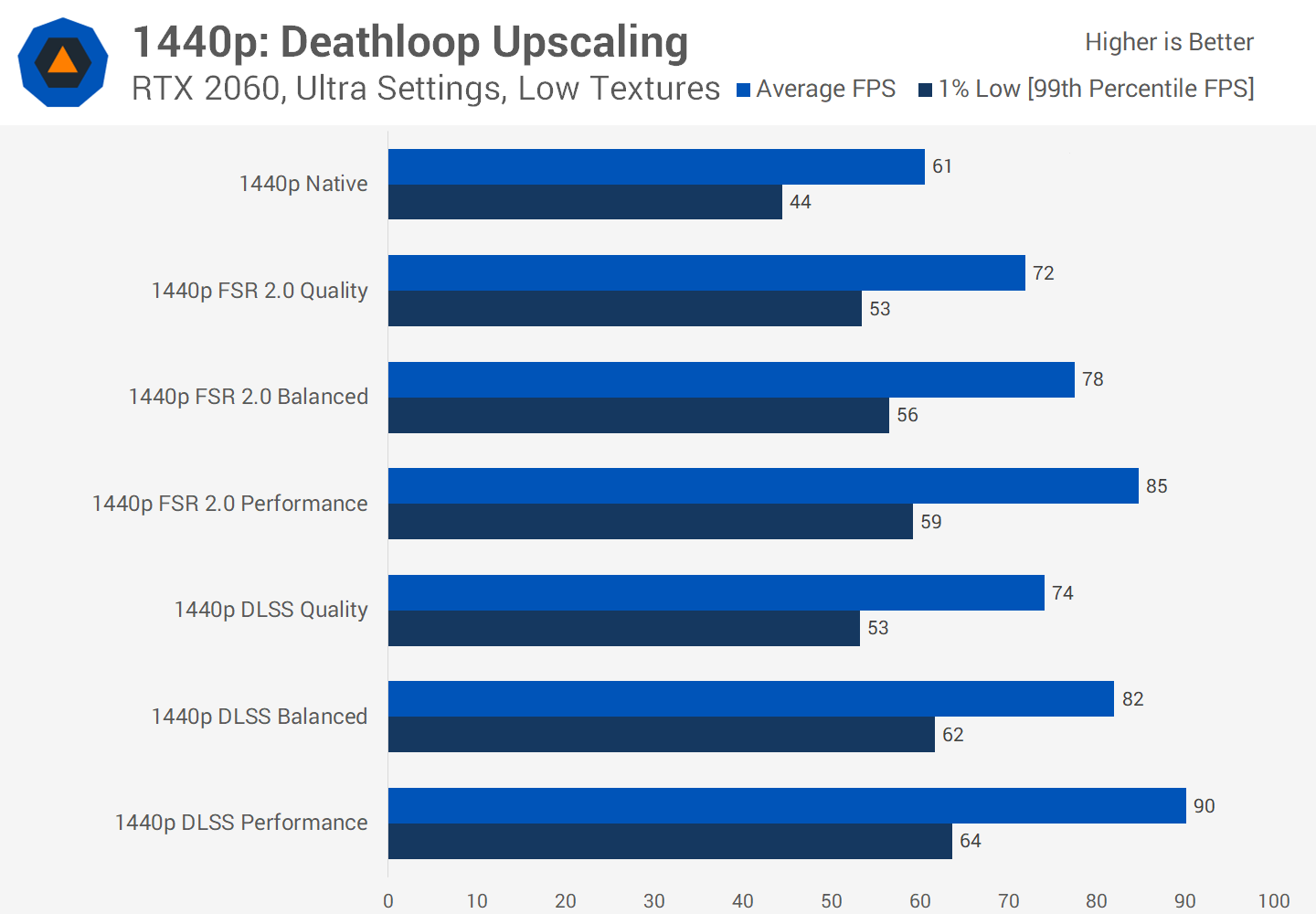

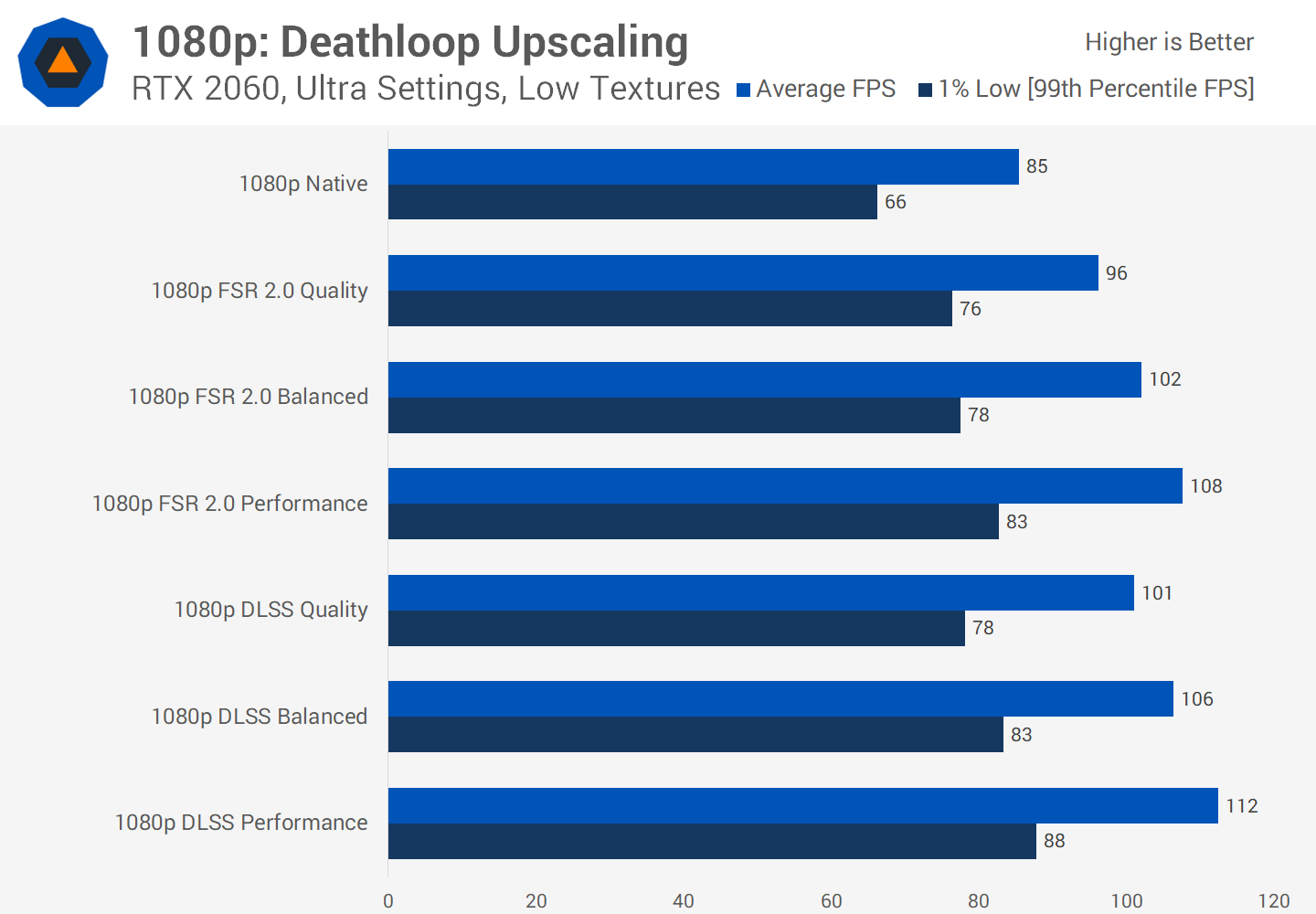

Now let's take a look at Nvidia's Tensor core equipped Turing architecture starting with the RTX 2060. With this GPU, FSR 2.0 performance is similar to what we see with the RTX 3060 Ti (below), in that DLSS is slightly faster than FSR 2.0 overall, but not significantly so.

FSR 2.0 Quality mode was capable of a fairly unimpressive 18 percent performance uplift, but DLSS Quality mode only improved that to a 21 percent gain, so it's much of a muchness. The best results for DLSS came from the Performance mode, which ended up 6 percent faster than the equivalent FSR 2.0 setting.

Then at 1080p, similar story. Only a 13 percent performance uplift for FSR 2.0 Quality mode compared to native, with DLSS Quality mode delivering a 19 percent uplift. At 1080p this does make DLSS the favorable option as it also tends to look better, but neither option is delivering a huge performance increase - though I'd certainly take it if I was struggling to hit a playable frame rate.

GeForce RTX 2080: FSR 2 vs. DLSS vs. Native

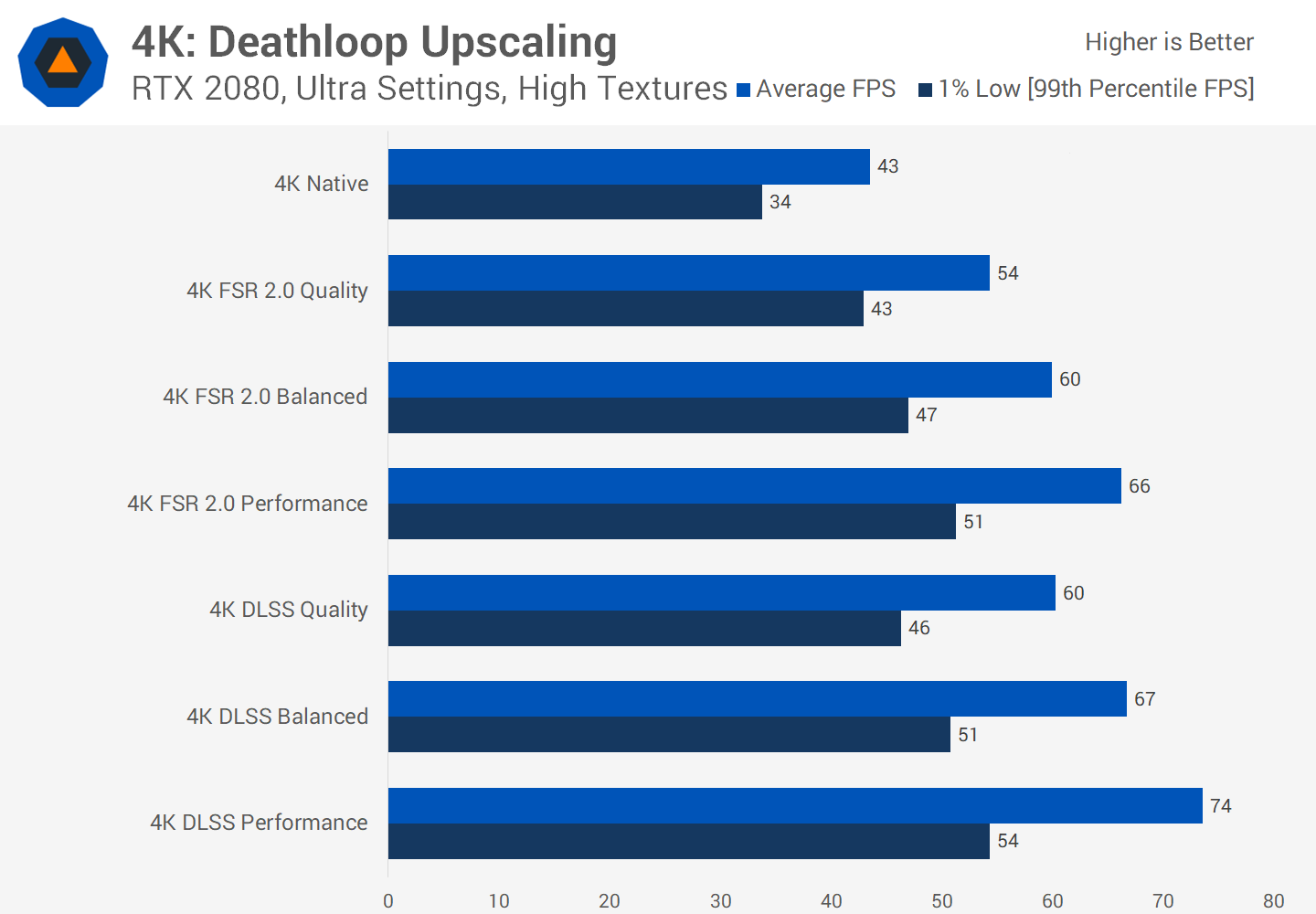

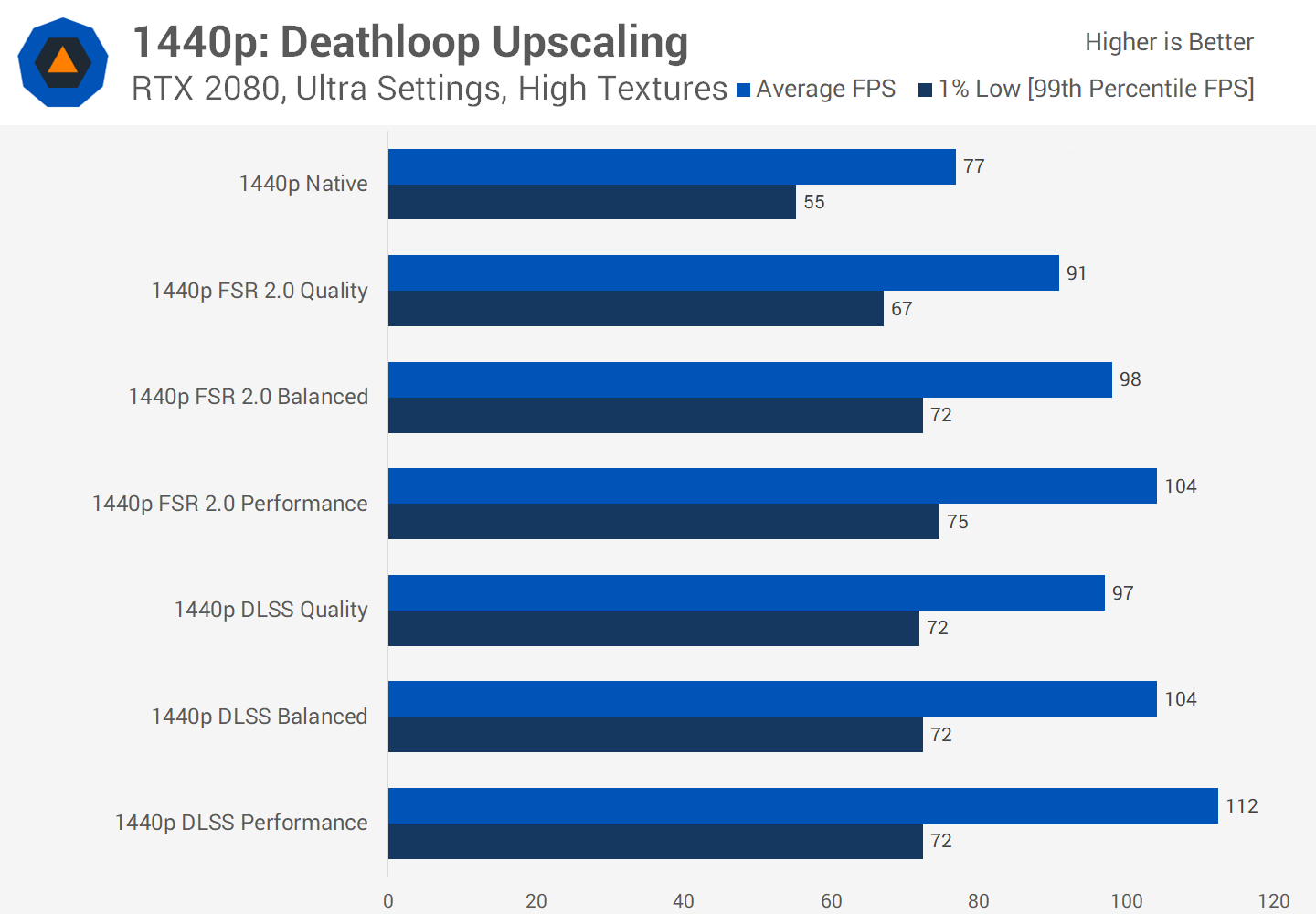

Some of the most interesting results are those from the RTX 2080. At 4K, FSR 2.0 was capable of a 26 percent performance uplift using the Quality mode compared to native. However, the gains with DLSS were much larger at 40 percent for the equivalent mode.

On this GPU, DLSS was 10 to 12 percent faster at 4K, which is a significant margin and clearly makes DLSS the favorable option. Again we don't know how FSR 2.0 works exactly, so we don't know which sort of GPU resources it favors, but I think the results on the 2080 are a little disappointing, especially when DLSS is very effective.

It's a similar situation at 1440p. The performance uplift from FSR 2.0 Quality was 18 percent compared to native rendering, but DLSS Quality was achieving a 26 percent uplift. Not as large of a difference in favor of DLSS, but Nvidia's technique was still around 7 percent faster overall, so again for 2080 owners it would make sense to use DLSS.
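It's worth noting that the uplift figures (versus native) and the "DLSS is X percent faster" figures are not directly subtractable, because both uplifts are measured against the same native baseline. A quick sketch of the conversion, using the Quality mode numbers quoted above (the function name is ours):

```python
def relative_speed_pct(uplift_a_pct, uplift_b_pct):
    """How much faster A is than B, in percent, given each one's uplift
    over the same native baseline."""
    ratio = (1 + uplift_a_pct / 100) / (1 + uplift_b_pct / 100)
    return (ratio - 1) * 100


if __name__ == "__main__":
    # RTX 2080 Quality mode figures from the text: DLSS uplift vs FSR 2.0 uplift.
    print(f"4K:    DLSS {relative_speed_pct(40, 26):.1f}% faster than FSR 2.0")
    print(f"1440p: DLSS {relative_speed_pct(26, 18):.1f}% faster than FSR 2.0")
```

A 40 percent uplift against a 26 percent uplift works out to roughly 11 percent in favor of DLSS, not 14, which lines up with the 10 to 12 percent range we saw at 4K. The 1440p figures work out to just under 7 percent.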

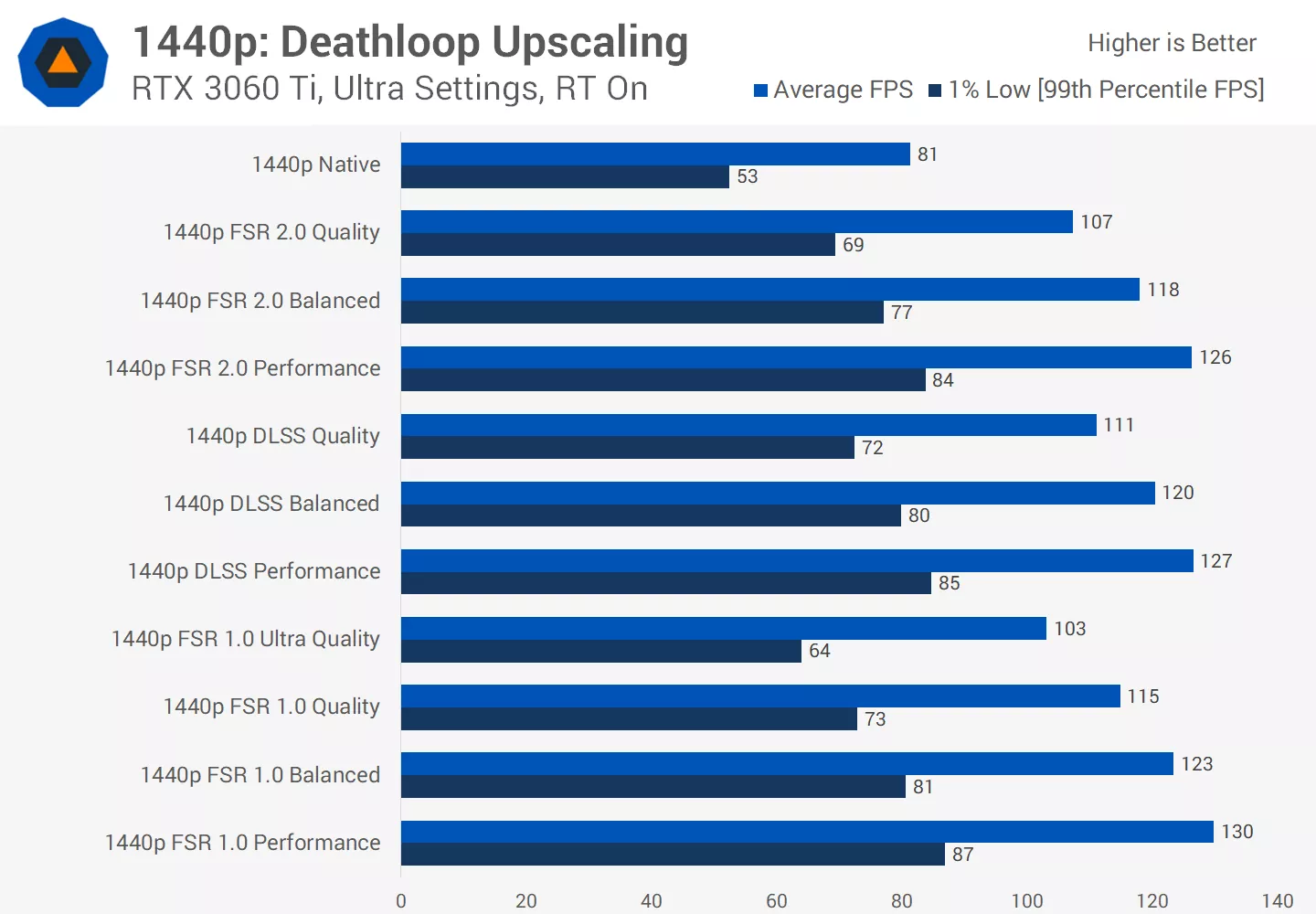

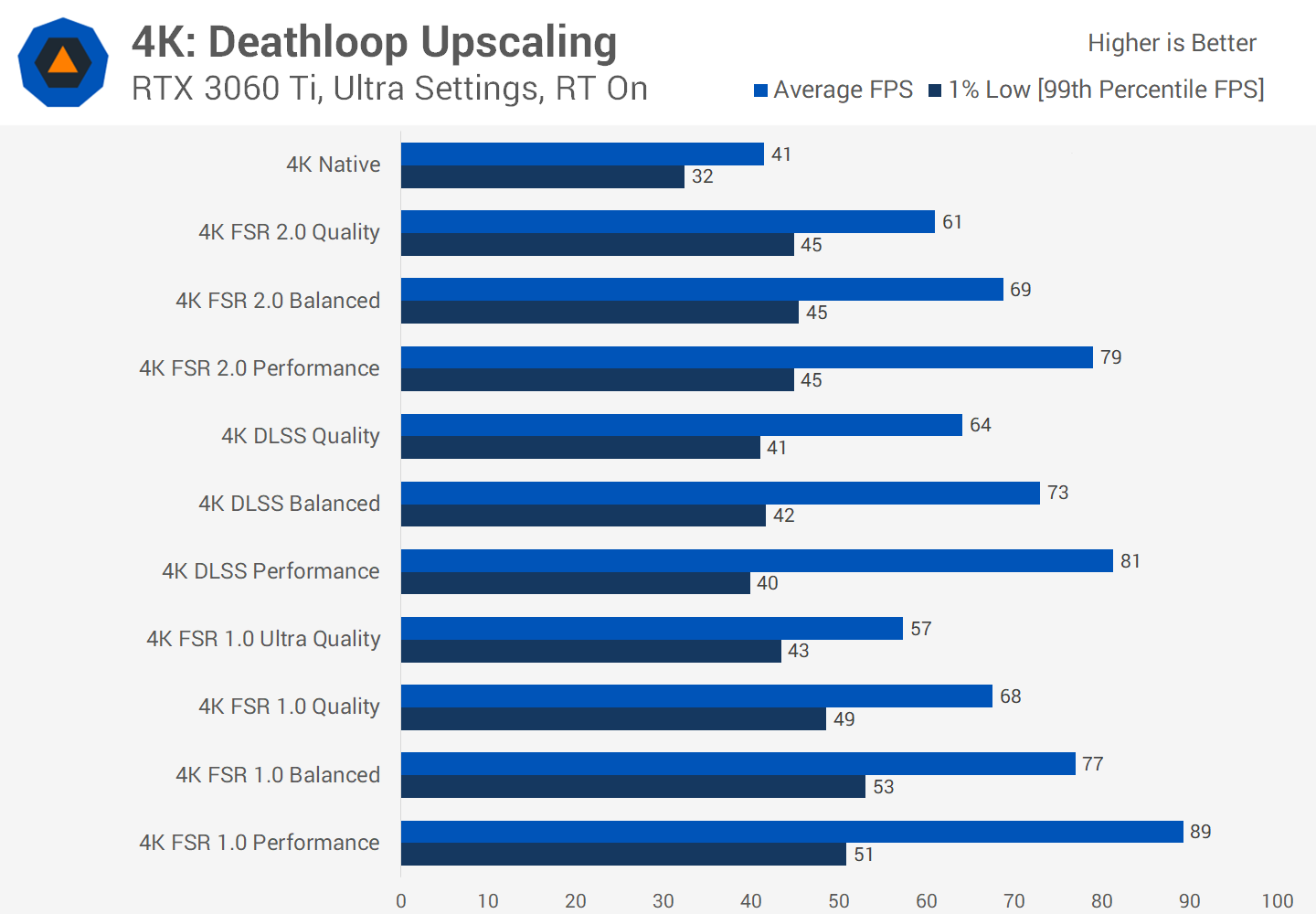

GeForce RTX 3060 Ti: FSR 2 vs. DLSS vs. Native

Radeon RX 6800 XT: FSR 1 vs. FSR 2 vs. Native

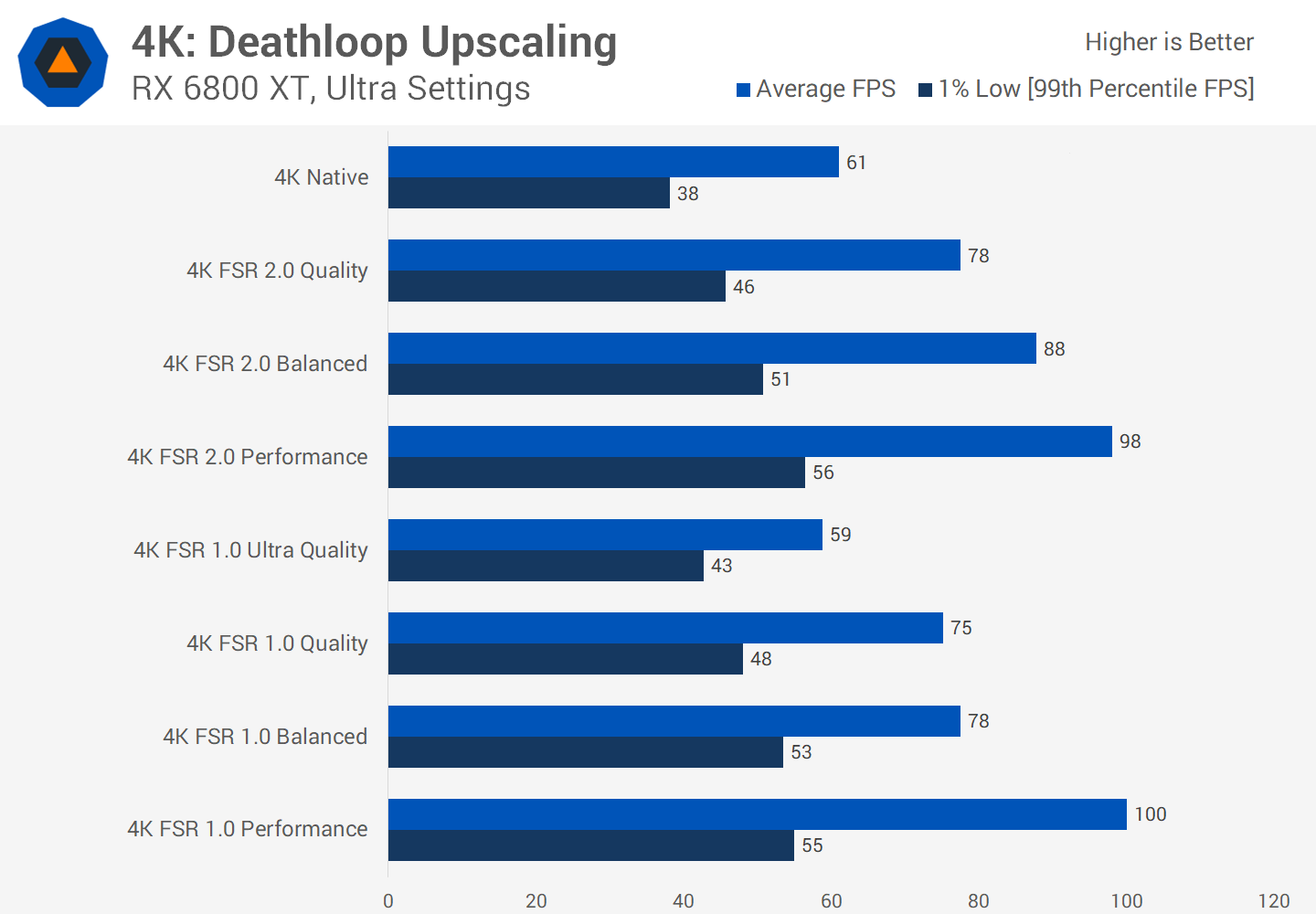

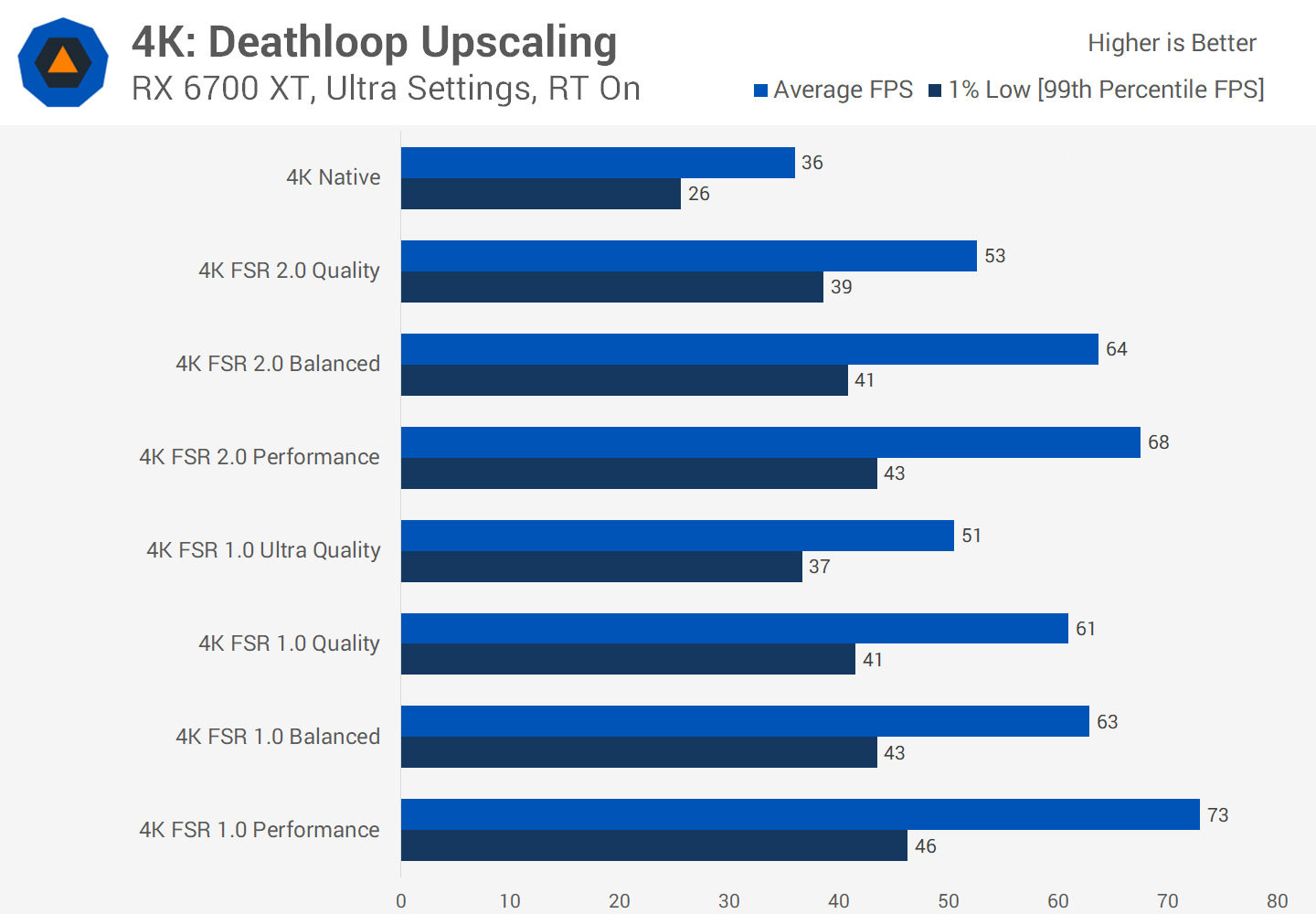

Let's take a look at some modern high-end GPUs. At 4K with the RX 6800 XT, FSR 2.0 Quality mode was effective, delivering a 28 percent performance gain over native rendering. Using the Performance mode increased that to 61 percent over native, and with most modes either matching or beating FSR 1.0, it's clear that on a high-end card like this playing at a high resolution you should be using FSR 2.0.

Radeon RX 6700 XT: FSR 1 vs. FSR 2 vs. Native

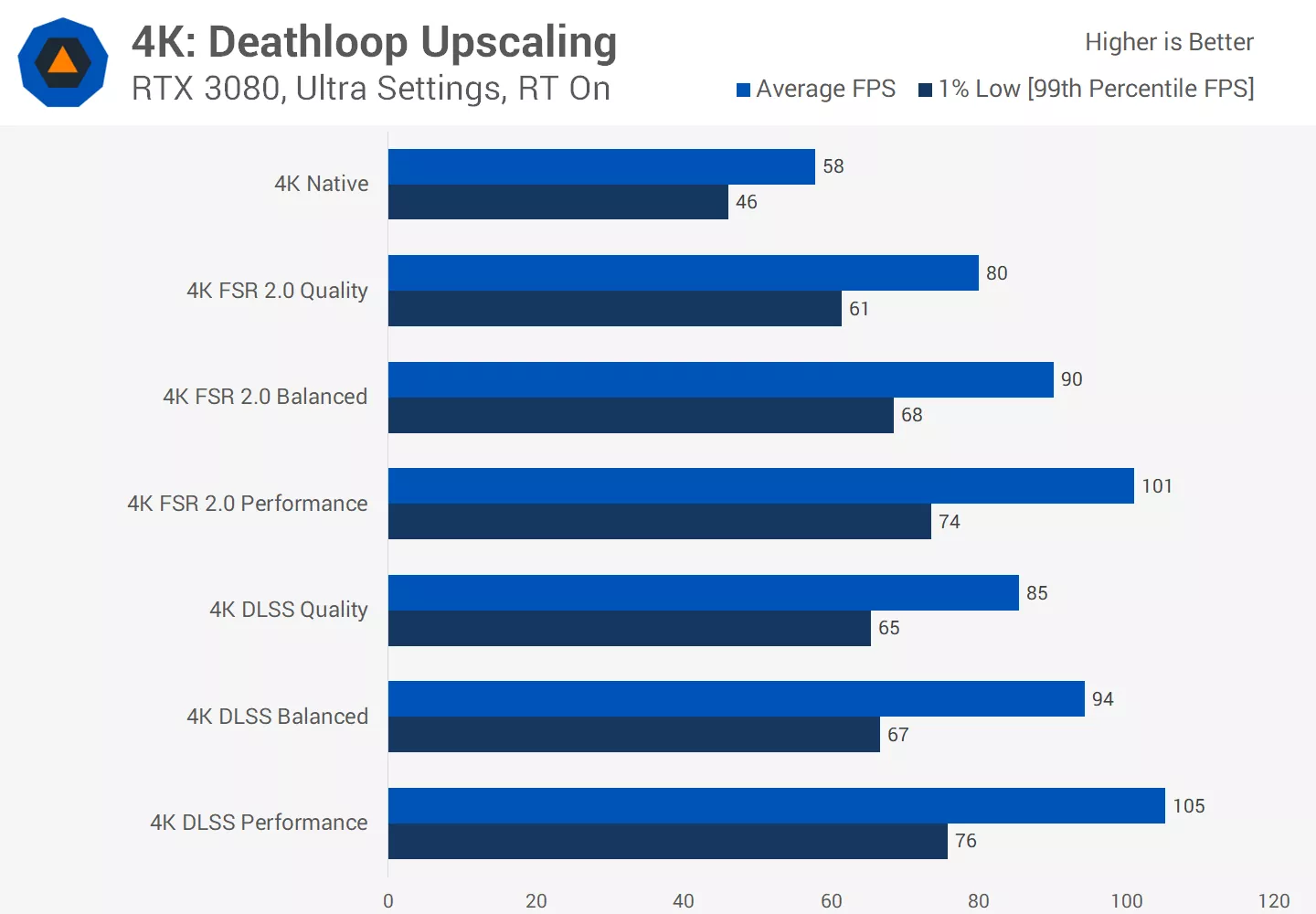

GeForce RTX 3080: FSR 2 vs. DLSS vs. Native

Then for the RTX 3080 using the same settings at 4K, we see a larger gain of 38 percent for FSR 2.0 Quality vs native, and 74 percent for the Performance mode. However, like with other Nvidia GPUs that we've benchmarked, DLSS is the faster option, delivering 4 to 6 percent higher frame rates at the equivalent quality settings to FSR 2.0. So my general thoughts on using DLSS over FSR 2.0 on Nvidia's latest GPUs hold true.

What We Learned

It's clear that FSR 2.0 runs best on the latest GPU architectures like AMD's RDNA and RDNA2 designs, as well as Nvidia's Ampere. These GPUs consistently delivered the best results in terms of a performance uplift compared to native rendering. We don't think it's unreasonable to expect minimum gains of 30 to 40 percent using FSR 2.0 Quality mode on modern GPUs.

In all situations it still made sense to use or at least consider using FSR 2.0 as we didn't see performance go backwards, and results were generally favorable compared to FSR 1.0, but there are some instances where gains are smaller than expected.

However, it's not just the GPU architecture that influences performance. As we saw several years ago when testing DLSS, a key component is also the native rendering frame rate: you'll get higher gains if your baseline FPS is lower.

This is because FSR 2.0 has a fixed rendering cost, which takes up a larger proportion of the total frame rendering time at higher frame rates. But even if your baseline is 100 FPS or more, FSR 2.0 can still provide solid gains on newer architectures, provided you don't run into CPU bottlenecks.

It also seems to be the case that FSR 2.0 runs better on GPUs that are simply faster overall, as we saw when comparing the RX 5700 XT and RX 5500 XT: same architecture, same settings, yet the gains from the 5700 XT were better despite a higher baseline FPS. This is also what AMD suggested at launch: a longer FSR 2.0 processing time for less powerful GPUs.

It makes sense that cards with more resources could run the algorithm faster, especially as it doesn't utilize dedicated hardware. When the algorithm is run faster, it uses up proportionally less of the time it takes to render each frame, which can give a performance gain advantage.
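To illustrate how a fixed per-frame cost interacts with baseline frame rate and GPU speed, here's a deliberately simple model. It assumes render time scales linearly with pixel count and that the upscaling pass adds a fixed number of milliseconds. Both are idealizations, and the millisecond costs below are invented for the example rather than measured.

```python
def upscaled_uplift_pct(native_fps, upscaler_ms, pixel_fraction=1 / 1.5**2):
    """Percent FPS gain from upscaling under a simple fixed-cost model.

    native_fps:     frame rate at native resolution.
    upscaler_ms:    hypothetical cost of the upscaling pass per frame.
    pixel_fraction: rendered pixels / output pixels (default: Quality mode).
    """
    native_ms = 1000.0 / native_fps
    upscaled_ms = native_ms * pixel_fraction + upscaler_ms
    return (native_ms / upscaled_ms - 1) * 100


if __name__ == "__main__":
    for fps in (30, 60, 100):
        for cost_ms in (1.0, 3.0):  # made-up costs: a faster vs a slower GPU
            gain = upscaled_uplift_pct(fps, cost_ms)
            print(f"{fps} FPS native, {cost_ms} ms upscaler: {gain:+.0f}%")
```

The absolute numbers are far more optimistic than anything we measured, since real games have plenty of work that doesn't shrink with resolution. The trends are the point: the gain falls as the baseline frame rate rises, and it falls further when the upscaling pass takes longer.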

This is also relevant for budget and older GPUs. Cards like the RX 570 and GTX 1650 Super simply take longer to process FSR 2.0, which leads to more limited performance uplifts even in "ideal" conditions for upscaling. Compounding this are the limitations of older architectures. For example, if parts of FSR 2.0 use FP16 processing like FSR 1.0 does, then the algorithm will have to fall back to FP32 processing on GPU architectures like Polaris that don't natively support FP16, hurting performance. We don't yet have the full picture on what architectural features are necessary for maximum FSR 2.0 performance, but we'd be surprised if FP16 wasn't a factor.

Having said that, even five-year-old GPUs can run and benefit from FSR 2.0, which you can't say for DLSS. We'll certainly take a 10 to 20 percent performance uplift from this temporal algorithm on those cards, and would use it over FSR 1.0 despite the smaller performance gains.

Across the four GPUs we tested today that also support DLSS, from both Turing and Ampere generations, FSR 2.0 performance gets close to DLSS using equivalent quality settings. However, DLSS typically does run better, up to 12 percent faster in the best case scenario on the RTX 2080. So for Nvidia GPU owners with Tensor core equipped cards, our recommendation would be to use DLSS, for the small performance advantage and also because in some situations it delivers better image quality as well.

Finally, it's worth repeating that this is a sample size of one game. We'll need to do more in depth testing a few months down the track to see how FSR 2.0 works across a wider range of titles. By then we should also have the FSR 2.0 source code and hopefully experts in that area can dive in and give us a good picture of what's going on. But for now, we can still learn a bit about the optimal situations for FSR 2.0 in Deathloop, so hopefully this testing has been valuable.