Servers are probably not near the top of your list of conversation topics, nor are they something most people think about. But it turns out there are some interesting changes happening in server design that will start to have a real-world impact on everyone who uses both traditional and new computing devices.

Everything from smart digital assistants to autonomous cars to virtual reality is being enabled and enhanced with the addition of new types of computing models and new types of computing accelerators to today's servers and cloud-based infrastructure. In fact, this is one of the key reasons Intel recently doubled down on their commitment to server, cloud, and data center markets as part of the company's evolving strategy.

Until recently, virtually all the computing effort done on servers, from email and web page delivery to high-performance computing, was done on CPUs that are conceptually and architecturally similar to the ones found in today's PCs.

Traditional server CPUs have made enormous improvements in performance over the last several decades, thanks in part to the benefits of Moore's Law. In fact, just this week Intel announced the Xeon E7 v4, a server CPU with 24 independent cores that is optimized for analytics.

Recognizing the growing importance of cloud-based computing models, a number of competitors have tried to work their way into the server CPU market but, at last count, Intel still owns a staggering 99% share. Qualcomm has announced some ARM-based server CPUs, and Cavium introduced their new ThunderX2 ARM-based CPU at last week's Computex show in Taiwan, but both companies face uphill battles. A potentially more interesting competitive threat could come from AMD. After a several-year competitive lull, AMD is expected to make a serious re-entry into the server CPU market this fall, when their new x86 core, code-named Zen and previewed at Computex, is expected to be announced and integrated into new server CPUs.

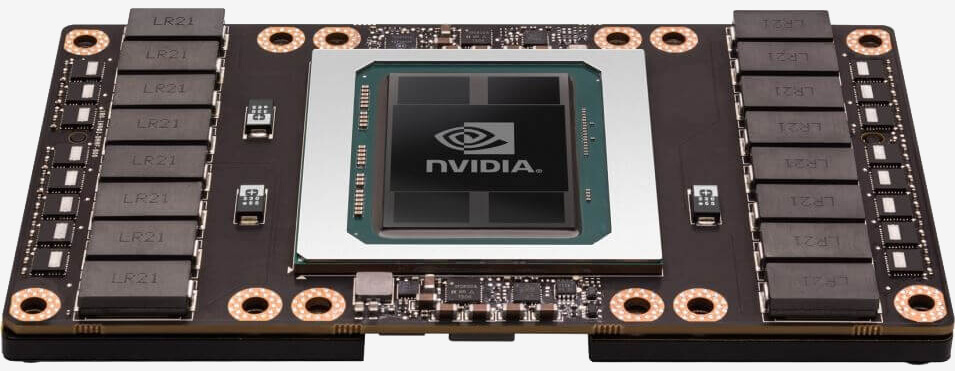

Some of the more interesting developments in server design are coming from the addition of new chips that serve as accelerators for specific kinds of workloads. Much as a GPU inside a PC works alongside the CPU and powers certain types of software, new chips are being added to traditional servers in order to enhance their capabilities. In fact, GPUs are now being integrated into servers for applications such as graphics virtualization and artificial intelligence. The biggest noise has been created by Nvidia with their use of GPUs and GPU-based chips for applications like deep learning. While CPUs are essentially optimized to do one thing very fast, GPUs are optimized to do lots of relatively simple things simultaneously.

Visual-based pattern matching, at the heart of many artificial intelligence algorithms, for example, is ideally suited to the simultaneous computing capabilities of GPUs. As a result, Nvidia has taken some of their GPU architectures and created the Tesla line of accelerator cards for servers.
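To make the "lots of simple things simultaneously" idea concrete, here is a minimal Python sketch of pattern matching over a one-dimensional signal. It is an illustration only, not how Nvidia's hardware or any deep learning framework works: the function names, the sample data, and the sum-of-squared-differences score are all assumptions chosen for the example. The point is that the score at each position depends on nothing but that position's own window, so the work can be handed out to many workers at once and still give the same answer as a one-at-a-time loop.

```python
from concurrent.futures import ThreadPoolExecutor


def match_score(signal, pattern, offset):
    """Sum of squared differences between the pattern and one window of the signal."""
    return sum((signal[offset + i] - p) ** 2 for i, p in enumerate(pattern))


def best_match_serial(signal, pattern):
    """Score every window one after another (the 'one thing at a time' style)."""
    offsets = range(len(signal) - len(pattern) + 1)
    scores = [match_score(signal, pattern, o) for o in offsets]
    return scores.index(min(scores))


def best_match_parallel(signal, pattern, workers=4):
    """Score every window independently; no window needs another window's result."""
    offsets = range(len(signal) - len(pattern) + 1)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        scores = list(pool.map(lambda o: match_score(signal, pattern, o), offsets))
    return scores.index(min(scores))


# Hypothetical sample data: the pattern appears exactly at offset 3.
signal = [0, 1, 0, 3, 5, 3, 0, 1, 0]
pattern = [3, 5, 3]

print(best_match_serial(signal, pattern))
print(best_match_parallel(signal, pattern))
```

Python threads will not actually make this faster; the thread pool only stands in for the thousands of simple cores on a GPU. What carries over is the structure of the problem: many small, independent calculations, which is the kind of workload the accelerators described here are built for.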

While not as well known, Intel has actually offered a line of parallel-processing optimized chips they call Xeon Phi to the supercomputing and high-performance computing (HPC) market for several years. Unlike Tesla (and forthcoming offerings AMD is likely to bring to servers), Intel's Phi chips are not based on GPUs but on a different variant of its own x86-based designs. Given the growing number of parallel processing-based workloads, it wouldn't be surprising to see Intel bring the parallel computing capabilities of Phi to the more general purpose server market in the future for machine learning workloads.

In addition, Intel recently made a high profile purchase of Altera, a company that specializes in FPGAs (field programmable gate arrays). FPGAs are essentially programmable chips that can be used to perform a variety of specific functions more efficiently than general purpose CPUs and GPUs. While the requirements vary depending on workloads, FPGAs are known to be optimized for applications like signal processing and high-speed data transfers. Given the extreme performance requirements of today's most demanding cloud applications, the need to quickly access storage and networking elements of cloud-based servers is critical and FPGAs can be used for these purposes as well.

Many newer server workloads, such as big data analytics engines, also require fast access to huge amounts of data in memory. This, in turn, is driving interest in new types of memory, storage, and even computing architectures. In fact, these issues are at the heart of HP Enterprise's The Machine concept for a server of the future. In the nearer term, memory architectures like the Micron and Intel-driven 3D XPoint technology, which combines the benefits of traditional DRAM and flash memory, will help drive new levels of real-time performance even with existing server designs.

The bottom line is that today's servers have come a long way from the PC-like, CPU-dominated world of just a few years back. As we see the rise of new types of workloads, we're likely to see even more chip accelerators optimized to do certain tasks. Google, for example, recently announced a TPU, which is a chip they designed (many believe it to be a customized version of an FPGA) specifically to accelerate the performance of their TensorFlow deep learning software. Other semiconductor makers are working on different types of specialized accelerators for applications such as computer vision and more.

In addition, we're likely to see combinations of these different elements in order to meet the wide range of demands future servers will face. One of Intel's Computex announcements, for example, was a new server CPU that integrated the latest elements of its Xeon line with FPGA elements from the Altera acquisition.

Of course, simply throwing new chips at a workload won't do anything without the appropriate software. In fact, one of the biggest challenges of introducing new chip architectures is the amount of effort it takes to write (or re-write) code that can specifically take advantage of the new benefits the different architectures offer. This is one of the reasons x86-based traditional CPUs continue to dominate the server market. Looking forward, however, many of the exciting new cloud-based services need to dramatically scale their efforts around a more limited set of software, which makes the potential opportunity for new types of chip accelerators a compelling one.

Diving into the details of server architectures can quickly get overwhelming. However, having at least a basic understanding of how they work can help give you a better sense of how today's cloud-based applications are being delivered and might provide a glimpse into the applications and services of tomorrow.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting and market research firm. You can follow him on Twitter @bobodtech. This article was originally published on Tech.pinions.