Today we're taking a look at CPU performance in Battlefield 2042 and this may well be the most difficult benchmark we've ever done. The problem faced when trying to test a multiplayer game like Battlefield 2042 is that it's extremely difficult to get accurate comparative data. Testing one or two hardware configurations isn't too difficult or that time consuming... play the game on the same map under the same conditions for a few minutes, do that three times to record the average, and you have a pretty good idea of how they compare. It might not be an exact apples to apples comparison, but it's certainly ballpark.

But testing 20+ configurations to compare a wide range of CPUs is a massive undertaking. Long story short, it's taken me 7 straight days of doing nothing but trying to load into 128-player conquest matches on the same map (and succeeding!).

For testing we've used the Orbital map, and of course that map wasn't always in the rotation, so we had to wait for it to cycle back in. This, along with a number of other factors, meant I was only able to test 3 or 4 CPUs per day. We've also included separate 60-second tests on the same map using bots, which is a more controlled environment as there's a fixed number of players in the game and they're all active. It isn't as CPU demanding, as there are fewer players and the AI load is different, but we're more confident in the accuracy of that data because it's a more controlled test.

The 128-player conquest data is based on 3 minutes of gameplay, and because the number of players in the server can change, as can what those players are actually doing, there's more variance. The 3-run average helps to address this, but please be aware the margin for error is higher than in our more controlled testing, and I was certainly seeing bigger run-to-run variance.
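
To make the three-run averaging concrete, here's a minimal Python sketch of one way run-to-run spread can be quantified. It's an illustration, not our actual tooling: the `summarize_runs` helper is hypothetical, and the three per-run averages in the usage example are made-up placeholder values, not results from this test.

```python
from statistics import mean, stdev


def summarize_runs(run_averages):
    """Return (mean fps, coefficient of variation in %) for a list of per-run averages.

    Hypothetical helper for illustration: the coefficient of variation expresses
    run-to-run spread relative to the mean, so noisy and stable tests can be compared.
    """
    avg = mean(run_averages)
    # stdev() needs at least two data points; a single run has no measurable spread
    spread = stdev(run_averages) / avg * 100.0 if len(run_averages) > 1 else 0.0
    return avg, spread


# Placeholder values for three 3-minute runs (not real data)
avg, cv = summarize_runs([100.0, 104.0, 96.0])
print(f"average: {avg:.1f} fps, run-to-run spread: {cv:.1f}%")
```

A 128-player test with a larger spread than the bot test would show up directly as a higher percentage here.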

It's also worth noting that during our testing, the game received a patch, and Nvidia released an updated GPU driver. We used the GeForce RTX 3090 for testing as it was typically faster than the Radeon RX 6900 XT in this game, as we discovered in the GPU benchmark a week ago.

Neither of these updates affected the results. We believe the Nvidia driver mostly addressed DLSS-related issues, while the game patch focused on stability and bug fixes. Results were verified with the GeForce Game Ready 496.76 WHQL driver on the latest version of the game. All configurations used 32GB of dual-rank, dual-channel DDR4-3200 CL14 memory, and we've also included some results with a few different memory configurations for good measure.

For now, let's start with the CPU testing...

Benchmarks

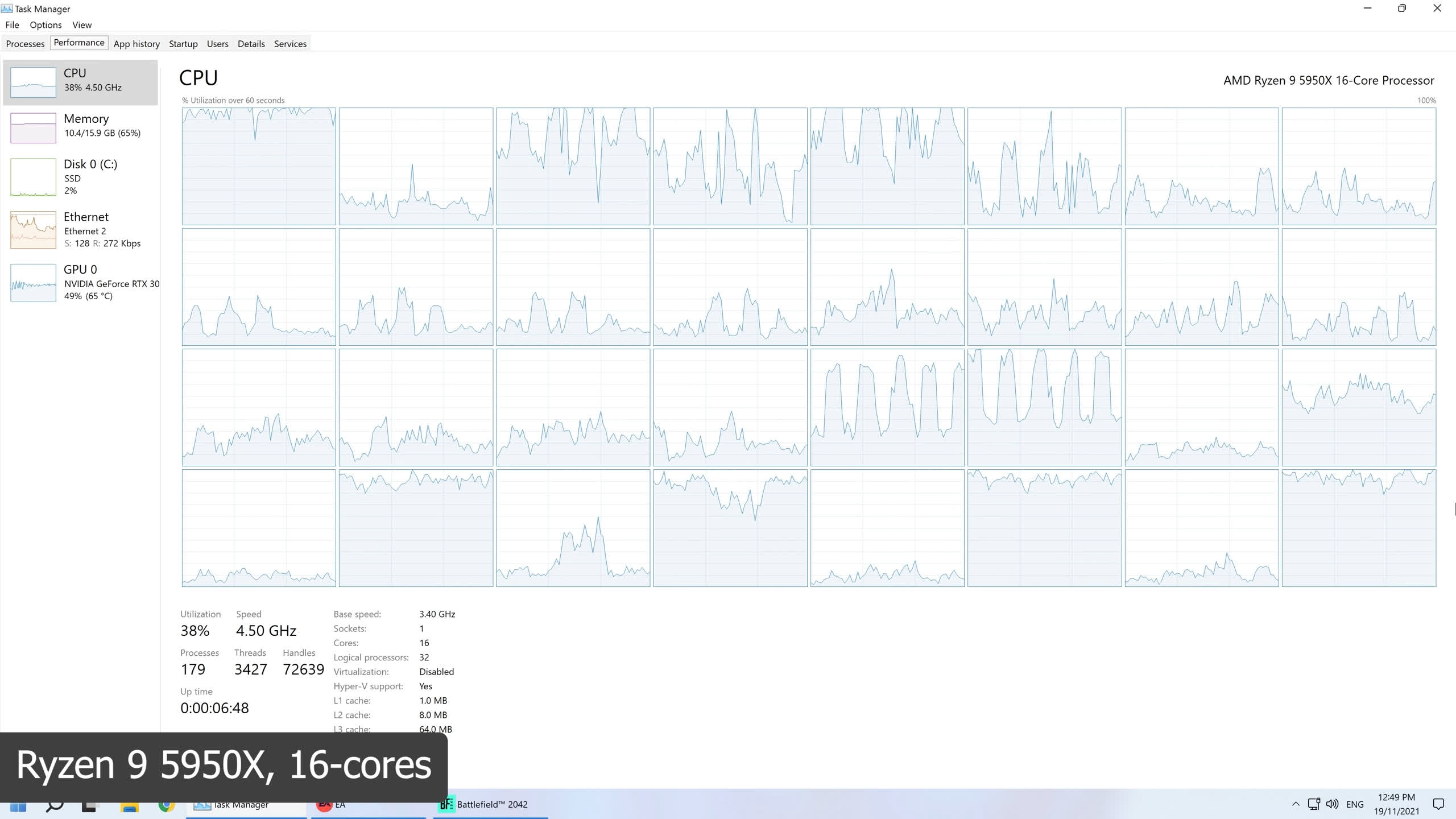

Typically, we test CPU performance at lower resolutions such as 1080p to help remove any GPU bottlenecks, though with Battlefield 2042 multiplayer that's not really necessary as you'll see soon when we test at 1440p.

But here at 1080p, we see that for the best performance you'll want a 12th-gen Intel processor, though we're talking about an 8% performance advantage for the 12900K over the 5950X. The 12600K was also 9% faster than the 5800X, though the 1% low and 0.1% low data was comparable. What's really interesting to note though is that despite the game being very CPU demanding – at least by normal gaming standards – the 5950X was just 5% faster than the 5600X when comparing the average frame rate, and up to 14% faster for the 1% low.
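
For readers unfamiliar with the metrics, the sketch below shows one common way to derive average fps, 1% lows and 0.1% lows from a frame time log, plus the "X% faster" comparison used throughout. This is an assumption about methodology, not a description of our capture tool: definitions of the "low" metrics vary between outlets (some use a percentile frame time instead), and the function names and synthetic frame time list are hypothetical.

```python
def average_fps(frame_times_ms):
    # Total frames divided by total time, which weights long frames correctly
    # (averaging per-frame fps values would overstate the result)
    return len(frame_times_ms) * 1000.0 / sum(frame_times_ms)


def low_fps(frame_times_ms, percent):
    # Assumed definition: fps equivalent of the mean frame time of the
    # slowest `percent` of frames (1.0 for "1% low", 0.1 for "0.1% low")
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, round(len(slowest_first) * percent / 100.0))
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / count)


def percent_faster(fps_a, fps_b):
    # How much faster result A is than result B, in percent
    return (fps_a / fps_b - 1.0) * 100.0


# Synthetic log: 990 smooth 10 ms frames plus 10 stutters of 20 ms
frames = [10.0] * 990 + [20.0] * 10
print(f"avg {average_fps(frames):.1f} fps, "
      f"1% low {low_fps(frames, 1.0):.1f} fps, "
      f"0.1% low {low_fps(frames, 0.1):.1f} fps")
```

The ten stutter frames barely move the average but halve the 1% low, which is why the low metrics are the better indicator of stutter.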

Battlefield 2042 uses up to 8 cores when available, so with the 5950X half the cores essentially did nothing. On Zen 3, those 8 cores aren't maxed out either, so the 5950X saw utilization of around 30 to 40% in 128-player games.

With the 5800X, utilization was more in the range of 70-80%. So the 5950X's lead of a few frames would be down to its slightly higher clock frequency, as cache capacity per CCD (32MB of L3) is the same on both parts.

The 5600X was similar despite being a 6-core/12-thread CPU, as the game didn't max out the 5800X. That means a fast 6-core Zen 3 processor is still fine, though utilization was often locked at 100%. Despite this heavy load, the game didn't stutter any more than it did on higher core count Zen 3 processors. But it does mean you're right on the edge with this part, and slower 6-core/12-thread processors will start to see a decline in performance, assuming your GPU is capable of driving over 100 fps at your desired quality settings.
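
The utilization figures above line up with a simple model: if the game generates roughly the same amount of CPU work regardless of processor, the reported total is that work divided by the number of logical threads. The sketch below assumes exactly that, which is a deliberate simplification (it ignores clock speed, IPC and the fact that an SMT thread isn't worth a full core), and takes the midpoint of the 5800X's 70-80% range as its starting point. The function name is hypothetical.

```python
def reported_utilization(busy_thread_equivalents, logical_threads):
    # Simplified model: total CPU usage shown by the OS is the busy work
    # divided by the available logical threads, capped at 100%
    return min(100.0, busy_thread_equivalents / logical_threads * 100.0)


# Assumption: ~75% of the 5800X's 16 threads = 12 thread-equivalents of game work
work = 0.75 * 16

for name, threads in [("Ryzen 9 5950X", 32), ("Ryzen 7 5800X", 16), ("Ryzen 5 5600X", 12)]:
    print(f"{name}: {reported_utilization(work, threads):.1f}%")
```

The model lands at 37.5% for the 5950X, inside the 30-40% range we observed, and at a pinned 100% for the 5600X. What it can't do is predict frame rates, which is one reason utilization alone is a poor guide to performance.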

So for the newer CPU architectures it's less about core count and more about the IPC that particular architecture offers. There is a little more variance on Intel's side as L3 cache capacity increases from Core i5 to i7 and from i7 to i9.

The difference between the 11th-gen models is minimal, and we're only talking about 6-cores vs 8-cores. With 10th-gen we do see as much as a 15% variation between the 10600K and 10900K, and this is largely due to the L3 cache capacity.

AMD's Zen 2 processors mixed it up with Intel 10th-gen, and it was good to see parts like the Ryzen 5 3600X neck and neck with the Core i5-10600K. As we get down to the Zen+ parts, you can see these older Ryzen CPUs starting to show their age. The 2700X struggled despite being an 8-core processor, with 0.1% lows of 40 fps, and while its 79 fps average was still respectable, the 5800X was 43% faster here.

Modern 4-core/8-thread CPUs can still technically play Battlefield 2042, but you can expect a lot more noticeable stuttering than on equivalent 6 and 8-core models. What can't deliver playable performance are 4-core/4-thread CPUs such as the Core i3-8350K: the game was essentially broken on this CPU, providing nothing but constant stuttering.

Looking over these numbers, the most surprising part is that even when throwing a significant amount of processing power at the game, it's difficult to push over 100 fps in large 128-player matches. We'll talk more about this towards the end of the article.

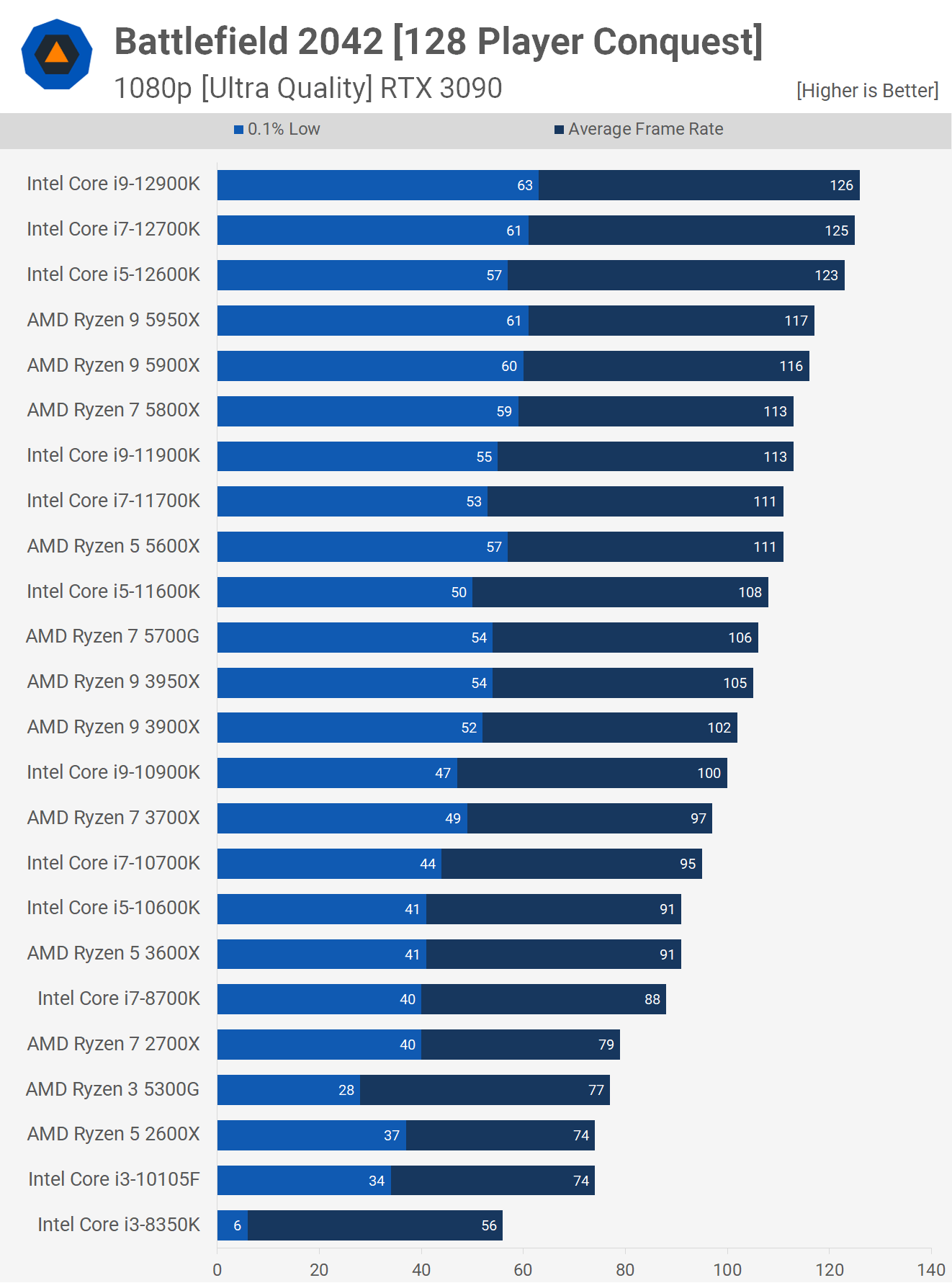

The 1440p results are interesting as they mirror our GPU testing much more closely, where the CPU limits were largely removed. With high-end CPUs such as Intel 12th-gen or AMD Ryzen 5000 series, these results are more GPU limited. For the rest of the CPUs, performance is about the same as at 1080p. For example, the Core i9-11900K dropped from 113 fps on average to 110 fps.

This explains why a lot of Battlefield players haven't been able to improve performance by lowering the resolution or reducing quality settings: they're simply not GPU limited. That said, they're also probably not always CPU limited in the way they think they are.
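
A toy model helps explain why lowering the resolution doesn't help here. Assuming the CPU and GPU work on frames in parallel, the slower of the two sets the frame rate, so shaving GPU time only pays off while the GPU is the bottleneck. The frame times below are hypothetical, chosen for illustration, not measured values, and `pipelined_fps` is an illustrative name.

```python
def pipelined_fps(cpu_ms, gpu_ms):
    # Toy model: with CPU and GPU working in parallel, the slower stage
    # determines how often a finished frame can be delivered
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical CPU-bound case: the CPU needs 10 ms per frame (100 fps cap)
cpu_ms = 10.0
for label, gpu_ms in [("1440p", 9.0), ("1080p", 6.0), ("1080p low", 4.0)]:
    print(f"{label}: {pipelined_fps(cpu_ms, gpu_ms):.0f} fps")
```

Cutting GPU time from 9 ms to 4 ms changes nothing because the CPU stage still takes 10 ms. Real games overlap the two stages less neatly than this, so treat it as intuition rather than a prediction.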

Testing with Bots

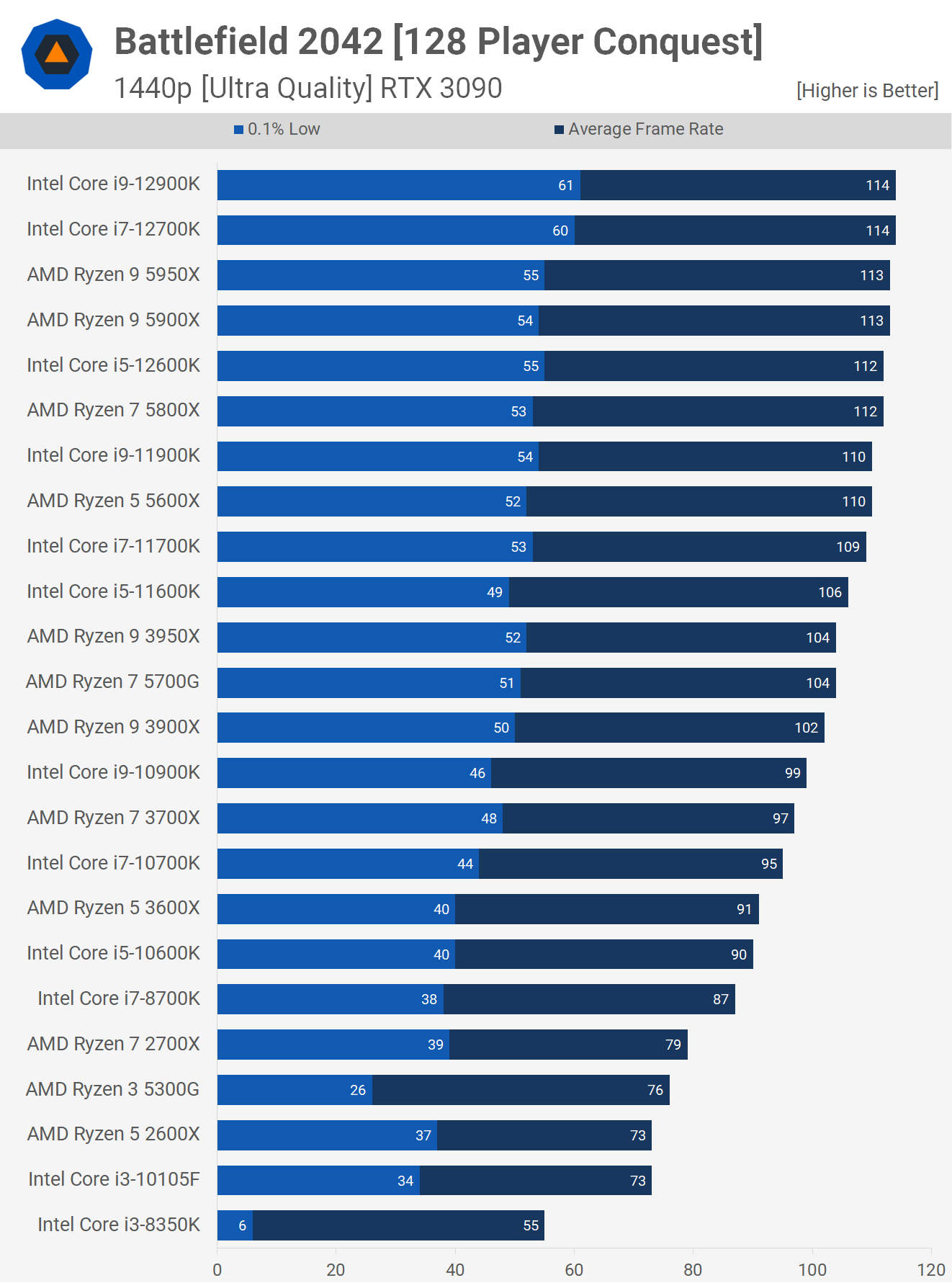

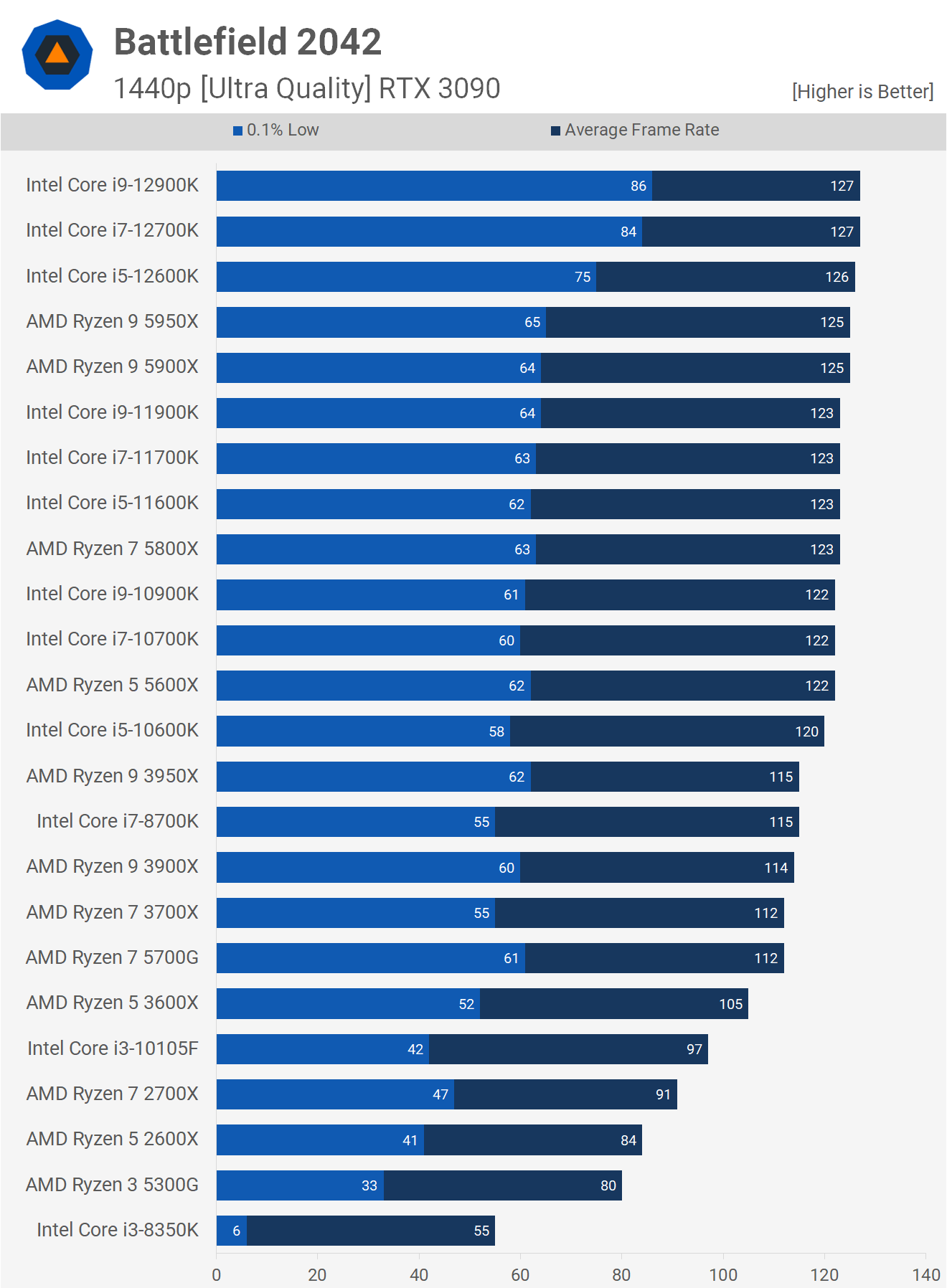

Now, this data is based on a custom bot match using nothing but the game's AI. Compared to the 128-player results we just saw, CPU utilization for a part like the Ryzen 5 5600X dropped by ~15%. That's enough to boost the average frame rate by around 30% and improve 1% lows by a massive 50%, though interestingly 0.1% lows remained much the same, at least for the 5600X.

It's a similar story with older 6-core/12-thread processors like the 2600X, where the average frame rate increased by 20% while the 1% low was boosted by an incredible 59%.

But it's not just the mid to low-end CPUs that benefit massively from this lighter workload. The 12900K's average frame rate jumped by 33% with a 94% increase in 1% low performance. It's interesting to see such a massive change in performance through what is only a very small difference in utilization. But of course, the CPU load is likely very different which is why utilization figures on their own can be quite misleading.

Jumping to 1440p, we become GPU bound and this sees processors from the 10600K up all delivering similar average frame rates, though the 12th-gen CPUs were much better when looking at the 0.1% low performance.

Memory Benchmarks

Given that we're heavily CPU limited in Battlefield 2042, it makes sense that memory would play a key role when it comes to performance, and it sure does. That said, we saw no improvement when moving to DDR5-6000 with the 12900K, and in fact we saw a slight frame rate regression. That's a real shame and yet another blow to the current state of DDR5.

Moving on to the Ryzen 9 5950X results, I installed some budget DDR4-3000 CL18 single-rank memory, and here we see that the low-latency DDR4-3200 memory boosts performance by 18%, with a 15% improvement to 1% lows. That's a significant difference given DDR4-3000 and DDR4-3200 are similar, at least in terms of frequency. There is, of course, a big difference between CL18 and CL14 timings, and our DDR4-3200 kit is also dual-rank.
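
The CL gap is easier to appreciate in nanoseconds. A common rule of thumb converts CAS latency to absolute (first-word) latency as CL × 2000 / data rate in MT/s, since DDR transfers twice per clock. This only covers the CAS timing; it ignores the other timings and the single- versus dual-rank difference between these kits, so treat it as a partial explanation. The helper name is illustrative.

```python
def cas_latency_ns(cas_latency_cycles, data_rate_mts):
    # DDR memory transfers twice per clock, so the I/O clock in MHz is
    # data_rate / 2 and one cycle lasts 1000 / (data_rate / 2) ns
    return cas_latency_cycles * 2000.0 / data_rate_mts


budget_kit = cas_latency_ns(18, 3000)   # DDR4-3000 CL18
test_kit = cas_latency_ns(14, 3200)     # DDR4-3200 CL14
print(f"DDR4-3000 CL18: {budget_kit:.2f} ns")
print(f"DDR4-3200 CL14: {test_kit:.2f} ns")
print(f"reduction: {(1 - test_kit / budget_kit) * 100:.0f}%")
```

That works out to 12 ns versus 8.75 ns, roughly 27% lower CAS latency for only a ~7% increase in data rate.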

Using the same memory configurations, we saw up to a 21% improvement with the 8700K. Although this is a completely different CPU architecture, it makes sense that the more CPU limited you are, the bigger the boost higher quality memory can give you. So if you have an 8700K and you're only able to drive around 70 fps with a relatively high-end GPU, tuning your memory could lead to noticeable performance improvements.

What We Learned

As many gamers have noticed, Battlefield 2042 is a very CPU demanding game, and pushing past the 100 fps barrier can be a real challenge. But is this a failure on the developer's part? Is the game heavily unoptimized, and can it be fixed?

As we see it, the problem Battlefield 2042 faces is the same one all modern games face. Yes, it's very CPU intensive, but gamers shouldn't be concerned about the percentage utilization of their CPUs in Battlefield, as other aspects of the CPU, such as cache performance or memory latency, might be limiting performance, and those aren't reflected in that figure. If the game were changed to use more of the CPU and push that number up on high-end parts, the extra load would cause performance issues on lower-end CPUs. The game seems to be demanding on multiple aspects of the CPU, and optimizing for all of them at once could be difficult, but that doesn't mean it's unoptimized overall.

So if you think it's bad now, many gamers would have no chance of achieving playable performance if the game was maxing out modern 8 or 12-core processors, for example. If we look at the official system requirements and focus on the AMD processors (the Intel recommendations are rubbish), we see that the minimum spec is a Ryzen 5 1600, and based on the testing we've seen here from parts like the R5 2600X, which is only marginally faster, that recommendation makes sense; it's an absolute minimum.

The recommended spec calls for at least a Ryzen 7 2700X, and this is where you want to be at a minimum. It has to be said that while this CPU was a bit overwhelmed, the game was perfectly playable, so the recommendation makes sense. Had the developer utilized the CPU more heavily, the recommended spec would become something like the 5800X, and at that point very few could enjoy the game.

So while gamers are often quick to criticize the developer by blurting out generic terms like unoptimized, the truth is it's a lot more complicated than that. And Battlefield 2042 does have a lot going on: they've doubled the player count and that's basically shown quad-core processors the door, while putting the heat on previous generation 6-core/12-thread processors. The game also features an advanced destruction system, weather effects, and so on.

At this point, we don't think the level of CPU usage we're seeing is unjustified or suggests the game is poorly optimized (referring to CPU/GPU... there have been other complaints). Could more be done to optimize the game? Probably, but would that radically change performance without compromising on player numbers or effects? I doubt it.

It's a fine balance between making the game playable for the majority of the fan base, and adding new features like increasing the player count to make the game more exciting. You can't simply do more while requiring less and I think that's what a lot of gamers were expecting.

Finally, if you're in the (small?) group of gamers who are willing to give Battlefield 2042 a second chance, what's the best CPU to go for? If you're on the AM4 platform, the Ryzen 7 5800X looks to be the best option, though as far as we can tell, the cheaper 5600X works just fine.

The 5800X has come down in price, and at $390 it offers a better cost-per-core ratio than the 5600X. Given that and the added headroom, it's probably the way to go. For Intel owners, it depends on what you have. If you're on anything older or slower than the Core i5-10600K, upgrading to 12th-gen with the 12600K or above is going to net you ~30% greater performance, which in Battlefield 2042 is a significant improvement.
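
The cost-per-core comparison is simple arithmetic. We haven't quoted a 5600X price here, so rather than assume one, the sketch below works out the price above which the $390 8-core 5800X becomes the cheaper option per core; plug in whatever the 6-core 5600X currently sells for. Both function names are illustrative.

```python
def cost_per_core(price_usd, cores):
    return price_usd / cores


def break_even_price(reference_price_usd, reference_cores, other_cores):
    # Price at which the other CPU matches the reference CPU's cost per core
    return cost_per_core(reference_price_usd, reference_cores) * other_cores


r7_5800x = cost_per_core(390, 8)
print(f"Ryzen 7 5800X: ${r7_5800x:.2f} per core")
print(f"5600X must cost more than ${break_even_price(390, 8, 6):.2f} for the 5800X to win")
```

At $390, the 5800X works out to $48.75 per core, so any 5600X price above $292.50 favors the 5800X on this metric alone.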

Right now the Core i5-12600K does look like the perfect CPU for Battlefield 2042, throw it on the MSI Z690 Pro-A or Gigabyte Z690 UD and you have a powerful $500 combo. And of course, DDR5 isn't required, in fact, you're best off avoiding it for now.

Speaking of memory, because this is a very CPU sensitive game, memory influences performance more than in most other games. For those gaming at 4K this will be less of an issue, but for those trying to drive as many frames as possible at lower resolutions, tightening timings and increasing frequency can improve performance considerably, as the gains of up to 21% in our memory testing show.

As for how much RAM you require? Not a lot. 16GB is plenty as total system usage when playing Battlefield 2042 never exceeded 12 GB in our testing. Generally, it hovered around 10 GB, and that was with 32 GB installed in our testbed. The only time you're going to creep well over that is when running out of VRAM, and the game does use a lot of VRAM with the ultra quality settings, so you'll want at least an 8GB graphics card, or more for playing at 1440p and higher.

Circling back to our earlier GPU testing, the 1080p data shows you only need a GeForce RTX 3060 Ti or Radeon RX 6700 XT when using a high-end CPU, because the CPU will be the primary performance-limiting component, not the GPU. For 1440p, an RTX 3080 or RX 6800 XT will be required, and our 1440p CPU and GPU data are very similar, with just a 10% variation in performance between the two test methods.

That's Battlefield 2042's CPU and system performance in a nutshell.

Bring a big CPU because you're going to need it...

Shopping Shortcuts:

- Intel Core i9-12900K on Amazon

- Intel Core i7-12700K on Amazon

- Intel Core i5-12600K on Amazon

- AMD Ryzen 9 5950X on Amazon

- AMD Ryzen 9 5900X on Amazon

- AMD Ryzen 7 5800X on Amazon

- Intel Core i9-11900K on Amazon

- Intel Core i7-11700K on Amazon

- Nvidia GeForce RTX 3070 Ti on Amazon

- AMD Radeon RX 6800 XT on Amazon