Not so much forgotten, but more a case of having to ignore some things for a simple analysis like this. For example, a fully unlocked Vega 20 (as used in the Radeon Instinct MI60) has much the same FP16, FP32, and FP64 throughput as a GV100, but the latter also has tensor cores, which can work in parallel with the shader units. However, the tensor cores perform an FP16 multiplication followed by an FP32 addition, so it's not simply a case of adding all the numbers together.
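As a rough illustration of that tensor-core operation (FP16 multiplication, FP32 accumulation), here is a scalar Python sketch. It rounds the inputs to half precision but keeps the products and the running sum in single precision, and it includes an all-FP16 version for comparison. It models only the numerics, not how the hardware batches the work into matrix tiles, and the helper names are hypothetical.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def mixed_precision_dot(a, b):
    """Dot product with FP16 inputs and an FP32 accumulator (tensor-core style)."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values always fits exactly in FP32.
        prod = to_fp32(to_fp16(x) * to_fp16(y))
        acc = to_fp32(acc + prod)
    return acc


def fp16_dot(a, b):
    """Same dot product, but rounding every intermediate result to FP16."""
    acc = 0.0
    for x, y in zip(a, b):
        prod = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + prod)
    return acc
```

With inputs of 2048 and 0.5 (each multiplied by 1), the FP32 accumulator keeps 2048.5, while the all-FP16 version rounds the sum back to 2048.0, because FP16 values near 2048 are spaced 2 apart.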

With regards to the tensor and RT cores, it's worth noting that the TU116 is 284 mm² and the TU106 is 445 mm² in area. The former has 24 SMs to the latter's 36, so if one scales the TU116 by a factor of 36/24, its area becomes 426 mm². Of course, the TU116 has no RT cores, nor the 4 sets of tensor cores per SM that the TU106 has; instead, these have been replaced by 4 sets of 32 FP16 ALUs. The TU116 also has 2 fewer memory controllers, but the rest of the architectural layout (GPC count and TPC structure) is the same in the two chips, so the extra 19 mm² of die space (445 - 426) accounts for the 2 memory controllers and the size difference between the tensor+RT cores and the banks of FP16 ALUs.
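The scaling arithmetic above can be checked in a few lines of Python. This sketch assumes, as the comparison does, that die area scales linearly with SM count. That is a simplification, since not every block on the die grows with the number of SMs. The function name is hypothetical.

```python
# Die areas (mm²) and SM counts quoted in the post
TU116_AREA_MM2 = 284
TU106_AREA_MM2 = 445
TU116_SMS = 24
TU106_SMS = 36


def scaled_area(area_mm2: float, sms_from: int, sms_to: int) -> float:
    """Naively scale a die's area in proportion to its SM count."""
    return area_mm2 * sms_to / sms_from


# TU116 blown up to TU106's 36 SMs
tu116_at_36_sms = scaled_area(TU116_AREA_MM2, TU116_SMS, TU106_SMS)  # 426.0

# Area left over for the 2 extra memory controllers and tensor+RT vs FP16 ALUs
residual = TU106_AREA_MM2 - tu116_at_36_sms  # 19.0
```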

So how big are the tensor and RT cores? Well, if you take a die shot of the TU102, you can hack out a rough estimate:

This is 754 mm² of die area, and architecturally it has the following layout:

Now, assuming the 2nd and 3rd images accurately represent the layout of the die shot, the SM units are roughly 12 mm² each, and the RT cores account for 0.6 mm² of that, or 5%. Working out which section of the die image holds the tensor cores is harder, but a complete guess puts them at around the same size as the RT block.

So in total, the tensor and RT cores account for (very, very, very approximately) 12% of the whole die. If such guessing is fairly close to the mark, then removing these units from the analysis would shift the position of the Turing and Volta chips, but not by much.
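One way to reproduce the ~12% figure is to take the per-SM estimates at face value (0.6 mm² for the RT core, and the guessed same again for the tensor cores) and multiply by the 72 SMs in a full TU102. The sketch below does just that; the tensor-core area is the guess from above, not a measurement, and the helper name is hypothetical.

```python
TU102_AREA_MM2 = 754
TU102_SMS = 72        # full TU102: 6 GPCs x 6 TPCs x 2 SMs
RT_CORE_MM2 = 0.6     # per-SM estimate from the die shot
TENSOR_MM2 = 0.6      # guess: assumed to be about the same as the RT block


def unit_share(per_sm_mm2: float, sm_count: int, die_mm2: float) -> float:
    """Fraction of the whole die taken up by a per-SM block."""
    return per_sm_mm2 * sm_count / die_mm2


share = unit_share(RT_CORE_MM2 + TENSOR_MM2, TU102_SMS, TU102_AREA_MM2)
# share is about 0.115, i.e. roughly 11.5% of the die
```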

You do make a good point about the inclusion of the HBM affecting the die size figures (i.e. AMD's Fiji and Vega, Nvidia's GV100), so I'll look at some die images and estimate the actual GPU sizes. I'll post updated graphs in this thread to show what difference this makes.

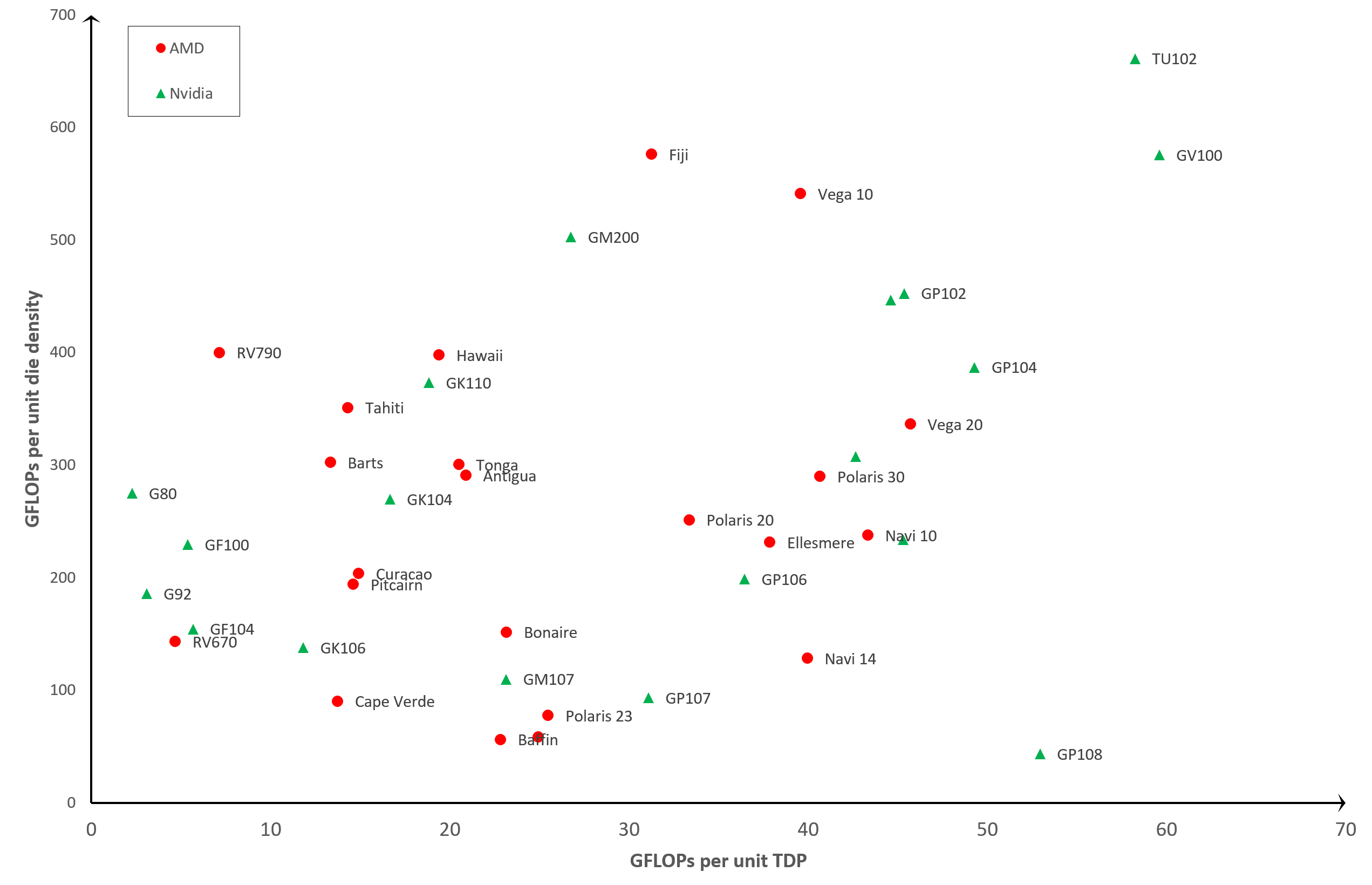

Edit: Here are the adjusted graphs, with the HBM removed from the die area figures. The TDP values remain the same, as they are for the whole card, but it hasn't been possible to adjust the transistor counts, as it's not clear whether AMD or Nvidia include the HBM's transistors in the total count.
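The HBM adjustment amounts to dividing the throughput by the GPU-only area instead of the whole package area. Here is a minimal sketch with a hypothetical `Chip` record. The numbers in the example are placeholders, not the values used in the graphs.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    gflops: float
    package_area_mm2: float    # area used originally (GPU plus any HBM stacks)
    hbm_area_mm2: float = 0.0  # estimated from die images; 0 for GDDR parts

    @property
    def gpu_area_mm2(self) -> float:
        """GPU-only area once the HBM stacks are taken out."""
        return self.package_area_mm2 - self.hbm_area_mm2

    def gflops_per_mm2(self, exclude_hbm: bool = True) -> float:
        """Throughput per unit area, optionally ignoring the HBM."""
        area = self.gpu_area_mm2 if exclude_hbm else self.package_area_mm2
        return self.gflops / area


# Placeholder values, purely for illustration
example = Chip("hypothetical HBM part", gflops=1000.0,
               package_area_mm2=500.0, hbm_area_mm2=300.0)
```

For a GDDR part `hbm_area_mm2` stays at 0, so both versions of the metric agree; only the HBM chips move on the adjusted graphs.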

The Vega 20 chip is missing from the 2nd graph because its GFLOPS per unit die area comes to 101, way above the rest. The actual Vega 20 chip is pretty small:

The HBM itself actually has more die area than the GPU.