In context: Nvidia’s much-awaited RTX 3090 Ti has been like a mirage ever since the company decided to reveal its existence. Originally announced at CES in January this year, Team Green’s “monster GPU” was supposed to secure the performance crown more firmly in its hands. Instead, the new card has been delayed to the point where what little hype it generated has largely died down.

The most recent rumors point to a March 29 launch date for the RTX 3090 Ti, with sales expected to start on the same day. However, those same murmurs suggest the MSRP will hover around $1,999, which is $500 above the suggested price of the regular RTX 3090. Retail GPU pricing has been improving lately, so there’s hope that at least some gamers will be able to afford one.

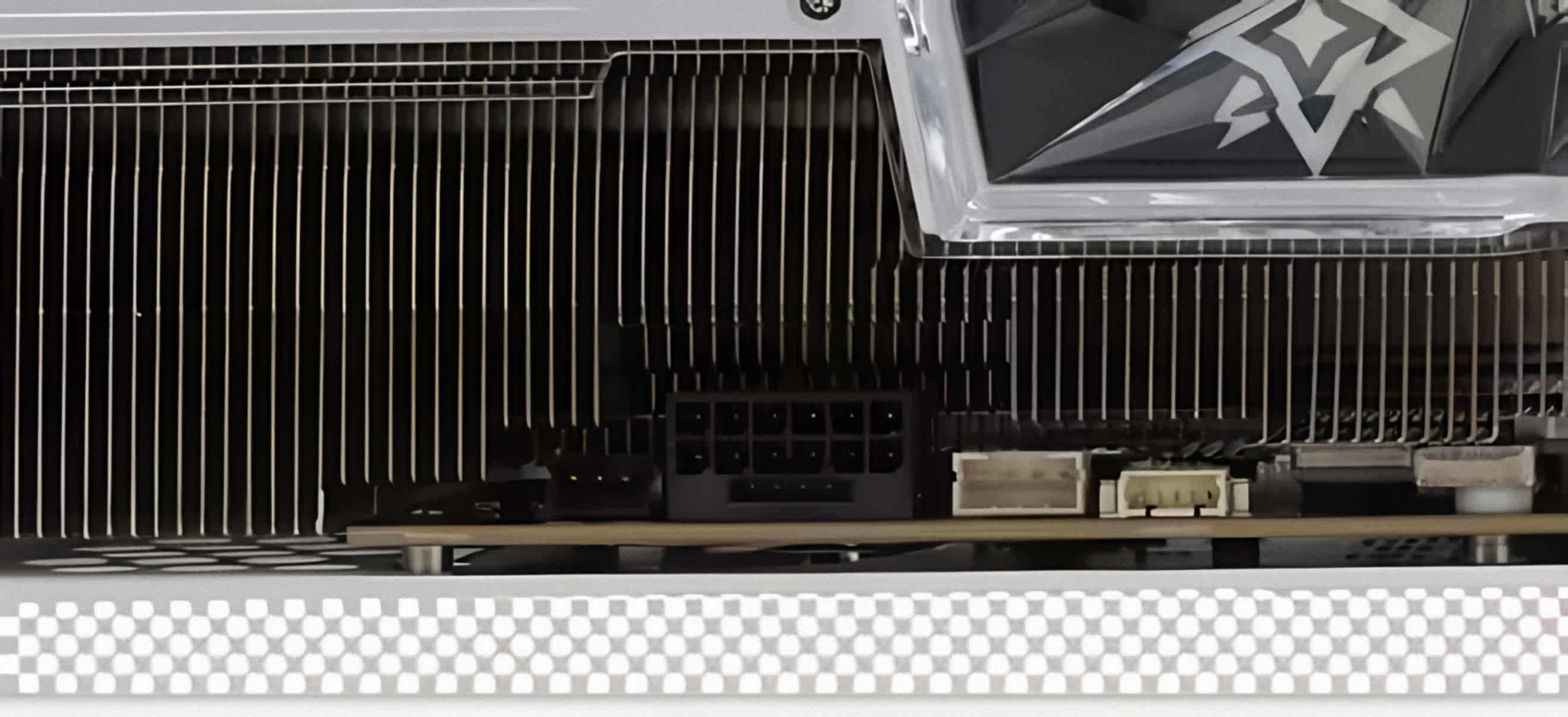

Thanks to popular leaker @wxnod (via Videocardz), we now know what the Galax RTX 3090 Ti Boomstar will look like. The upcoming card is a China-exclusive model, and it looks like it will occupy three slots, which isn’t all that surprising given the cooling requirements of a top-end GPU these days. If the leaked picture is any indication, it will use the 16-pin 12VHPWR power connector that’s part of the PCIe 5.0 standard and is capable of providing up to a whopping 600 watts of power.

AIB variants of the RTX 3090 Ti are said to require up to 450 watts to feed an overclocked GA102 GPU with no fewer than 10,752 CUDA cores and 24 gigabytes of 21 Gbps GDDR6X memory. The Founders Edition might still feature Nvidia’s proprietary 12-pin connector, and that would explain the long delay in bringing the new card to market.

In the meantime, AMD is preparing a response in the form of the Radeon RX 6950 XT for a late April launch. And according to @greymon55, the company will bring back the Midnight Black cooler design for the reference version of the new card.

https://www.techspot.com/news/93727-alleged-picture-galax-geforce-rtx-3090-ti-boomstar.html