In context: One of the subjects Deutsche Bank covered in its interview with AMD late last week was AMD's stance towards Arm-based chips. While it didn't have anything concrete to announce, it didn't rule out working with Arm, either.

As part of its 2021 Technology Conference, Deutsche Bank interviewed AMD's CFO on a range of topics, from the growth of the company's graphics business to the supply of its consumer parts. The conversation also touched on how AMD views Arm chips in light of what other companies are doing with them.

"It's not just your other x86 competitor trying to rejuvenate itself, it's also some vertically integrated folks doing ARM-based processors A6, et cetera," said Deutsche Bank's Ross Seymore, likely referring to Apple starting to move its Macs over to its new Arm-based M1 processors. Seymore asked AMD CFO Devinder Kumar if the company is feeling any sort of market pressure from moves like this.

"Whether it's x86 or ARM or even other areas, that is an area for our focus and investment for us," Kumar answered, before pointing to AMD's existing ties with Arm: "We have a very good relationship with ARM."

Rumors emerged late last year that AMD could be working on its own Arm-based rival to the M1. Around the same time, Microsoft was also rumored to be developing Arm-based chips for its servers and Surface computers. Apple's M1 chips have delivered notable gains in performance and efficiency over comparable x86-based competitors. Manufacturers around the world have also begun turning to Arm-based designs to build their own alternatives to the Intel and AMD x86 processors that currently dominate the CPU market.

One of AMD's main competitors, Nvidia, sent shockwaves through the computer industry last year when it announced a bid to acquire Arm, the company behind the Arm chip architecture. The deal has yet to close and has proven controversial, with major companies such as Google and Microsoft opposing it.

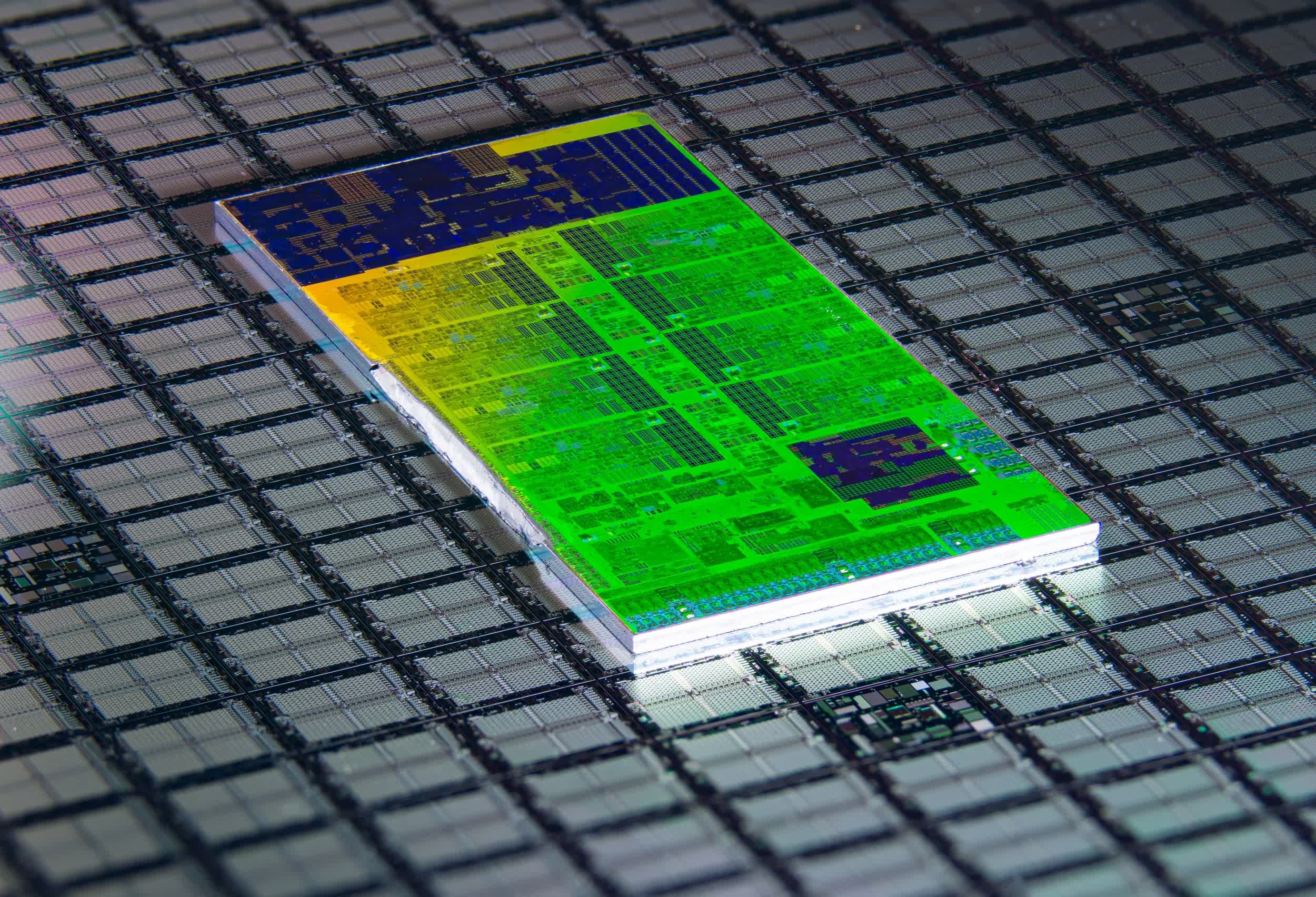

Image credit: Fritzchens Fritz

https://www.techspot.com/news/91289-amd-considers-arm-area-investment-ready-make-chips.html