During a recent press event in Australia, AMD revealed additional details regarding its upcoming Radeon RX 470 and RX 460 graphics cards.

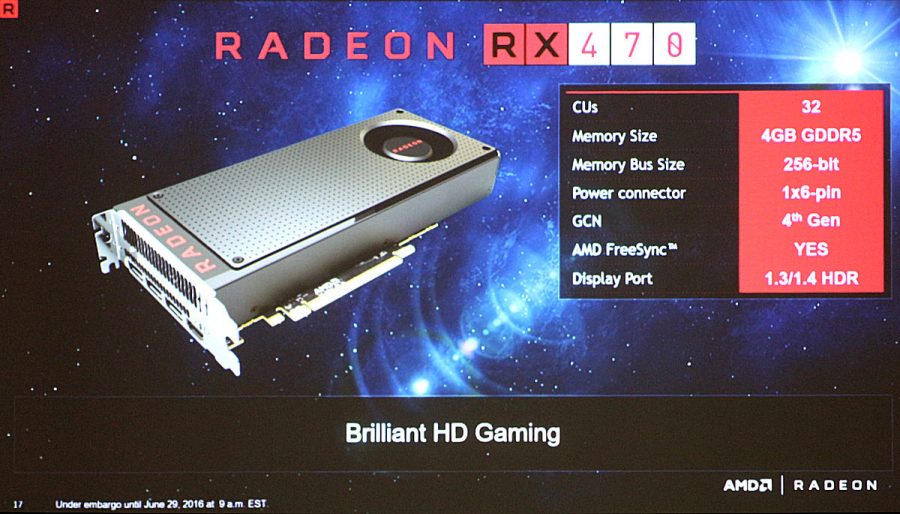

Starting with the Radeon RX 470, a slide photographed by VideoCardz reveals it will use the same blower-style cooler found on the RX 480. Manufacturing partners will have the option to ship cards with 8GB of GDDR5, although AMD's reference cards will apparently be limited to 4GB.

The RX 470 will also feature a 256-bit memory interface alongside 32 Compute Units, or 2,048 Stream Processors, down from the 2,304 Stream Processors found in the current RX 480. It'll be powered by a single 6-pin power connector in addition to what it draws from the PCIe slot.

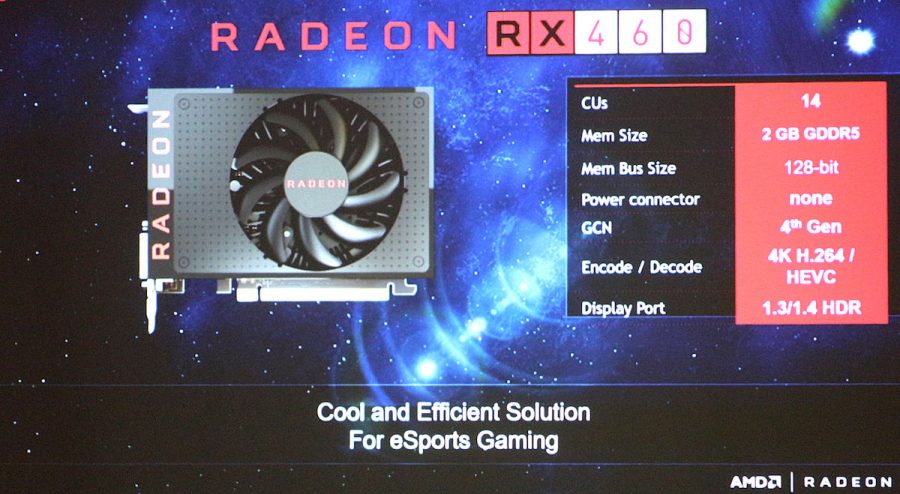

Elsewhere, the RX 460 will have 14 Compute Units (896 Stream Processors), with reference boards coming equipped with 2GB of GDDR5 and a 128-bit memory interface. As you can see, the card is rather short and resembles AMD's R9 Nano, which should make it much easier to fit inside cramped cases. Unlike the RX 480 and RX 470, this one won't require an auxiliary power connector.
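The Compute Unit and Stream Processor figures quoted above line up if each Compute Unit holds 64 Stream Processors, which is how AMD's GCN designs are organized. The short sketch below simply checks that arithmetic. The function names are made up for illustration, and the RX 480's Compute Unit count is derived from its 2,304 Stream Processors rather than taken from the slides.

```python
# Assumption: 64 Stream Processors per Compute Unit (GCN layout).
STREAM_PROCESSORS_PER_CU = 64


def stream_processors(compute_units: int) -> int:
    """Return the Stream Processor count for a given number of Compute Units."""
    return compute_units * STREAM_PROCESSORS_PER_CU


def compute_units_from(stream_processor_count: int) -> int:
    """Return the Compute Unit count implied by a Stream Processor count."""
    if stream_processor_count % STREAM_PROCESSORS_PER_CU != 0:
        raise ValueError("count is not a whole number of Compute Units")
    return stream_processor_count // STREAM_PROCESSORS_PER_CU


# Compute Unit counts as quoted in the article.
quoted_compute_units = {"RX 470": 32, "RX 460": 14}

for card, cus in quoted_compute_units.items():
    print(f"{card}: {cus} CUs -> {stream_processors(cus)} Stream Processors")

# Derived, not quoted: the RX 480's CU count implied by 2,304 Stream Processors.
print(f"RX 480: {compute_units_from(2304)} CUs")
```

Under that assumption, the RX 470 has four fewer Compute Units than the RX 480.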

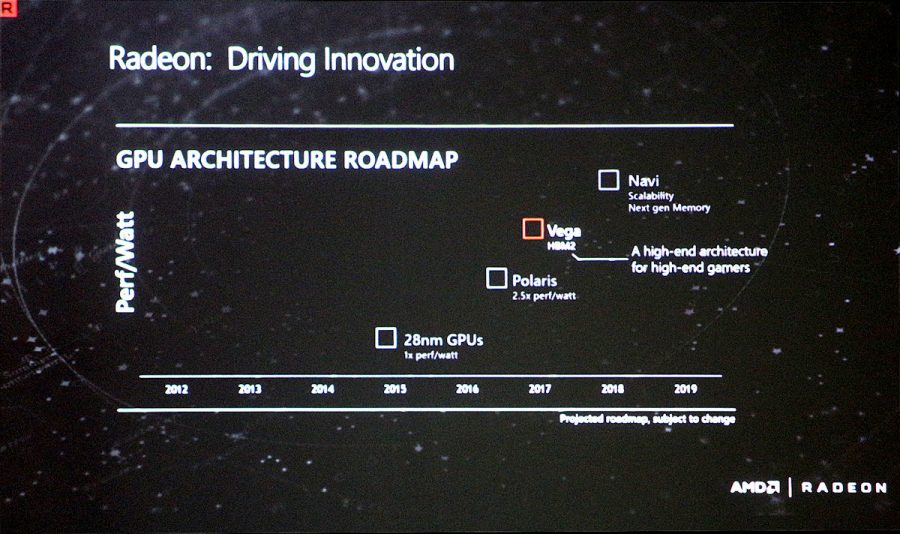

Last but not least is an updated GPU roadmap that describes Vega as a high-end architecture aimed at enthusiast gamers. The next update, scheduled for 2018, is Navi, which will address scalability and feature next-generation memory.

There's no word yet on when the RX 470 and RX 460 will arrive, although the general consensus among the community seems to be that the RX 470 will launch next month, with the RX 460 following shortly thereafter.

https://www.techspot.com/news/65552-amd-shares-new-details-regarding-upcoming-radeon-rx.html