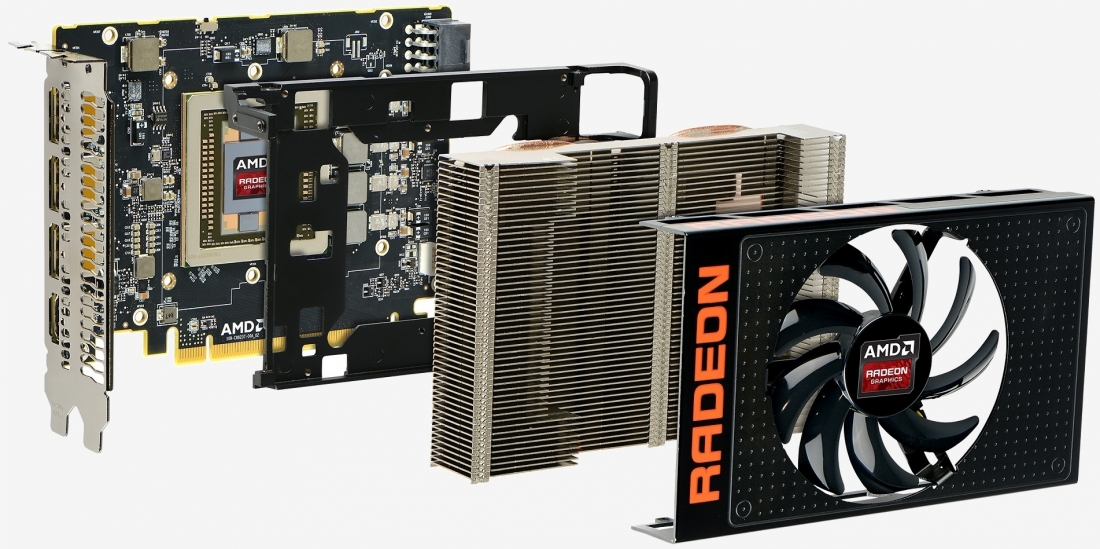

AMD has launched GPUOpen, an initiative that aims to equip developers with the tools and access they need to squeeze the most out of today's GPUs.

Nicolas Thibieroz, worldwide ISV gaming engineering manager at AMD, said GPUOpen is composed of two areas: games & CGI, which focuses on game graphics and content creation, and professional compute, which targets high-performance GPU computing.

The first principle, Thibieroz said, is to provide code and documentation to developers so they can gain more control over the GPU. He added that current and upcoming GCN architectures include many features that PC graphics APIs leave unused. GPUOpen aims to help developers find ways to leverage those features, with the payoff being increased performance and/or quality.

The initiative is also expected to make it easier for developers to port games from current-generation consoles to the PC.

Thibieroz said the second principle is a commitment to open-source software, and the third is collaborative engagement with the developer community.

AMD is feeling pressure from both ends of the GPU market. Rival Nvidia reigns supreme in the high-end graphics market, while Intel competes at the low end, not because its integrated solutions are better but because Intel processors outsell AMD's chips.

More information about GPUOpen can be found at GPUOpen.com.

https://www.techspot.com/news/63621-amd-gpuopen-initiative-offers-developers-deeper-access-gpus.html