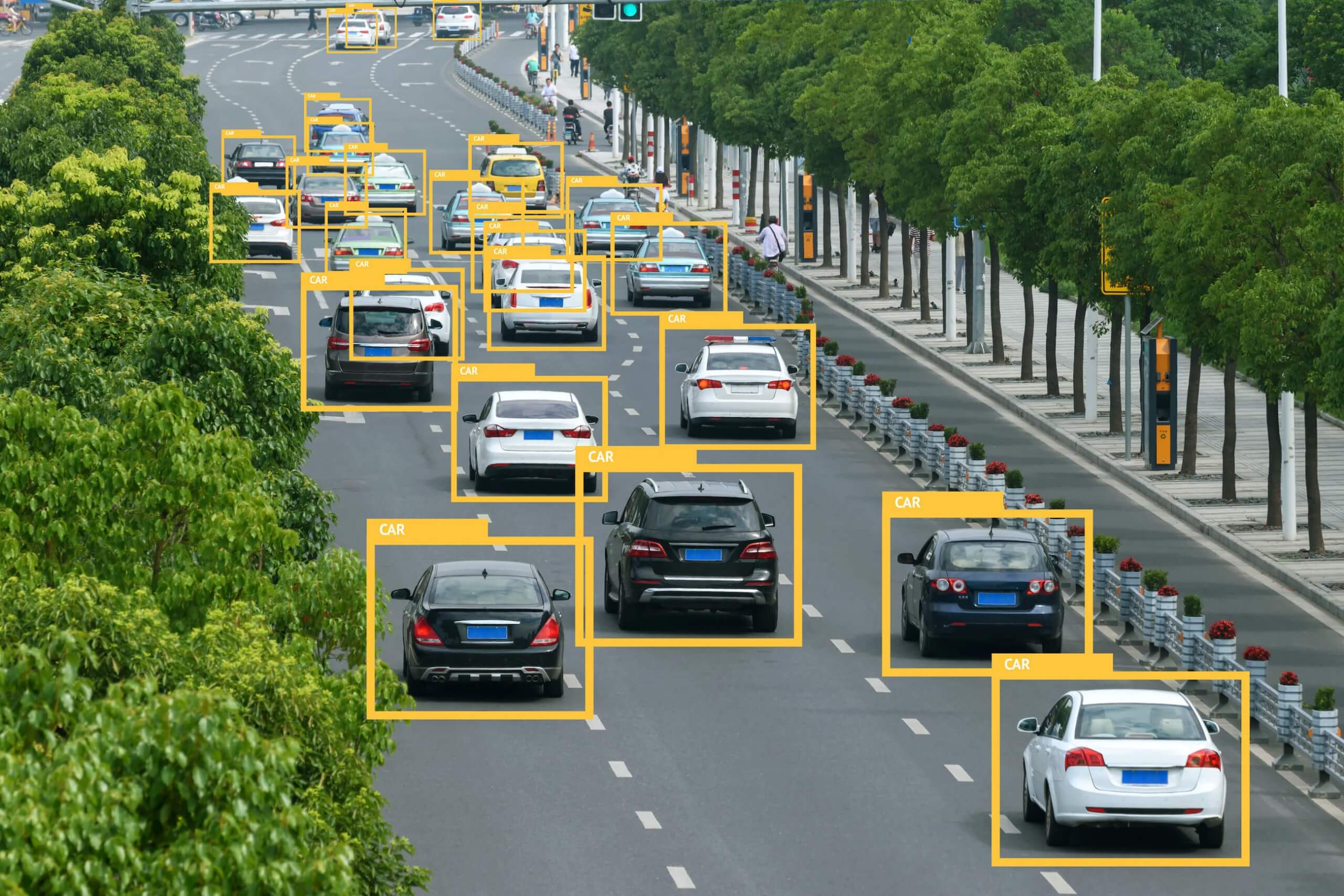

In context: The proliferation of machine learning systems in everything from facial recognition to autonomous vehicles has brought with it the risk of attackers finding ways to deceive the algorithms. Simple techniques have already worked in test conditions, and researchers are interested in finding ways to mitigate these and other attacks.

The Defense Advanced Research Projects Agency (DARPA) has tapped Intel and Georgia Tech to head up research aimed at defending machine learning algorithms against adversarial deception attacks. Deception attacks are rare outside of laboratory testing but could cause significant problems in the wild.

For example, McAfee reported back in February that researchers tricked the Speed Assist system in a Tesla Model S into driving 50 mph over the speed limit by placing a two-inch strip of black electrical tape on a speed limit sign (below). There have been other instances where AI has been deceived by crude means that almost anyone could replicate.

DARPA recognizes that deception attacks could pose a threat to any system that uses machine learning and wants to be proactive in mitigating such attempts. So about a year ago, the agency instituted a program called GARD, short for Guaranteeing AI Robustness against Deception. Intel has agreed to be the primary contractor for the four-year GARD program in partnership with Georgia Tech.

Signs altered by electrical tape, adversarial stickers, or graffiti can foul up autonomous vehicle object recognition.

"Intel and Georgia Tech are working together to advance the ecosystem's collective understanding of and ability to mitigate against AI and ML vulnerabilities," said Intel's Jason Martin, the principal engineer and investigator for the DARPA GARD program. "Through innovative research in coherence techniques, we are collaborating on an approach to enhance object detection and to improve the ability for AI and ML to respond to adversarial attacks."

The primary problem with current deception mitigation is that it is rule-based and static. If an attack does not break one of the predefined rules, the deception can succeed. Since the number of ways to pull off a deception is nearly infinite, limited only by the attacker's imagination, a better system needs to be developed. Intel said that the initial phase of the program would focus on improving object detection by using spatial, temporal, and semantic coherence in both images and video.
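To make the idea of temporal coherence concrete, here is a minimal sketch in Python. It is not Intel's or GARD's method, which the article does not describe in detail. It assumes a detector that emits one label per video frame, and the function name, window size, agreement threshold, and label strings are all hypothetical. The check simply flags any frame whose label contradicts a stable majority over the preceding frames.

```python
from collections import Counter, deque


def flag_incoherent_labels(labels, window=5, min_agreement=0.6):
    """Return indices of frames whose label contradicts the recent majority.

    Illustrative assumption: `labels` holds one detector output per frame.
    A frame is flagged when the previous `window` frames mostly agree on a
    label (at least `min_agreement` of them) and the new label differs.
    """
    recent = deque(maxlen=window)  # sliding history of the last `window` labels
    flagged = []
    for i, label in enumerate(labels):
        if len(recent) == window:
            majority, count = Counter(recent).most_common(1)[0]
            if count / window >= min_agreement and label != majority:
                flagged.append(i)
        # Always record the label so a genuine change eventually becomes
        # the new majority instead of being flagged forever.
        recent.append(label)
    return flagged


# Hypothetical stream: a sign read consistently, with one outlier frame.
frames = ["speed_35"] * 6 + ["speed_85"] + ["speed_35"] * 3
suspicious = flag_incoherent_labels(frames)
```

In this hypothetical stream, only the single frame read as "speed_85" is flagged. A sign that an attacker has physically altered would be misread in every frame, so a temporal check alone would not catch it, which is presumably why the program pairs it with spatial and semantic coherence.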

Dr. Hava Siegelmann, DARPA's program manager for its Information Innovation Office, envisions a system that is not unlike the human immune system. You could call it a machine learning system within another machine learning system.

"The kind of broad scenario-based defense we're looking to generate can be seen, for example, in the immune system, which identifies attacks, wins, and remembers the attack to create a more effective response during future engagements," said Dr. Siegelmann. "We must ensure machine learning is safe and incapable of being deceived."

https://www.techspot.com/news/84769-darpa-taps-intel-georgia-tech-pioneer-machine-learning.html