In brief: Intel's first wave of Arc graphics cards isn't here yet, but the company has been hyping up the Xe-HPG core and support for Xe Super Sampling (XeSS). Early benchmarks have started to appear online, and if they prove accurate, they suggest Intel still has a lot of work to do if it wants to have "Alchemist" ready for a 2022 release.

Intel is currently working on its Arc high-performance GPUs for desktop and mobile form factors, but the first products of that effort won't arrive until sometime in the first quarter of 2022. Chipzilla has been dropping hints about its plans for Arc and how it intends to challenge both Nvidia and AMD in the discrete GPU market, but other than a roadmap and some nifty software tools, we've had little to go on regarding how these new GPUs might perform.

A number of leaks have suggested that Intel's upcoming "Alchemist" graphics solutions will come in several SKUs, ranging from an entry-level model with 128 execution units and 4 GB of VRAM on a constrained 64-bit bus to a high-end model with 512 execution units and 16 GB of VRAM on a 256-bit bus. The latter is said to land roughly between an Nvidia RTX 3070 and an RTX 3080 in terms of performance.

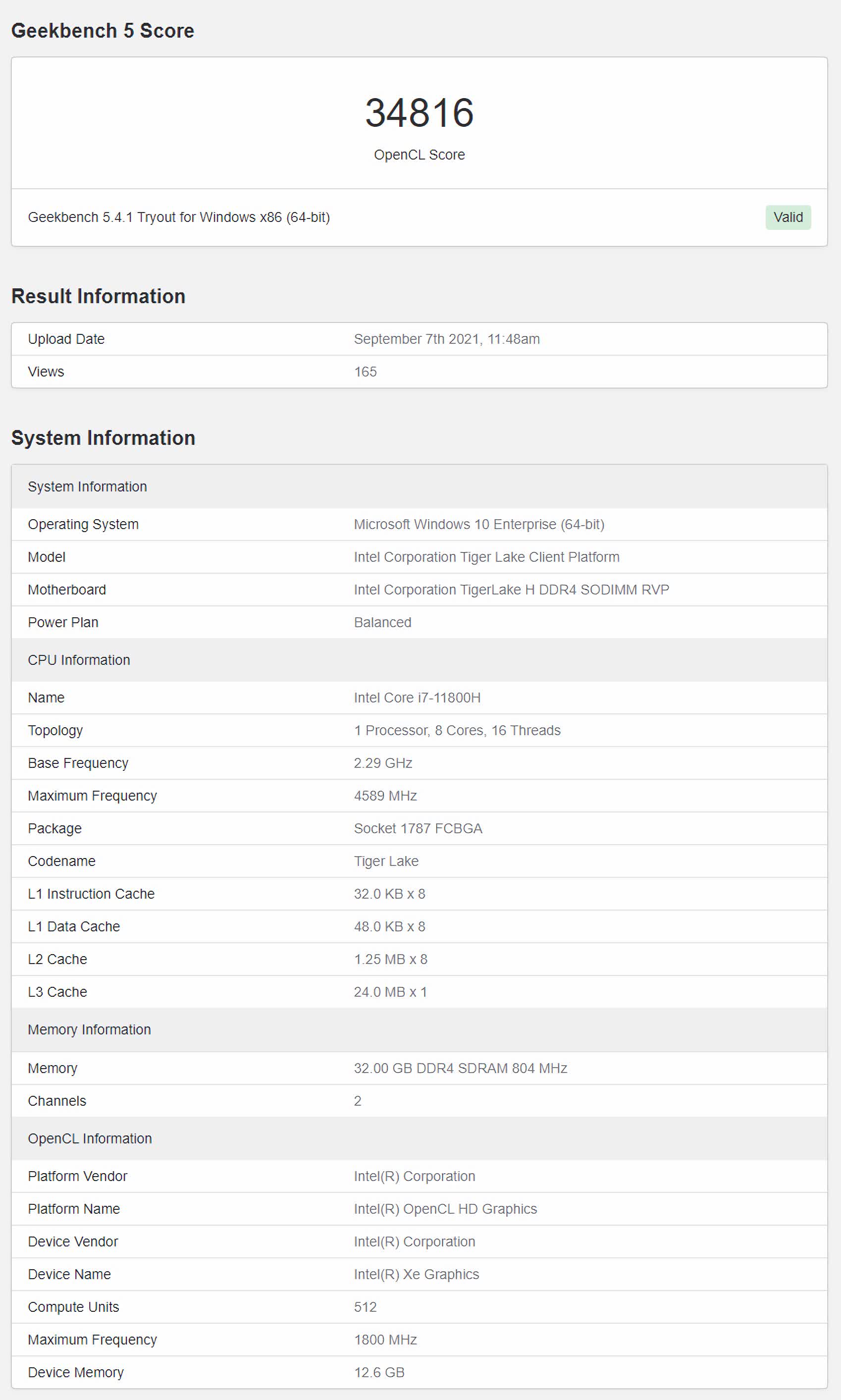

Thanks to some early benchmarks spotted by @Tum_Apisak, we can get a very rough idea of how well the mobile variant of the top-end Alchemist GPU might perform. These are Geekbench 5 tests performed with an Intel Tiger Lake CPU, and we're likely looking at an early engineering sample, but the picture isn't pretty for Alchemist.

It looks like the engineering sample obtained an OpenCL score of 34,816 points when clocked at 1,800 MHz, which would be a rather disappointing result if taken at face value. For reference, that puts it slightly behind Nvidia's last-gen GeForce GTX 1650 Max-Q, which scores in excess of 36,000 points on the same test.
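To put the two scores side by side, here is a minimal sketch of the gap calculation. It uses only the figures quoted above; the function name `percent_gap` is made up for illustration, and because the GTX 1650 Max-Q figure is a floor ("in excess of 36,000"), the result is a lower bound on the gap rather than an exact number.

```python
# Figures quoted in the article. The GTX 1650 Max-Q value is a floor
# ("in excess of 36,000"), so the computed gap is a lower bound.
alchemist_score = 34_816
gtx_1650_max_q_floor = 36_000


def percent_gap(score: float, reference: float) -> float:
    """Return how far `score` falls below `reference`, as a percentage of `reference`."""
    return (reference - score) / reference * 100.0


gap = percent_gap(alchemist_score, gtx_1650_max_q_floor)
print(f"Alchemist sample trails the reference by at least {gap:.1f}%")
```

A gap of only a few percent is why the two parts can be described as being at roughly the same level in this one compute test.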

Granted, this is only a single test that measures the compute performance of the Alchemist GPU, which again is an early sample clocked at a rather low 1,800 MHz. Intel suggested during its Architecture Day presentation that we can expect the Xe-HPG graphics engine to deliver 1.5 times the performance per watt and 1.5 times the frequency of the Xe-LP engine found in the Iris DG1 card released earlier this year.

This works out to a theoretical boost clock speed in excess of 2 GHz, which is further indication that we're looking at very early silicon here, and in a mobile form factor no less, which means it could be power-constrained. Back in June, independent testing of the Iris DG1's gaming performance found it was surprisingly decent across a number of popular games, despite a barrage of early benchmarks suggesting otherwise.
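The clock-speed reasoning can be sketched with simple arithmetic. The snippet below takes only the article's figures (a 1.5x frequency uplift, a boost clock above 2 GHz, and a sample running at 1,800 MHz) and works backwards. The baseline it prints is implied by those figures, not an official Xe-LP specification, and the helper names are hypothetical.

```python
# Figures from the article; 2,000 MHz is a floor ("in excess of 2 GHz").
FREQUENCY_UPLIFT = 1.5
TARGET_FLOOR_MHZ = 2_000
SAMPLE_CLOCK_MHZ = 1_800


def implied_baseline_mhz(target_mhz: float, uplift: float) -> float:
    """Baseline clock that, multiplied by `uplift`, reaches `target_mhz`."""
    return target_mhz / uplift


def headroom_percent(sample_mhz: float, target_mhz: float) -> float:
    """How much higher `target_mhz` is than `sample_mhz`, in percent."""
    return (target_mhz - sample_mhz) / sample_mhz * 100.0


baseline = implied_baseline_mhz(TARGET_FLOOR_MHZ, FREQUENCY_UPLIFT)
headroom = headroom_percent(SAMPLE_CLOCK_MHZ, TARGET_FLOOR_MHZ)

print(f"Implied Xe-LP baseline: above {baseline:.0f} MHz")
print(f"Clock headroom over the sample: at least {headroom:.1f}%")
```

Under these assumptions, final silicon would run at least about a tenth faster than the leaked sample, which is consistent with the idea that the Geekbench entry reflects early hardware.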

The same could well happen with Alchemist, and it goes without saying that Intel only needs to come up with entry-level and mid-range GPUs under the current market conditions, which are expected to persist for at least another year. If it can manufacture them without disruptions using its own chip plants, many gamers and cryptocurrency miners will buy them in a heartbeat.

https://www.techspot.com/news/91135-early-benchmarks-make-intel-arc-mobile-gpu-look.html