In context: Augmented reality is an intriguing technology with exciting potential. Companies like Microsoft, Apple, and Facebook have projects in various stages of development. As they continue figuring out how to make the tech accessible to the average consumer, they also have to figure out how we will control it.

Facebook has been seriously working on augmented reality technology since at least 2019. Last year, it revealed it has a working AR "research platform" it calls "Project Aria." The latest fruit of that work is a conceptual wristband controller that reads users' nerve signals.

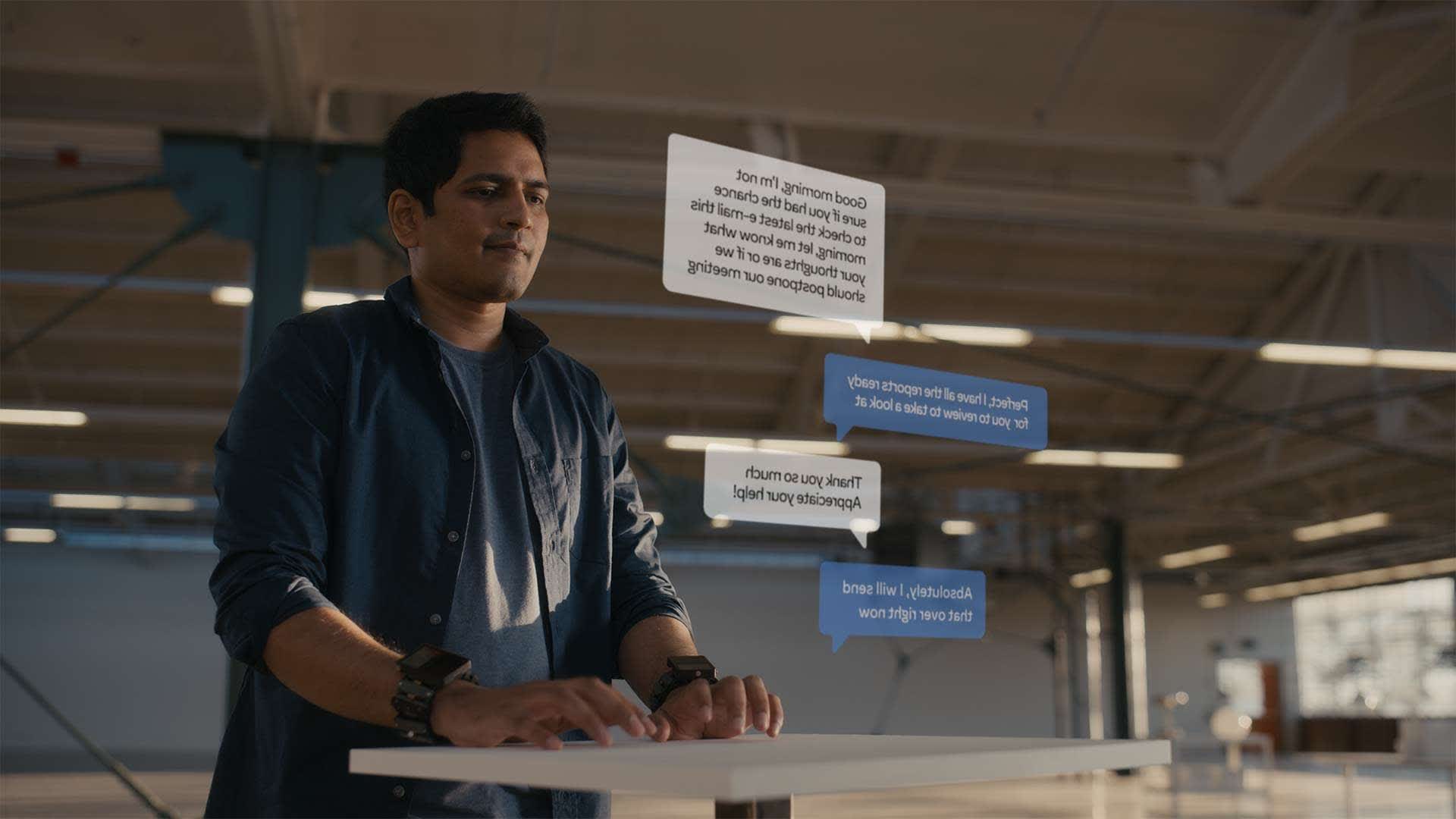

Facebook Reality Labs' goal is to develop an "easy-to-use, reliable, and private" human-computer interface (HCI). The last thing users want when using AR glasses and interacting with the real world is to be carrying around a large device to manipulate virtual elements. Users will want something subtle, like slight gestures or ideally invisible impulses, to interact with their augmented realities. FRL is working on the former with the goal of the latter.

"Our north star [is] to develop ubiquitous input technology — something that anybody could use in all kinds of situations encountered throughout the course of the day."

Its "research prototype" wristbands use electromyography (EMG) to intercept nerve impulses sent by the brain to the hands. Onboard AI then interprets the signals into "digital commands." One may think of it as another form of gesture control; only in this instance, the sensors track one's invisible nerve signals rather than one's visible finger or hand movements.
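To make the idea of turning a raw signal into a "digital command" concrete, here is a toy sketch. It is not Facebook's method: FRL says its prototype relies on onboard AI, whereas this example swaps in a simple amplitude threshold. It rectifies a stream of made-up samples, smooths them into an envelope, and reports a command when the envelope crosses a cutoff. The function names, the "click" command, the threshold, and the window size are all assumptions chosen for illustration.

```python
# Toy illustration only: a threshold detector on hypothetical, made-up samples.
# This is NOT how FRL's wristband works; its prototype uses onboard AI.

def rectify(samples):
    """Take the absolute value of each sample (full-wave rectification)."""
    return [abs(s) for s in samples]


def moving_average(samples, window):
    """Smooth the samples with a trailing moving average of up to `window` values."""
    smoothed = []
    running = 0.0
    for i, value in enumerate(samples):
        running += value
        if i >= window:
            running -= samples[i - window]  # drop the value that left the window
        smoothed.append(running / min(i + 1, window))
    return smoothed


def detect_command(samples, threshold=0.5, window=4):
    """Return the hypothetical command "click" if the smoothed envelope
    ever reaches `threshold`; otherwise return None."""
    envelope = moving_average(rectify(samples), window)
    if max(envelope, default=0.0) >= threshold:
        return "click"
    return None


if __name__ == "__main__":
    quiet = [0.01, -0.02, 0.015, -0.01] * 5        # resting noise
    burst = quiet + [0.9, -0.8, 0.85, -0.9] + quiet  # brief burst of activity
    print(detect_command(quiet))
    print(detect_command(burst))
```

A real system would have to tell many gestures apart and adapt to each wearer, which is presumably why FRL leans on machine learning rather than a fixed cutoff like this one.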

Facebook's research prototype easily reads the electrical signals while the user performs subtler movements than traditional gesture tracking requires. The company further hopes that advancements will lead to sensors that interpret impulses so quickly that the action is performed before, or even without, the user completing the gesture.

"The signals through the wrist are so clear that EMG can understand finger motion of just a millimeter," said FRL. "That means input can be effortless. Ultimately, it may even be possible to sense just the intention to move a finger."

Ultimately, EMG controllers could lead to faster reaction times and let users perform actions without physically going through the motions. Imagine how much faster you could type if you only had to think about moving your fingers without actually having to do so. Add to that an AI that adapts to your typing style and corrects your typos on the fly.

"The result is a keyboard that slowly morphs to you, rather than you and everyone else in the world learning the same physical keyboard. This will be faster than any mechanical typing interface, and it will be always available because you are the keyboard."

Facebook's concept is not the first time we've seen electromyography used to control a device. Prosthetics manufacturers have been working on EMG and neural interfaces for quite some time. It will be interesting to see the tech developed for the general consumer market.

https://www.techspot.com/news/88983-facebook-wants-us-control-ar-glasses-electrical-impulses.html