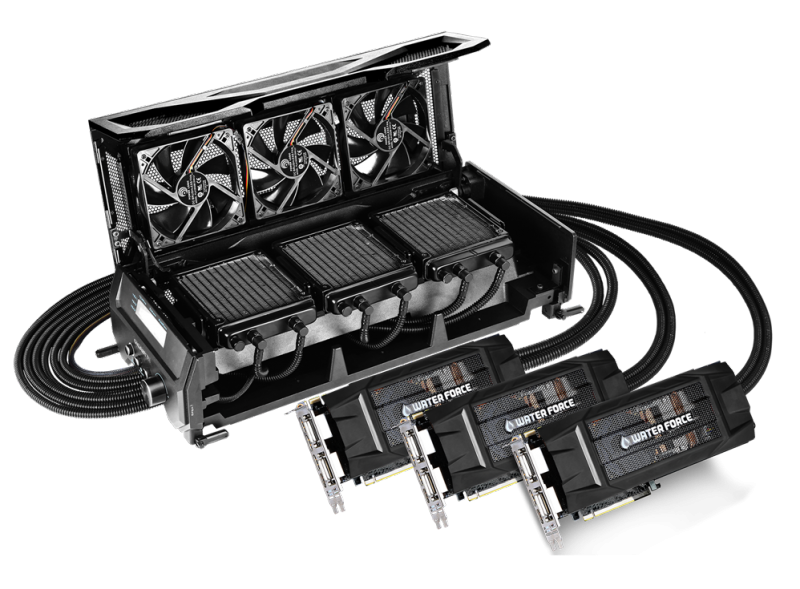

Gigabyte's crazy closed-loop water cooling solution for three high-end graphics cards, Waterforce, is now on sale with a whopping $3,000 price tag. The mammoth liquid cooling unit that sits atop your PC case does come with three Nvidia GeForce GTX 980s, but the price tag is still hard to swallow.

Each GTX 980, which regularly retails for around $560 in a traditional air-cooled setup, is factory overclocked in the Waterforce cooler to a base clock of 1,228 MHz and a boost clock of 1,329 MHz. The cards all come with 4 GB of GDDR5 memory clocked at 7,010 MHz on a 256-bit bus.
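To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted in this article, and it assumes the 7,010 MHz memory clock is the effective data rate per pin, which is how GDDR5 speeds are usually quoted. The function names are made up for illustration.

```python
# Figures quoted in the article.
BUNDLE_PRICE_USD = 3000      # Waterforce bundle with three cards
CARD_PRICE_USD = 560         # approximate price of one air-cooled GTX 980
NUM_CARDS = 3
MEMORY_CLOCK_MHZ = 7010      # assumed to be the effective data rate per pin
BUS_WIDTH_BITS = 256


def cooler_premium(bundle_price, card_price, num_cards):
    """Price left over once the cards themselves are accounted for."""
    return bundle_price - card_price * num_cards


def memory_bandwidth_gb_s(effective_mhz, bus_width_bits):
    """Peak memory bandwidth per card in GB/s (1 GB = 10^9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return effective_mhz * bytes_per_transfer / 1000


premium = cooler_premium(BUNDLE_PRICE_USD, CARD_PRICE_USD, NUM_CARDS)
bandwidth = memory_bandwidth_gb_s(MEMORY_CLOCK_MHZ, BUS_WIDTH_BITS)

print(f"Cards alone: ${CARD_PRICE_USD * NUM_CARDS}")
print(f"Implied premium for the cooler: ${premium}")
print(f"Peak memory bandwidth per card: {bandwidth:.2f} GB/s")
```

By this estimate the three cards account for about $1,680 of the price, leaving roughly $1,320 for the cooling unit itself, and each card tops out at around 224 GB/s of memory bandwidth.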

The setup for Waterforce is fairly inelegant: you have to feed the power cable from the cooling box to your PSU through a front 5.25" drive bay, and then feed one radiator for each card through the drive bay to the cooling box. The end result is a bunch of tubes running from the cooler atop your case, through the front panel and then to each graphics card.

Waterforce promises to deliver excellent cooling performance and quiet operation, which is what you'd hope for if you're spending $3,000. The front display on the cooler allows you to monitor GPU temperatures and adjust fan and pump speeds on the fly.

While the huge price tag will keep this sort of setup in the minds of enthusiasts only, building a tri-SLI water-cooled setup yourself can also be complex and expensive. Water blocks could cost upwards of $100 per GPU, and you'd also have to purchase radiators, fans, pumps, reservoirs, and tubing.

https://www.techspot.com/news/59167-gigabyte-gtx-980-tri-sli-waterforce-cooler.html