A tough task: While Google’s intentions seem good, implementing such a system successfully could be incredibly tricky. Aside from encouraging site owners to adopt best practices for speed optimization, I’m not sure how effective it can be. For example, will it be able to account for server hiccups and traffic spikes, and do so without bias, including when it judges Google’s own sites and services?

Speed is a core attribute of any web browser worth using, but with so many factors contributing to a website’s perceived zippiness, how can you reliably differentiate between poor network conditions and a truly slow website? Google wants to help.

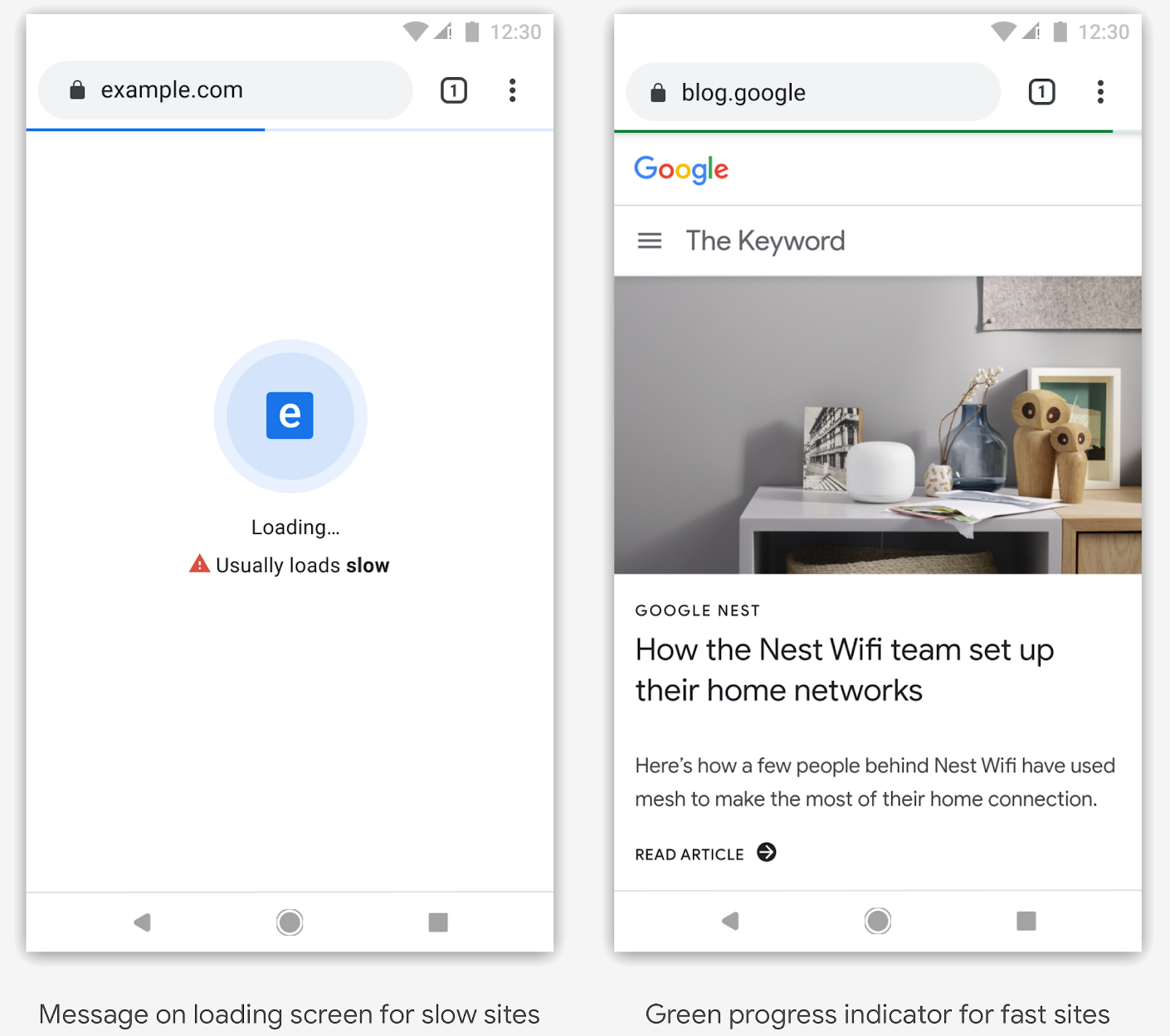

The tech giant is in the early stages of creating a badging system that’ll identify websites that offer high-quality experiences – namely, those that are optimized for speed and have a solid track record of delivering on a consistent basis.

In announcing the initiative, members of Google’s Chrome team said the feature may take a number of forms, adding that they are experimenting with different options to see which provide the most value for users. For example, they may lean on historical load latencies as one metric.

More advanced iterations may even identify when a site is likely to be slow based on the device you are using or network conditions.
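To make the idea of badging on historical load latencies concrete, here is a minimal Python sketch. It is purely illustrative: Google has not published how the badge would be computed, so the `badge_for` function, the use of the 75th percentile, and the 4-second threshold are all assumptions invented for this example.

```python
from statistics import quantiles

# Hypothetical cutoff for this sketch; not a figure Google has announced.
SLOW_P75_SECONDS = 4.0


def badge_for(load_times_s, slow_p75=SLOW_P75_SECONDS):
    """Label a site from the 75th percentile of its past load times (seconds)."""
    if len(load_times_s) < 2:
        return "unknown"  # too little history to judge
    # quantiles(..., n=4) returns three quartile cut points; index 2 is the third quartile.
    p75 = quantiles(load_times_s, n=4)[2]
    return "usually slow" if p75 > slow_p75 else "usually fast"


if __name__ == "__main__":
    # One 8-second outlier among otherwise quick loads.
    print(badge_for([1.0, 1.2, 0.9, 1.1, 1.3, 1.0, 8.0, 1.1]))
    # Consistently long loads.
    print(badge_for([5.0, 6.0, 4.5, 7.0]))
```

Judging by a percentile rather than a single measurement means that, with enough history, one isolated hiccup is less likely to flip the label, which is the kind of robustness a real badging system would presumably need.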

Google highlights a couple of tools – PageSpeed Insights and Lighthouse – as starting points to evaluate the performance of your website and encourages owners not to wait to optimize their sites.

Masthead credit: Loading by Yuttanas

https://www.techspot.com/news/82718-google-working-way-chrome-label-slow-websites.html