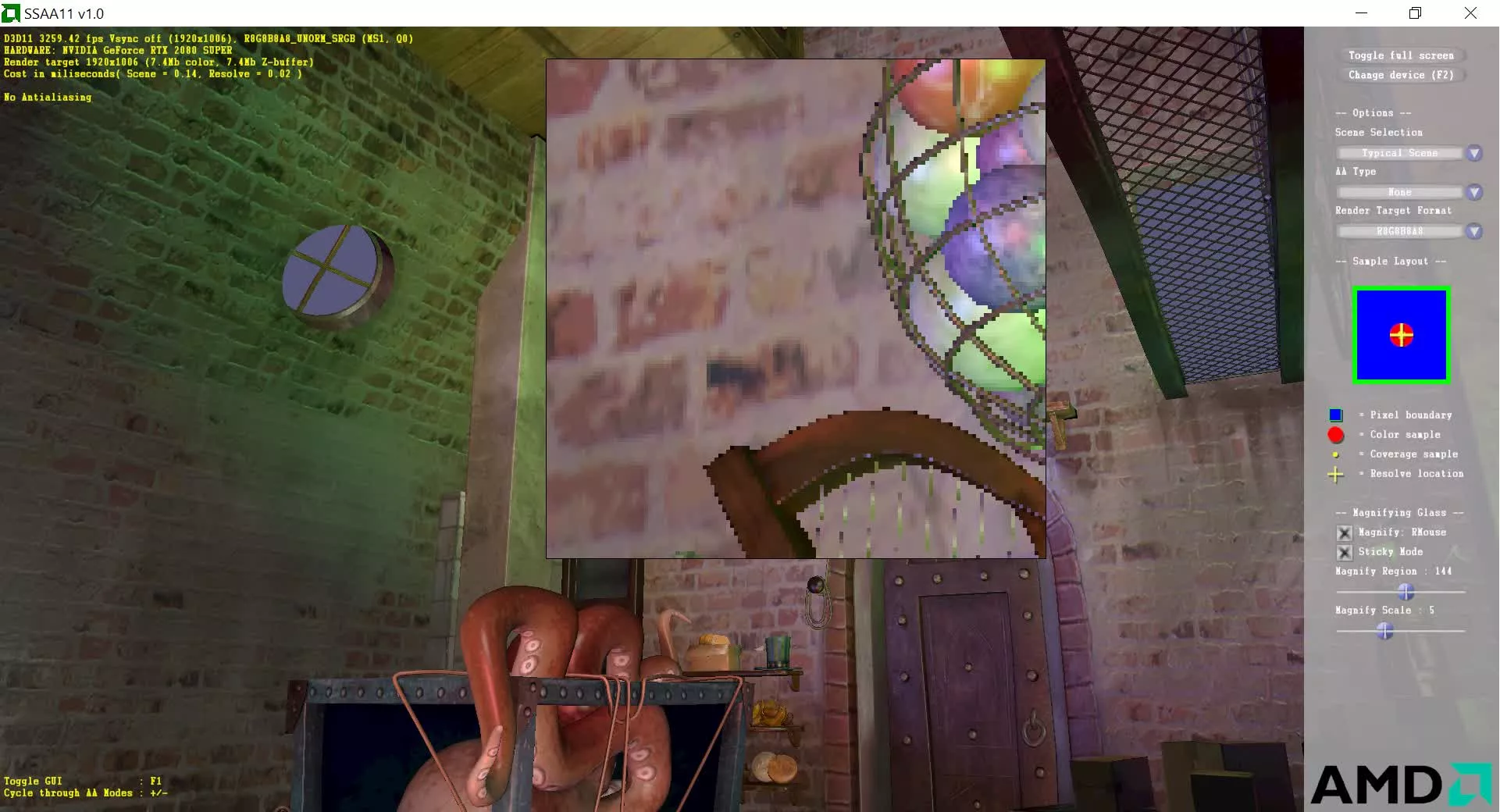

The 3D games we play and love are made up of thousands, if not millions, of colored straight lines, which would inevitably look jagged on our screens if not for anti-aliasing techniques that smooth them out. Let us explain how in this new deep dive.

https://www.techspot.com/article/2219-how-to-3d-rendering-anti-aliasing/