In context: Apple keeps the inner workings of the M1 family of processors secret, but dedicated developers have been reverse-engineering them to create open source drivers and a Linux distro, Asahi Linux, for M1 Macs. In the process, they've discovered some cool features.

In her efforts to develop an open source graphics driver for the M1, Alyssa Rosenzweig recently found a quirk in the render pipeline of the M1's GPU. She was rendering increasingly complicated 3D geometries, and eventually arrived at a bunny that made the GPU bug out.

Basically -- and please note that this and everything else I'm about to say is an oversimplification -- the problem begins with the GPU's limited access to memory. It's a powerful GPU, but like the A-series iPhone SoC it shares an ancestor with, it takes shortcuts to preserve efficiency.

Instead of rendering straight into the framebuffer like a discrete GPU might, the M1 takes two passes over each frame: the first finds the vertices, and the second does everything else. Obviously, the second pass is much more intensive, so between the passes, dedicated hardware segments the frame into tiles (mini-frames, basically) and the second pass is taken one tile at a time.
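
To make the tiling step a little more concrete, here is a minimal Python sketch of how triangles could be sorted into tiles between the two passes. It's an illustration only, not how Apple's hardware does it: the function name `bin_triangles`, the 32-pixel tile size, and the bounding-box overlap test are all assumptions made for this example.

```python
from collections import defaultdict

TILE_SIZE = 32  # assumed tile edge in pixels; the real hardware value may differ


def bin_triangles(triangles, width, height, tile_size=TILE_SIZE):
    """Map each tile (tx, ty) to the indices of the triangles whose
    screen-space bounding box overlaps that tile (hypothetical helper)."""
    bins = defaultdict(list)
    for index, tri in enumerate(triangles):
        xs = [x for x, _ in tri]
        ys = [y for _, y in tri]
        # Skip triangles that lie entirely outside the frame.
        if max(xs) < 0 or max(ys) < 0 or min(xs) >= width or min(ys) >= height:
            continue
        # Clamp the bounding box to the frame, then convert to tile coordinates.
        x0 = max(int(min(xs)), 0) // tile_size
        x1 = min(int(max(xs)), width - 1) // tile_size
        y0 = max(int(min(ys)), 0) // tile_size
        y1 = min(int(max(ys)), height - 1) // tile_size
        for ty in range(y0, y1 + 1):
            for tx in range(x0, x1 + 1):
                bins[(tx, ty)].append(index)
    return dict(bins)
```

With these lists in hand, the second pass only has to look at one tile's triangles at a time, which is what keeps the working set small.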

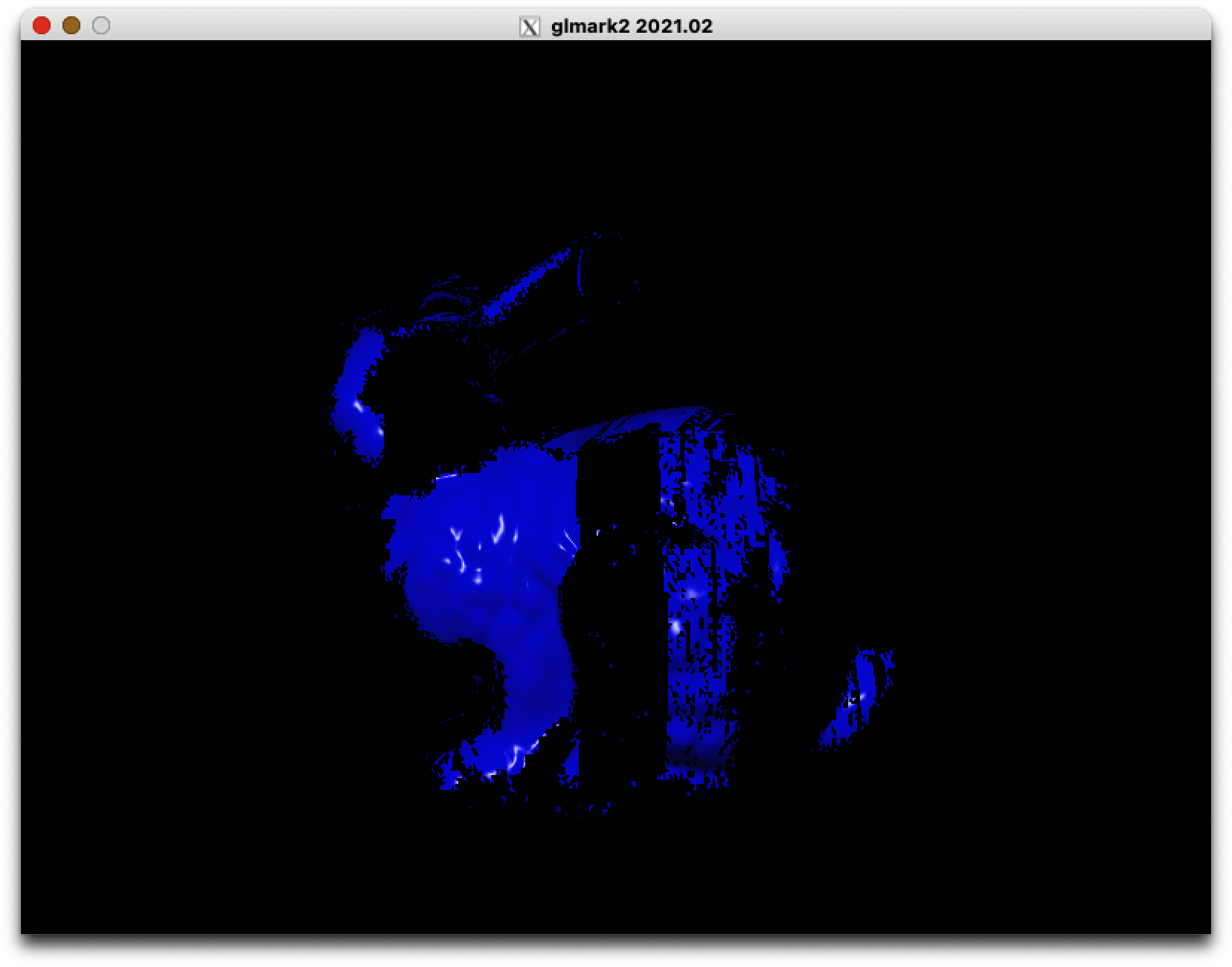

Tiling solves the problem of not having enough memory resources, but to be able to piece the tiles back together into a frame later, the GPU needs to keep a buffer of all the per-vertex data. Rosenzweig found that whenever this buffer overflowed, the render wouldn't work. See the first bunny, above.

In one of Apple's presentations, it's explained that when the buffer is full, the GPU outputs a partial render, i.e., half the bunny. In Apple's software, the buffer in question is called the parameter buffer, a name seemingly taken from Imagination's PowerVR documentation.
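
As a rough model of that behavior, the sketch below splits a stream of per-vertex records into batches that fit a fixed-size buffer. Every batch but the last stands in for a partial render forced by the buffer filling up. The name `submit_geometry` and the idea of measuring capacity in records are assumptions for illustration; the real parameter buffer is managed by hardware and the driver in ways this toy doesn't capture.

```python
def submit_geometry(vertex_records, capacity):
    """Group per-vertex records into batches that fit a fixed-size buffer.

    Each returned batch stands for one render. Every batch except the
    last models a partial render triggered by a full buffer.
    """
    if capacity < 1:
        raise ValueError("capacity must be at least 1")
    batches = []
    buffer = []
    for record in vertex_records:
        if len(buffer) == capacity:
            # Buffer full: flush it as a partial render and start over.
            batches.append(buffer)
            buffer = []
        buffer.append(record)
    if buffer:
        # Whatever is left over becomes the final render.
        batches.append(buffer)
    return batches
```

A simple model fits a single batch, while a complicated one (a bunny, say) spills into several.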

Imagination is a UK-based company that, like Arm, designs processors that it licenses to other companies. Apple inked a deal with the company at the beginning of 2020 that allows Apple to license a broad range of its IP. It's clear that the M1, which was brought to market at the end of 2020, uses Imagination's PowerVR GPU architecture as some sort of basis for its GPU.

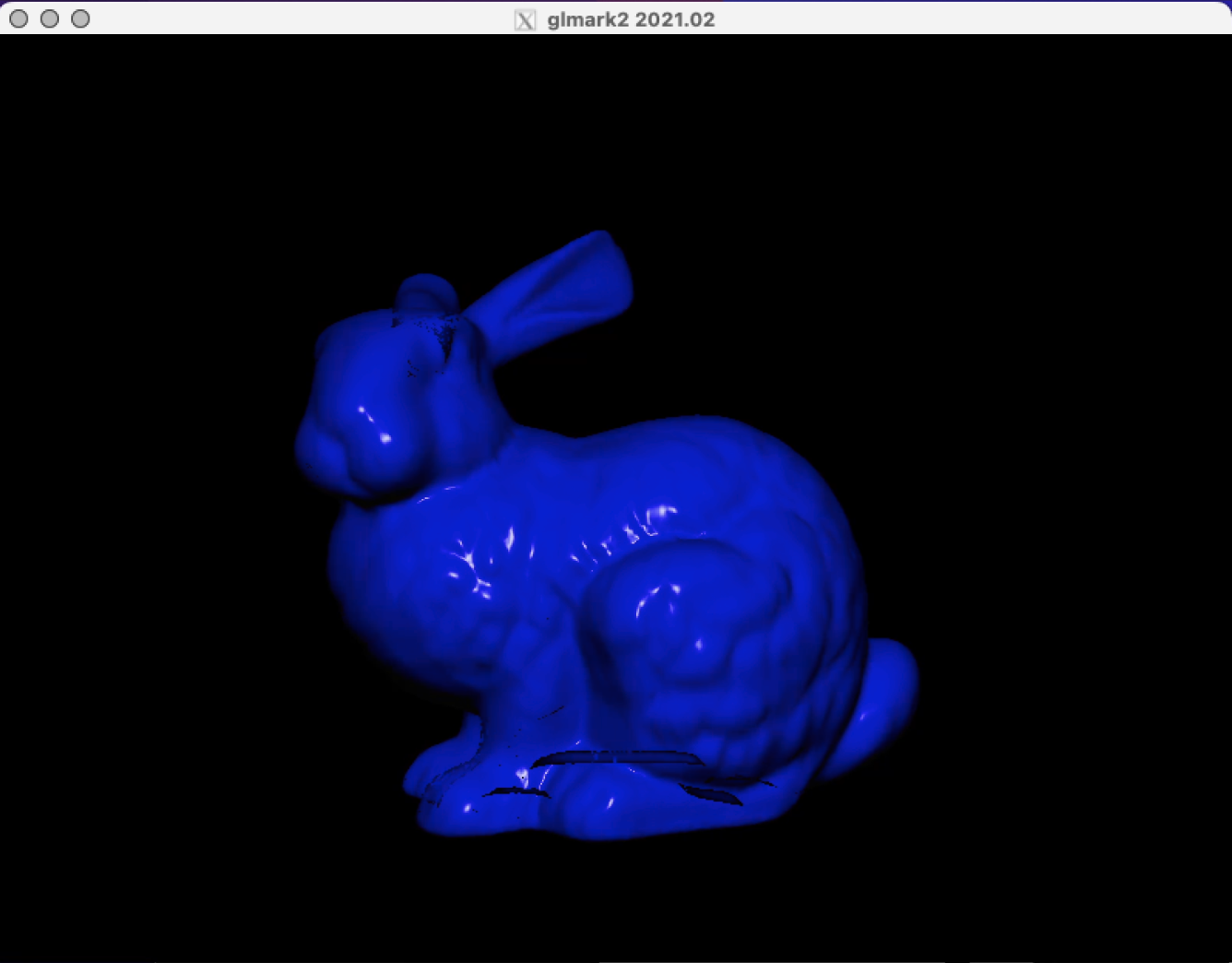

Anyway, back to the bunny. As you might have guessed, the partial renders can be added together to create a render of the whole bunny (but with a dozen extra steps in between, of course).

But this render still isn't quite right. You can see artifacts on the bunny's foot. It turns out that this is because different parts of the frame are split between a color buffer and a depth buffer, and the latter misbehaves when loaded with partial renders.
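
Why would depth matter so much here? The toy Python model below is one way to picture it, and it is only that: an assumed simplification, not a reconstruction of the actual hardware behavior or of Rosenzweig's fix. It draws fragments over several partial renders and compares what happens when depth values carry over between them versus when they're lost. The function `render_in_parts` and its `keep_depth` flag are hypothetical.

```python
FAR = float("inf")


def render_in_parts(parts, num_pixels, keep_depth):
    """Draw fragments (pixel, depth, color) across several partial renders.

    Color always persists between parts. Depth persists only when
    keep_depth is True, modeling a depth buffer that is correctly
    stored and reloaded between partial renders.
    """
    color = [None] * num_pixels
    depth = [FAR] * num_pixels
    for fragments in parts:
        if not keep_depth:
            # Depth written by earlier partial renders is lost.
            depth = [FAR] * num_pixels
        for pixel, z, rgb in fragments:
            if z < depth[pixel]:  # standard "less than" depth test
                depth[pixel] = z
                color[pixel] = rgb
    return color
```

For example, with `parts = [[(0, 1.0, "near")], [(0, 5.0, "far")]]`, keeping depth leaves the near surface visible, while dropping it lets the far surface from the second partial render paint over it, which is the kind of artifact you'd expect to see at the edges of a model.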

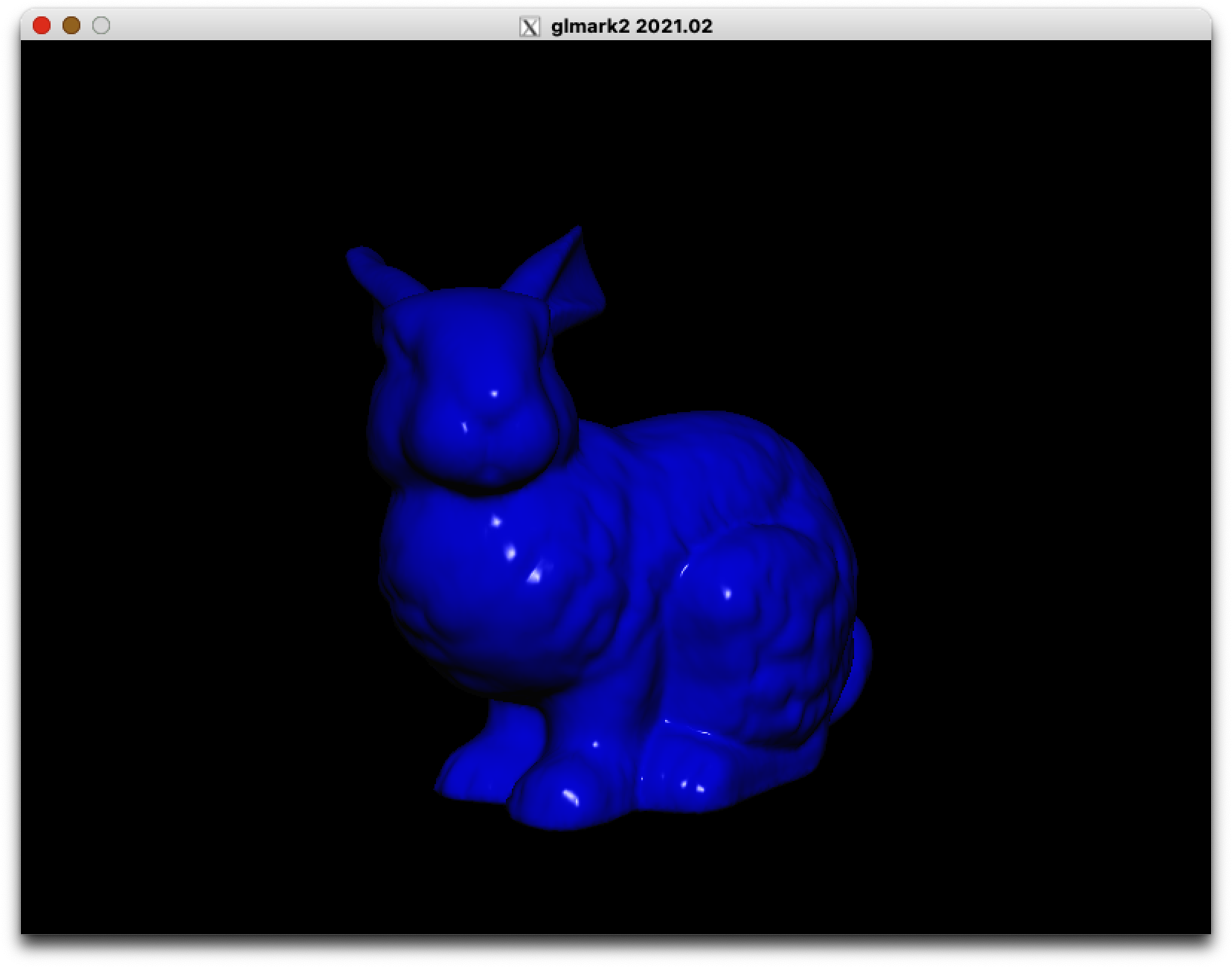

A reverse-engineered configuration from Apple's driver fixes the problem, and then you can finally render the bunny (below).

It's not just Rosenzweig's open-source graphics driver for the M1 that jumps through all these hoops to render an image: this is just how the GPU works. Its architecture probably wasn't designed with 3D rendering in mind, but despite that, Apple has turned it into something that can rival the latest discrete GPUs, even if it doesn't quite surpass them as Apple claims. It's cool.

For a more in-depth (and technically accurate) explanation of bunny rendering, and for other explorations into the M1, be sure to check out Rosenzweig's blog and the Asahi Linux website.

Masthead credit: Walling

https://www.techspot.com/news/94593-inside-apple-m1-incredibly-quirky-gpu.html