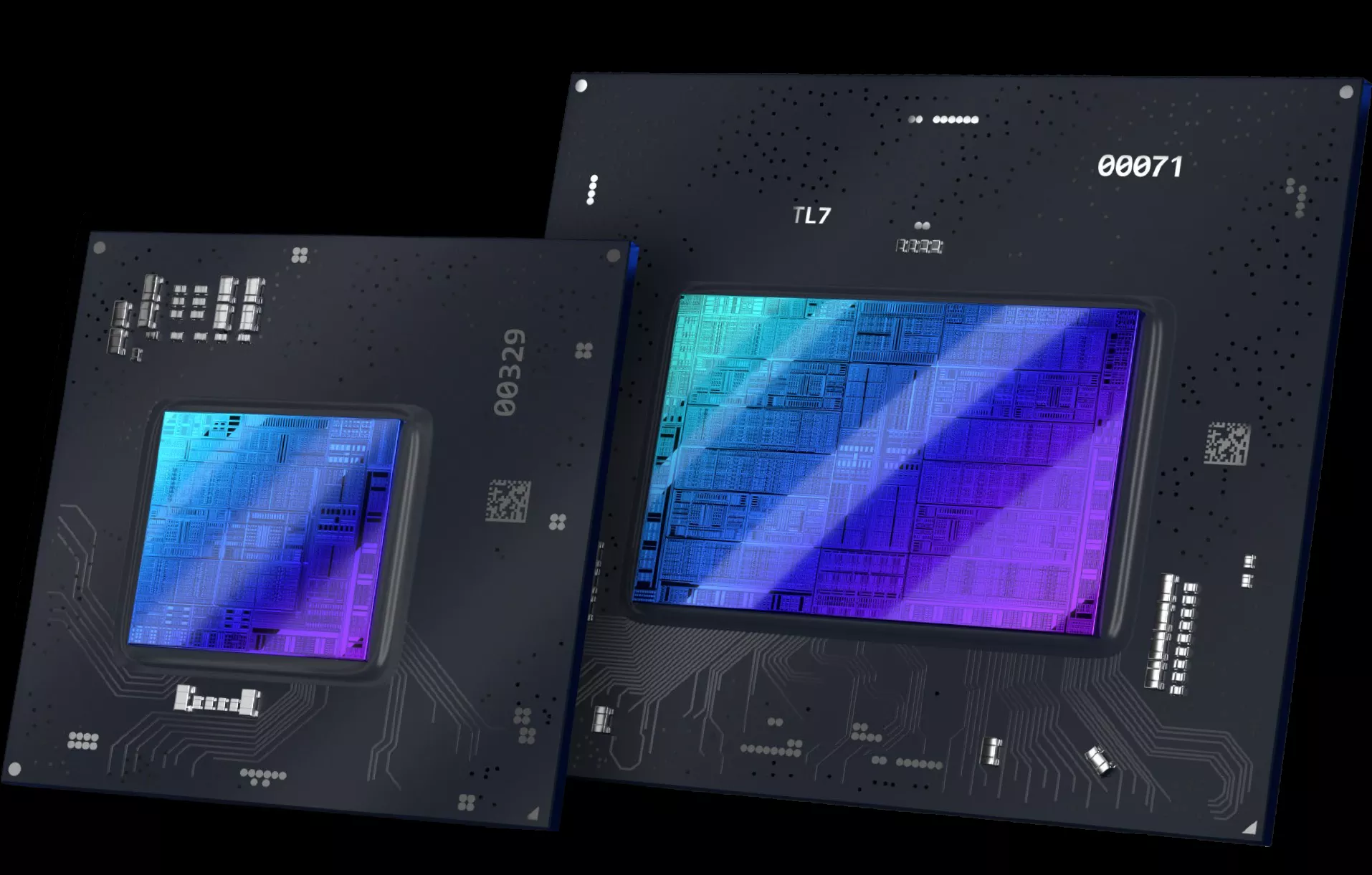

Highly anticipated: GPU shortages have been a roadblock for gamers, builders, and other users looking to upgrade since late 2020. Earlier this year, Intel announced that its in-house graphics lineup, Arc, would launch by the first quarter of 2022 to compete directly with Nvidia and AMD. Based on recent information and videos from Intel, Team Blue appears serious about capturing a piece of the GPU market.

Two of the leading technologies in this generation's GPU wars have been Nvidia's Deep Learning Super Sampling (DLSS) and AMD's FidelityFX Super Resolution (FSR). Both render frames at a lower resolution and upscale them to the target resolution, improving performance while aiming to preserve image quality. DLSS does this with an AI-based algorithm running on specialized hardware, while FSR uses an open-source spatial upscaling algorithm. Never one to be outdone by the competition, Intel has thrown its hat in the ring with its own super sampling feature, Intel Xe Super Sampling (XeSS).

Intel recently released a video of XeSS running on an Intel Arc GPU. Unlike previous demonstrations, this latest video from Team Blue provides one of the first real looks at 1080p content upscaled to and recorded in 4K. The video leaves little room for debate: the upscaled output delivers noticeably higher-quality 4K images than the native 1080p source.
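For a sense of scale, the short calculation below shows how much work an upscaler does when going from 1080p to 4K. It assumes the standard 16:9 resolutions of 1920×1080 for 1080p and 3840×2160 for consumer 4K UHD; the names `RESOLUTIONS` and `pixel_count` are purely illustrative.

```python
# Assumed 16:9 resolutions: 1080p and consumer 4K UHD
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Return the total number of pixels for a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


source = pixel_count("1080p")  # pixels the GPU actually renders
target = pixel_count("4K")     # pixels shown on screen after upscaling
scale = target / source        # how many output pixels per rendered pixel

print(f"Rendered pixels: {source:,}")
print(f"Output pixels:   {target:,}")
print(f"Upscale factor:  {scale:.0f}x ({source / target:.0%} of output pixels rendered)")
```

In other words, the upscaler has to reconstruct four output pixels for every pixel the GPU renders, which is why the quality of the reconstruction matters so much.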

Intel's new super sampling technology brings a distinct advantage to the table. While Nvidia's DLSS relies on dedicated Tensor Cores, XeSS has been designed around open standards, similar to AMD's FSR, so that it can run on GPUs from all three vendors. Although XeSS is AI-based and accelerated by the XMX matrix engines on Arc GPUs, Intel has said it will also offer a path based on the DP4a instruction for other hardware. This means users from teams Red, Green, and Blue should be able to use the new upscaler in any supported title.

It is currently unknown how well Intel's solution will support older-generation hardware. AMD's solution was well received for its ability to breathe new life into aging hardware, supporting architectures as far back as AMD's Radeon RX 400 series and Nvidia's GeForce 10 series.

There is no shortage of demonstration videos showcasing DLSS and FSR. Unfortunately, the compression used by most major video platforms makes it hard to compare either one side by side with Intel's XeSS. We likely won't see a proper head-to-head comparison until Intel's Arc GPUs actually launch, but based on Intel's initial releases, the company is making big moves to enter the GPU arms race.

https://www.techspot.com/news/92108-intel-ai-based-xess-shows-promise-new-4k.html