@Steve @Julio Franco, will you run a simple test to verify the results some people are reporting, where the Windows 10 Fall Creators Update (FCU) improves FPS by quite a big percentage? Gamers Nexus ran CPU-bound tests but not the GPU-bound ones (yet).


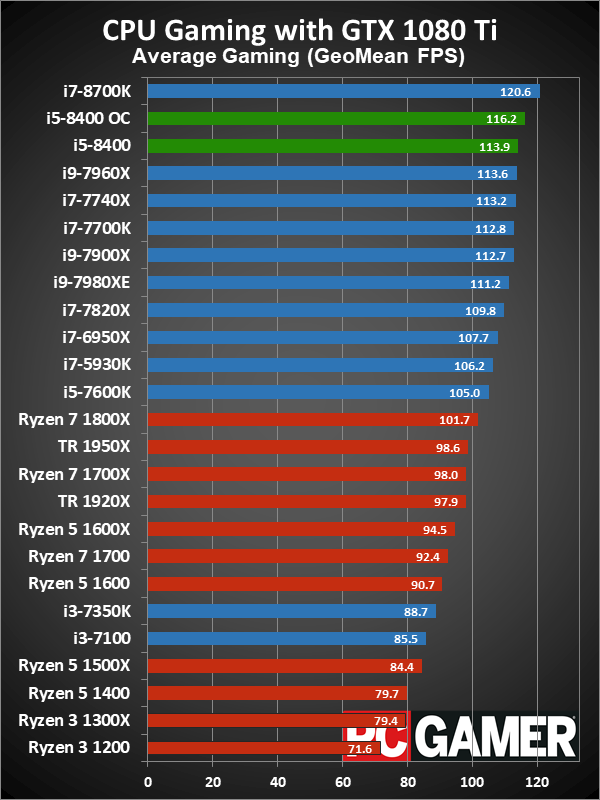

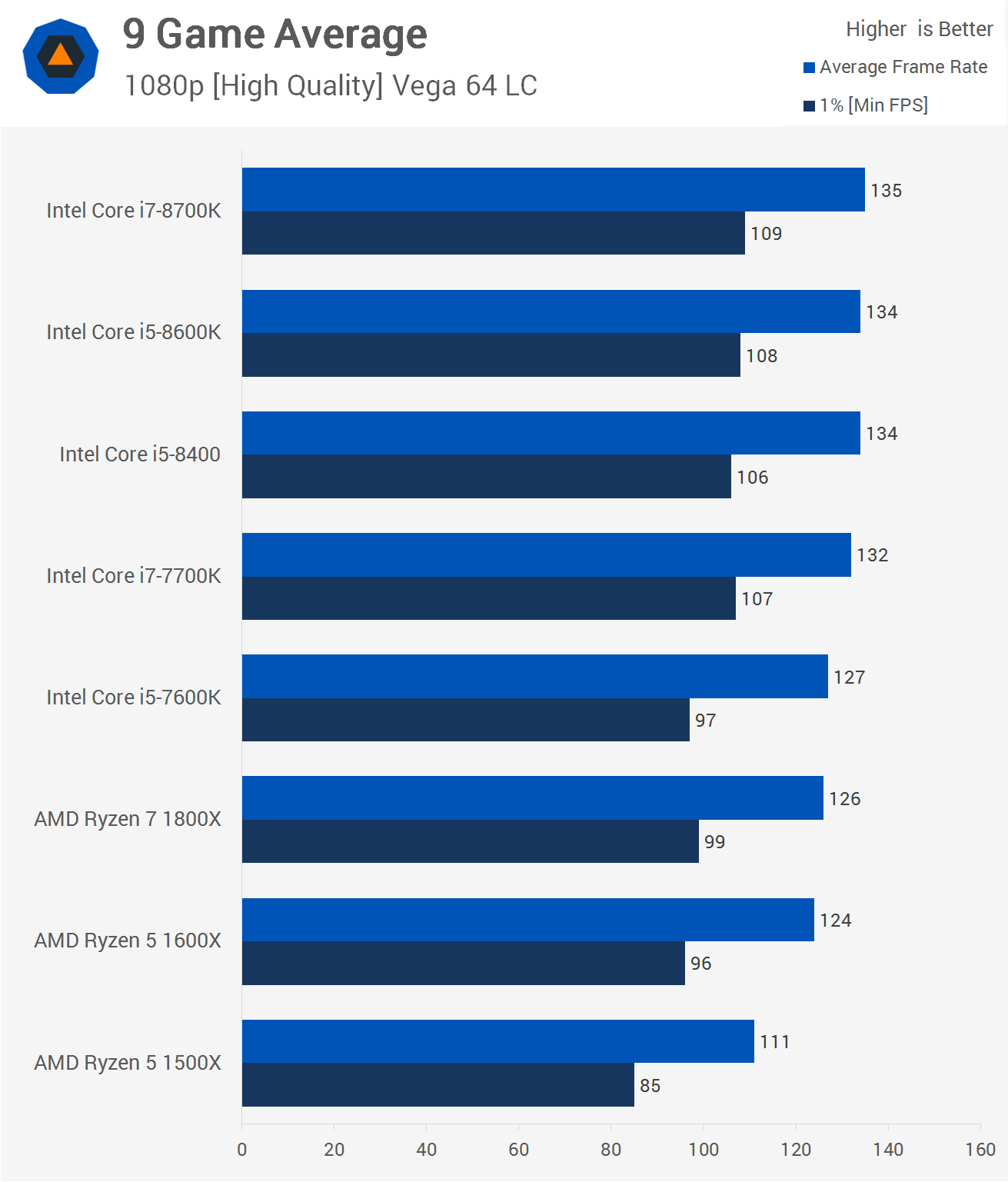

Intel Core i7-8700K, i5-8600K, 8400 versus AMD Ryzen 7 1800X, R5 1600X, 1500X

- Thread starter Steve


If you want to talk about 7600K vs Ryzen, you're 6 months too late and on the wrong thread...

I want to talk about the claim that 720p benchmarks are indicative of future performance. I proved it wrong simply by comparing 2 CPUs.

Again, you seem severely confused. If you're looking back 3 years now, the FX-8350 vs i5-4670K does indeed show that the latter did better on new cards.

So, in some situations the claim is true. In others it isn't. Therefore, the claim ISN'T true! What do you call a claim that is true in half the situations? Exactly! How many times does a claim have to be false in order for it not to be a true claim? Exactly!

And since you seem to want to go Intel vs AMD (and they call me a fanboy) while I don't, let's compare Intel to Intel. Let's go with the 4670K vs the 4930K or 5820K. The i5 does better than both in 2013-2014 games. Does it do better now? I really, really doubt it.

All I'm trying to do is prove the claim wrong. The claim is: "If a CPU does better than another CPU in games at 720p, then the first one will do better in future games as well." The claim mentions nothing about CPU generation or anything else. Therefore my comparison (R5 1600 vs 7600K) that proves the claim wrong is absolutely fine.

If you're looking forward to the future, the obvious comparison you're trying your hardest to pretend doesn't exist is i5-8600K vs R5 1600X. Which, yet again, IS THE WHOLE POINT OF THE ARTICLE.

About the 8400/8600K vs R5 1600/1600X in future games, I claim I don't know. You claim you do, based on a claim that I already proved wrong. My guess is there will definitely come a point where the 8400 gets choked compared to the R5. You can already see it in heavy 64-player BF1 multiplayer maps, Crysis 3, or Civ 6. Now whether that will become the majority of games 3-4 years from now or not, I have no clue, and frankly, neither do you.


So, instead of caring about the facts, you care about winning an e-argument. Great. Now tell me, since you bothered to go to every review, how exactly do you think you proved me wrong? You know there are like a trillion websites out there that show the opposite results? You know why? 'Cause averages are entirely based on the games you are testing. I can take 10 games from 2012 and show that the 7600K is 30% faster than the 1600. I can also take 10 other games (like BF1 multiplayer / Civ 6 / Crysis 3) and show that the 1600 is 30% faster than the 7600K. The fact is, the more modern a game is, the faster the 1600 gets. The heavier a scene, the more the 7600K plummets. Which was my point all freaking along: that the Ryzens do better in more recent games!

...but let's just check one or two or more professional sites before we take AMDfanboy1 living in mom's basement as the be-all and end-all on benches.

Does Tom's Hardware agree? Nope. The further right you go, the better the processor performs, and notice the gap widens once you OC the 7600K compared to an OC'd 1600.

So other than every professional website out there not agreeing with you, you are correct because of a "YouTube benchmark".

I love reading fanboy hissy fits in the morning, they read like...victory.

Do you think that the i5s do better the more recent a game is, or are you just cherry-picking to win an e-argument?

PS1. Calling me a fanboy isn't an argument, sorry. I can call you a fanboy back and it gets us nowhere. But I surely must be doing a really lousy job at being a fanboy, 'cause I'm telling people to buy an 8400 instead of a 1600! Go figure.
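The point about averages depending on which games are tested can be made concrete. This is a minimal sketch with made-up FPS numbers for two unnamed CPUs ("A" and "B"); the game names and figures are hypothetical, not benchmark results. It uses a geometric mean of per-game FPS ratios, a common way of summarizing relative performance, and shows the same two chips trading places depending on the selection.

```python
from statistics import geometric_mean

# Hypothetical FPS figures (cpu_a_fps, cpu_b_fps), invented only to show how
# the choice of games moves the average; these are NOT benchmark results.
FPS = {
    "older_title_1": (150.0, 120.0),
    "older_title_2": (140.0, 112.0),
    "newer_title_1": (90.0, 110.0),
    "newer_title_2": (80.0, 100.0),
}


def relative_perf(games: list[str]) -> float:
    """Geometric mean of CPU A / CPU B FPS ratios over the selected games.

    A value above 1.0 means CPU A comes out ahead on this selection.
    """
    return geometric_mean(FPS[g][0] / FPS[g][1] for g in games)


older = ["older_title_1", "older_title_2"]
newer = ["newer_title_1", "newer_title_2"]
print(f"older set: A = {relative_perf(older):.2f}x B")
print(f"newer set: A = {relative_perf(newer):.2f}x B")
print(f"all four:  A = {relative_perf(older + newer):.2f}x B")
```

Either side of the argument can use the same arithmetic, which is why a summary figure only means something alongside the list of games behind it.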

Sausagemeat

Posts: 1,597 +1,423

Nope, nobody said that.

Did you read the same posts?

No it's not indicative of future performance. It's only indicative of performance in CURRENT games with future graphics cards.

So you’re suggesting that no one commenting here is stating that Ryzen is a better buy?

Also, 720p is the best indication of future performance out of all the tests. Testing in that fashion puts the “bottleneck” on the CPU. Using the logic that a faster chip today will be a faster chip in the future you can come to the conclusion that the faster chips today will last longer. Or are you suggesting that the slower chips at 720p today will actually end up being faster in future titles?

Not very bright are you mate. Just out of curiosity, what swamp did you crawl out of?

Boilerhog146

Posts: 642 +223

Nope, nobody said that.

A lot of people are saying that Ryzen won? Baffling.

Did you read the same posts?

Did they read the same tests?

No, it's not indicative of future performance. It's only indicative of performance in CURRENT games with future graphics cards.

Especially when you look at 720p tests - something that can actually differentiate between these CPUs and indicate future performance.

Looks to me like this test is indicative of current performance in current games with current GPUs.

Steve has no future GPUs or future games to test with.

Fanbois should just quit that futuristic hyperbole. It's gotten boring.

It was a great indicator!

dirtyferret

Posts: 1,059 +1,711

So, instead of caring about the facts, you care about winning an e-argument. Great. Now tell me, since you bothered to go to every review, how exactly do you think you proved me wrong? You know there are like a trillion websites out there that show the opposite results? You know why? 'Cause averages are entirely based on the games you are testing. I can take 10 games from 2012 and show that the 7600K is 30% faster than the 1600. I can also take 10 other games (like BF1 multiplayer / Civ 6 / Crysis 3) and show that the 1600 is 30% faster than the 7600K. The fact is, the more modern a game is, the faster the 1600 gets. The heavier a scene, the more the 7600K plummets. Which was my point all freaking along: that the Ryzens do better in more recent games!

Do you think that the i5s do better the more recent a game is, or are you just cherry-picking to win an e-argument?

PS1. Calling me a fanboy isn't an argument, sorry. I can call you a fanboy back and it gets us nowhere. But I surely must be doing a really lousy job at being a fanboy, 'cause I'm telling people to buy an 8400 instead of a 1600! Go figure.

1) There is no argument; you need to post a valid point in order to have one, which you failed to do.

2) You have yet to post a single fact, another failure.

3) I did not prove you wrong; every single professional review website proved you wrong. It would be like if you stated the moon really was made out of cheese and every single professional astronomer proved you wrong.

dirtyferret

Posts: 1,059 +1,711

YouTube videos generally offer a much better indication of real gaming than benchmark runs. When running a benchmark, you don't actually play the game. That's a big difference, and it explains why YouTube is indeed a much better source than any benchmark result found on a "professional website".

Yeah, not one single person believes that other than those who wear AMD PJs to bed, but nice try: YouTube fanboys are better reviewers than an entire slate of professional review websites.

The only thing you've "proven" is that you're still incapable of understanding why 720p benchmarks exist. Likewise, "lack of proof of the future is proof of a negative" is a logical fallacy, not some 'clever' argument.

I want to talk about the claim that 720p benchmarks are indicative of future performance. I proved it wrong simply by comparing 2 CPUs. All I'm trying to do is prove the claim wrong. The claim is: "If a CPU does better than another CPU in games at 720p, then the first one will do better in future games as well."

The real...

So yes, that IS indicative of future performance. That's the whole point and the sole reason to do it, and why people have been doing it for 20 years, going back to 640x480 benchmarks when 800x600 to 1024x768 CRTs were the norm. It's perfectly valuable data that shows how much more a CPU can do in future without a GPU bottleneck (which exists even at 1080p). The wider the 720p vs 1080p gap, the more headroom; conversely, a game which is hitting, say, 112fps at both resolutions is already CPU bottlenecked (so a GPU upgrade will add nothing). It is absolutely "indicative of future performance" on the same CPU as GPUs improve. That's not even a "claim", but a simple observation of reality...

As for threading in future games, it'll continue to be the same mixed bag we've always had. At one extreme you've got Crysis 3, at the other StarCraft 2, and most games lie somewhere in the middle (BF1, Overwatch, etc.). As explained previously, this isn't going to change any time soon or magically keep scaling every 3 years, for the simple reason that AAA cross-platform games are ultimately designed for and constrained by consoles (6-7 usable out of 8 Jaguar cores). That's why there's a far bigger jump from 4C to 6C but little from the 6C/12T 1600X to the 8C/16T 1800X or 16C/32T 1950X. It's why, in YouTube videos, games that "use" 12 threads often show as little as 4-5% utilization on half the cores. This isn't going to keep scaling every 3 years until you see 16-core games consoles. And yes, that's a prediction you absolutely can "take to the bank", because no game dev in their right mind is going to throw away 80-90% of their 2019 AAA sales just because someone bought a Threadripper and wants it to become the new minimum standard. That's not how the real world works, regardless of enthusiast PC hardware.

Also, 720p is the best indication of future performance out of all the tests. Testing in that fashion puts the “bottleneck” on the CPU. Using the logic that a faster chip today will be a faster chip in the future you can come to the conclusion that the faster chips today will last longer. Or are you suggesting that the slower chips at 720p today will actually end up being faster in future titles?

You do understand that best does not equal good? Even if 720p is the best indication of future performance, it's still a very bad one, because in real-world gaming the GPU should always be the bottleneck. I also suggest that chips that are slower at 720p today will be faster in future titles. That is because future games will make better use of cores than today's DX11 titles (and DX11 titles with added DX12 support), so more cores will result in better performance. In today's titles the number of cores is not that important, so fewer cores with more MHz is better now, but that will change in the future, at least to some extent.

You should just accept the fact that it's simply impossible to make accurate predictions about future performance today.

Yeah, not one single person believes that other than those who wear AMD PJs to bed, but nice try: YouTube fanboys are better reviewers than an entire slate of professional review websites.

Real world gaming results are always better than any gaming benchmarks.

Jimster480

Posts: 160 +142

In all honesty, your choice of words is a little deceptive.

"Struggle" is not the word to be used for any of these CPUs.

You are seeing 100+ FPS in basically every title at every resolution, and stable FPS across those resolutions as well.

Even at 1080p, many titles are holding 144+ FPS, even for those who bought into the 144Hz hype.

So honestly, it just makes Intel a bad value when an R5 1600 still gets more than playable FPS in every title.

Even if it's a 20% FPS difference, this equates to 20% more dropped frames on the 90% of people's computers with 60Hz monitors.

On people's computers with 144Hz monitors, there are few titles where going Intel would get you a stable 144Hz frame rate vs AMD.
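The refresh-rate argument above comes down to frame-time arithmetic: a monitor at H Hz shows a new frame every 1000/H ms, so what matters is whether the frame time fits inside that interval. A minimal sketch, assuming average FPS is a fair stand-in for per-frame timing (it is not for stutter); the 130 vs 156 FPS pair is a hypothetical 20% gap, not a measured result.

```python
def frame_time_ms(fps: float) -> float:
    """Average time per frame, in milliseconds."""
    return 1000.0 / fps


def refresh_interval_ms(hz: float) -> float:
    """Time between display refreshes, in milliseconds."""
    return 1000.0 / hz


def keeps_up(fps: float, hz: float) -> bool:
    """True if the *average* frame time fits inside one refresh interval.

    Averages hide frame-time spikes, so this says nothing about stutter.
    """
    return frame_time_ms(fps) <= refresh_interval_ms(hz)


# Hypothetical pair of results 20% apart (130 vs 156 FPS).
for fps in (130.0, 156.0):
    print(f"{fps:.0f} FPS = {frame_time_ms(fps):.2f} ms/frame, "
          f"60 Hz: {keeps_up(fps, 60)}, 144 Hz: {keeps_up(fps, 144)}")
```

With these made-up numbers, both results clear a 60 Hz interval on average, and the gap only changes the outcome at 144 Hz, where one side of it lands above the ~6.9 ms interval and the other below.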

amstech

Posts: 2,688 +1,860

As for threading in future games, it'll continue to be the same mixed bag we've always had. At one extreme you've got Crysis 3, at the other StarCraft 2, and most games lie somewhere in the middle (BF1, Overwatch, etc.). As explained previously, this isn't going to change any time soon or magically keep scaling every 3 years, for the simple reason that AAA cross-platform games are ultimately designed for and constrained by consoles (6-7 usable out of 8 Jaguar cores). That's why there's a far bigger jump from 4C to 6C but little from the 6C/12T 1600X to the 8C/16T 1800X or 16C/32T 1950X. It's why, in YouTube videos, games that "use" 12 threads often show as little as 4-5% utilization on half the cores. This isn't going to keep scaling every 3 years until you see 16-core games consoles. And yes, that's a prediction you absolutely can "take to the bank", because no game dev in their right mind is going to throw away 80-90% of their 2019 AAA sales just because someone bought a Threadripper and wants it to become the new minimum standard. That's not how the real world works, regardless of enthusiast PC hardware.

This right here is the golden text of truth.

Jimster480

Posts: 160 +142

Except it has been happening.

Right along with a Half-Life 3 release...

Over the next year, we'll see games that will utilize multiple cores for various purposes other than simply running the game engine.

C'mon man, the 'more cores and future-proof' angle again?

Maybe games will use more than 4-8 cores/threads in the next few years; that sure would be nice. Good luck convincing people it's going to happen.

Games are using more and more cores per year.

And considering that Ryzen delivers more than playable framerates in every game, and doesn't trail Intel in a place where 144Hz monitors would matter across most titles... it makes little sense to splash out extra cash for any Intel CPU over a Ryzen 5 1600/X if you are just doing gaming.

As for threading in future games, it'll continue to be the same mixed bag we've always had. At one extreme you've got Crysis 3, at the other StarCraft 2, and most games lie somewhere in the middle (BF1, Overwatch, etc.). As explained previously, this isn't going to change any time soon or magically keep scaling every 3 years, for the simple reason that AAA cross-platform games are ultimately designed for and constrained by consoles (6-7 usable out of 8 Jaguar cores). That's why there's a far bigger jump from 4C to 6C but little from the 6C/12T 1600X to the 8C/16T 1800X or 16C/32T 1950X. It's why, in YouTube videos, games that "use" 12 threads often show as little as 4-5% utilization on half the cores. This isn't going to keep scaling every 3 years until you see 16-core games consoles. And yes, that's a prediction you absolutely can "take to the bank", because no game dev in their right mind is going to throw away 80-90% of their 2019 AAA sales just because someone bought a Threadripper and wants it to become the new minimum standard. That's not how the real world works, regardless of enthusiast PC hardware.

It's going to change, and here's a good reason:

RealNeil

Posts: 44 +24

Where can you find a Ryzen 5 1600 for $169.99?

Back in real life, where people use 1080p, 2560x1080, and 2560x1440 FreeSync monitors, excellent AM4 motherboards were 20% off at Newegg (only $60 shipped for ASRock's excellent AB350M Pro4) and the Ryzen 5 1600 was $169.99, but let's go ahead and do everything we can to pretend Intel is still relevant in price/performance...

Jimster480

Posts: 160 +142

Nice forgetting that OC'ing isn't guarenteed and costs additional cash for a cooler.

Sure, you can speculate based on past and current results. And that's exactly what I did. The 7600K wipes the floor with the R5 1600X in older games. In current games, as you've said, they are close, with a slight advantage to the R5 1600X in modern engines. So the argument about 720p / CPU overhead / future gaming performance is wrong.

There are benches on YouTube.

OK, benches on YouTube, discussion is over. Nothing more to see here... but let's just check one or two or more professional sites before we take AMDfanboy1 living in mom's basement as the be-all and end-all on benches.

Does Tom's Hardware agree? Nope. The further right you go, the better the processor performs, and notice the gap widens once you OC the 7600K compared to an OC'd 1600.

Does AnandTech agree? Nope.

I have $250, What Should I Get – the Core i5 7600/7600K or the Ryzen 5 1600X?

Platform wise, the Intel side can offer more features on Z270 over AM4, however AMD would point to the lower platform cost of B350 that could be invested elsewhere in a system.

On performance, for anyone wanting to do intense CPU work, the Ryzen gets a nod here. Twelve threads are hard to miss at this price point. For more punchy work, you need a high frequency i5 to take advantage of the IPC differences that Intel has.

For gaming, our DX12 titles show a plus for AMD in any CPU limited scenario, such as Civilization or Rise of the Tomb Raider in certain scenes. For e-Sports, and most games based on DX9 or DX11, the Intel CPU is still a win here.

https://www.anandtech.com/show/1124...x-vs-core-i5-review-twelve-threads-vs-four/17

Hey, maybe TechPowerUp agrees? Nope.

Surely PC Gamer (aka Maximum PC) agrees? Nope.

What about TechSpot, the very site you are posting on? Nope.

So other than every professional website out there not agreeing with you, you are correct because of a "YouTube benchmark".

I love reading fanboy hissy fits in the morning, they read like...victory.

Also, you are ignoring the fact that basically every Intel chip needs an aftermarket cooler out of the box, whereas AMD chips can run on their stock coolers (except for the models that don't come with them).

The FPS comparisons done here are pointless: Intel is pulling ~10% more FPS for 100% more money. This has been the case since Ryzen came out.

Nothing changed with the new release, except that ***** Intel fanboys have to buy new motherboards again.
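The "~10% more FPS for 100% more money" claim is easiest to judge as FPS per dollar. A minimal sketch, assuming hypothetical prices and FPS values chosen only to reproduce that ratio; they are not real product figures, and only the CPU price is counted.

```python
def fps_per_dollar(fps: float, price: float) -> float:
    """Average FPS delivered per dollar spent (CPU price only)."""
    return fps / price


# Hypothetical prices and FPS chosen to match the "~10% more FPS for
# 100% more money" ratio claimed above; not real product figures.
cheaper = fps_per_dollar(fps=100.0, price=200.0)
pricier = fps_per_dollar(fps=110.0, price=400.0)
print(f"cheaper: {cheaper:.3f} FPS/$, pricier: {pricier:.3f} FPS/$")
print(f"pricier chip delivers {pricier / cheaper:.0%} of the value per dollar")
```

Folding motherboard and cooler costs into `price` (the platform-cost point argued in this thread) shifts the ratio, so the result depends heavily on what you choose to count.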

dirtyferret

Posts: 1,059 +1,711

The only thing you've "proven" is that you're still incapable of understanding why 720p benchmarks exist. Likewise, "lack of proof of the future is proof of a negative" is a logical fallacy, not some 'clever' argument.

The real ~~claim~~ observation is: "For over 20 years, people have benchmarked at lower than normal play resolutions to eliminate GPU bottlenecks. By doing this, the difference in fps between that and normal resolution gives an idea of how much overhead you have when upgrading the GPU but keeping the same CPU 1-2 years later."

So yes, that IS indicative of future performance. That's the whole point and the sole reason to do it, and why people have been doing it for 20 years, going back to 640x480 benchmarks when 800x600 to 1024x768 CRTs were the norm. It's perfectly valuable data that shows how much more a CPU can do in future without a GPU bottleneck (which exists even at 1080p). The wider the 720p vs 1080p gap, the more headroom; conversely, a game which is hitting, say, 112fps at both resolutions is already CPU bottlenecked (so a GPU upgrade will add nothing). It is absolutely "indicative of future performance" on the same CPU as GPUs improve. That's not even a "claim", but a simple observation of reality...
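The headroom reasoning above can be written as a one-line ratio. A minimal sketch, assuming 720p FPS approximates the CPU-limited ceiling for a given CPU and game; the function name and the 160/110 figures are hypothetical, and only the 112 fps case mirrors the example in the post.

```python
def gpu_upgrade_headroom(fps_720p: float, fps_1080p: float) -> float:
    """Rough fraction of extra FPS a faster GPU could unlock at 1080p.

    Assumes the 720p result approximates the CPU-limited ceiling on this CPU
    (i.e. the GPU is no longer the bottleneck at 720p). A result near 0 means
    the game is already CPU-bound at 1080p.
    """
    return fps_720p / fps_1080p - 1.0


# Hypothetical numbers; the 112 fps case mirrors the example in the post.
print(f"wide gap: {gpu_upgrade_headroom(160.0, 110.0):.0%} headroom")
print(f"no gap:   {gpu_upgrade_headroom(112.0, 112.0):.0%} headroom")
```

This only estimates headroom in the games being tested today; it says nothing about how a future game will load the CPU, which is the part of the argument still in dispute.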

As for threading in future games, it'll continue to be the same mixed bag we've always had. At one extreme you've got Crysis 3, at the other StarCraft 2, and most games lie somewhere in the middle (BF1, Overwatch, etc.). As explained previously, this isn't going to change any time soon or magically keep scaling every 3 years, for the simple reason that AAA cross-platform games are ultimately designed for and constrained by consoles (6-7 usable out of 8 Jaguar cores). That's why there's a far bigger jump from 4C to 6C but little from the 6C/12T 1600X to the 8C/16T 1800X or 16C/32T 1950X. It's why, in YouTube videos, games that "use" 12 threads often show as little as 4-5% utilization on half the cores. This isn't going to keep scaling every 3 years until you see 16-core games consoles. And yes, that's a prediction you absolutely can "take to the bank", because no game dev in their right mind is going to throw away 80-90% of their 2019 AAA sales just because someone bought a Threadripper and wants it to become the new minimum standard. That's not how the real world works, regardless of enthusiast PC hardware.
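The diminishing-returns argument (big jump from 4C to 6C, little beyond) can be illustrated with Amdahl's law plus a cap on how many threads the game actually spreads work across. This is a textbook model swapped in for illustration, not something the post cites; the parallel fraction of 0.8 and the 7-thread cap (loosely echoing "6-7 usable console cores") are assumptions, and SMT threads are ignored.

```python
def speedup(cores: int, parallel_fraction: float, game_threads: int) -> float:
    """Amdahl's-law speedup over one core, with useful parallelism capped.

    Hypothetical model: the game only splits its parallel work across
    `game_threads` workers, so cores beyond that add nothing.
    """
    usable = min(cores, game_threads)
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / usable)


for cores in (4, 6, 8, 16):
    s = speedup(cores, parallel_fraction=0.8, game_threads=7)
    print(f"{cores:>2} cores: {s:.2f}x")
```

Raising `game_threads` (the "future games will use more cores" position) moves the plateau to the right, so the model frames the disagreement rather than settling it.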

The mods can now close the thread.


amstech

Posts: 2,688 +1,860

Except it has been happening.

Games are using more and more cores per year.

Meh... maybe a few more than 5 years ago, nothing crazy.

The 7700K smokes everything in every game and it's 4C/8T.

My i7 930 from 7 years ago is 4C/8T.

it makes little sense to splash out extra cash for any Intel CPU over a Ryzen 5 1600/X if you are just doing gaming.

Ryzen CPUs are the slowest CPUs in gaming.

They perform great for the money, but they still lose.

Someone8MyPosts

Posts: 464 +146

Twice the RAM on the Intel setups... hmmm. FPS is one thing; load times are another, as are latency, etc.

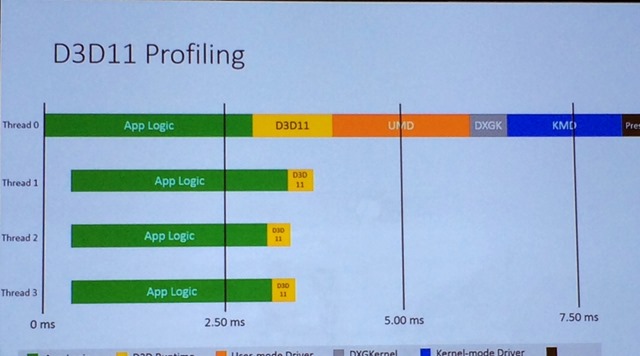

Unfortunately, static marketing slides of "DX12 Profiling" are about as "useful" as static marketing slides of Mantle or AoTS scripted draw call saturation tests were at predicting +1300% increases in frame rates going from DX11 to DX12...

It's going to change and here's good reason:

Sausagemeat

Posts: 1,597 +1,423

Just out of curiosity, do the people who say that 720p performance is not an indicator of future performance think that the chips which perform worse at 720p now will perform better than their competition at 720p in the future?

Because that is ridiculous.

Of course 720p testing isn't 100% accurate for the future. But it does show how fast a game can run when the limiting factor is the CPU. It is the best possible way of determining how fast a CPU can run games. Typically, if chip A is faster than chip B at any given task in year X, then chip A will be faster than chip B in year X+3. It's not difficult logic.
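The logic above rests on the simplest bottleneck model: delivered FPS is roughly the lower of the CPU-limited and GPU-limited rates. A minimal sketch, assuming the same game throughout and hypothetical CPU ceilings of 140 and 115 FPS (the kind of numbers a 720p test would approximate); it does not capture future games loading the CPU differently, which is the counterargument raised elsewhere in the thread.

```python
def delivered_fps(cpu_limit: float, gpu_limit: float) -> float:
    """Simplest bottleneck model: the slower of the two limits wins."""
    return min(cpu_limit, gpu_limit)


# Hypothetical CPU-limited ceilings, as a 720p test would approximate them.
cpu_a, cpu_b = 140.0, 115.0

# Today's GPU, then progressively faster (hypothetical) future GPUs.
for gpu_limit in (100.0, 130.0, 180.0):
    a = delivered_fps(cpu_a, gpu_limit)
    b = delivered_fps(cpu_b, gpu_limit)
    print(f"GPU limit {gpu_limit:.0f}: CPU A {a:.0f} FPS, CPU B {b:.0f} FPS")
```

With a slow GPU both CPUs look identical; as the GPU limit rises, the gap measured at 720p appears in normal-resolution results too.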


dirtyferret

Posts: 1,059 +1,711

Nice forgetting that OC'ing isn't guarenteed and costs additional cash for a cooler.

Also, you are ignoring the fact that basically every Intel chip needs an aftermarket cooler out of the box, whereas AMD chips can run on their stock coolers (except for the models that don't come with them).

The FPS comparisons done here are pointless: Intel is pulling ~10% more FPS for 100% more money. This has been the case since Ryzen came out.

Nothing changed with the new release, except that ***** Intel fanboys have to buy new motherboards again.

1) That goes both ways, as the Ryzen chips don't offer a "guaranteed" (two a's and two e's) OC either.

2) Your second point is a redundant sentence: you literally said that both AMD & Intel CPUs need an aftermarket cooler if their chips don't come with one. I am proud of you for having this empathy.

3) If FPS comparisons are pointless, why are you posting here?

4) Which Ryzen chip offers 10% of the Intel 7600 performance but costs around $100?

5) Current Intel owners don't need new motherboards; their chips are already faster than Ryzen. Future Intel owners need a new mobo.

Meh... maybe a few more than 5 years ago, nothing crazy.

The 7700K smokes everything in every game and it's 4C/8T.

My i7 930 from 7 years ago is 4C/8T.

i7-8700K is faster than i7-7700K and it's 6C/12T...

Unfortunately, static marketing slides of "DX12 Profiling" are about as "useful" as static marketing slides of Mantle or AoTS scripted draw call saturation tests were at predicting +1300% increases in frame-rates going from DX11 to DX12...

Show me the slides where a draw call increase equals an FPS increase. The point of DX12 is that with DX11 you cannot make good use of multiple cores, because performance is heavily limited by one main thread. With DX12 that major problem is not present.
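The DX11 vs DX12 point above (one main thread limiting draw-call submission) can be shown with a toy per-frame CPU time model. Everything here is a hypothetical illustration: the 12 ms of game logic, 8 ms of submission work, the even split across cores, and treating the two phases as sequential are assumptions, not measurements of any real engine or API.

```python
def cpu_frame_ms(logic_ms: float, submit_ms: float, cores: int,
                 parallel_submission: bool) -> float:
    """Toy per-frame CPU time model (hypothetical, for illustration only).

    logic_ms: game-logic work, assumed to split evenly across all cores.
    submit_ms: draw-call submission work. With parallel_submission=False it
    stays on one main thread (the DX11-style limit described above); with
    True it is split across cores, as DX12 permits. The two phases are
    treated as running one after the other.
    """
    logic = logic_ms / cores
    submit = submit_ms / cores if parallel_submission else submit_ms
    return logic + submit


for cores in (4, 8):
    single = cpu_frame_ms(12.0, 8.0, cores, parallel_submission=False)
    multi = cpu_frame_ms(12.0, 8.0, cores, parallel_submission=True)
    print(f"{cores} cores: one submit thread {1000 / single:.0f} FPS, "
          f"parallel submit {1000 / multi:.0f} FPS")
```

In the single-thread case the frame time can never drop below `submit_ms`, however many cores you add, which is the ceiling the post attributes to DX11; how much real games gain depends on how much of their work actually moves off the main thread.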

Just out of curiosity, do the people who say that 720p performance is not an indicator of future performance think that the chips which perform worse at 720p now will perform better than their competition at 720p in the future?

Because that is ridiculous.

Of course 720p testing isn't 100% accurate for the future. But it does show how fast a game can run when the limiting factor is the CPU. It is the best possible way of determining how fast a CPU can run games. Typically, if chip A is faster than chip B at any given task in year X, then chip A will be faster than chip B in year X+3. It's not difficult logic.

Gaming is always limited by GPU performance (not counting titles where graphics quality is so low that the CPU is the limit anyway). So: faster GPU > higher resolution > the GPU is still the limiting factor.

Not difficult logic either. Also, future games will be different from today's games when it comes to CPU usage.

5) Current Intel owners don't need new motherboards; their chips are already faster than Ryzen. Future Intel owners need a new mobo.

Ryzen 2 will be faster than current Intel CPUs, and current Ryzen users don't need a new motherboard for that. Intel users, however, will need a new motherboard for CPUs faster than Ryzen 2.

dirtyferret

Posts: 1,059 +1,711

Ryzen 2 will be faster than current Intel CPUs, and current Ryzen users don't need a new motherboard for that. Intel users, however, will need a new motherboard for CPUs faster than Ryzen 2.

Show me the benchmarks of Ryzen 2 beating an Intel processor...

Show me the benchmarks of Ryzen 2 beating an Intel processor...

http://techreport.com/news/32110/globalfoundries-fires-up-its-7-nm-leading-performance-forges

amstech

Posts: 2,688 +1,860

i7-8700K is faster than i7-7700K and it's 6C/12T...

Not really. I just looked at the benches in this article, and the 7700K and 8700K are almost identical apart from a few games.

7700K hits 4.5GHz max turbo, 8700K hits 4.7GHz.

7700K has 8MB cache, 8700K has 12MB.

You AMD guys are really grasping at straws.

Is that why he is called Strawman? Boom!



TechSpot is dedicated to computer enthusiasts and power users.
