In brief: As the dust settles on the semi-paper launch of Intel's mobile Arc GPUs, there's still a lot of mystery around the desktop counterparts. Intel has started teasing them again, but it will be interesting to see if the company can nail the performance and price aspects of these cards in time for a summer release.

This week, Intel announced its new Arc A-series dedicated GPUs for laptops, adding more competition for Nvidia and AMD. For now, only the entry-level Arc 3 series is ready to hit the market, while the heavy hitters in the Arc 5 and Arc 7 series, which have more graphics cores, VRAM, and ray tracing units, are set for a summer release. We'll have to wait and see how they perform in the real world, but Intel is now officially the third player in the discrete GPU space, even if it's off to a slow start.

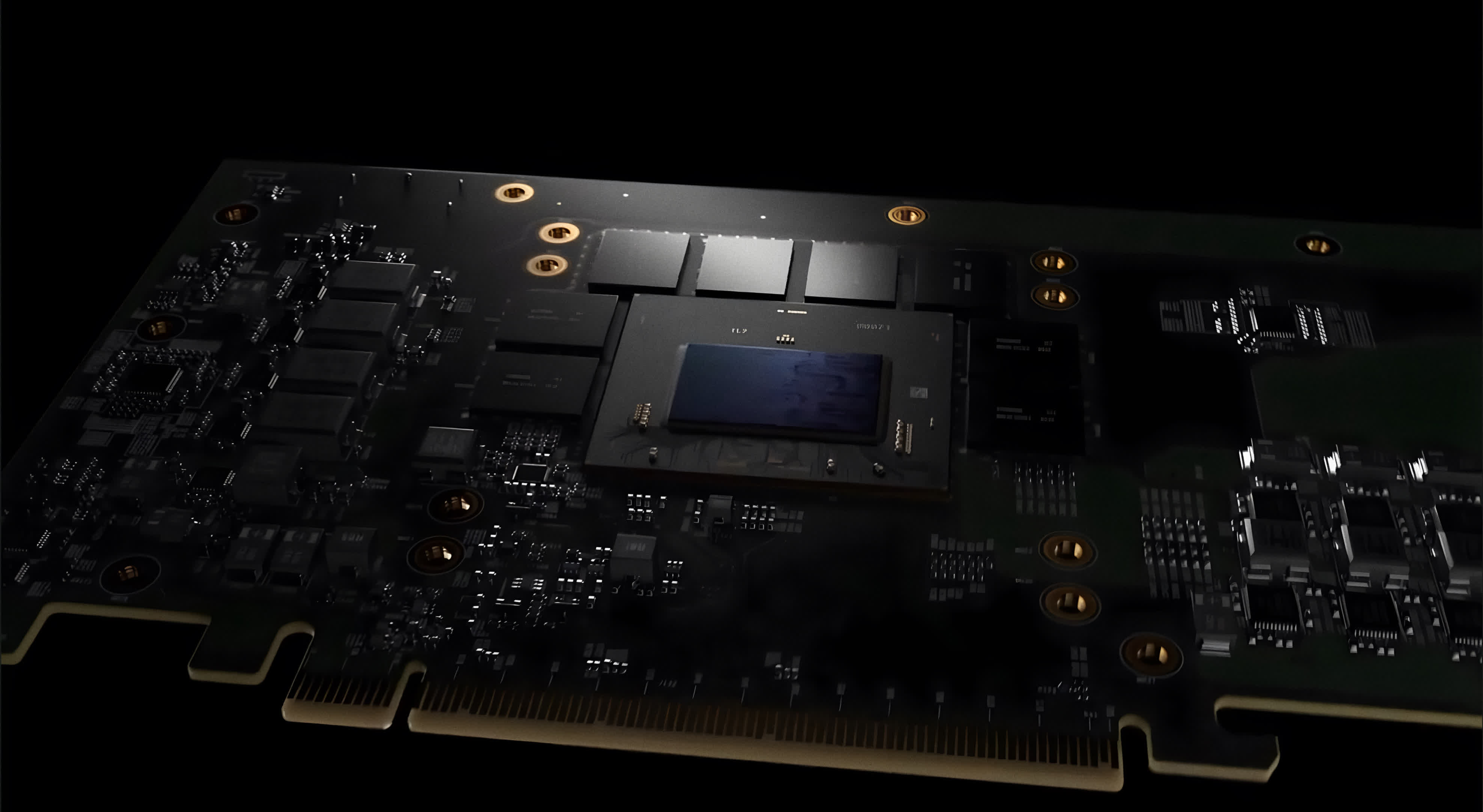

That said, many gamers are eagerly awaiting the desktop Arc offerings. Intel says these are also slated for this summer, and it's already teasing what appears to be the flagship of the lineup. The new card is supposedly called Intel Arc Limited Edition Graphics, and now we have the first look at the official design with a pretty cinematic rendering.

As you can see from the video above, Intel went with a clean and minimalistic aesthetic for this model. In an era when flagship cards tend to be gargantuan, this looks to be a surprisingly slim, two-slot graphics card with a standard dual-axial fan cooling system. This means it will exhaust heat into the PC case rather than blow it out of the case, but by the looks of it this won't be a space heater on par with AMD's RX 6900 XT or Nvidia's RTX 3090 Ti.

Speaking of heat, if this model integrates the full ACM-G10 die with 32 Xe cores and 16 gigabytes of GDDR6 memory, it will likely run inside a 175-225 watt power envelope. That is far more than the 75 watts a PCIe x16 slot can supply on its own, yet the 50-second video doesn't show any signs of external power connectors, so we can only assume the render isn't representative of the final product.
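To make the power connector reasoning concrete, here is a rough sketch of the arithmetic. It assumes the usual PCI Express limits of 75 watts from the x16 slot, 75 watts per 6-pin connector, and 150 watts per 8-pin connector. The function name and the connector layouts are hypothetical and say nothing about what Intel will actually ship.

```python
# Rough power-budget arithmetic; an illustrative sketch, not Intel's specification.
# Assumed limits: PCIe x16 slot 75 W, 6-pin connector 75 W, 8-pin connector 150 W.
SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def board_power_budget(connectors):
    """Return the maximum in-spec power (watts) for a card with the given connectors."""
    return SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


# Hypothetical connector layouts, checked against the 175-225 W estimate.
LAYOUTS = [(), ("6-pin",), ("8-pin",), ("6-pin", "8-pin")]

for layout in LAYOUTS:
    budget = board_power_budget(layout)
    label = " + ".join(layout) or "slot only"
    print(f"{label}: {budget} W, covers 175 W: {budget >= 175}, covers 225 W: {budget >= 225}")
```

Under these assumptions a slot-only card tops out at 75 watts, well short of the low end of the estimate, which is why a render without any power connectors looks incomplete.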

Also visible in the video are three DisplayPort outputs and one HDMI port, which we're hoping will support the HDMI 2.1 specification. Intel chose to omit native HDMI 2.1 support from its mobile Arc GPUs, and instead wants OEMs to implement it via an external chip that converts DisplayPort signals to HDMI 2.1.

Intel's desktop Arc GPUs may also feature an AV1 hardware encoding block like their mobile counterparts, which could set them apart from the competition when it comes to media engine capabilities for content creators. The company had a rather disappointing launch for its mobile Arc GPUs, so we're hoping the desktop versions will make up for it with more exciting specs and a competitive price point.

https://www.techspot.com/news/93999-intel-offers-first-look-arc-limited-edition-desktop.html