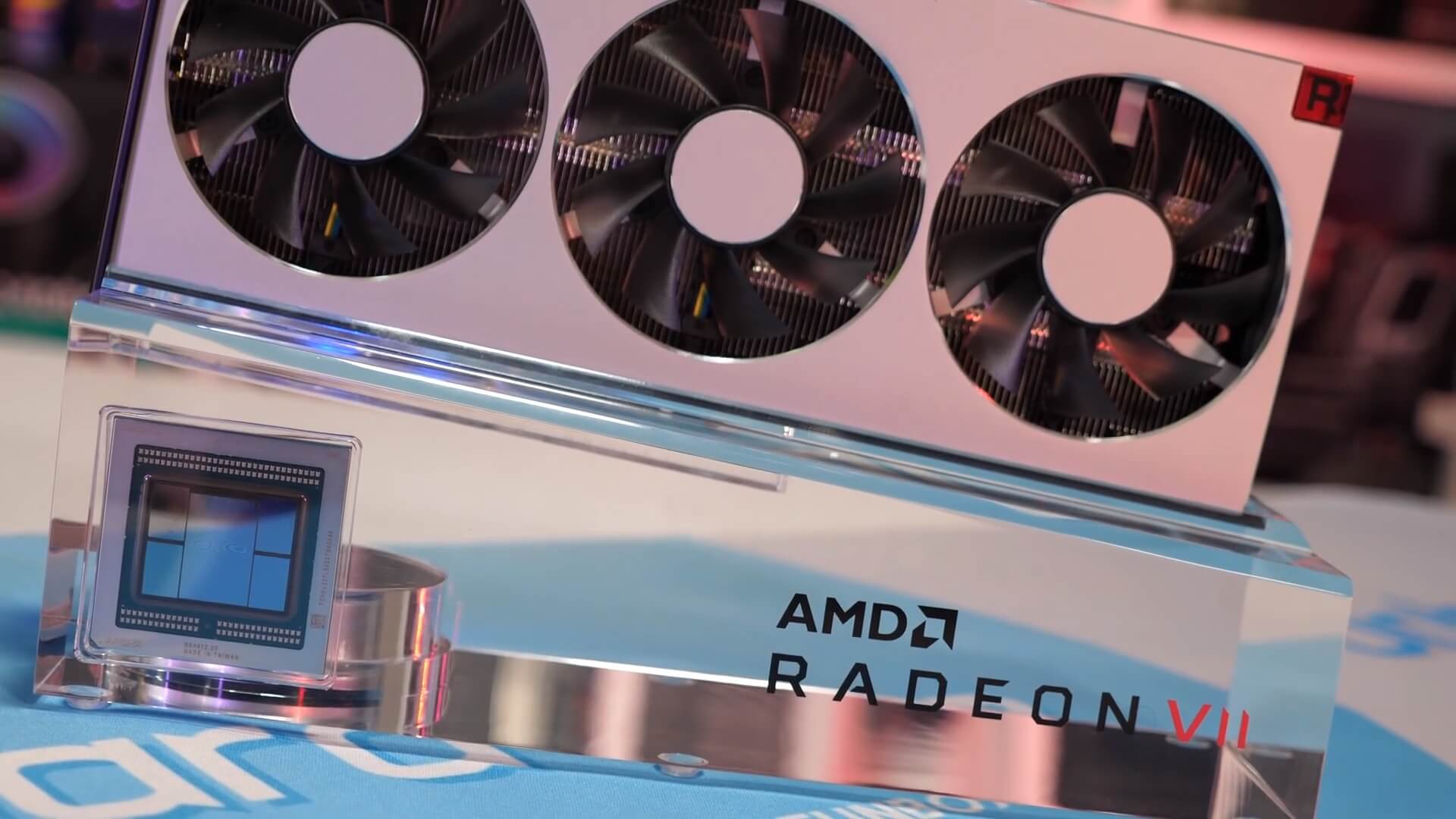

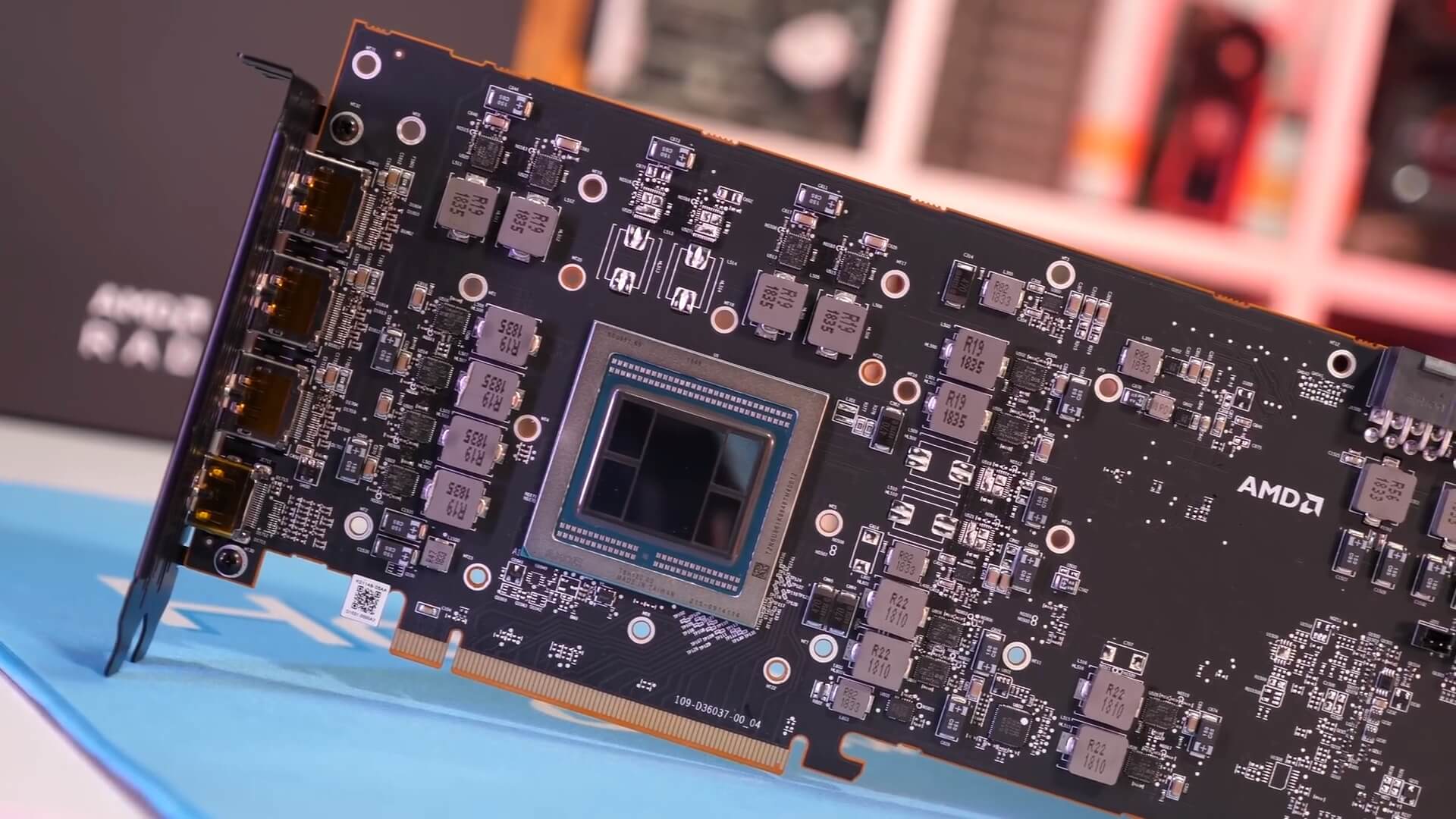

AMD unveiled the Radeon VII during its CES 2019 keynote last month, claiming the new 7nm second-gen Vega GPU can boost performance by 27 to 62 percent depending on the task. The chip boasts 1 terabyte/s of memory bandwidth and 60 compute units running at up to 1.8GHz, and thanks to the process shrink, it can squeeze out 25 percent more performance in the same power envelope.

With an expected retail price of $699, AMD is looking to compete directly with the GeForce RTX 2080, which has the same MSRP. On paper, Radeon VII is expected to perform between 25 and 35 percent faster than Vega 64.

At launch, AMD will be offering an attractive game bundle, with free copies of the Resident Evil 2 remake, The Division 2, and Devil May Cry 5 shipping with every Radeon VII card.

This may be one of the last things you will hear about Radeon VII before we get to the benchmarks later this week. The official release of Radeon VII is slated for this Thursday, February 7, when you can expect our full review and benchmarks. For now, you'll have to make do with Steve's unboxing and color commentary. It's an entertaining watch, so we'll leave you to it.

https://www.techspot.com/news/78567-unbox-amd-radeon-vii-graphics-card.html