Something to look forward to: The Pat Gelsinger-powered Intel may not be back to running on all cylinders just yet, but it is cooking some rather interesting PC hardware in the labs. According to a new leak, the company's Lunar Lake-MX CPUs could be just what Team Blue needs to compete against the rising wave of Arm-based processors for laptops and ultraportable devices in the coming years.

In 2021, Intel CEO Pat Gelsinger proudly discussed the company's long-term plan to win Apple's business back by "outcompeting" it. Ever since he returned to Team Blue, Gelsinger has been on a quest to demonstrate that Arm-based processors aren't a big threat to x86 in the consumer space and that the older architecture can still incorporate plenty of innovations on top of its already solid foundations.

To that end, Intel has been working on refining several key technologies like PowerVia, Foveros, and RibbonFET as well as adopting a hybrid approach when it comes to manufacturing. That means the company that once strived to be at the forefront of process technology is now willing to use competing manufacturing nodes at foundries like TSMC as opposed to producing everything in-house.

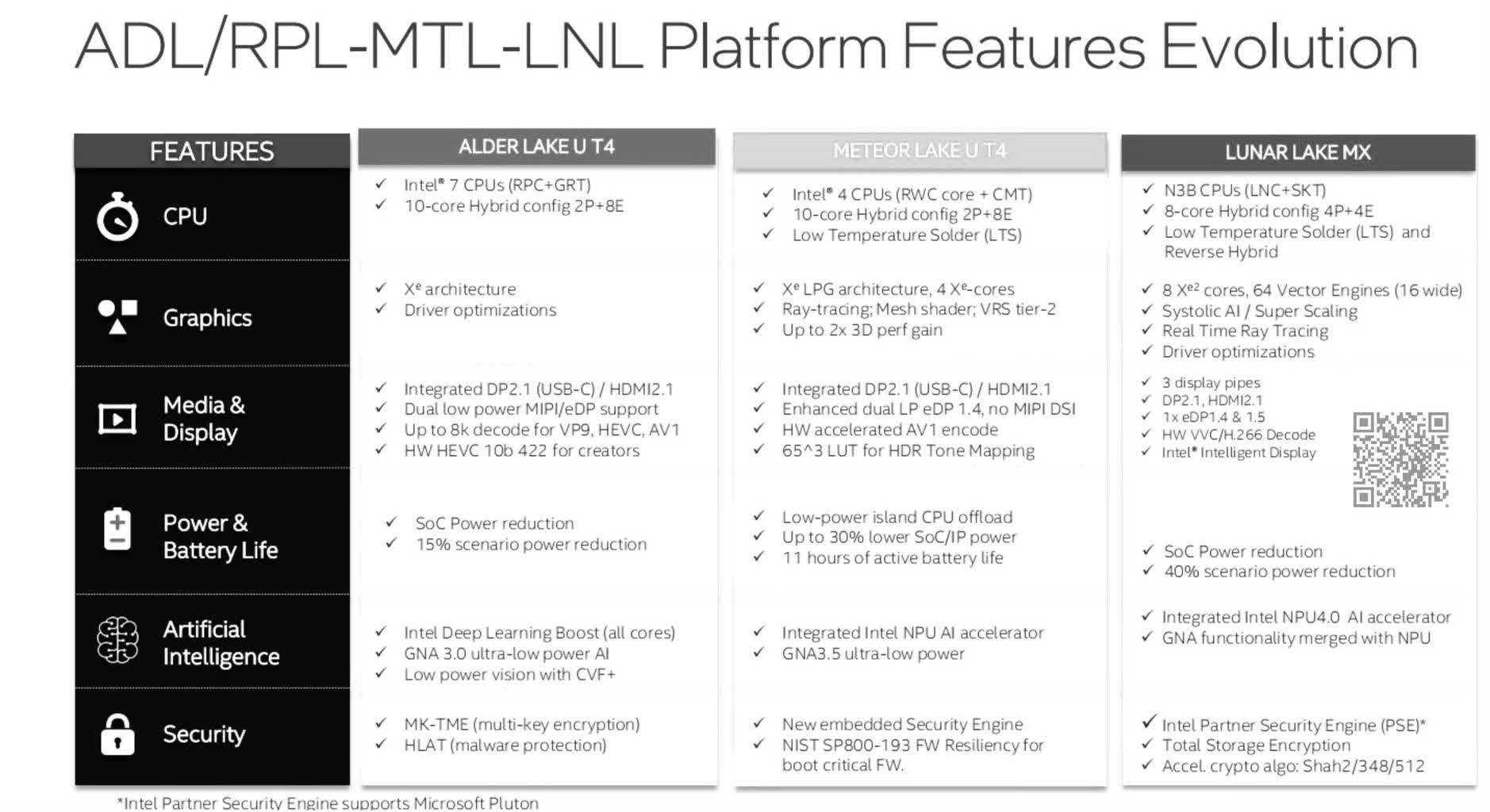

A recent leak by YuuKi_Ans on X.com (formerly Twitter) sheds more light on Team Blue's goals when it comes to low-power architectures like Lunar Lake-MX. While architectures like Arrow Lake will mostly feature improvements meant to boost gaming performance and show off the benefits of Intel's 20A process node and gate-all-around transistor technology, Lunar Lake-MX is shaping up to be more of a direct competitor to Apple M-series chipsets, with a focus on maximizing performance-per-watt for thin and light laptops.

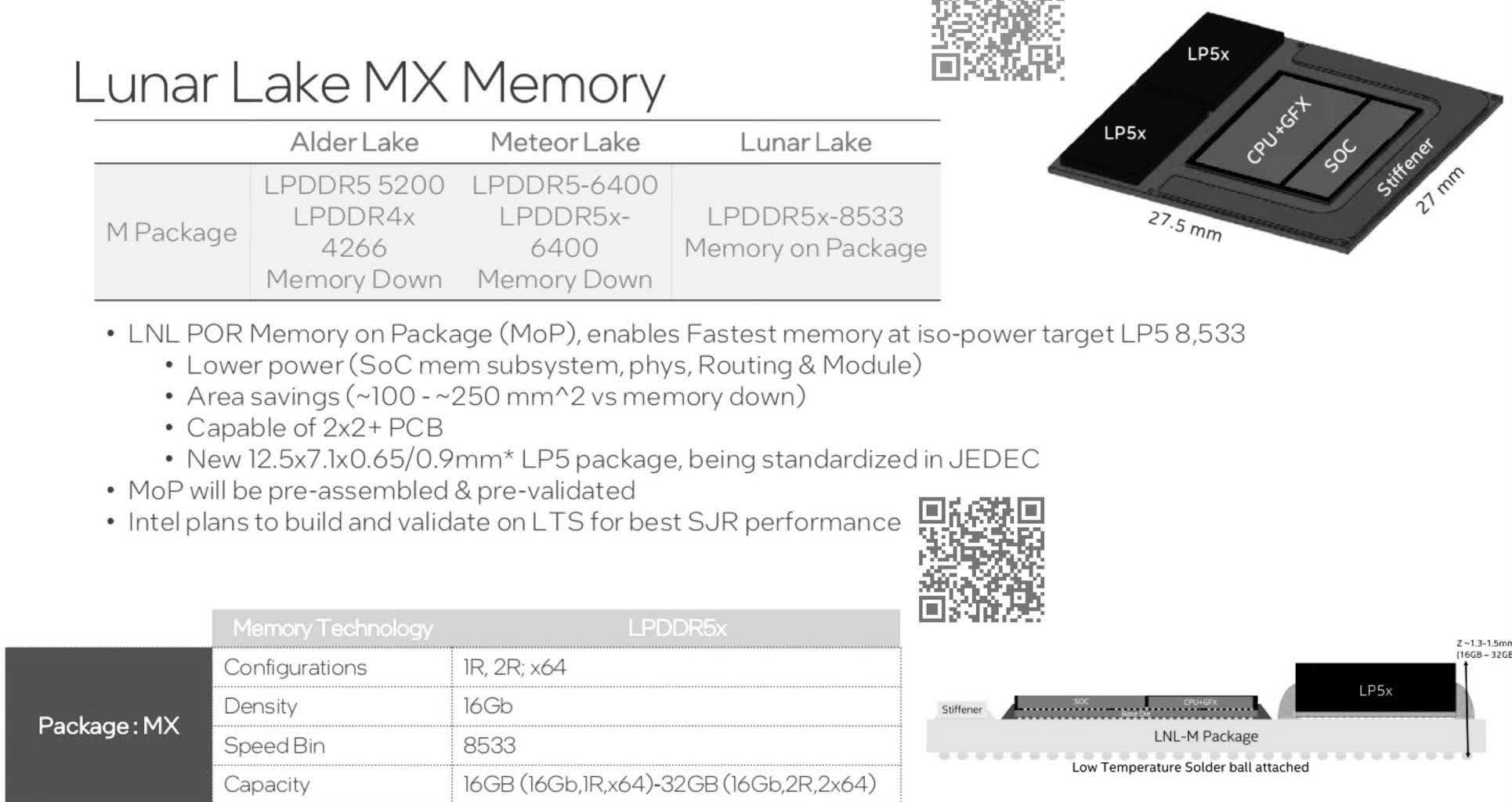

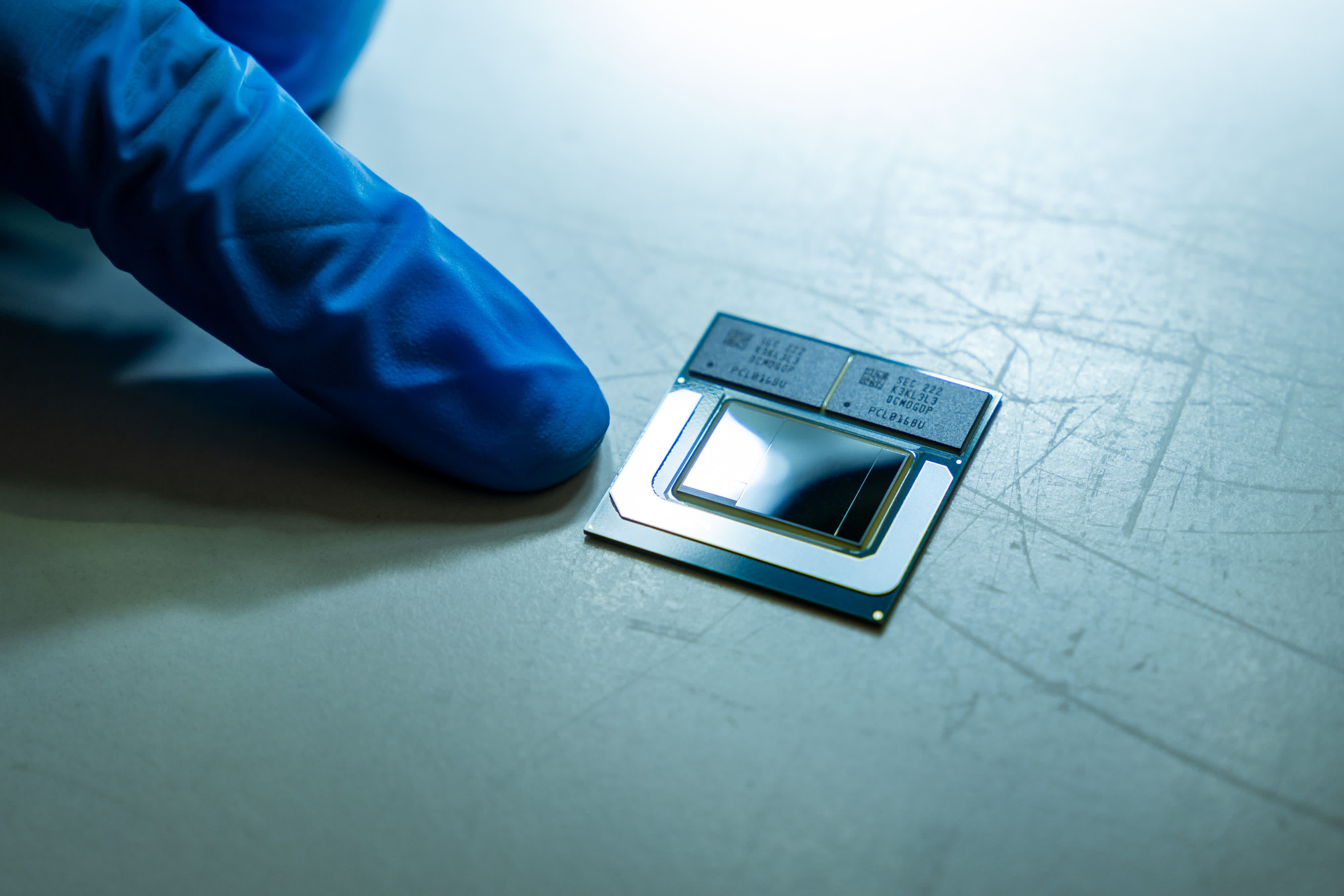

The leaked slides suggest that Lunar Lake-MX will continue the trend of multi-chip design that we've seen with Alder Lake mobile CPUs. Notably, Intel is taking a page from Apple's silicon book and soldering memory right next to the CPU. It looks like the Lunar Lake-MX UP4 package will be larger than the Alder Lake UP4 and Meteor Lake UP4 counterparts to accommodate two LPDDR5X-8533 memory chips for up to 32 gigabytes of total capacity over a 160-bit, dual-channel interface.
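To put those memory figures in perspective, here is a rough back-of-the-envelope sketch of the theoretical peak bandwidth they imply. It assumes the "8533" in LPDDR5X-8533 is the transfer rate in megatransfers per second and takes the 160-bit bus width from the leak at face value. The function name and the 128-bit comparison point are illustrative assumptions, not something stated in the slides.

```python
def peak_bandwidth_gb_s(transfer_rate_mt_s: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes).

    Each transfer moves bus_width_bits / 8 bytes across the interface.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mt_s * 1e6 * bytes_per_transfer / 1e9


# Figures from the leak: LPDDR5X-8533 over a 160-bit interface.
leaked = peak_bandwidth_gb_s(8533, 160)

# Assumption for comparison only: the same memory on a 128-bit bus.
narrower = peak_bandwidth_gb_s(8533, 128)

print(f"160-bit: {leaked:.1f} GB/s")    # 160-bit: 170.7 GB/s
print(f"128-bit: {narrower:.1f} GB/s")  # 128-bit: 136.5 GB/s
```

These are upper bounds on paper. Real-world throughput will be lower and depends on details the leak does not cover.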

Interestingly, the minimum memory capacity will be 16 gigabytes, which is a good amount considering you won't be able to add more after buying a device built around such a CPU. Meanwhile, Apple is still trying to justify including a more modest eight gigabytes of unified memory on base-level M3 chipsets using questionable claims of higher "efficiency" when compared to standard PC hardware with double the memory.

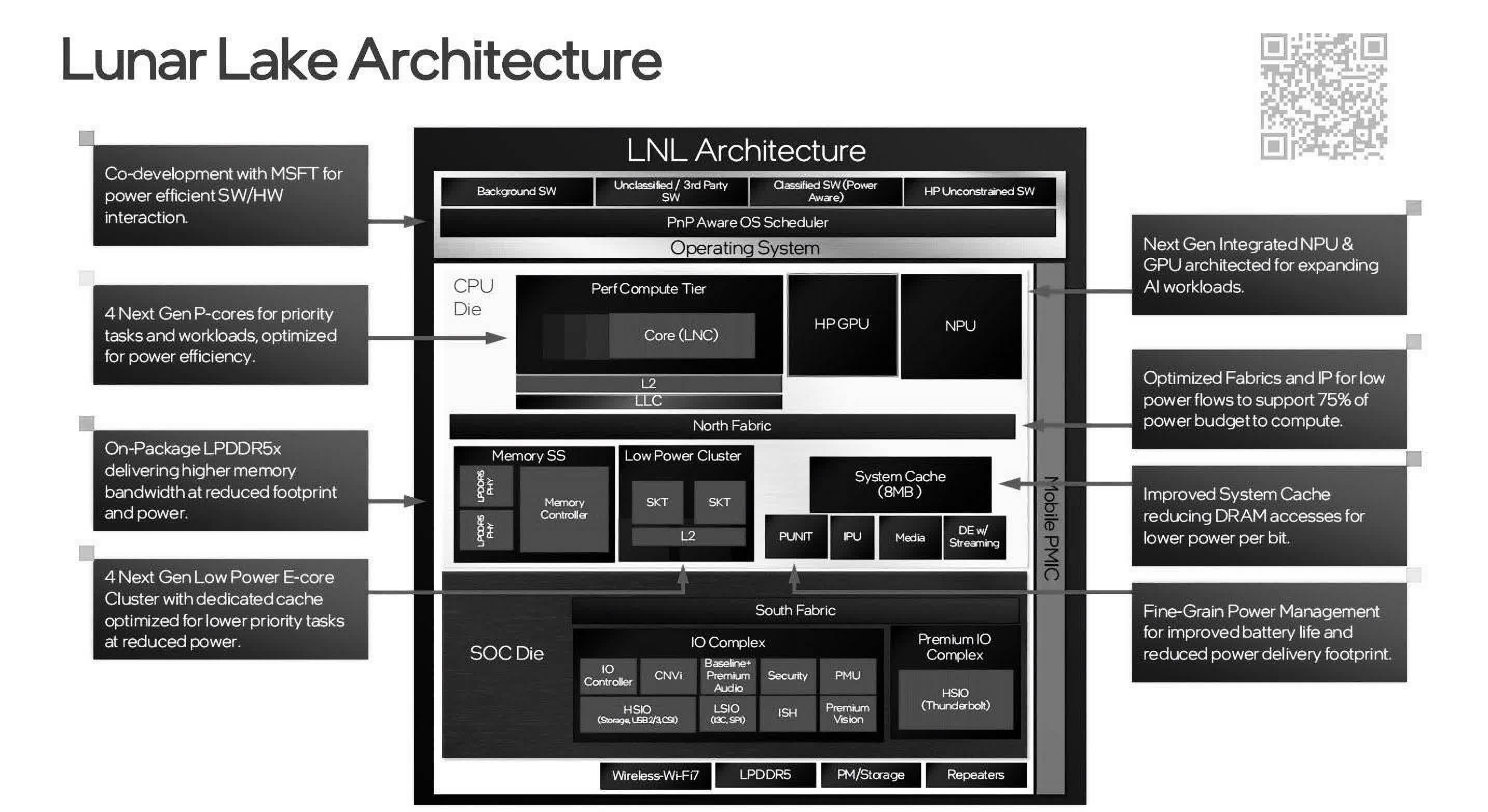

The compute muscle of Lunar Lake will feature four performance cores (Lion Cove) and four efficiency cores (Skymont) along with eight megabytes of "side cache," a faster neural processing unit (NPU 4.0), and eight Xe2 Battlemage graphics cores (64 vector engines with up to 2.5 teraflops of raw performance) with DirectX 12 Ultimate support – all linked together via a "North Fabric". The SoC tile will be essentially the good ol' PCH but will notably support PCIe 5.0, hardware-accelerated storage encryption, and up to three USB4/Thunderbolt 4 ports.

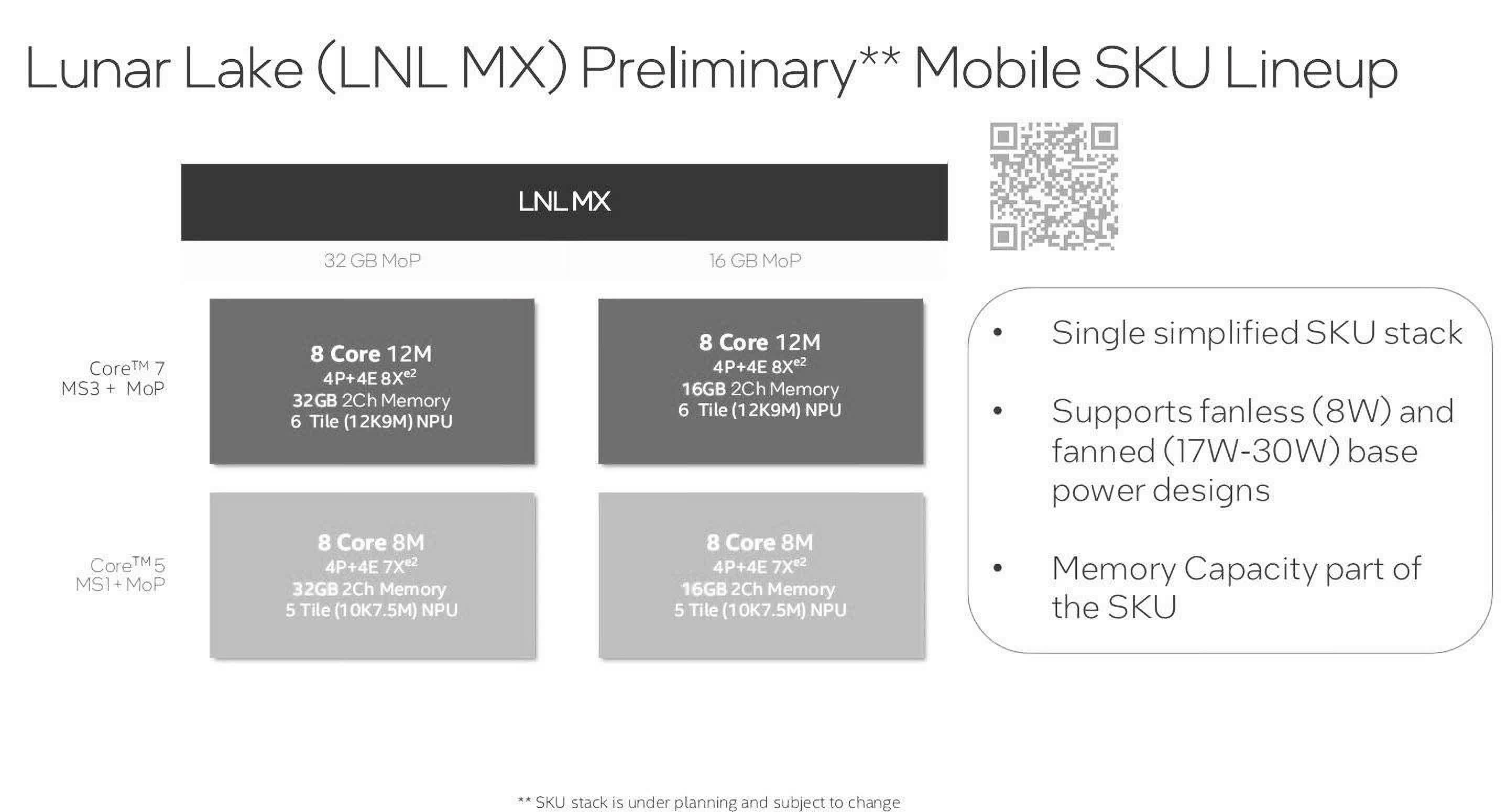

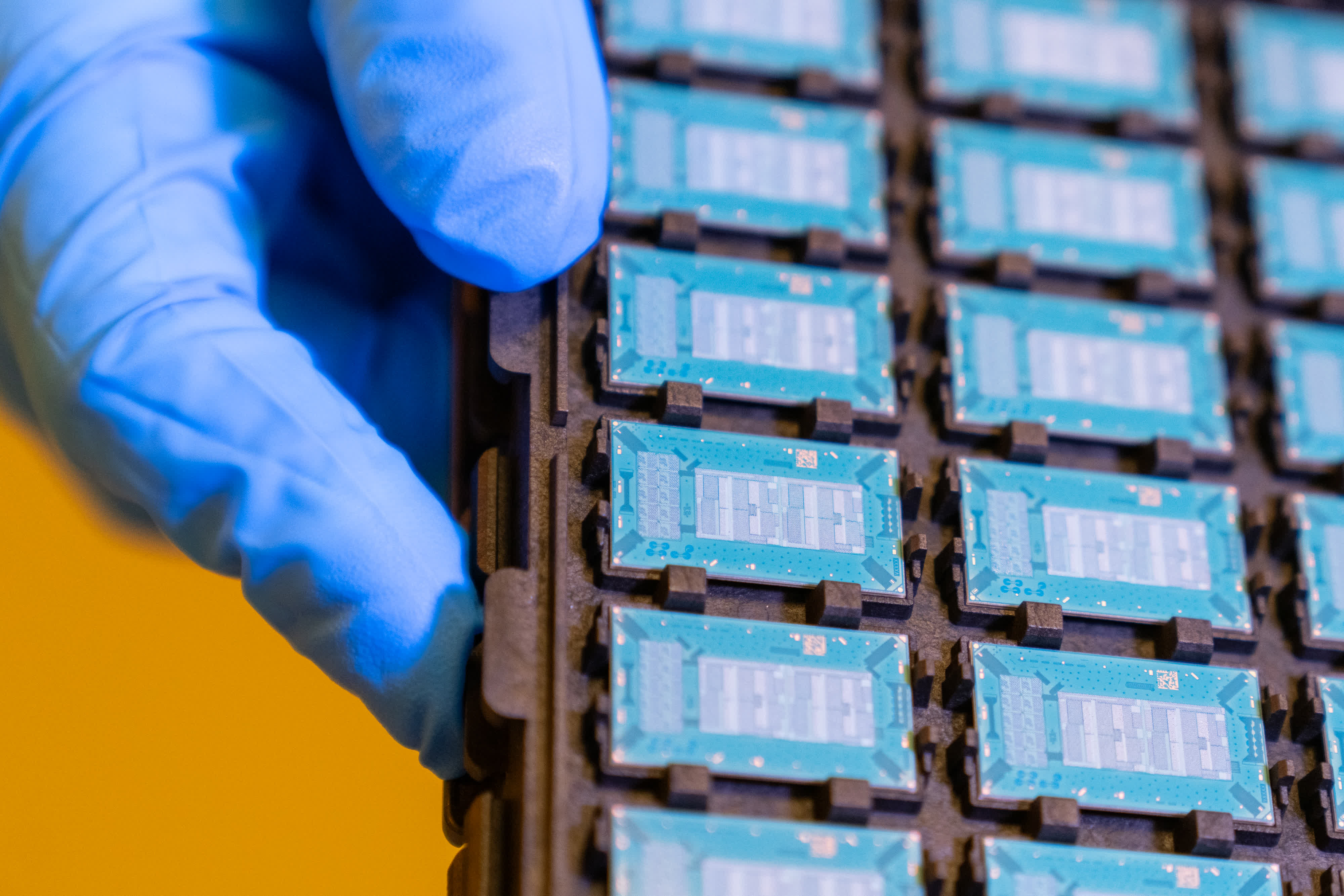

The slides suggest there will be four variants of Lunar Lake processors targeting base power envelopes between 8 W and 30 W – two models of Core 7 and two models of Core 5 with either 16 or 32 gigabytes of memory and slightly different iGPU and NPU configurations. The 8-watt versions of Lunar Lake will supposedly be able to operate in completely fanless devices.

If the leak is any indication, Intel will use TSMC's "N3B" node to make the CPU tile for Lunar Lake UP4 packages, while the upcoming Meteor Lake UP4 designs will be manufactured on the Intel 4 node.

Lunar Lake-MX processors are expected to debut sometime in 2024 or early 2025. By that time, it's possible we'll also see a new M-series chipset from Apple as well as the much-awaited Snapdragon X Elite from Qualcomm. It will be interesting to see how Intel's x86 designs stack up against those offerings. The compact design makes us think Lunar Lake-MX may also end up in some next-generation handheld consoles, which should lead to more competition in that space.

https://www.techspot.com/news/100904-lunar-lake-mx-leak-suggests-intel-serious-about.html