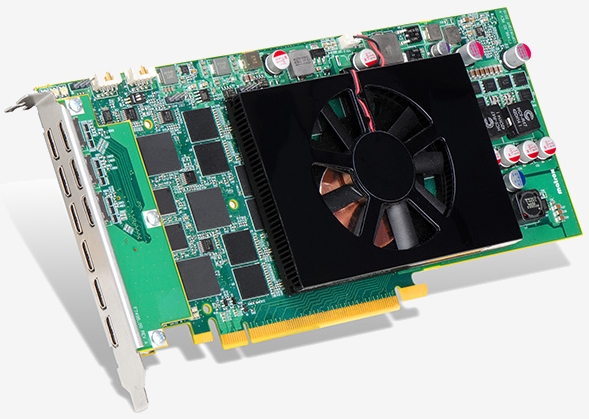

Matrox on Wednesday announced the latest addition to its popular C-Series line of multi-monitor graphics cards, the Matrox C900. The card is noteworthy as it's the world's first single-slot, nine-output graphics card.

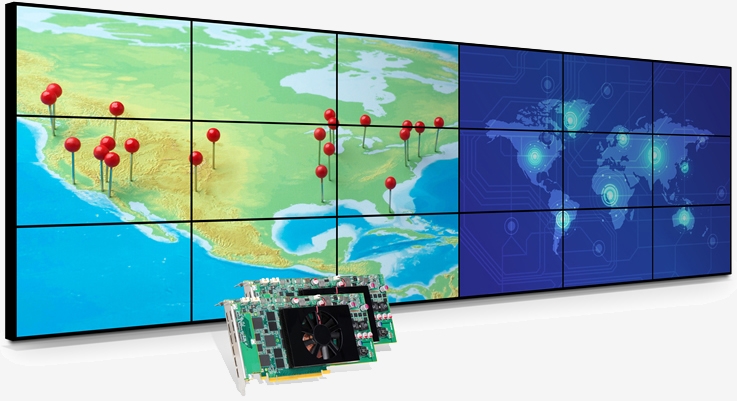

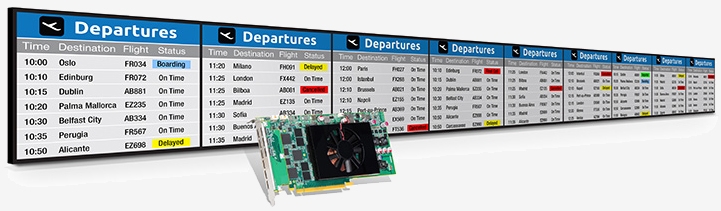

The Matrox C900 is a PCIe 3.0 x16 video card that packs 4GB of memory and nine mini-HDMI connectors while consuming just 75 watts. With those connectors, it can drive nine 1,920 x 1,080 displays at 60Hz in either a 9x1 or 3x3 video wall configuration. Matrox says the card offers a total desktop resolution of 5,760 x 3,240 in the latter configuration.
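For readers who want to check the math, the combined desktop size is simply the panel resolution multiplied by the grid dimensions. The snippet below is a minimal sketch of that arithmetic; the `Resolution` type and `wall_resolution` helper are hypothetical names made up for illustration and have nothing to do with Matrox's own software.

```python
from typing import NamedTuple


class Resolution(NamedTuple):
    """Width and height in pixels (hypothetical helper type)."""
    width: int
    height: int


def wall_resolution(columns: int, rows: int,
                    panel: Resolution = Resolution(1920, 1080)) -> Resolution:
    """Return the combined resolution of a columns x rows grid of identical panels."""
    if columns < 1 or rows < 1:
        raise ValueError("a video wall needs at least one column and one row")
    return Resolution(panel.width * columns, panel.height * rows)


# The two nine-display layouts mentioned in the article.
grid = wall_resolution(columns=3, rows=3)
strip = wall_resolution(columns=9, rows=1)

print(f"3x3 wall: {grid.width} x {grid.height}")    # 5760 x 3240
print(f"9x1 wall: {strip.width} x {strip.height}")  # 17280 x 1080
```

Both layouts come to the same total pixel count, since each uses nine 1080p panels; only the shape of the desktop changes.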

If that's not enough, the card can be paired with another C900 card or a Matrox C680 six-output board to push 18- or 15-screen video walls, respectively.

The company also tells us the card supports digital audio through HDMI, is DirectX 12 and OpenGL 4.4 compliant, and is compatible with Matrox Mura IPX Series 4K capture and IP encoder and decoder cards.

As you've likely surmised, a card of this caliber is best suited for signage installations in retail, corporate, entertainment and hospitality environments. Matrox also says it'd be ideal for a control room video wall in security, process control and transportation environments.

Matrox said its C900 will be publicly demonstrated at ISE 2016 in Amsterdam from February 9 through February 12. The card will be available sometime in the second quarter, we're told. No word yet on pricing.

https://www.techspot.com/news/63702-matrox-c900-single-slot-nine-output.html