The world is full of networking protocols – the ways in which electronic equipment and computers communicate. A big use of these protocols can be found in data centers, especially among the hyperscalers like Google and Amazon.

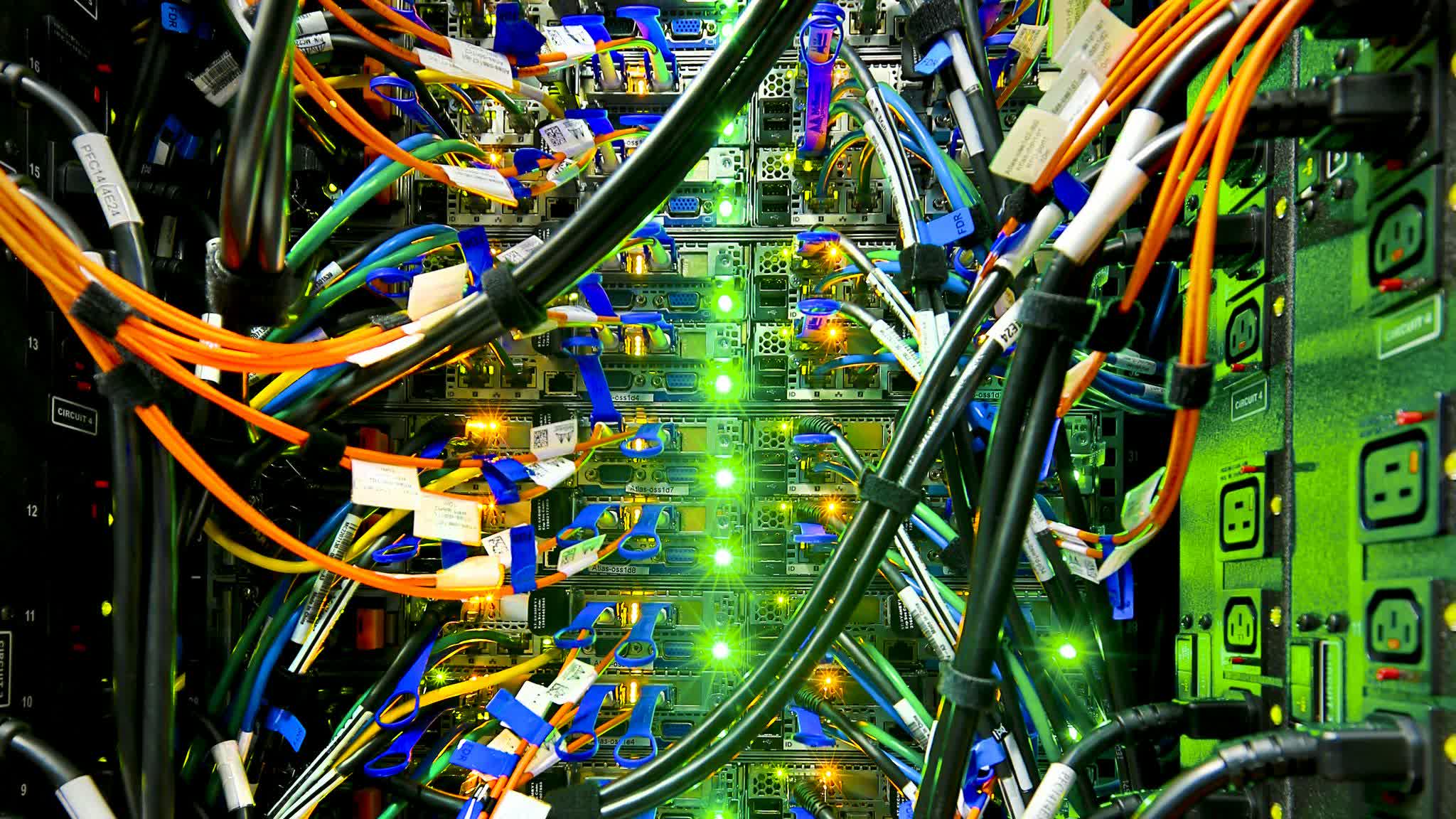

Connecting all the servers inside a data center is a high-intensity networking operation, and for decades these operators have used the most common communication protocols, like Ethernet, to physically connect all those servers and switches. (Yes, there are many others still in use, but Ethernet makes up the majority of what we are talking about.) Ethernet has been around a long time because it is highly flexible, scalable and all the other -bles. But as with all things in technology, it has its limitations.

Editor's Note:

Guest author Jonathan Goldberg is the founder of D2D Advisory, a multi-functional consulting firm. Jonathan has developed growth strategies and alliances for companies in the mobile, networking, gaming, and software industries.

Earlier this year, Google unveiled a new networking protocol called Aquila, in which it seems to be focusing on one big limitation of Ethernet – latency in the network, or the delay caused by the time it takes to move data around an Ethernet network. Admittedly, we are measuring latency in millionths of a second (microseconds, μs), but at the scale we are talking about, these delays add up.

In Google's words:

We are seeing a new impasse in the datacenter where advances in distributed computing are increasingly limited by the lack of performance predictability and isolation in multi-tenant datacenter networks. Two to three orders of magnitude performance difference in what network fabric designers aim for and what applications can expect and program to is not uncommon, severely limiting the pace of innovation in higher-level cluster-based distributed systems.

Parsing through this dense paper, two questions stood out for us.

First, what applications is Google building that are so complicated that they require this solution? Google is famous for its effort to commercialize distributed computing solutions. They invented many of the most common tools and concepts for breaking up big, complex computing problems into small, discrete tasks that can be done in parallel. But here, they are looking to centralize all that.

Instead of discrete tasks, Google is building applications that are closely linked and interdependent, so much so that they need to share data in such a way that microseconds bog down the whole thing by "two to three orders of magnitude".

The answer is likely "AI neural networks" – which is a fancy way of saying matrix multiplication. The bottleneck that many AI systems face is that when calculating massive models, the different stages of the math affect each other – one calculation needs the solution to another calculation before it can complete.
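To make that dependency concrete, here is a minimal Python sketch. It is not taken from Google's paper: the tiny matrices, the `forward` helper and the 10 μs per-hop latency are all made-up assumptions, chosen only to show why a chain of dependent matrix multiplications pays the network delay once per step instead of hiding it behind parallel work.

```python
# Illustrative only: the layer sizes and per-hop latency are invented numbers,
# and forward() is a hypothetical helper, not anything described in the Aquila paper.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def forward(x, layers, hop_latency_us):
    """Run x through each layer in order, as if each layer lived on a different machine.

    Layer k cannot start until layer k-1 has finished and shipped its output
    across the network, so the assumed network delay is paid once per layer.
    """
    network_wait_us = 0.0
    for weights in layers:
        x = matmul(x, weights)             # needs the previous layer's result
        network_wait_us += hop_latency_us  # assumed cost of shipping the result onward
    return x, network_wait_us

layers = [
    [[1, 2], [3, 4]],
    [[0, 1], [1, 0]],
    [[2, 0], [0, 2]],
]
output, wait_us = forward([[1, 1]], layers, hop_latency_us=10.0)
print(output, wait_us)
```

Because each multiplication consumes the output of the one before it, the three steps cannot overlap, and the time spent waiting on the network grows in direct proportion to the number of dependent steps.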

Dig into the travails of startup AI chip companies and many of them founder on these sorts of issues. Google must have models so big that these inter-dependencies become bottlenecks that can only be solved at data center scale. A likely candidate here is that Google needs something like this to train autonomous driving models – which have to factor in huge numbers of inter-dependent variables. For example, is that object on Lidar the same as what the camera is seeing, how far is it, and how long until we crash into it?

It is also possible that Google is working on some other very intense calculations. The paper repeatedly discusses High Performance Computing (HPC), which is typically the realm of supercomputers simulating the weather and nuclear reactions. It is worthwhile to think through what Google is working on here because it will likely be very important somewhere down the line.

That being said, a second factor that stood out for us in this paper is a sense of extreme irony.

We found this paragraph in particular to be highly humorous (which definitely says more about our sense of humor than anything else):

The key differences in these more specialized settings relative to production datacenter environments include: i) the ability to assume single tenant deployments or at least space sharing rather than time sharing; ii) reduced concerns around failure handling; and iii) a willingness to take on backward incompatible network technologies including wire formats.

The point here is that Google wanted Aquila to provide dedicated networking paths for a single user, to provide guaranteed delivery of a message, and to support older communications protocols. There is, of course, a set of networking technologies that already do this – we call them circuit switching, and they have been used by telecom operators for over a hundred years.

We know that Aquila is very different. But it is worth reflecting on how much of technology is a pendulum. Circuit switching gave way to Internet packet switching over many painful, grueling decades of transition. And now that we are at the point where Internet protocols power pretty much every telecommunications network out there, the state of the art is moving back to deterministic delivery with dedicated channels.

The point is not that Google is regressive, merely that technology has trade-offs, and different applications require different solutions. Remember that the next time someone wages a holy war over one protocol or another.

Google is not alone in seeking out something like this. Packet switching in general, and the Internet in particular, have some serious limitations. There are dozens of groups in the world looking to address these limitations. These groups range from Internet pioneers like Professor Tim Berners-Lee and his Solid initiative to Huawei's New IP (a slightly different view of that).

Those projects obviously have very different objectives, but all of them suffer from a problem of overcoming a world-spanning installed base. By contrast, Google is not looking to change the world, just its portion of it.

https://www.techspot.com/news/96335-networking-google-aquila-more-things-change.html