Why it matters: Modern GPUs have pushed the venerable 8-pin Molex connector to its limits. Nvidia abandoned it with this generation's Founders Edition cards, which use a proprietary 12-pin connector; AIBs (add-in board partners) must choose between overstuffing hungry cards with three connectors or starving them with two.

A new power connector, called 12VHPWR, solves that problem by delivering as much power as four 8-pin Molex connectors: 600W. It's part of the PCIe 5.0 standard, and it could start appearing on GPUs next year.
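To put the 600W figure in perspective, here's a rough Python sketch that counts how many connectors a card of a given power draw would need. It assumes each 8-pin connector is rated for 150W (the value implied by four of them matching 600W) and ignores the extra power a PCIe slot can supply; the helper names are hypothetical.

```python
import math

# Assumption: each 8-pin connector supplies 150 W (600 W / 4).
# Slot power is deliberately ignored in this simplified sketch.
EIGHT_PIN_WATTS = 150
TWELVE_VHPWR_WATTS = 600  # rated maximum for one 12VHPWR connector


def eight_pin_connectors_needed(board_power_w: float) -> int:
    """Hypothetical helper: number of 8-pin connectors covering board_power_w."""
    return math.ceil(board_power_w / EIGHT_PIN_WATTS)


def twelve_vhpwr_connectors_needed(board_power_w: float) -> int:
    """Hypothetical helper: number of 12VHPWR connectors covering board_power_w."""
    return math.ceil(board_power_w / TWELVE_VHPWR_WATTS)


for watts in (300, 450, 600):
    print(f"{watts} W: {eight_pin_connectors_needed(watts)} x 8-pin "
          f"or {twelve_vhpwr_connectors_needed(watts)} x 12VHPWR")
```

Under these assumptions, a 450W card would need three 8-pin connectors but only one 12VHPWR.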

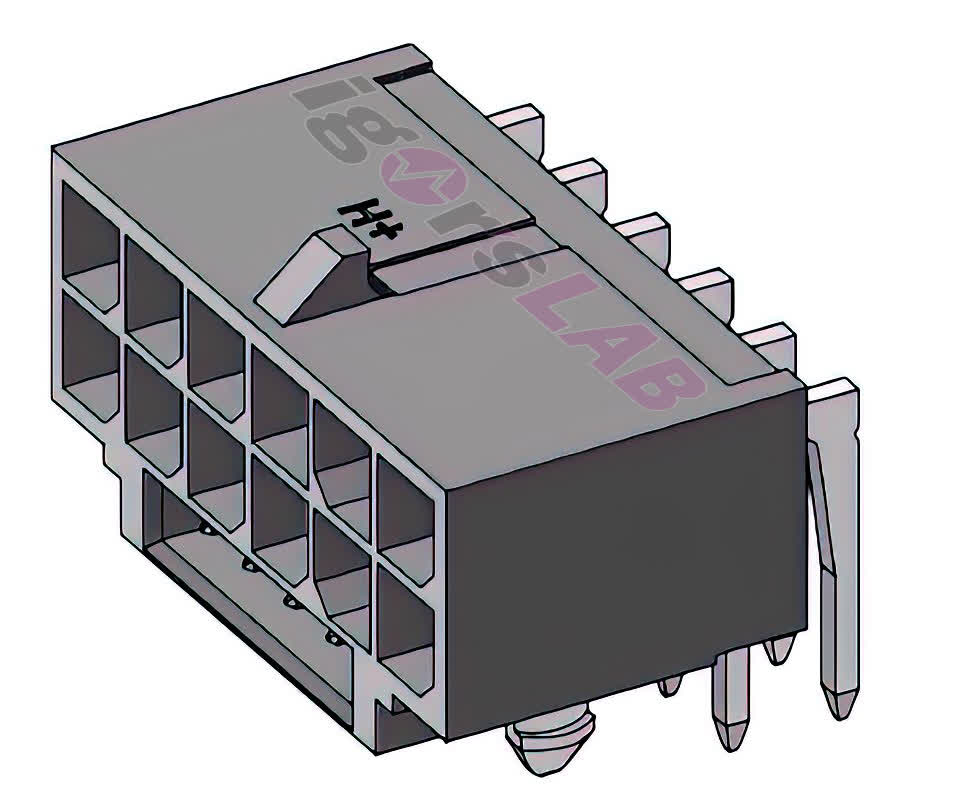

Some manufacturers have already listed their 12VHPWR plugs and cables, hence the pictures. But the credit for most of the details goes to Igor's Lab, which learned about the connector from power supply manufacturers and AIBs. The latter were relieved to be moving on from the old 8-pins.

The 12VHPWR connector has 12 power pins, spaced 3 mm apart instead of the 4.2 mm spacing used by Molex. It also has four tiny contact pins on the underside to carry sideband signals, and a latch on top that locks the plug in place.

The 12 power pins are split into six +12 V supply pins and six ground returns, and each pin can carry at least 9.2 A. With all six supply pins active, the connector can carry a total of 55.2 A, or 662.4W at 12 V. Even so, it's rated only for GPUs that draw no more than 600W, which leaves roughly 10% of headroom as a safety margin.
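The arithmetic behind those figures can be checked with a short Python sketch. It assumes that half of the 12 power pins carry +12 V while the other half are ground returns, so only six pins count toward the current budget, with 9.2 A per pin and a 600W rating; the variable names are illustrative only.

```python
# Assumptions: 6 of the 12 power pins are +12 V supply pins (the other 6 are
# ground returns), each rated at 9.2 A, with an official 600 W limit.
SUPPLY_PINS = 6
AMPS_PER_PIN = 9.2
VOLTS = 12.0
RATED_WATTS = 600.0

total_amps = SUPPLY_PINS * AMPS_PER_PIN        # about 55.2 A
capacity_watts = total_amps * VOLTS            # about 662.4 W
headroom = capacity_watts / RATED_WATTS - 1    # about 0.104, i.e. roughly 10%

print(f"{total_amps:.1f} A, {capacity_watts:.1f} W, {headroom:.1%} headroom")
# prints: 55.2 A, 662.4 W, 10.4% headroom
```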

Impressively, at 19 mm wide, it's only slightly wider than a single 8-pin connector. It does require beefier cables, though, made of more premium materials.

At the moment, there aren’t any GPUs that require the full 600W. The first GPU that’s rumored to use the 12VHPWR connector is the (unconfirmed) RTX 3090 Ti, which might draw 450W.

But the connector might be used to its fullest sooner than you'd expect. AMD's and Nvidia's next-generation data center GPUs are believed to use power-hungry chiplet designs and to be twice as large as their current offerings, which already consume 250-300W.

Masthead credit: Vagelis Lnz

https://www.techspot.com/news/91668-next-gen-power-connector-gpus-can-handle-up.html