Lol, kinda sad that the best DLSS implementation so far has been wasted on a woke trash of a game :/. Oh well, let's hope Cyberpunk 2077 will also feature DLSS, even via a later patch; that would certainly help boost RTX sales through the roof.

Anyone who thinks the whole AI image enhancement thing is a gimmick had better check out some clips from 2kliksphilip.

In short, AI can take an image at 1/3 the size of the original and upscale it so that it looks better than the original, which is quite mind-blowing actually. However, it also has some flaws, just like DLSS, such as shimmering...

You do realize that, say, for the example above, the algorithm didn't necessarily make the blurry image accurate, right? (Well, it is up to a point.) The way this tech works is that the rest of the image is filled in based on a library that most likely doesn't contain that image. So it uses pieces from other, similar images to make it look acceptably clear, but the result won't necessarily be accurate to the original. There's a difference between "pleasing to the eye" and "accurate to the original" in this regard. The more detail the machine learning has to fill in, the less accurate to the original the image will become. The "shimmering" is likely a result of these various random "similar" bits being added from the library. Still pictures work best. Sometimes "pleasing to the eye" is exactly what we are after, though, when accuracy is less important. (In other words, this has limited use as a magical forensic tool that can pull out data that doesn't exist in the image, although it works well enough as a basic image enhancer, up to a point.)

If you go to 12:10, you can see a blurry brick chimney... then wait for the AI version: it's an "alligator skin" chimney, not accurate. DLSS has this flaw at its core because it is inherent in the technology.
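To make the "pleasing vs. accurate" point concrete, here is a minimal Python sketch. It is a toy model, not how DLSS or any particular AI upscaler works internally; the helper names and the nearest-neighbour upscaler are hypothetical stand-ins. It shows that downsampling throws information away: two different high-res images can collapse to the same low-res image, so any upscaler, however clever, has to output one guess for both and can match at most one of them.

```python
# Toy illustration (hypothetical helpers, not DLSS): downsampling loses
# information, so an upscaler cannot know which original it came from.

def downsample_2x(img):
    """Average each 2x2 block of a grayscale image given as a list of rows."""
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
            for x in range(0, len(img[0]), 2)
        ]
        for y in range(0, len(img), 2)
    ]


def upscale_2x_nearest(img):
    """Stand-in upscaler: repeat each pixel into a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


# Two different 4x4 "originals": fine checkerboard detail vs. flat gray.
checker = [
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]
flat = [[127.5] * 4 for _ in range(4)]

low_checker = downsample_2x(checker)
low_flat = downsample_2x(flat)
print(low_checker == low_flat)  # True: both collapse to the same 2x2 gray image

# The upscaler only sees the low-res input, so it makes one guess for both.
guess = upscale_2x_nearest(low_checker)
print(guess == flat, guess == checker)  # True False
```

A learned upscaler would swap the flat guess for a more plausible-looking texture (the "alligator skin" effect), but it still returns a single answer per input, which is exactly why "pleasing to the eye" and "accurate to the original" can diverge.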

-------

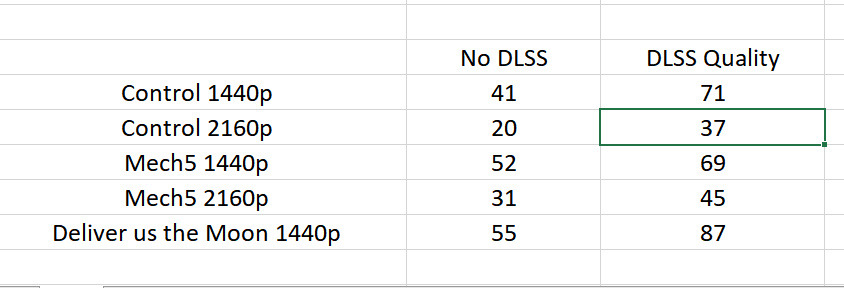

My main issue with DLSS is that it was touted as an AA technique and compared against other AA techniques, when it is not really a type of AA at all. AA, at its inception, was a "render at higher res, then downscale" technique, and it primarily still is, except that we now have fancy edge-detection algorithms, etc., that try to lessen the performance hit. DLSS does the opposite: you set your resolution to, say, 1440p, but the game is actually rendered at 1080p and then upscaled, with selective artificial sharpening applied to certain (sometimes wrong) areas.
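For anyone unfamiliar with the distinction, here is a toy Python sketch of the two pipelines described above. The scene (a single hard diagonal edge), the 8x8 target size, the 4x box-filter supersampling and the nearest-neighbour upscaler are all assumptions for illustration; it does not model what DLSS actually does beyond "render lower, then upscale", and the sharpening step is left out.

```python
# Toy comparison (hypothetical scene and helpers): classic supersampling AA
# renders above the target resolution and downscales; the DLSS-style path
# described in the post renders below it and upscales. No neural network here.

def render(width, height):
    """Point-sample a hard diagonal edge once per pixel: 1.0 below it, 0.0 above."""
    return [
        [1.0 if (py + 0.5) / height < 0.6 * (px + 0.5) / width else 0.0
         for px in range(width)]
        for py in range(height)
    ]


def downscale(img, f):
    """Box filter: average each f x f block into one output pixel."""
    return [
        [
            sum(img[y + dy][x + dx] for dy in range(f) for dx in range(f)) / (f * f)
            for x in range(0, len(img[0]), f)
        ]
        for y in range(0, len(img), f)
    ]


def upscale_nearest(img, f):
    """Stretch the image by repeating each pixel into an f x f block."""
    return [
        [v for v in row for _ in range(f)]
        for row in img
        for _ in range(f)
    ]


def distinct_values(img):
    """Sorted list of the distinct pixel values in an image."""
    return sorted({v for row in img for v in row})


# Target resolution is 8x8 in both cases.
ssaa = downscale(render(32, 32), 4)          # render at 4x per axis, then downscale
upscaled = upscale_nearest(render(4, 4), 2)  # render at half res, then upscale

print(distinct_values(upscaled))  # [0.0, 1.0]: edge stays hard, just blockier
print(any(0.0 < v < 1.0 for row in ssaa for v in row))  # True: partial-coverage grays
```

Supersampling produces in-between grays wherever the edge only partly covers a pixel, which is what smooths the jaggies; stretching a lower-res render only makes the existing stair-steps bigger.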

If I render at 720p, upscale to 1080p, and it looks blurry, can I say that I applied anti-aliasing? Lol... no... that's the opposite of what AA is in essence. So calling DLSS an "AA solution" is a bit of a misnomer: the only "super sampling" happening was done on Nvidia's servers when they built the libraries; no super sampling is actually processed by DLSS itself. It's a selective sharpener, and that's about it. I hate deceptive marketing. One thing Jensen and Nvidia know how to sell is hype and awe. At least if I apply Radeon sharpening, the resolution I set is the resolution I get, and there's still no real performance hit.
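Assuming the usual 16:9 resolutions (1280×720, 1920×1080, 2560×1440), a quick pixel count shows how much of the output image is actually rendered by the game in the upscaling examples above:

```latex
\frac{1920 \times 1080}{1280 \times 720} = \frac{2\,073\,600}{921\,600} = 2.25,
\qquad
\frac{2560 \times 1440}{1920 \times 1080} = \frac{3\,686\,400}{2\,073\,600} = \frac{16}{9} \approx 1.78
```

So going from 720p to 1080p, only about 44% (1/2.25) of the output pixels are actually shaded; going from 1080p to 1440p, about 56% (9/16). The rest has to be filled in by the upscaler.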

I'd probably have less of a sour taste in my mouth if Nvidia had been honest about what it really is and what it was really designed for: only to make RT performance acceptable at a given "resolution" (in air quotes). That's really its only purpose... once RT performance becomes usable in the mainstream, DLSS probably won't be all that useful.

I can see other selective technologies, along the lines of Radeon Boost and that other Nvidia selective true-AA feature (I can't recall what it's called), actually being more useful tools.

Real-time RT still needs some time before it matures enough to be a solid feature. The next one or two generations should bring some significant improvements.