Why it matters: Nvidia's Ampere successor is nearly here, according to leaks, and it's going to be hot and hungry. GeForce RTX 4000 series cards, codenamed Ada Lovelace, could consume 50 to 100W more than their predecessors across the board, with flagship models breaking new ground.

Veteran leaker kopite7kimi, who nailed Ampere's specifications weeks before anyone else, has updated his forecasts for Ada Lovelace's power limits. Note that power limits are not the same as TDPs or TBPs: they're an upper bound that only comes into play when the card is overclocked. Even so, they're a useful guide to power consumption, albeit an overestimate.

Coming to torture our power supplies is the flagship AD102, with a limit of 800W. Going down a level, the desktop AD103 has a limit of 450W, the AD104 400W, and the AD106 260W. Historically, xx106 dies have powered entry-level GPUs and xx104 dies mid-range ones, but Nvidia could shake that up.

As you'd hope, the leaked laptop power budgets are tamer. Kopite reports that the mobile versions of the AD103 and AD104 have limits of 175W, and the AD106 has a limit of 140W. Because these are laptop parts, though, manufacturers will have a lot of leeway to tweak them.

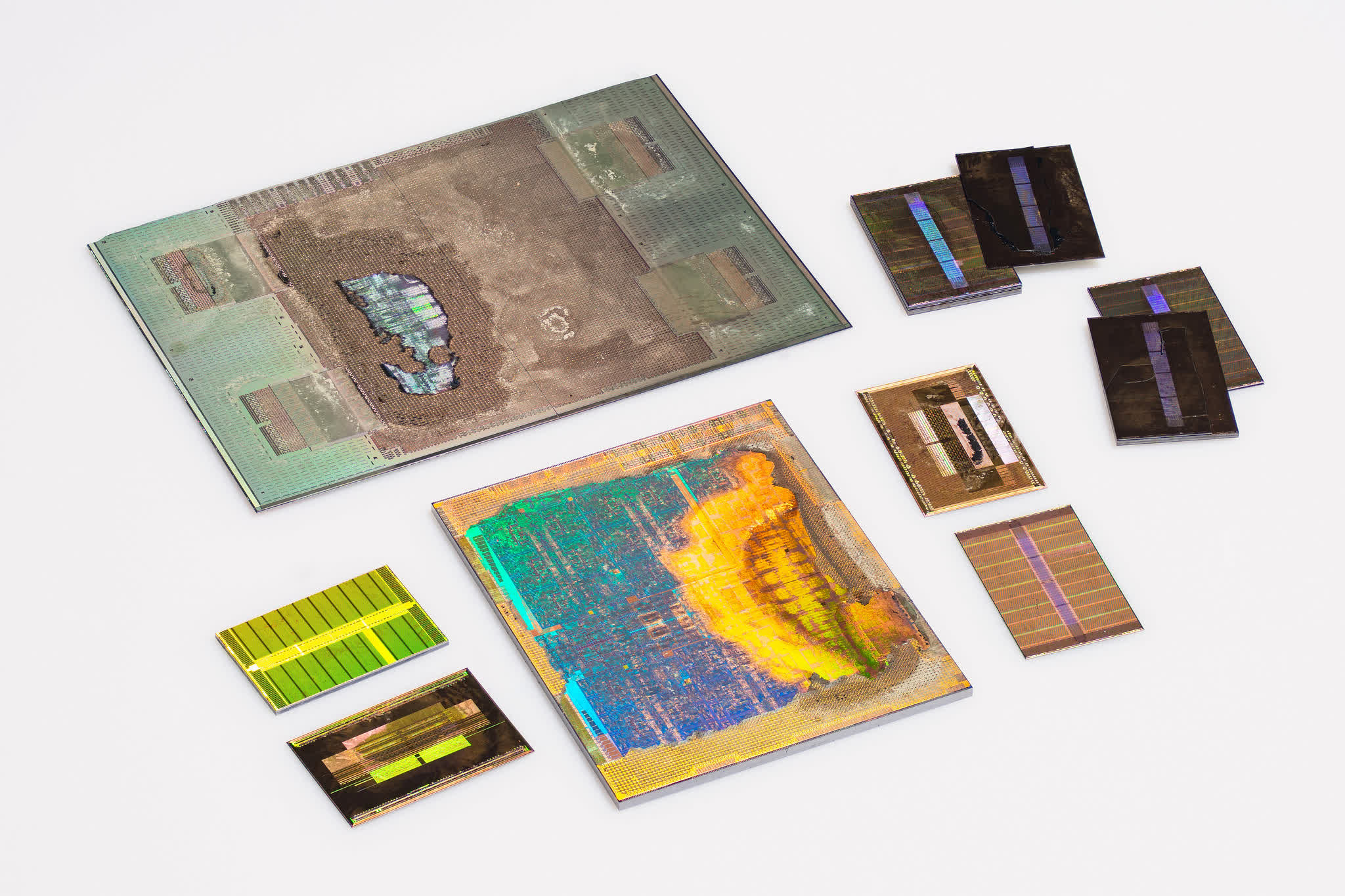

A disassembled Nvidia P100. Via Fritzchen Fritz.

Back to the number you're probably still reeling over: 800W. It's achievable with a pair of the new PCIe 5.0 power connectors, which can handle 600W each (if the card used 8-pin power connectors, it would need six!). But few power supplies on the market offer both two of those ports and that much power.
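The connector count is simple division rounded up. The sketch below reproduces it, assuming the 600W rating for the PCIe 5.0 connector quoted above and the standard 150W rating for an 8-pin PCIe connector (the 150W figure is our assumption, not part of the leak). The helper name `connectors_needed` is hypothetical.

```python
import math

# Per-connector ratings: 600 W for the new PCIe 5.0 connector (per the article),
# 150 W for a standard 8-pin PCIe connector (assumed, not from the leak).
CONNECTOR_WATTS = {"pcie5": 600, "8pin": 150}


def connectors_needed(board_power_w: float, connector: str) -> int:
    """Smallest number of connectors whose combined rating covers board_power_w.

    Ignores the 75 W the PCIe slot itself can supply, matching the
    back-of-the-envelope count in the text.
    """
    return math.ceil(board_power_w / CONNECTOR_WATTS[connector])


print(connectors_needed(800, "pcie5"))  # → 2
print(connectors_needed(800, "8pin"))   # → 6
```

Counting the 75W a PCIe x16 slot can deliver would trim the 8-pin tally to five (725W / 150W, rounded up), so "six" is the conservative, connectors-only figure.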

Cooling will also be an interesting challenge. But, setting those practical concerns aside for a moment, is an 800W ceiling that unreasonable for a GPU with 18,432 CUDA cores (according to the information from February's hack)?

At its 800W limit, a fully unlocked AD102 would draw 0.0434W per CUDA core, only marginally more than the 0.0419W per core drawn by the RTX 3090 Ti. As Steve pointed out in our RTX 3090 Ti review, that GPU is a power-hungry monster, but in theory the AD102 would be roughly as bad, not dramatically worse.
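The per-core figures are just power divided by core count. This sketch assumes the RTX 3090 Ti's 450W board power and 10,752 CUDA cores (our inputs, not stated in the leak), which reproduce the 0.0419W figure; the AD102 numbers come from the leak and February's hack. `watts_per_core` is a hypothetical helper, and watts per core is only a crude efficiency proxy.

```python
def watts_per_core(power_w: float, cuda_cores: int) -> float:
    """Power budget spread evenly across CUDA cores (a crude efficiency proxy)."""
    return power_w / cuda_cores


# AD102: leaked 800 W power limit, 18,432 CUDA cores.
ad102 = watts_per_core(800, 18_432)
# RTX 3090 Ti: assumed 450 W board power and 10,752 CUDA cores.
rtx_3090_ti = watts_per_core(450, 10_752)

print(f"{ad102:.4f}")        # → 0.0434
print(f"{rtx_3090_ti:.4f}")  # → 0.0419
```

Note that this compares the AD102's power limit against the 3090 Ti's rated board power, so it flatters the older card slightly.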

And remember, 800W is the (probable) hard limit, not the TDP. An earlier report said Nvidia was testing a 600W TDP for the RTX 4090, which would improve the GPU's efficiency and leave 200W of overclocking headroom.
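The same arithmetic applied to the reported 600W TDP shows where the efficiency gain would come from. This sketch assumes, purely for illustration, that the RTX 4090 uses a fully unlocked 18,432-core AD102; the leak does not confirm that configuration.

```python
POWER_LIMIT_W = 800      # leaked hard limit for AD102
REPORTED_TDP_W = 600     # TDP Nvidia was reportedly testing for the RTX 4090
AD102_CUDA_CORES = 18_432  # assumption: the 4090 ships with the full AD102

headroom_w = POWER_LIMIT_W - REPORTED_TDP_W
per_core_at_tdp = REPORTED_TDP_W / AD102_CUDA_CORES

print(headroom_w)               # → 200
print(f"{per_core_at_tdp:.4f}")  # → 0.0326
```

At that TDP the per-core figure would fall well below the 3090 Ti's 0.0419W, consistent with the efficiency improvement the report describes.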

https://www.techspot.com/news/94993-nvidia-geforce-rtx-4090-could-have-power-limit.html