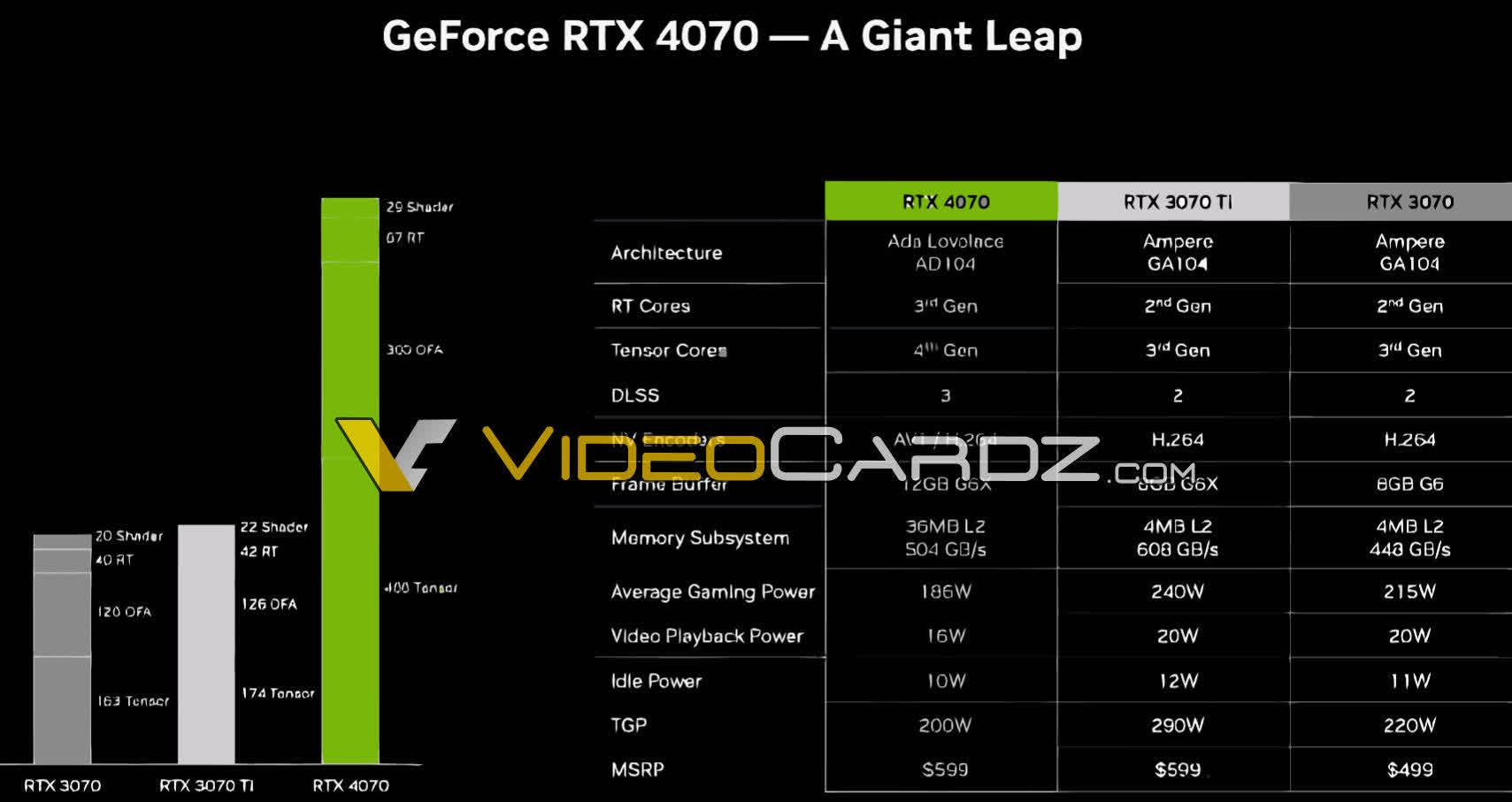

What just happened? Nvidia's fourth graphics card based on the Ada Lovelace architecture is almost here. According to chatter around the water cooler, the card is currently being put through its paces by hardware reviewers ahead of a planned launch on April 13 for $599. A recently leaked presentation slide shared by VideoCardz adds even more pieces to the puzzle.

According to the slide, the RTX 4070 (non-Ti) will ship with 12 GB of GDDR6X memory on a 192-bit interface and 36 MB of L2 cache. That's 4 GB more memory and nine times the cache of the RTX 3070 and RTX 3070 Ti, which both come with 8 GB of VRAM and a paltry 4 MB of L2 cache.

Cards will also be advertised with a 200W TGP (Total Graphics Power) but should average closer to 186W in real-world gaming scenarios, which is lower than the average consumption of both the RTX 3070 and 3070 Ti. For comparison, the standard RTX 3070 has a TGP of 220W while the Ti variant is rated at 290W. The RTX 3080 and its Ti variant have TGPs of up to 350W.
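For readers who want to check the generational math, here is a minimal Python sketch that works through the figures quoted above. The dictionary layout and the `compare` helper are illustrative assumptions, and the RTX 4070 numbers come from the leaked slide rather than confirmed specifications.

```python
# Figures as quoted in the article; the RTX 4070 values come from the leaked slide.
# The data layout and helper name are illustrative assumptions.
specs = {
    "RTX 4070":    {"vram_gb": 12, "l2_mb": 36, "tgp_w": 200},
    "RTX 3070":    {"vram_gb": 8,  "l2_mb": 4,  "tgp_w": 220},
    "RTX 3070 Ti": {"vram_gb": 8,  "l2_mb": 4,  "tgp_w": 290},
}
AVG_GAMING_W = 186  # leaked average gaming power draw for the RTX 4070


def compare(new, old):
    """Return VRAM gain (GB), L2 cache ratio, and TGP reduction (%) of new vs. old."""
    n, o = specs[new], specs[old]
    return {
        "vram_gain_gb": n["vram_gb"] - o["vram_gb"],
        "l2_ratio": n["l2_mb"] / o["l2_mb"],
        "tgp_cut_pct": round(100 * (o["tgp_w"] - n["tgp_w"]) / o["tgp_w"], 1),
    }


for old in ("RTX 3070", "RTX 3070 Ti"):
    print(old, compare("RTX 4070", old))

# Share of the rated TGP that the leaked gaming average represents
gaming_share_pct = round(100 * AVG_GAMING_W / specs["RTX 4070"]["tgp_w"], 1)
print("Gaming average as % of TGP:", gaming_share_pct)
```

Note that this compares rated TGP values only. The slide's claim about average gaming consumption on the older cards cannot be reproduced here, since those averages are not quoted.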

The slide, assuming it is legitimate, likely came from Nvidia's reviewer's guide, which accompanies early samples sent out to tech sites in preparation for their coverage. It's not uncommon for slides like these to find their way online early, especially if the source's anonymity can be guaranteed.

Related reading: GeForce RTX 4070 Ti vs. GeForce RTX 3080 - 50 Game Benchmark

Pricing is expected to play a major role in the popularity of the RTX 4070. At $599, it would be an interesting proposition considering the 4070 Ti starts at $200 more and the RTX 3080 has an MSRP of $699.

The 4070 Ti was originally set to arrive as the RTX 4080 12 GB at $899, but Nvidia "unlaunched" it after backlash from the gaming community. The hardware maker then rebranded the card and cut the price by $100, resulting in the RTX 4070 Ti.
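The pricing relationships mentioned above can be laid out as a quick Python sketch. The labels and variable names are illustrative assumptions, and the $599 figure for the RTX 4070 is the rumored launch price, not a confirmed one.

```python
# Prices in USD as quoted in the article; labels are illustrative assumptions.
prices = {
    "RTX 4080 12 GB (unlaunched)": 899,
    "RTX 3080 (MSRP)": 699,
    "RTX 4070 (rumored)": 599,
}

# The 4070 Ti is the rebranded 4080 12 GB with a $100 price cut.
prices["RTX 4070 Ti"] = prices["RTX 4080 12 GB (unlaunched)"] - 100

# How much more each card costs than the rumored RTX 4070 price
base = prices["RTX 4070 (rumored)"]
gaps = {name: price - base for name, price in prices.items() if price != base}

for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name}: ${prices[name]} (+${gap} over the RTX 4070)")
```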

https://www.techspot.com/news/98172-nvidia-rtx-4070-consumes-186w-while-gaming-leaked.html