Rumor mill: Nvidia’s upcoming RTX 4000 series of graphics cards, codenamed Lovelace, is rumored to arrive this September. But in addition to availability and price concerns, you’ll probably need a new PSU for the high-end models, which are said to consume over 800W of power.

We heard reports last year claiming the RTX 4000 cards would use some of the most power-hungry GPUs we’ve ever seen. That’s especially true of the AD102, which is rumored to have a total graphics power (TGP) of 800W or more.

The latest rumors come from prolific leakers Kopite7kimi and Greymon55, both of whom say they’ve heard claims that the AD102 GPUs will have scarily high TGPs. The former thinks the RTX 4080 will carry a 450W TGP and the RTX 4080 Ti will be 600W. The RTX 4090, meanwhile, will come with a monstrous 800W rating.

That's just a rumor.

I've heard 450/600/800W for 80/80Ti/90 before.

But everything is not confirmed.

— kopite7kimi (@kopite7kimi) February 23, 2022

Greymon55 is going for 450W/650W/850W for the AD102. He also adds that it’s unclear whether one model will have three TGP ranges or whether there will be three separate models.

I am not clear at the moment whether one model has three TGP ranges or whether it has three models but the TGP number of the AD102 is 450W-650W-850W, of course this is not the final specification and there may be some deviation.

— Greymon55 (@greymon55) February 23, 2022

The most power-hungry consumer Ampere card on the market right now is the RTX 3090, at 350W. The RTX 3090 Ti is expected to up that to 450W, though we don’t know when that much-delayed card will arrive.

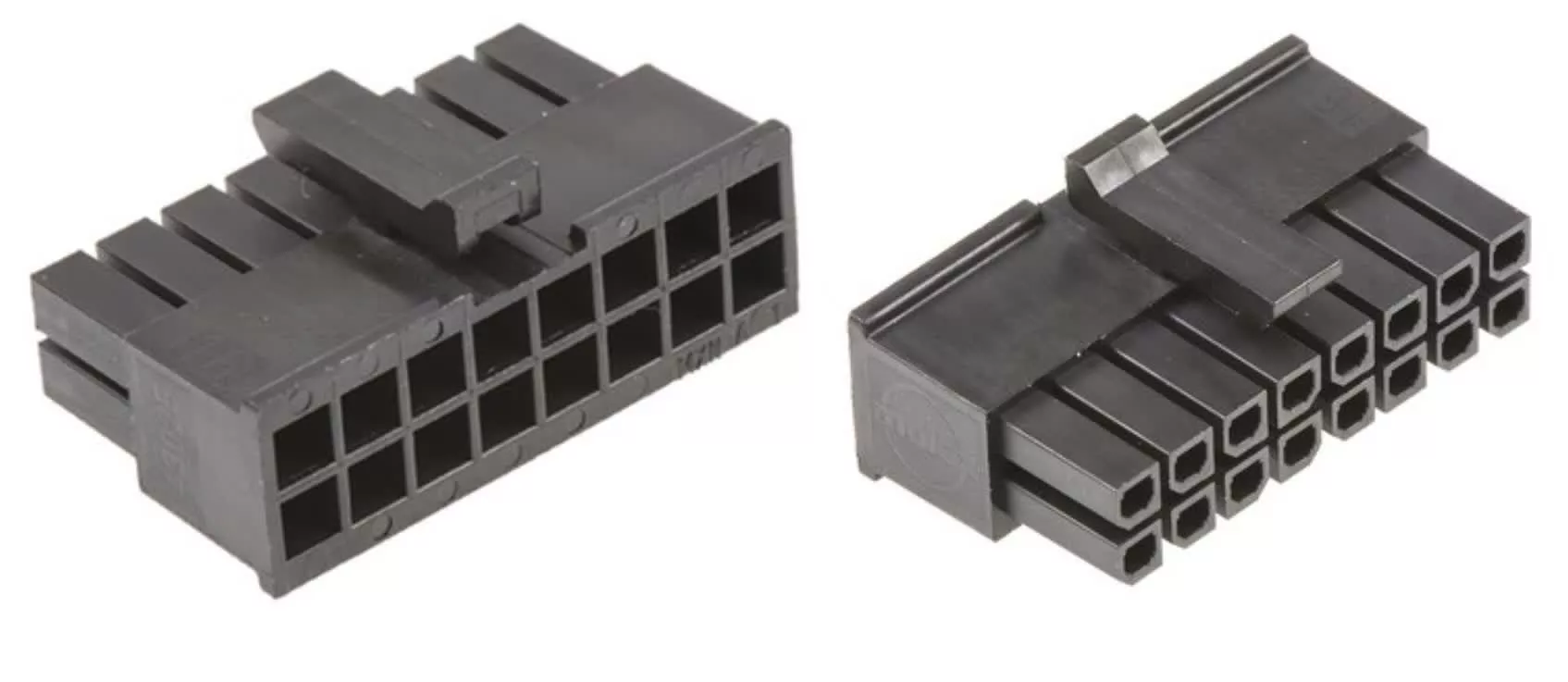

We also know that companies are working on (and some have released) PCIe Gen 5 power supplies ready for next-gen cards. The 16-pin power cable is set to replace existing 8-pin 150W power cables, though the 800W+ RTX 4000 cards may need two of them. There had been theories that the RTX 3090 Ti would use the PCIe Gen 5 connector, but it seems Nvidia is saving it for Lovelace.
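For a rough sense of the cable math, here is a minimal Python sketch. It assumes a 16-pin connector tops out at 600W (a figure not given in this article), takes the 150W 8-pin figure from above, and by default ignores any power drawn through the PCIe slot. The function name `cables_needed` and the constants are purely illustrative, and the TGP values are the rumored numbers, not confirmed specifications.

```python
import math

# Per-cable limits in watts.
EIGHT_PIN_W = 150    # existing 8-pin cable, as stated in the article
SIXTEEN_PIN_W = 600  # ASSUMPTION: maximum rating of the new 16-pin connector


def cables_needed(tgp_watts, per_cable_watts, slot_watts=0):
    """Return the minimum number of cables needed to cover a card's TGP.

    slot_watts is the power assumed to come from the PCIe slot itself;
    it defaults to 0, which is a deliberately conservative simplification.
    """
    remaining = max(tgp_watts - slot_watts, 0)
    return math.ceil(remaining / per_cable_watts)


# Rumored TGP figures from the leaks quoted above.
for tgp in (450, 600, 800, 850):
    eight = cables_needed(tgp, EIGHT_PIN_W)
    sixteen = cables_needed(tgp, SIXTEEN_PIN_W)
    print(f"{tgp}W: {eight} x 8-pin or {sixteen} x 16-pin")
```

Under these assumptions, the rumored 450W and 600W cards fit on a single 16-pin cable, while anything at 800W or above spills over onto a second one.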

These are all rumors and speculation, of course, but it’s not the first time we’ve heard similar claims. Nvidia previously said graphics card supply would improve in the second half of 2022, so let’s hope owning a capable PSU will be the only issue with Lovelace.

https://www.techspot.com/news/93535-rtx-4000-series-aka-nvidia-lovelace-rumored-feature.html