A couple of things. While it's implied, there is no actual statement of what cooling was used in either of the i9 tests. Stock cooling? Please explain.

How many times was each benchmark run? Are these numbers an average of the results? Did both tests use the same parts except for what was stated? Was it an open test case? Were they performed at the same time?

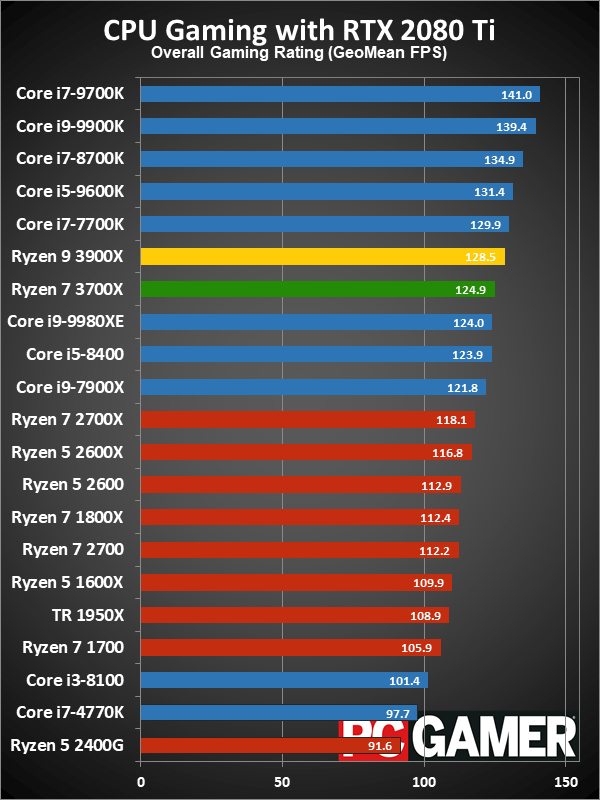

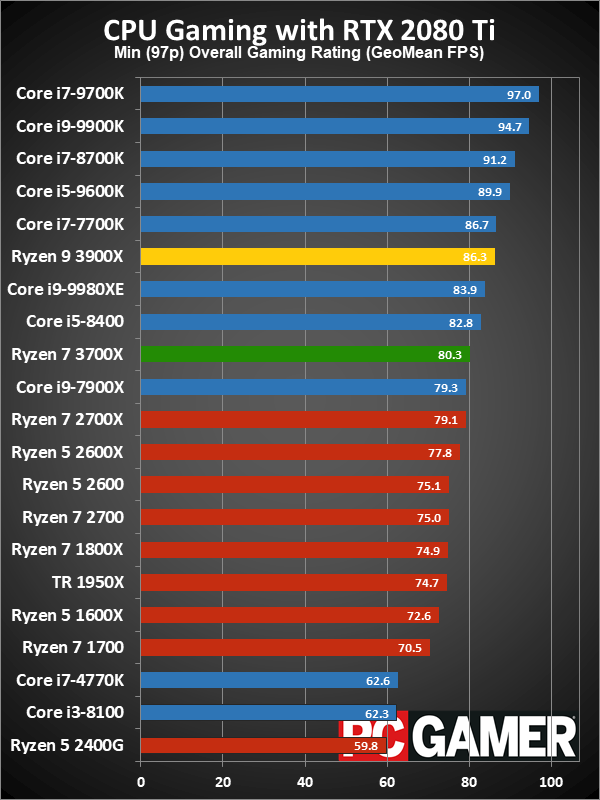

Is 1080p even considered a benchmark anymore? Why use a 2080 Ti for 1080p? Can we get CPU and GPU (GPU especially) usage percentages (high/low/average)?

Jesus, people act like it's tough to properly report findings. This just comes off as sloppy. I expected better, honestly.
