Bottom line: There's no word yet on whether Sony's new image sensors will find their way into consumer products, although with technologies like AR advancing, you have to think someone will give it a try at some point.

Sony on Thursday announced two new image sensors that it claims are the first in the world with built-in artificial intelligence processing. Putting AI processing directly on the image sensor affords multiple benefits, we’re told.

Localized, or edge, processing helps speed up the entire process, especially when used in conjunction with cloud services, as it reduces the amount of data that needs to be transmitted. This, combined with the fact that extracted information is output as metadata, reduces privacy concerns and security risks. The combo also lowers power consumption and communication costs.
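To get a rough feel for the data savings, here is a back-of-the-envelope sketch in Python. It uses the 12.3 effective megapixel figure quoted for these sensors; the 10-bit raw depth and the JSON metadata layout are assumptions made up for illustration and are not Sony specifications.

```python
import json

# Figures marked "assumed" are illustrative, not Sony specifications.
EFFECTIVE_PIXELS = 12_300_000   # 12.3 effective megapixels, per the article
BITS_PER_PIXEL = 10             # assumed raw bit depth


def raw_frame_bytes(pixels=EFFECTIVE_PIXELS, bits=BITS_PER_PIXEL):
    """Size of one uncompressed frame in bytes."""
    return pixels * bits // 8


def metadata_bytes(detections):
    """Size of a hypothetical JSON metadata payload describing one frame."""
    return len(json.dumps({"detections": detections}).encode("utf-8"))


# Hypothetical per-frame result: two people found, no pixels included.
detections = [
    {"label": "person", "score": 0.91, "box": [120, 80, 260, 420]},
    {"label": "person", "score": 0.87, "box": [610, 95, 740, 430]},
]

frame = raw_frame_bytes()
meta = metadata_bytes(detections)
print(f"raw frame: {frame / 1e6:.1f} MB")
print(f"metadata:  {meta} bytes")
print(f"reduction: roughly {frame // meta:,}x per frame")
```

Under these assumptions, a single uncompressed frame runs to several megabytes while the metadata describing it fits in a few hundred bytes, which is the gap the edge-processing argument relies on. Real-world savings would depend on compression, frame rate, and how much metadata is sent.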

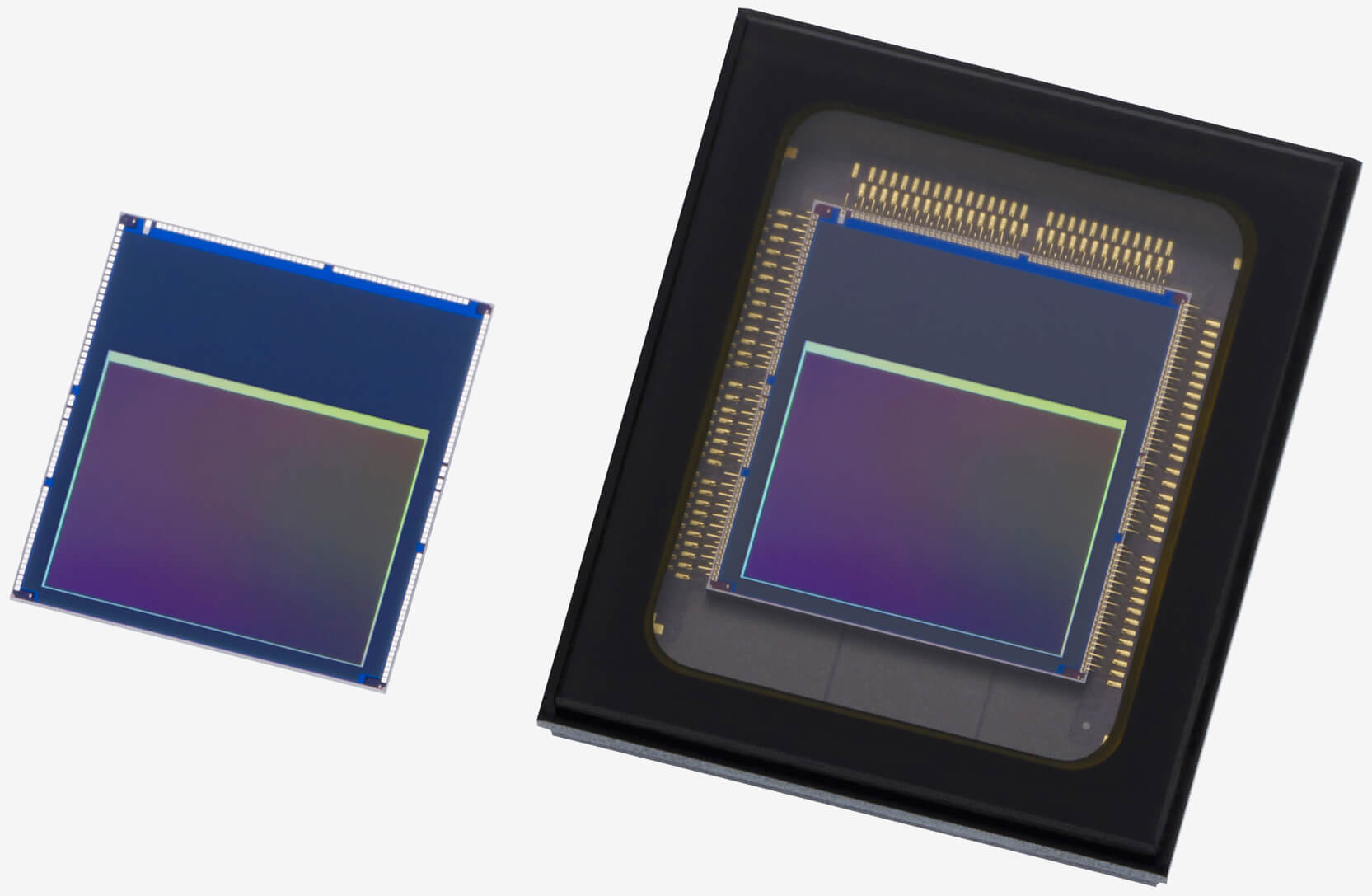

The new sensors are the IMX500 and the IMX501. Both are 1/2.3-type (7.857 mm diagonal) sensors with a 12.3 effective megapixel output. The IMX500 is a bare chip product while the larger IMX501 is a package product. Full chip specifics can be found over on Sony’s website.

A sensor of this nature has many potential uses. In a retail setting, for example, a camera could be set up at the entrance to count the number of shoppers entering the store. When installed on a shelf, it could help detect stock shortages, and when mounted in the ceiling, it could help create a heat map showing where people tend to gather.
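As a loose illustration of the heat map idea, the sketch below bins person positions into a coarse grid. The input format (normalized x, y coordinates reported as metadata) and the grid size are hypothetical; Sony has not published this as the sensor's output format.

```python
from collections import Counter

GRID_COLS, GRID_ROWS = 4, 3  # assumed coarse grid over the store floor


def cell_for(x, y):
    """Map a normalized position (0.0 to 1.0) to a (row, col) grid cell."""
    col = min(int(x * GRID_COLS), GRID_COLS - 1)
    row = min(int(y * GRID_ROWS), GRID_ROWS - 1)
    return row, col


def build_heat_map(positions):
    """Count how many detections fall in each grid cell."""
    counts = Counter(cell_for(x, y) for x, y in positions)
    return [[counts[(r, c)] for c in range(GRID_COLS)] for r in range(GRID_ROWS)]


# Hypothetical person positions collected from metadata over several frames.
positions = [(0.10, 0.20), (0.12, 0.25), (0.55, 0.50), (0.90, 0.95), (0.15, 0.10)]

heat_map = build_heat_map(positions)
for row in heat_map:
    print(row)
```

Cells with higher counts mark spots where people were detected more often. Because only coordinates are aggregated, no image ever needs to leave the camera for this kind of analysis.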

Sony is already sampling its IMX500 with industry partners and plans to do the same with the IMX501 starting next month.

https://www.techspot.com/news/85228-sony-new-image-sensors-first-ever-integrated-ai.html