krizby


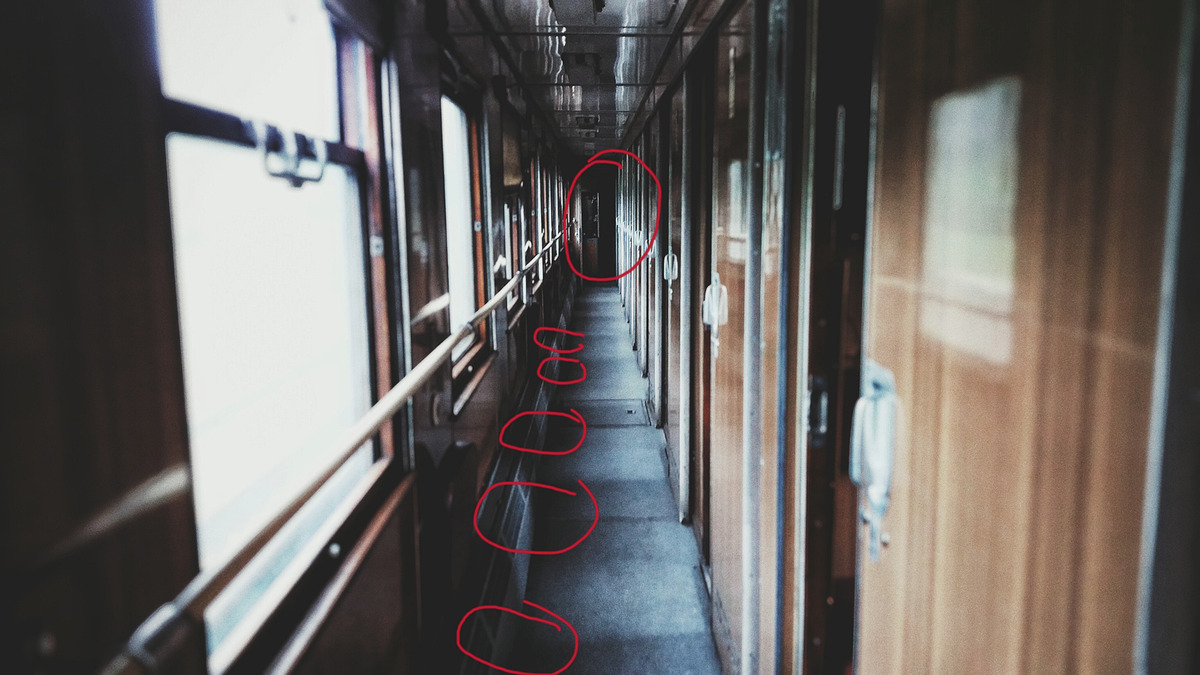

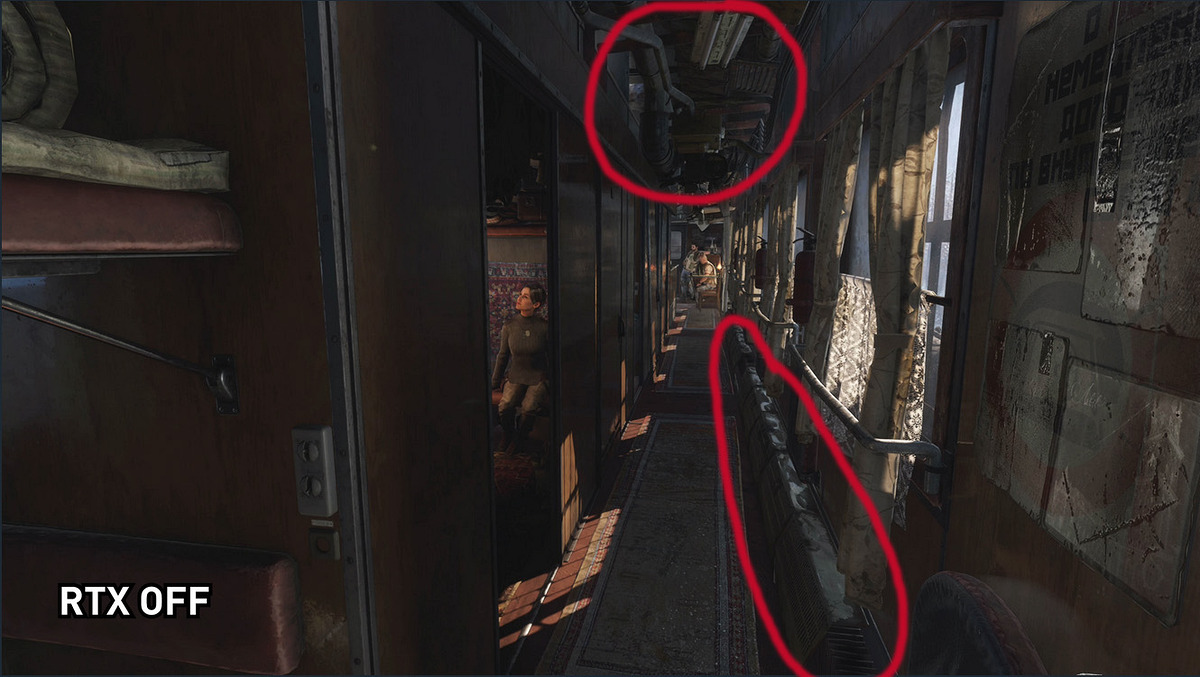

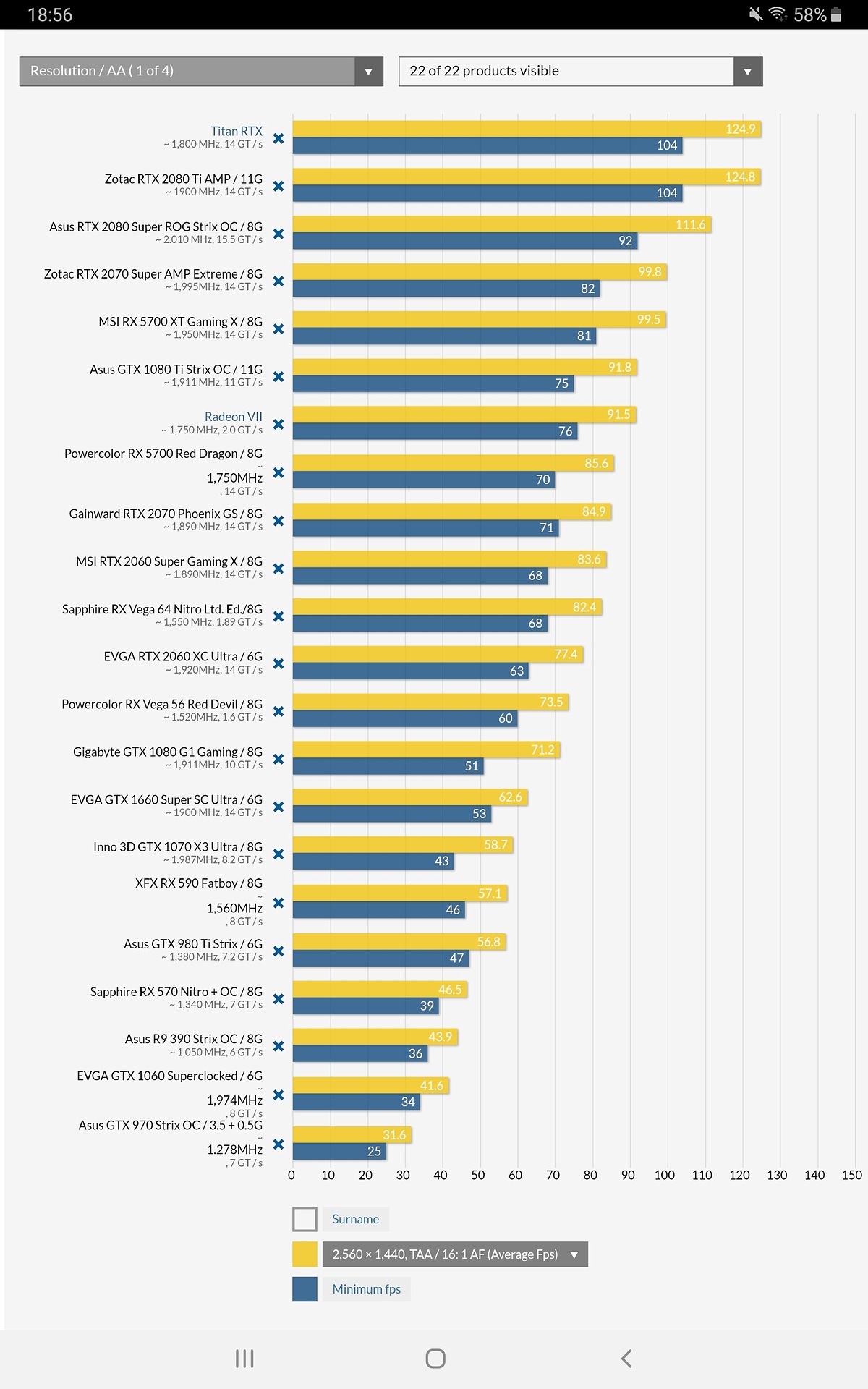

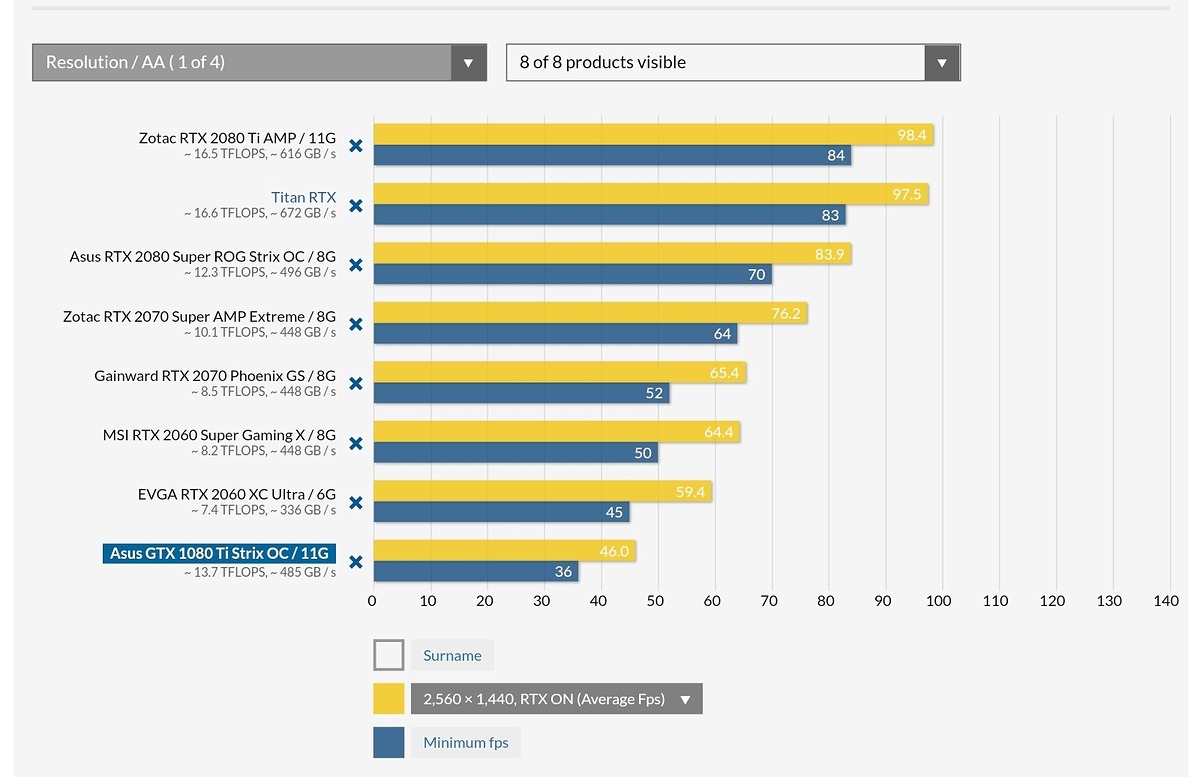

Modern Warfare has RTX without much of a performance loss: a 2060S can get 60 fps at 1440p with Ultra details and RTX on, while a 2080 Ti can get 60 fps at 4K. Although it's only shadows and the effect is subtle, it does contribute to a more realistic environment.

www.pcgameshardware.de


Call of Duty Modern Warfare Benchmarks: Ray-traced shadows, slick graphics and fast DirectX 12 performance

In the second part of our review of the 2019 Call of Duty Modern Warfare reboot, we compare the shooter's ray tracing and look at the corresponding graphics card benchmarks, which show what the shooter demands on PC.