Avro Arrow

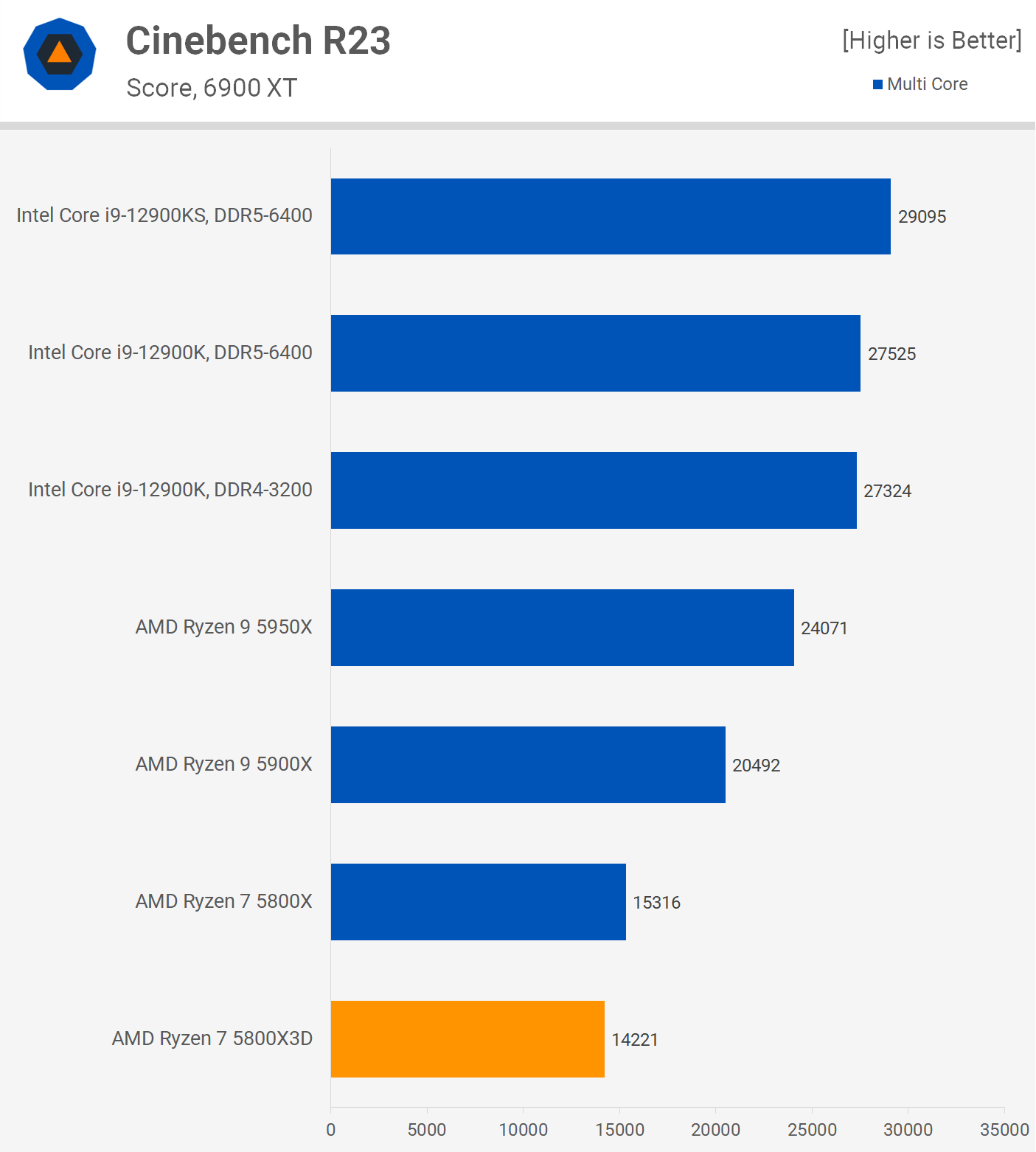

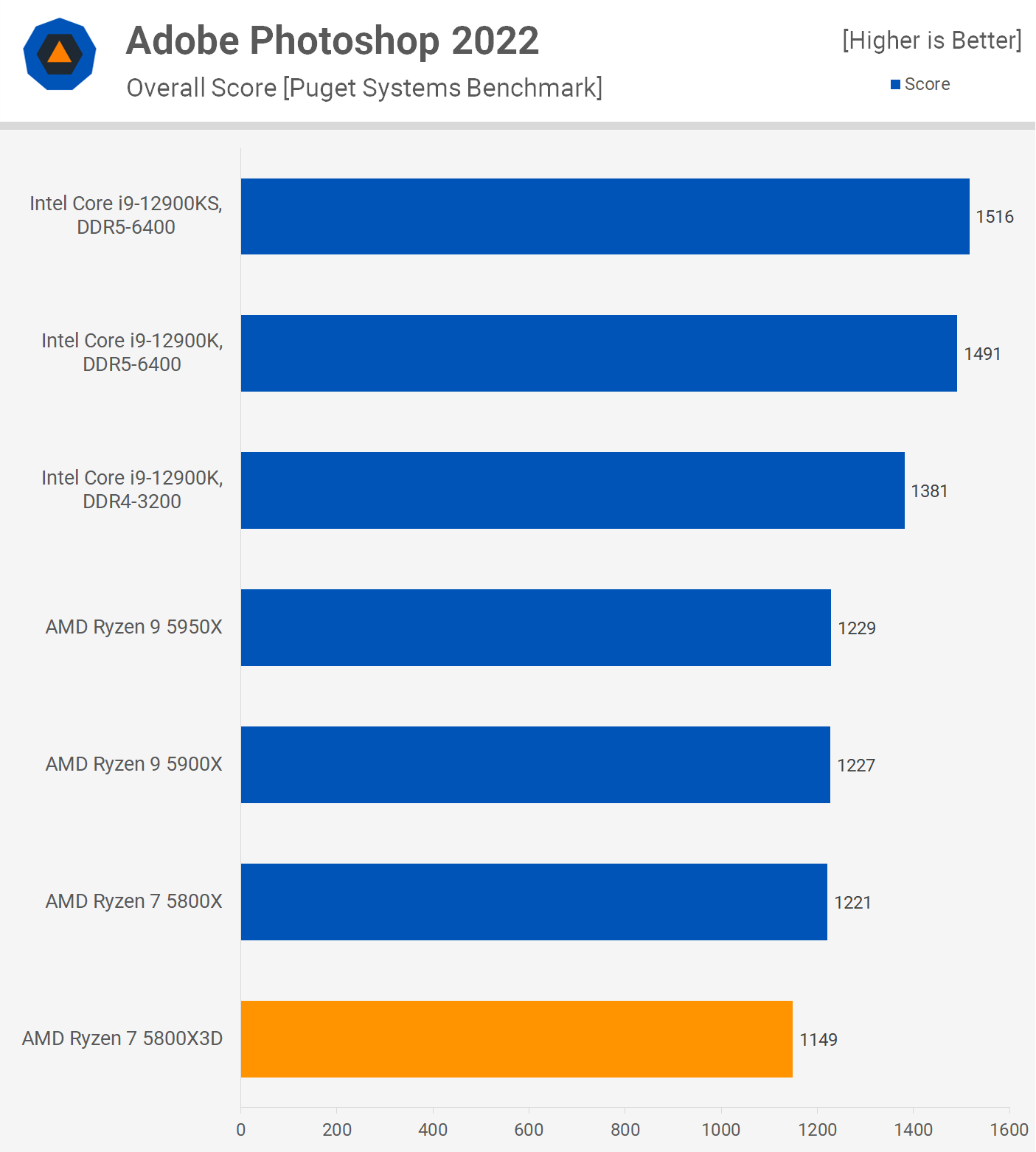

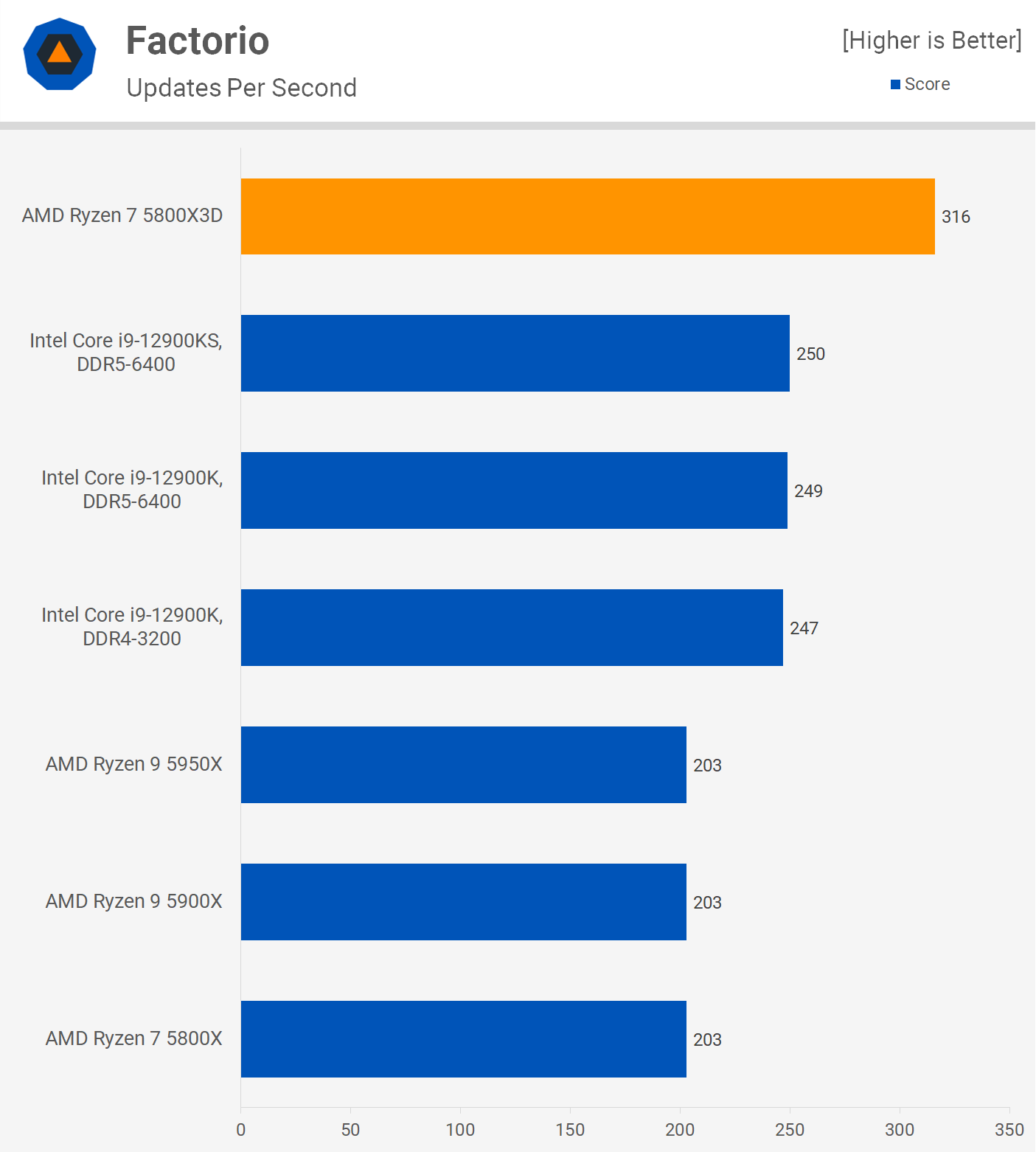

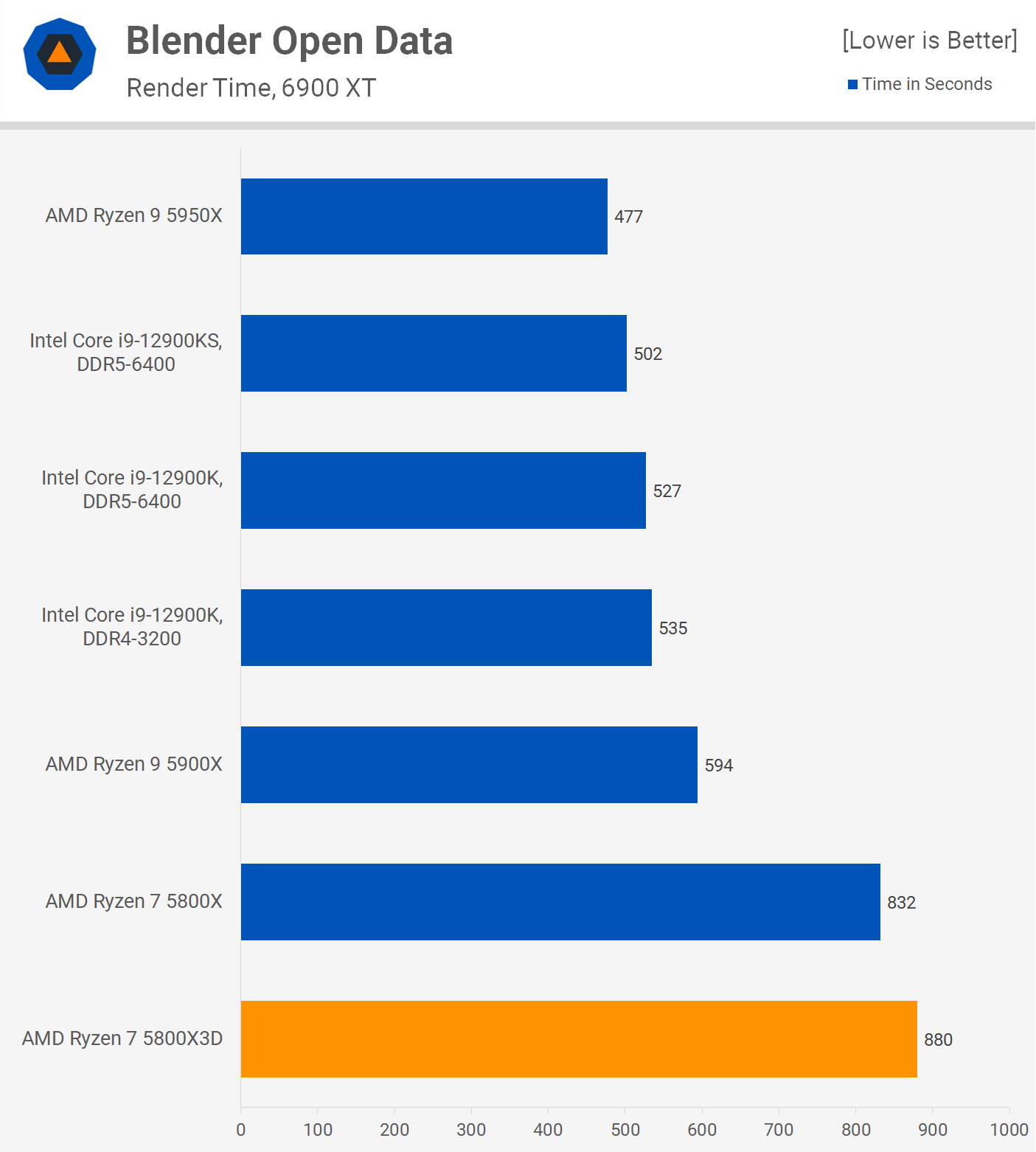

> Sweet. Was thinking of holding off upgrading to AM5 but now looking to upgrade my 5900X to 7900X3D or 7950X3D.

Why would you do that? The X3D cache does nothing for productivity. In fact, it has been shown to actually hurt it. The only instance in which I saw the X3D cache have a positive effect on productivity (that I can remember anyway) was in file compression and decompression. In everything else, the best-case scenario was no effect at all and the worst-case scenario was a reduction in performance because of its inability to clock higher. Save your money because the R9-5900X is one of the most efficient productivity CPUs out there.

> Hopefully they will also have rdna3 cores, instead of rdna2. I can't understand why they put in rdna2 when they had the new and better rdna3.

Because the IGPs in the standard CPU line aren't meant for gaming, they're meant to do the same job that Intel's IGPs do. For that, even Vega would be good enough. I have no problem with them cutting costs on the IGP if it means the CPU costs less overall because the IGP is going to be pitifully weak no matter which architecture they use. It's just a display adapter and that doesn't take much.

> Perhaps not - after all, the 5800X3D was no better or worse than the 5800X in productivity benchmarks. If AMD can retain the original clock speeds of the models they plan to add additional cache to, then maybe all will be well. Either way, Steve's got a lot more testing coming his way.

This is exactly why I think that the decision to put this X3D cache in productivity CPUs and not the 6-core gaming CPU is galactically stupid. The 6-core variant would've been the one to benefit most from this X3D cache and fewer cores means higher possible boost clocks.

I can't believe that AMD shot themselves in the foot like this. They were in a position to completely own the PC gaming market from the CPU (and therefore, platform) standpoint yet they managed to snatch defeat from the jaws of certain victory!

Even worse, the X3D cache, at best, offers no advantage in productivity (as you rightly pointed out) so nobody who is in the market for a 12 or 16-core CPU will be willing to pay extra for it. Those 12 and 16-core X3D CPUs' main talent will be gathering dust on store shelves. To anyone with ½ a brain, all of this should have been obvious. Lisa Su really screwed up by allowing this course of action to take place.

> Great.
>
> Waiting on these new 3D CPUs before I finally make the jump from AM4 to AM5. Hopefully by then, 32GB of DDR5 can be had for around 150 US or so.

I wouldn't bother yet if I were you. The difference in performance isn't anywhere near worth the difference in cost. If you're on AM4, just get an R7-5800X3D (if you can) or an R7-5700X. Those CPUs will be viable for years to come and by the time you're really ready to jump to AM5, it will be ½ as expensive as it is now, DDR5 and all.

> Total checkmate for Intel if they add a 7950X at the same clock speeds and 3D cache - fewer watts, same or higher productivity performance and much higher gaming performance. Will be a beast.

I don't know if you've read any of the articles or if you've seen any of the videos in which the X3D cache is tested but it has NO positive effect on productivity at best and a negative impact at worst. Putting X3D cache into a 12 or 16-core productivity CPU would be absolutely useless. The only one who will benefit from AMD squandering this opportunity is Intel.

> 3D V-Cache seems to mostly benefit realtime processes. So offline stuff (most productivity software) doesn't typically benefit. But some does (real time audio, video editing previews, etc.).

That's not enough of a reason to put the X3D cache on a 12 or 16-core CPU while NOT putting it on a 6-core gaming CPU where the X3D cache has been shown to have by far the most benefit. Nope, AMD screwed the pooch with this decision.

> LOL last week only an 8 core and 6 core variant were coming. Now we are back to 16, 12 and 8 cores, but no 6 core. You tech sites really should get your facts straight before posting stories.

Actually, I hadn't heard anything about a 6-core variant, just the other three (I maybe just missed that info). I remember thinking "Why would you NOT make a 6-core X3D CPU for gamers who benefit most from X3D?".

> Anyway IMO AMD are morons for not doing a 6 core version at $329 max.

Yep, I call decisions like this "Galactically Stupid".

> If they release a 7950X3D, I am sold.

Why? The R9-7950X is a productivity CPU and productivity doesn't benefit from the X3D cache, according to the tests that we've seen. If I were you, I'd just get the 7950X and not pay extra money for nothing.

> So you clearly don't just game. Are you aware of what productivity software will benefit from V-Cache? I have seen server tests of Milan-X showing some huge uplifts in software, but most is server related naturally. Only one I saw of interest to me was OpenFOAM fluid sim. Currently 13900K kills 7950X in a lot of important software I use, so V-Cache would need to bring big improvements outside of gaming. Zen 4 is already excellent in gaming and hardly needs a boost, but those Intel core counts are killing it for multi-threaded apps. I'm leaning towards 13700K rather than 7900X. AMD will no doubt add another $100 on the 7900X3D meaning in Australia it would be about $400-500 dearer, enough to pay for motherboard and a stick of memory.

I agree. I can't see a good use case for the R9-7900X3D or the R9-7950X3D. The best use case would have been for the R5-7600X3D but for some *****ic reason, AMD decided to NOT make that one. Man, if they did release an R5-7600X3D, no gamer would look at Intel for a very long time. Talk about $hitting their own bed!

> That's a good question, they certainly don't seem to want to cater to the traditionally largest consumer base. Have they really found a sustainable business model of just selling these higher margin, mostly useless for gaming, hardware components? Financial reports indicate lowered guidance and revenues, so it seems like no, but they seem to be set on it.

They need more market share to gain parity with Intel and an R5-7600X3D could have possibly done that in a single generation. AMD's stupidity here is boggling my mind.