The big picture: Face recognition is quickly becoming one of the biggest threats to our privacy, with many startups looking to create commercial services around this technology while exploiting the immense number of photos we've uploaded online. There is a solution to this problem, but its effectiveness will ultimately depend on big platforms adopting it.

Facial recognition technology has evolved so fast that its uses have become a real concern for many consumers who see this as yet another intrusion into their lives. Last week, it was revealed that drug store chain Rite Aid had been using face recognition to make it easier to identify shoplifters, with a rather discriminatory focus on regions where there's a higher concentration of people with low income or people of color.

Wearing a mask can throw off some of the most advanced facial recognition tech that's in use today, but even that could soon change with new solutions that use publicly available mask selfie data sets to retrain AIs. Even as the face recognition space has seen some companies like IBM retreat from it as a result of increased scrutiny from regulators and privacy groups, there's a case to be made that we need new legislation and some form of protection against companies that abuse this new technology.

Take the example of Clearview AI, which has been scraping photos of people from Twitter, Facebook, Google, and YouTube to create a facial recognition database. The company didn't ask for permission from any of the users on these services and is said to have poor security practices, which is why it's seen a fair share of lawsuits as of late.

A solution proposed by researchers at the University of Chicago comes in the form of a software tool that gives people a chance to protect the images they upload online from being used without their permission.

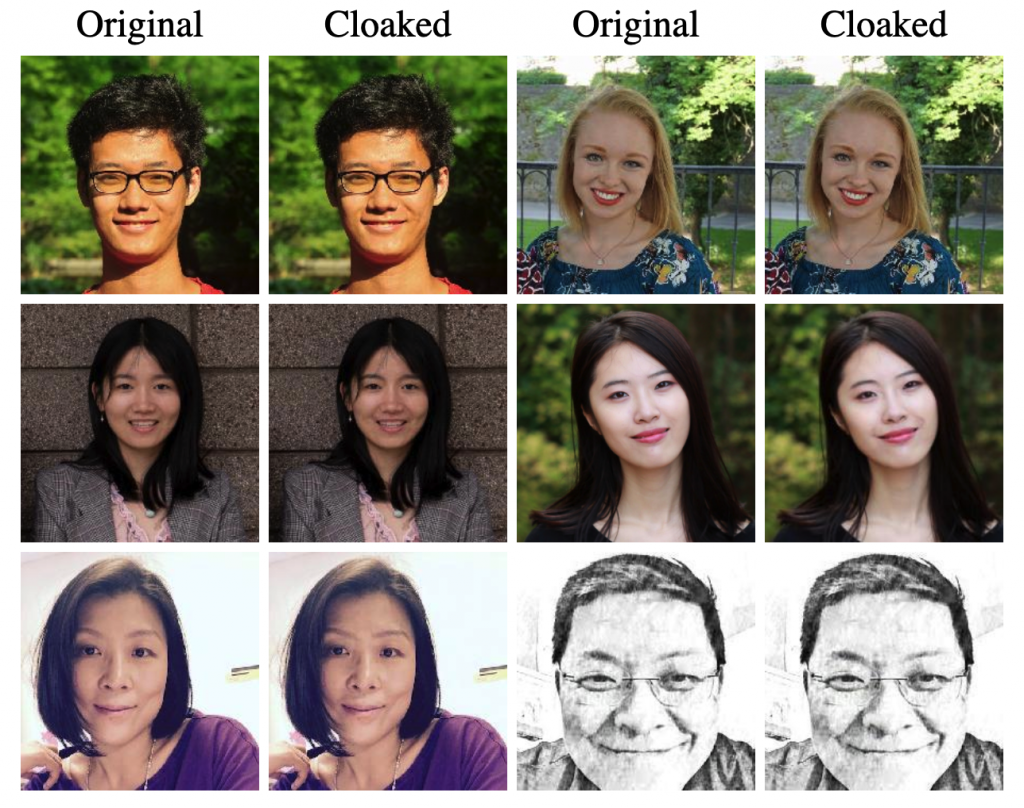

The tool -- dubbed Fawkes -- takes advantage of the fact that machine learning models have to be fed lots of images in order to identify certain distinctive features of an individual with any degree of accuracy. Fawkes makes small pixel-level changes to your images that are imperceptible to the human eye but can effectively "cloak" your face so that machine learning models can't work their magic.

For instance, researchers cloaked images of actress Gwyneth Paltrow using images of actor Patrick Dempsey's face, which prevented a generative adversarial network (GAN) from producing a model that could recognize Gwyneth Paltrow in uncloaked images. The cloaking even fooled face recognition systems from Facebook, Microsoft, Amazon, and Megvii.
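To make the idea more concrete, here is a minimal sketch of cloaking as a constrained optimization: nudge a photo so that its machine-readable features drift toward those of a different person, while capping how much any single pixel may change. This is not the Fawkes code. The toy linear "feature extractor", the 16-pixel "images", the function names, and the `budget`, `rate`, and `steps` values are all assumptions chosen for illustration; a real tool would work against a deep face-recognition network and would also keep pixels within their valid range.

```python
import random


def features(weights, image):
    """Toy stand-in for a face feature extractor: one dot product per row."""
    return [sum(w * p for w, p in zip(row, image)) for row in weights]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def cloak(image, target, weights, budget=0.03, rate=0.01, steps=300):
    """Move the features of `image` toward those of `target` while
    changing no pixel by more than `budget` (projected gradient descent)."""
    goal = features(weights, target)
    delta = [0.0] * len(image)
    for _ in range(steps):
        shifted = [p + d for p, d in zip(image, delta)]
        residual = [f - g for f, g in zip(features(weights, shifted), goal)]
        for i in range(len(delta)):
            # Gradient of 0.5 * ||features(image + delta) - goal||^2 for pixel i.
            grad_i = sum(row[i] * r for row, r in zip(weights, residual))
            # Take a gradient step, then clip back into the allowed budget.
            delta[i] = max(-budget, min(budget, delta[i] - rate * grad_i))
    return [p + d for p, d in zip(image, delta)]


random.seed(0)
PIXELS, FEATURES = 16, 4  # hypothetical sizes, far smaller than a real photo
weights = [[random.uniform(-1, 1) for _ in range(PIXELS)] for _ in range(FEATURES)]
own_photo = [random.random() for _ in range(PIXELS)]
target_photo = [random.random() for _ in range(PIXELS)]

cloaked = cloak(own_photo, target_photo, weights)

largest_change = max(abs(c - p) for c, p in zip(cloaked, own_photo))
before = distance(features(weights, own_photo), features(weights, target_photo))
after = distance(features(weights, cloaked), features(weights, target_photo))
print(f"largest pixel change: {largest_change:.3f}")
print(f"feature distance to target: {before:.3f} -> {after:.3f}")
```

The budget is what keeps the change hard to see, and the shrinking feature distance is what would confuse a model trained on such photos. How small the budget has to be to stay imperceptible on real photos is not something this toy can show.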

However, Fawkes isn't perfect -- the tool can't do anything about the models already built using images that haven't been altered, such as those already used by companies like Clearview AI, as well as law enforcement agencies. And while the cloaking is said to hold up even after the images are compressed, the success of Fawkes will depend on whether it sees widespread use among people who upload their photos online.

Interestingly, Clearview AI CEO Hoan Ton-That told The New York Times that Fawkes doesn't seem to work against his face recognition system. He also noted that given the sheer number of unmodified pictures available on the Internet, "it’s almost certainly too late to perfect a technology like Fawkes and deploy it at scale."

As of this writing, Fawkes is available on the project's website and has already been downloaded over 100,000 times. It's possible that companies like Facebook will acquire or develop similar tools to protect images uploaded to their platforms from being used by other services, while continuing to use the originals for features like automatic tagging.

Professor Ben Zhao, who coordinated the development of Fawkes, says that services like Clearview AI are still far from having a comprehensive set of images for everyone on the Internet. Zhao also noted that Fawkes is based on a poisoning attack, which is designed to gradually corrupt facial recognition models as the percentage of cloaked images increases over time.
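A toy simulation can illustrate why the share of cloaked images matters in a poisoning attack. The sketch below is an assumption-heavy illustration, not a model of any real recognition system: it uses a nearest-centroid classifier in a made-up two-dimensional feature space, and the names (`alice`, `bob`, `target`), the coordinates, and the 20-photo training set are all invented.

```python
def centroid(points):
    """Average position of a set of 2-D feature points."""
    count = len(points)
    return tuple(sum(p[i] for p in points) / count for i in range(2))


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(centroids, point):
    """Label whose centroid lies closest to `point`."""
    return min(centroids, key=lambda name: squared_distance(centroids[name], point))


# Hypothetical feature-space positions (assumptions, not measurements).
CLEAN_ALICE = (0.0, 0.0)    # where Alice's unmodified photos land
CLOAKED_ALICE = (4.0, 0.0)  # where her cloaked photos land
OTHERS = {"bob": (0.0, 1.5), "target": (4.0, 0.5)}


def recognised(cloaked_share, total=20):
    """Train on a mix of clean and cloaked photos of Alice, then check
    whether a fresh, uncloaked photo of her is still labelled 'alice'."""
    cloaked = round(cloaked_share * total)
    training = [CLOAKED_ALICE] * cloaked + [CLEAN_ALICE] * (total - cloaked)
    centroids = dict(OTHERS, alice=centroid(training))
    return nearest(centroids, CLEAN_ALICE) == "alice"


for share in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"{share:.0%} cloaked -> recognised: {recognised(share)}")
```

With these invented numbers, the model still recognizes Alice when a quarter of her training photos are cloaked, and stops doing so once half of them are. The exact tipping point is an artifact of the toy coordinates and says nothing about how Fawkes performs in practice; the point is only that each additional cloaked photo drags the learned "Alice" further from her real face.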

Zhao's team would like to see companies like Clearview AI be driven out of business, but its members admit that it's going to be a game of cat and mouse where third parties will always find short-term ways to adapt to tools like Fawkes.