The big picture: Nvidia finally took the wraps off their next-generation GeForce 40 series graphics cards, which feels like it's been a long time coming considering all the leaks and rumors over the last year or so. We've spent some time analyzing Nvidia's presentation to give our thoughts on the new RTX 4090 and RTX 4080 series GPUs and to break down some of Nvidia's confusing performance reveals that obfuscate the most important information.

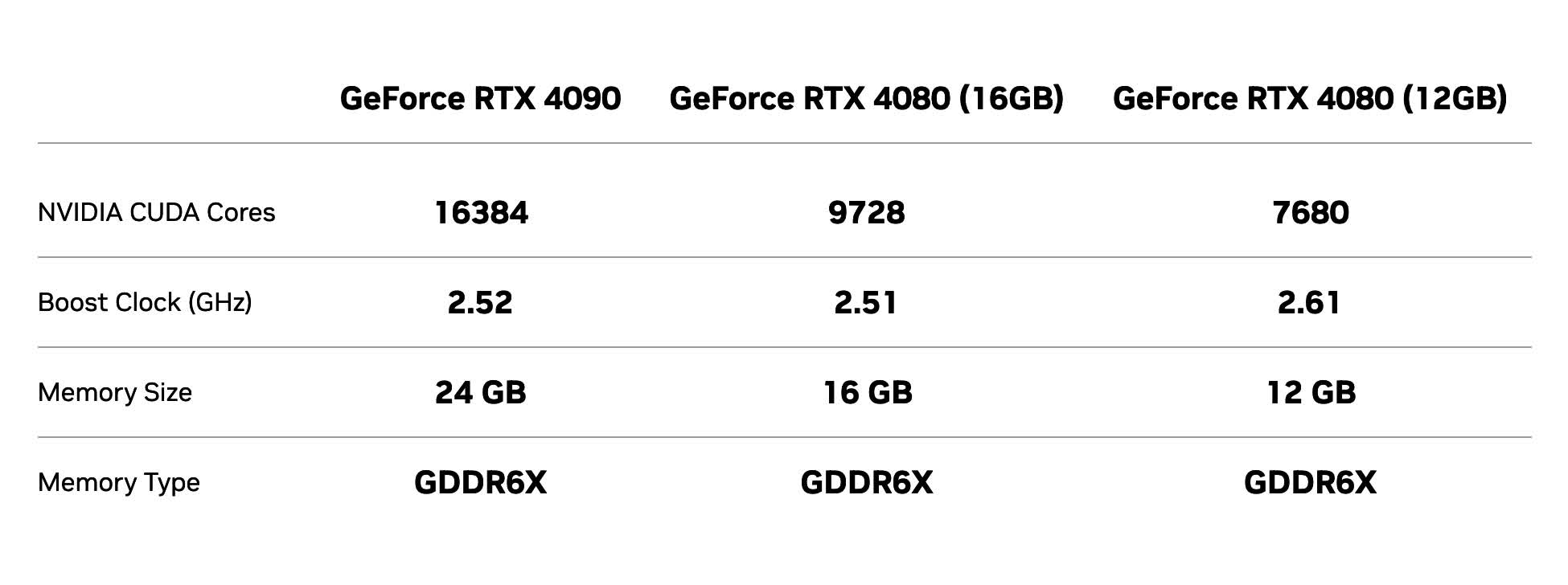

At the top we have the GeForce RTX 4090, which is based on the new Ada Lovelace architecture built on a custom version of TSMC's N4 node. This GPU is a bit of a monster in terms of hardware, packing 16384 CUDA cores and 24 GB of GDDR6X memory on a 384-bit bus, plus boost clock speeds for the GPU up to 2.52 GHz. As expected, it's a power hungry card with a rated TGP of 450W. It will be available on October 12 for $1,600.

Then we have the GeForce RTX 4080 16GB, which packs 9728 CUDA cores, a substantial cut-down from the RTX 4090. It features boost clocks up to 2.51 GHz, plus 16GB of GDDR6X memory on a 256-bit bus, and a 320W TGP. Pricing is set at $1,200, to be available a bit later with no date specified.

There's also the GeForce RTX 4080 12GB, which not only drops memory capacity to 12GB of GDDR6X but also cuts memory bandwidth by moving to a narrower 192-bit memory interface. This 12GB model sports fewer CUDA cores at 7680, clocked up to 2.61 GHz. It has a 285W TGP and a price tag of $900.

In addition to the new card models, Nvidia announced a whole bunch of features relating to the new Ada Lovelace architecture, which we'll give our thoughts on later, including DLSS 3, but for now let's talk about some of the key reactions just about the GPUs themselves.

A big obvious one is that we have two GeForce RTX 4080 GPUs with vastly different specifications. This seems like a bad and confusing choice, considering the multitude of naming options Nvidia has at their disposal with numbers and suffixes like Ti. There's no reason to give these GPUs such a similar name unless Nvidia intended to trick customers in some way.

Nvidia discussed the RTX 4080 series by highlighting the difference in memory, 16GB vs 12GB, making it seem like this is the main difference you're paying for. At first glance this would make the RTX 4080 12GB seem like much better value: it's $300 less and packs otherwise the same performance, right? Well, it's not until you look at the spec sheet that you discover this isn't the case at all, with the 12GB model packing 21% fewer shader units and reduced memory bandwidth. These are not at all the same GPU, with Nvidia's own data suggesting the 16GB model could be upwards of 25% faster. This would be the largest difference in performance between two cards named this way in recent memory. So you can bet everyday non-enthusiast consumers who don't know a ton about GPUs will end up purchasing the worse 12GB card expecting the full RTX 4080 experience -- something that could have been avoided with appropriate naming.
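To put numbers on that gap, here is a quick sketch using only the specs and prices quoted above. Note that it compares bus width, not memory bandwidth itself, since bandwidth also depends on memory speed, which isn't listed here. The helper name `percent_fewer` is just for illustration.

```python
# Specs and prices for the two RTX 4080 models, as quoted in this article.
specs = {
    "RTX 4080 16GB": {"cuda_cores": 9728, "bus_bits": 256, "price_usd": 1200},
    "RTX 4080 12GB": {"cuda_cores": 7680, "bus_bits": 192, "price_usd": 900},
}


def percent_fewer(big, small):
    """Return how much smaller `small` is than `big`, as a percentage of `big`."""
    return (big - small) / big * 100


full, cut = specs["RTX 4080 16GB"], specs["RTX 4080 12GB"]

# Percentage reduction of the 12GB model relative to the 16GB model, per field.
gaps = {field: percent_fewer(full[field], cut[field]) for field in full}

for field, gap in gaps.items():
    print(f"{field}: 12GB model is {gap:.1f}% lower")
```

The price cut and the bus width cut are about the same size, and the shader cut isn't far behind, so on paper the 12GB card is cheaper roughly in proportion to what was removed, not a discount on the same GPU.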

One theory going around the wider community is that the RTX 4080 12GB is really what used to be/should have been the RTX 4070, renamed into a 4080 to soften the blow of the high asking price ($900 vs $500 for the RTX 3070).

This is plausible given how many people get caught up in comparing series vs series across generations; such a huge price leap for the x070 series would cause a lot of disappointment. This theory is further strengthened by the fact that the RTX 4080 12GB appears to deliver similar performance to the RTX 3090, and Nvidia has often delivered top-tier performance from the previous generation in the next generation's 70-tier card.

However, to be honest, what matters most is the price to performance ratio. If the RTX 4070 was massively faster than the RTX 3070 and also cost a lot more money, it could still be reasonable if the performance gain outpaced the price increase. There would need to be some reshuffling and additional entry-level options, and consumers would need to get used to the new naming system, but ultimately in that sort of scenario the name wouldn't really matter -- it's the hardware and price that matter, relative to the competition and previous models.

But when you have two cards that will appear in product listings as nearly the same GPU, both with RTX 4080 naming, I don't think it's reasonable to have a significant performance discrepancy.

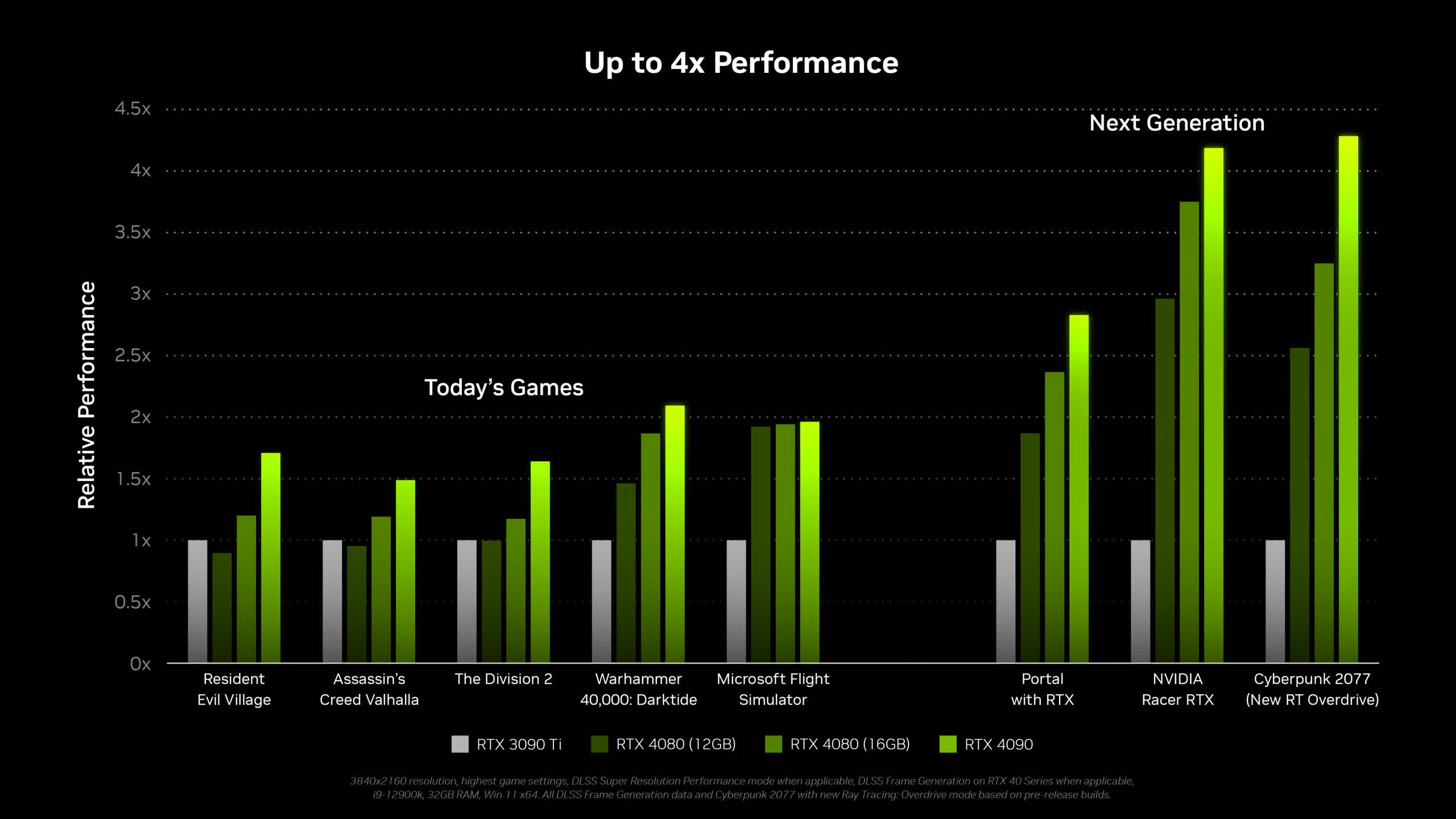

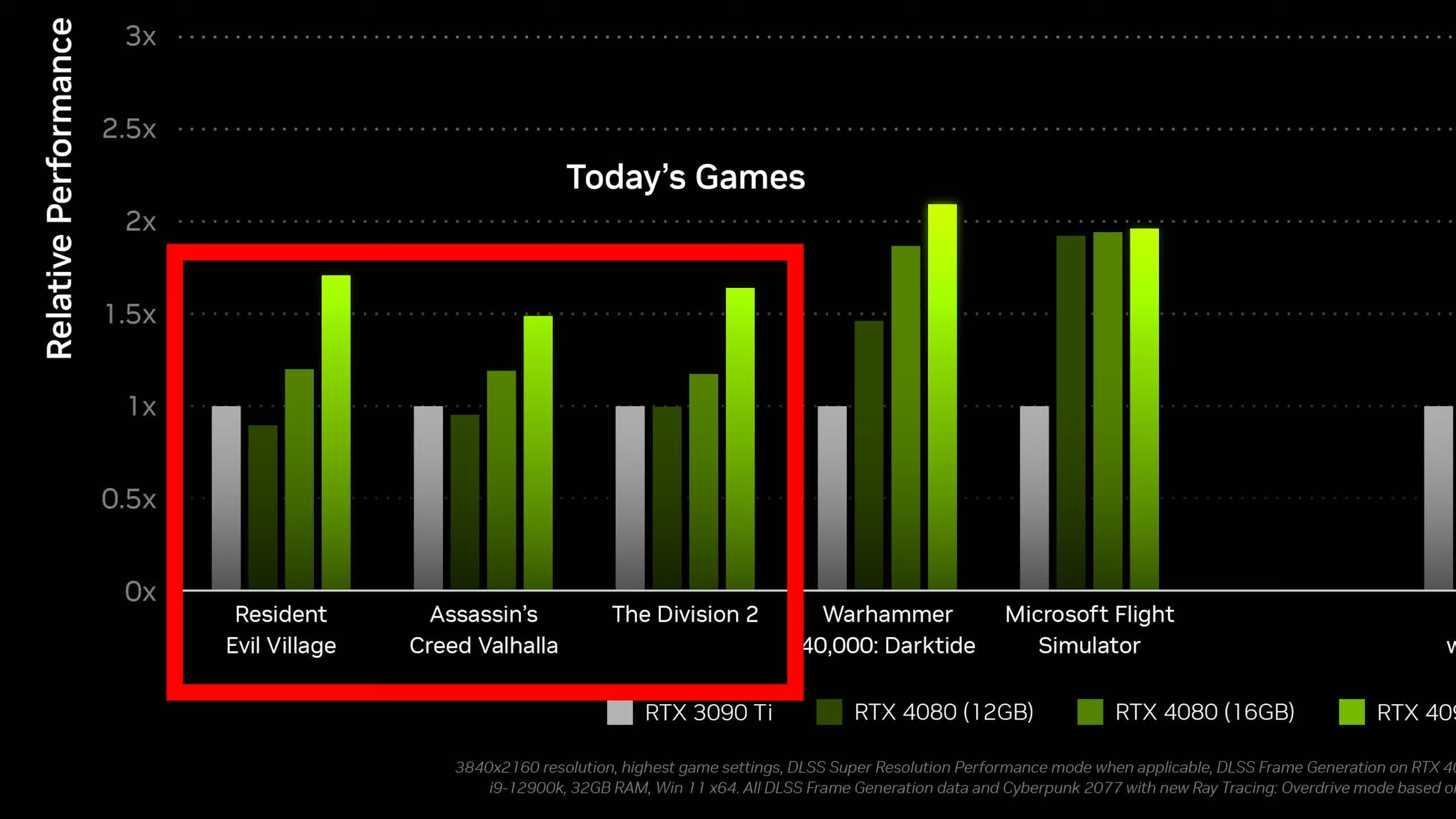

Now let's talk about the supposed performance of these GPUs. Nvidia tends to provide the most obfuscated performance numbers of the big three hardware vendors, and the least amount of performance testing when talking about new products. Testing is often conducted comparing new cards to strange matchups from the previous generation, or with exclusive features on the new cards enabled (which are only found in a very limited number of games) to further enhance the apparent performance difference. That's definitely the case with these performance charts which have stuff like DLSS 3 enabled... more on that later.

When looking at the three "standard" games Nvidia has provided, the RTX 4090 appears to be around 60 to 70 percent faster than the RTX 3090 Ti. This can be brought up to 2x or greater when additional Ada features are supported in the game, but I would expect the performance gain in most titles to be similar to these three games. This is a little lower than the hardware would suggest, as the RTX 4090 has 52% more shader cores and up to a 34% higher boost frequency. However, the 450W TGP may limit this to some degree, as it's the same as the 3090 Ti's.
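As a rough sanity check on "lower than the hardware would suggest", the sketch below multiplies the core count and clock speed gains to get a naive ceiling, then compares it with the 60 to 70 percent uplift from Nvidia's charts. Treating throughput as cores times clock is a simplifying assumption; real games also depend on memory bandwidth, power limits, and how well the workload scales.

```python
# Ratios quoted in this article for the RTX 4090 vs the RTX 3090 Ti.
CORE_RATIO = 1.52    # 52% more shader cores
CLOCK_RATIO = 1.34   # up to 34% higher boost frequency

# Naive ceiling: assume performance scales perfectly with cores x clock.
theoretical_uplift = CORE_RATIO * CLOCK_RATIO

# Range read off Nvidia's three "standard" game results.
claimed_uplift = (1.60, 1.70)

# Fraction of the naive ceiling that the claimed figures represent.
realized = [claimed / theoretical_uplift for claimed in claimed_uplift]

print(f"naive ceiling: {theoretical_uplift:.2f}x")
print(f"claimed results reach {realized[0]:.0%} to {realized[1]:.0%} of that")
```

So the claimed numbers land at roughly four fifths of perfect scaling, which fits with the unchanged 450W TGP holding things back to some degree.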

This is an impressive gen-on-gen performance uplift for the flagship model, one of the largest performance gains we've seen. For example, with the upgrade from Turing to Ampere, the flagship model gave us about 50% more performance. If some titles end up delivering twice the performance that would be very impressive.

As for value, there are two ways you can look at it... The RTX 4090 is much better value than the RTX 3090 Ti's launch price of $2,000: it's cheaper and much faster. It also compares favorably to the MSRP of the RTX 3090, delivering a huge performance uplift for only $100 more. However, the MSRP is no longer relevant in the current market. Going on current pricing (discounted in anticipation of this launch and the crypto crash), the RTX 3090 Ti is available for $1,030, while the most affordable RTX 3090 is $960. This would give the RTX 4090 similar price to performance to the RTX 3090 factoring in its large price increase, which is not too bad given you typically pay a premium for top-tier performance.
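Here is a small sketch of those two ways of looking at it, using the RTX 3090 Ti as the baseline because that's the card Nvidia's 60 to 70 percent figure is measured against. The `value_ratio` helper is hypothetical, and the uplift is Nvidia's claim, not a benchmark result.

```python
# Prices quoted in this article (USD).
PRICE_4090 = 1600
PRICE_3090TI_MSRP = 2000
PRICE_3090TI_STREET = 1030

# Claimed RTX 4090 uplift over the RTX 3090 Ti in "standard" games.
CLAIMED_UPLIFT = (1.60, 1.70)


def value_ratio(uplift, new_price, old_price):
    """Performance per dollar of the new card divided by that of the old card."""
    return uplift / (new_price / old_price)


# View 1: compare against the 3090 Ti's launch MSRP.
vs_msrp = [value_ratio(u, PRICE_4090, PRICE_3090TI_MSRP) for u in CLAIMED_UPLIFT]

# View 2: compare against what the 3090 Ti actually sells for today.
vs_street = [value_ratio(u, PRICE_4090, PRICE_3090TI_STREET) for u in CLAIMED_UPLIFT]

print(f"vs launch MSRP:  {vs_msrp[0]:.2f}x to {vs_msrp[1]:.2f}x performance per dollar")
print(f"vs street price: {vs_street[0]:.2f}x to {vs_street[1]:.2f}x performance per dollar")
```

Against launch pricing the RTX 4090 looks like double the value, but against current street pricing the advantage shrinks to single digits, which is why the market price is the comparison that matters.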

For the GeForce RTX 4080 16GB we appear to be getting roughly 25% more performance than the RTX 3090 Ti in standard games -- again quite a good deal relative to the 30 series MSRP. The 4080 16GB is cheaper than the 3090 and similar in price to the RTX 3080 Ti. Nvidia does claim 2-4x faster performance than the 3080 Ti, but this seems to be based on special cases rather than general performance.

Based on the current market, this would be similar price to performance relative to the RTX 3090, and even the RTX 3080 Ti, which is currently available for $800. That's not a great deal of progress, and it would be up to features to get it over the line.

It's a similar situation with the RTX 4080 12GB. Nvidia is showing performance slightly below the RTX 3090 Ti, or similar to the RTX 3090, at $900. This card basically slots into the existing price to performance structure of Nvidia's 30 series line-up. The RTX 3080 10GB is about $740 these days, so still above its $700 MSRP, and given it's only slightly slower than the RTX 3090, this new RTX 4080 12GB could actually end up delivering less performance per dollar than the 3080 series from Ampere outside of Ada Lovelace enhanced titles.
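One way to frame that last point without guessing at benchmark results is to work out the break-even: how much of the RTX 4080 12GB's performance the RTX 3080 10GB needs to deliver to match it on performance per dollar. The function name below is just for illustration, and only the two prices from this article go in.

```python
def break_even_performance(cheaper_price, pricier_price):
    """Fraction of the pricier card's performance that the cheaper card must
    reach for both to offer the same performance per dollar."""
    return cheaper_price / pricier_price


# Current RTX 3080 10GB street price vs the RTX 4080 12GB's announced price.
threshold = break_even_performance(740, 900)

print(f"RTX 3080 10GB breaks even at {threshold:.0%} of RTX 4080 12GB performance")
```

If the 3080 10GB ends up above that threshold in standard games, it's the better buy per dollar; independent benchmarks will settle which side of the line it falls on.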

My early thoughts are that the cards are very expensive, though this isn't too surprising. After all, Nvidia has learned that people will pay exorbitant amounts of money at the high end, plus we're still in the recovery phase of a pricing boom and there are inflation pressures around the globe. But it's hard to make a definitive call without seeing benchmarks, and I'm only going off what Nvidia showed at their presentation.

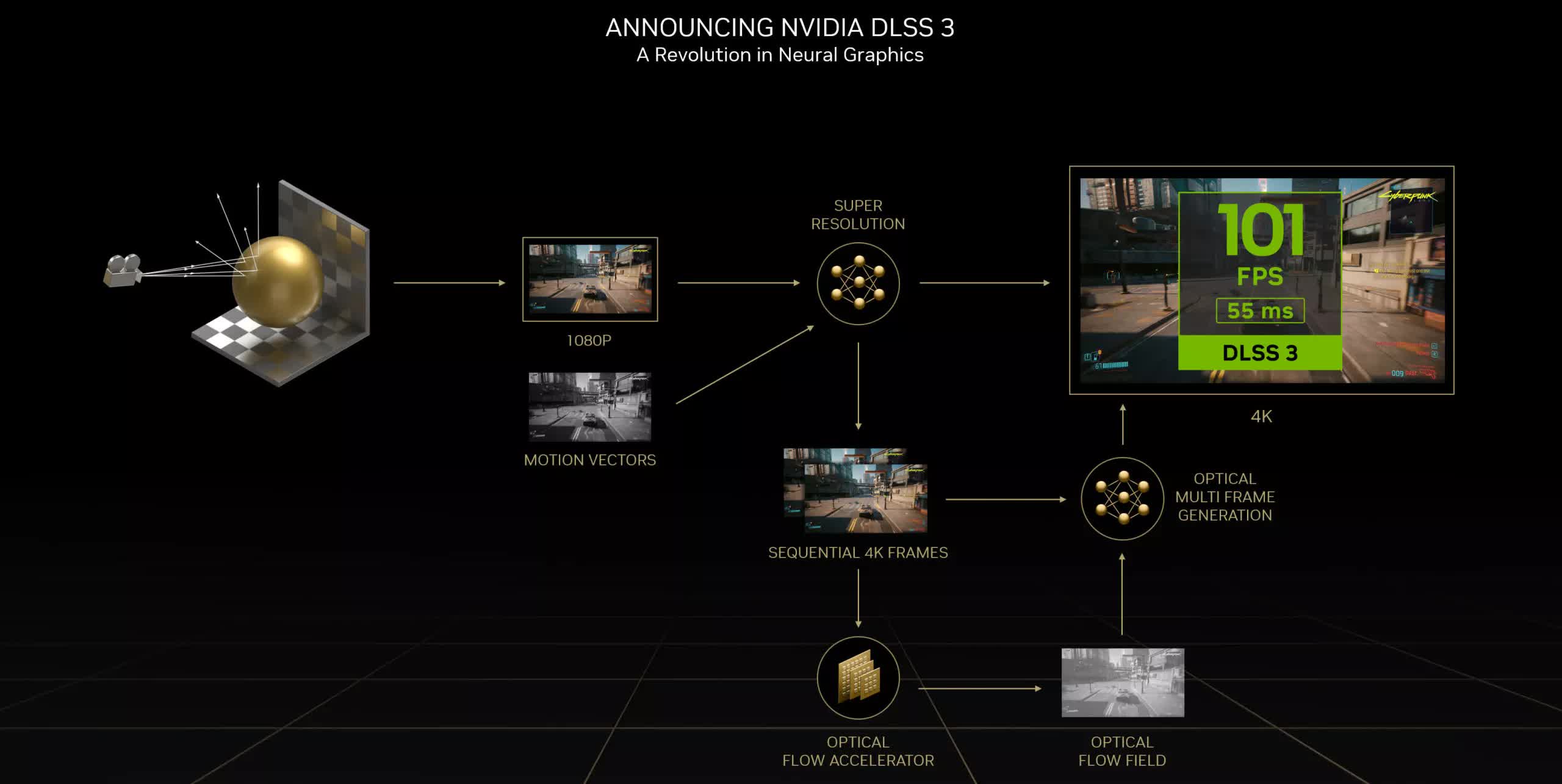

The issues with naming and pricing have overshadowed some of the technical advancements Nvidia are making this generation, at least for now. In particular, DLSS 3 looks like a very interesting and cool technology, taking DLSS one step further to provide AI enhanced frame generation, similar to frame interpolation technologies that we've seen in TVs and other hardware for some time, but built specifically for games and GPU hardware. The idea is that DLSS 3 would use data from current and future frames to generate significant portions of frames (up to 7/8ths of the displayed pixels according to Nvidia). This process uses optical flow and the optical flow accelerator present on Nvidia GPU hardware.

While DLSS 3 is coming in October and will be available in over 35 games at some point, it will be exclusive to RTX 40 series hardware. The reasoning is that it requires the enhanced optical flow accelerator in the Ada Lovelace architecture. While this accelerator is available in previous generations, apparently it isn't good or fast enough for this technology, so it's restricted to the new generation of cards. Since there's some sort of performance overhead to run it, it's also unclear how much acceleration is possible above a certain frame rate, as many of the examples Nvidia showed were running games at a low base frame rate.

Nvidia has shown demos of the technology, but it'll take a full visual quality analysis to see how it appears in real life. I definitely think it is possible to use AI interpolation in this way for games, but with so many pixels being reconstructed it may have visual quality implications -- like what we see using DLSS Ultra Performance mode, which generally looks bad in motion. Luckily, DLSS 2 is otherwise quite good, so with further research and enhancements I'm certainly looking forward to seeing how this looks in action.

Another key advantage of Ada Lovelace is the set of enhancements to the ray tracing cores. This is a big deal as we move more towards the ray tracing era and performance requirements increase substantially. Nvidia's third-gen ray tracing cores are more powerful and support new hardware acceleration capabilities such as micro-mesh engines and opacity micro-map engines. In particular, Nvidia claims these RT cores can build ray tracing BVHs 10x faster using 20x less VRAM through the use of displaced micro-meshes, while there's also twice the ray-triangle intersection throughput.

All up, Nvidia is claiming 191 RT-TFLOPS of performance on the RTX 4090, compared to 78 for the RTX 3090 Ti, which is a 2.4x improvement (Nvidia also claims up to 2.8x which may refer to another GPU pairing). Either way, this would outpace the raw rasterization performance improvement: if the RTX 4090 is roughly a 1.7x improvement on the RTX 3090 Ti, but ray tracing performance goes up 2.4x, this would reduce the performance impact of ray tracing in games or enable more ray tracing effects to be used. It's crucial for next-gen cards like this to have ray tracing performance outpace rasterization so that the cost to using ray tracing is reduced.
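To illustrate why that matters, here is a toy frame time model. It assumes a frame splits cleanly into a rasterization part and a ray tracing part, and that each part speeds up by the ratios above. The 10 ms / 10 ms split on the RTX 3090 Ti is a made-up example, not a measurement, and RT-TFLOPS figures don't translate directly into frame rates.

```python
# Figures quoted in this article.
RT_TFLOPS_4090 = 191
RT_TFLOPS_3090TI = 78
RASTER_SPEEDUP = 1.7  # rough RTX 4090 uplift in standard games

rt_speedup = RT_TFLOPS_4090 / RT_TFLOPS_3090TI

# Hypothetical frame on the RTX 3090 Ti: equal time in raster and RT work.
raster_ms_old, rt_ms_old = 10.0, 10.0

# Toy assumption: each part of the frame scales with its own speedup.
raster_ms_new = raster_ms_old / RASTER_SPEEDUP
rt_ms_new = rt_ms_old / rt_speedup

# Share of the frame spent on ray tracing, before and after.
rt_share_old = rt_ms_old / (raster_ms_old + rt_ms_old)
rt_share_new = rt_ms_new / (raster_ms_new + rt_ms_new)

print(f"RT speedup: {rt_speedup:.2f}x vs raster speedup: {RASTER_SPEEDUP:.2f}x")
print(f"RT share of frame time: {rt_share_old:.0%} -> {rt_share_new:.0%}")
```

Under these assumptions, ray tracing takes up a smaller slice of each frame on the new card, which is another way of saying that turning it on costs less.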

Ada also includes 4th-generation tensor cores, though outside of DLSS there aren't many gaming-specific use cases for these hardware accelerators. There's a new 8-bit floating point engine that delivers more performance but this will be mostly useful for workstation users.

Another key inclusion is dual AV1 encoders from the new eighth-generation NVENC engine. AV1 decode has been supported in Nvidia's previous architecture, but AV1 encoding hasn't been possible until now. It's clear by now that AV1 will be the major successor to H.264 across video playback and streaming, so having features like AV1 encoding is crucial for the future. OBS will support AV1 encoding in an October update and Discord will be integrating it later this year as well.

Then we have shader execution reordering, which is an Ada specific architecture enhancement that reorganizes inefficient shader workloads into an efficient stream that is said to improve performance by up to 25% in games. This feature along with DLSS 3 is why Nvidia has shown some titles delivering massive performance improvements on RTX 40 series GPUs relative to Ampere, while other titles won't benefit as much. We could see quite a wide range of performance figures when we benchmark these cards.

As for Founders Edition models, the RTX 4090 and RTX 4080 16GB appear to be getting FE cards, with a similar design to the RTX 30 series. These GPUs use PCIe 5.0 16-pin connectors for power, which are found on ATX 3.0 power supplies. However, Nvidia will be packaging an adapter in the box to use with existing power supplies that have 8-pin connectors.

Interestingly, these cards don't feature improvements to display output connectivity or the PCIe bus. It appears PCIe 4.0 is still being used, while we're getting HDMI 2.1 and DisplayPort 1.4 -- no upgrade for DisplayPort 2.0. Neither PCIe 5.0 nor DP 2.0 will be too important this generation, although it's possible other GPU vendors will use these features before Nvidia will.

Overall, I have mixed feelings about Nvidia's RTX 40 unveil. The hardware itself looks impressive with a substantial performance uplift, one of the largest we've seen comparing flagship models. There are also some neat features that will be interesting to explore, such as DLSS 3 and improvements to ray tracing performance beyond what we are expecting for standard rasterized games.

However, pricing for these new GPUs is concerning and there doesn't appear to be a significant step forward in price to performance ratio when compared to the current market. There are still a lot of older GPUs to be sold through from the RTX 30 series, in addition to many used GPUs shortly hitting the market. This could put further price pressure on the RTX 40 series from the start.

Bottom line, everything will depend on how these cards benchmark, and hopefully we won't see prices inflated any further. It is expected these models will sell out almost instantly, but the crucial time period is usually about a month after launch, when we expect Nvidia to have cards available at the MSRP – otherwise our BS meter will be fully activated.

For now, I would strongly recommend waiting to see how AMD responds with their RDNA 3 products, which will be unveiled on November 3 and hopefully launched before the end of the year. We're expecting strong competition, and with such a short gap between Nvidia and AMD's launches, it may be worth waiting to see where both GPU makers stand this generation.

https://www.techspot.com/news/96044-expensive-first-reactions-nvidia-rtx-4090-rtx-4080.html