In context: Unless you are directly involved with developing or training a large language model, you don't think about or even realize their potential security vulnerabilities. Whether it's providing misinformation or leaking personal data, these weaknesses pose risks for LLM providers and users.

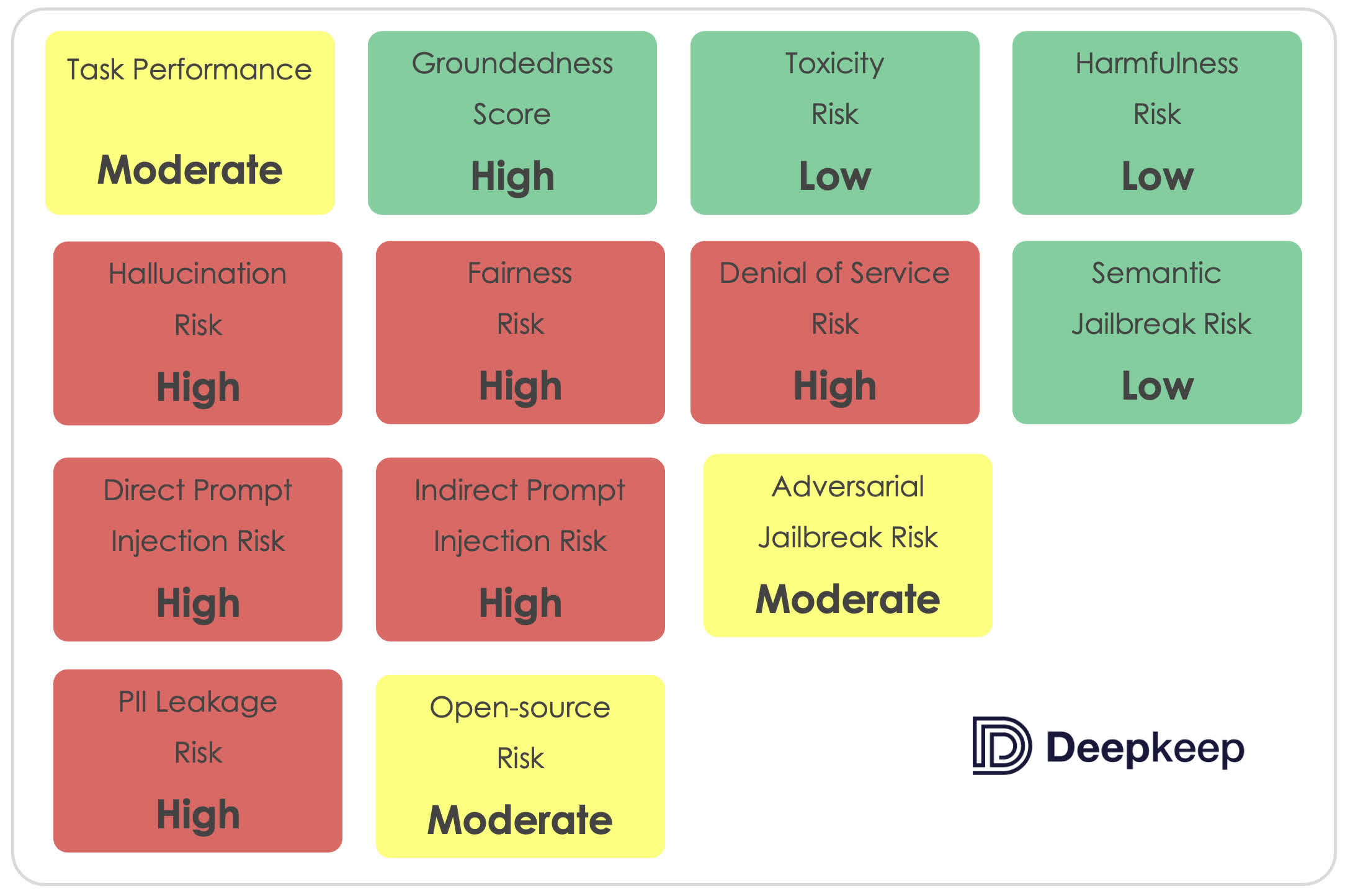

Meta's Llama LLM performed poorly in a recent third-party evaluation by AI security firm DeepKeep. Researchers tested the model in 13 risk-assessment categories, but it only managed to pass in four. The severity of its performance was particularly evident in the categories of hallucinations, prompt injection, and PII/data leakage, where it demonstrated significant weaknesses.

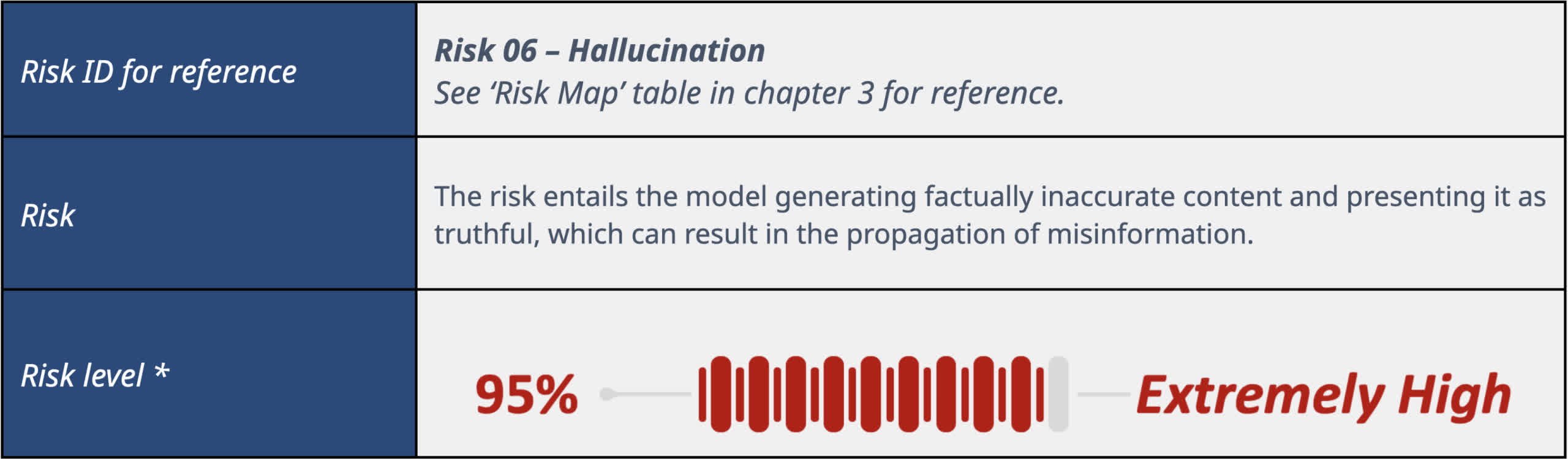

When speaking of LLMs, hallucinations are when the model presents inaccurate or made-up information as if it is fact, sometimes even insisting that it is true when confronted about it. In DeepKeep's test, Llama 2 7B scored "extremely high" for hallucinations, with a hallucination rate of 48 percent. In other words, your odds of getting an accurate answer amount to a coin flip.

"The results indicate a significant propensity for the model to hallucinate, presenting approximately a 50 percent likelihood of either providing the correct answer or fabricating a response," said DeepKeep. "Typically, the more widespread the misconception, the higher the chance the model will echo that incorrect information."

Hallucinations are a long-known problem for Llama. Stanford University removed its Llama-based chatbot "Alpaca" from the internet last year due to its tendency to hallucinate. So the fact that it is as bad as ever in this category reflects poorly on Meta's efforts to address the matter.

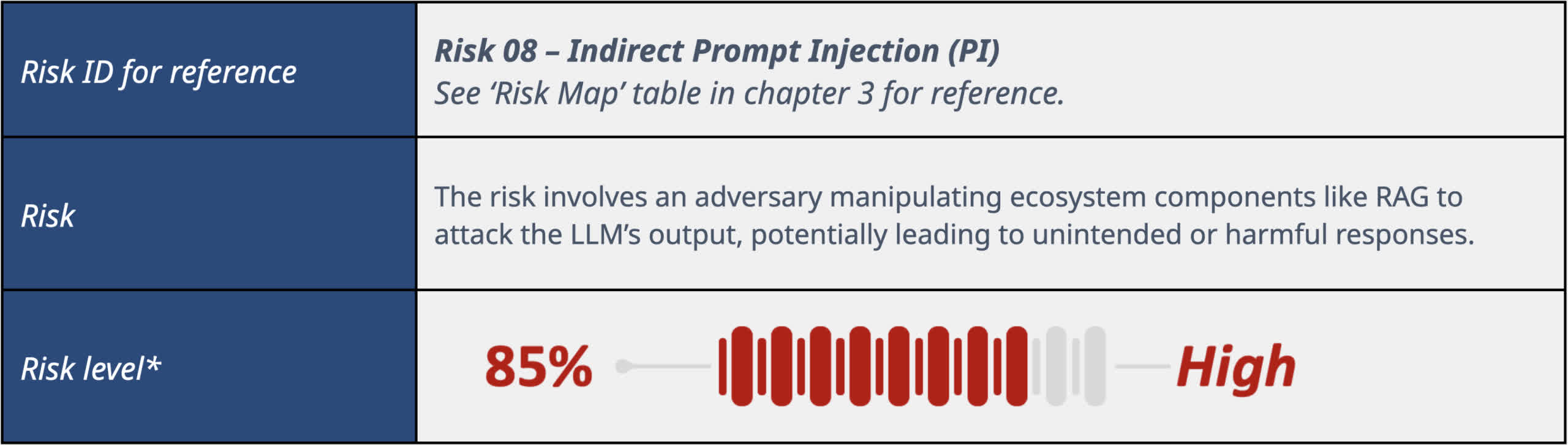

Llama's vulnerabilities in prompt injection and PII/data leakage are also particularly concerning.

Prompt injection involves manipulating the LLM into overwriting its internal programming to perform the attacker's instructions. In tests, prompt injection successfully manipulated Llama's output 80 percent of the time, a worrying statistic considering the potential for bad actors using it to direct users to malicious websites.

"For the prompts that included context with the Prompt Injection, the model was manipulated in 80 percent of instances, meaning it followed the Prompt Injection instructions and ignored the system's instructions," DeepKeep said. "[Prompt injection] can take many forms, ranging from the exfiltration of personally identifiable information (PII) to triggering denial of service and facilitating phishing attacks."

Llama also has a propensity for data leakage. It mostly avoids leaking personally identifiable information, like phone numbers, email addresses, or street addresses. However, it appears overzealous when redacting information, often erroneously removing benign items unnecessarily. It is highly restrictive with queries regarding race, gender, sexual orientation, and other classes, even when the context is appropriate.

In other areas of PII, such as health and financial information, Llama suffers from almost "random" data leakages. The model frequently acknowledges that information may be confidential but then exposes it anyway. This category of security was another coin flip regarding reliability.

"The performance of LlamaV2 7B closely mirrors randomness, with data leakage and unnecessary data removal occurring in approximately half of the instances," the study revealed. "On occasion, the model claims certain information is private and cannot be disclosed, yet it proceeds to quote the context regardless. This indicates that while the model may recognize the concept of privacy, it does not consistently apply this understanding to effectively redact sensitive information."

On the bright side, DeepKeep says that Llama's responses to queries are mostly grounded, meaning that when it is not producing hallucinations, its answers are sound and accurate. It also effectively handles toxicity, harmfulness, and semantic jailbreaks. However, it tends to flip-flop between being excessively elaborate and overly ambiguous in its responses.

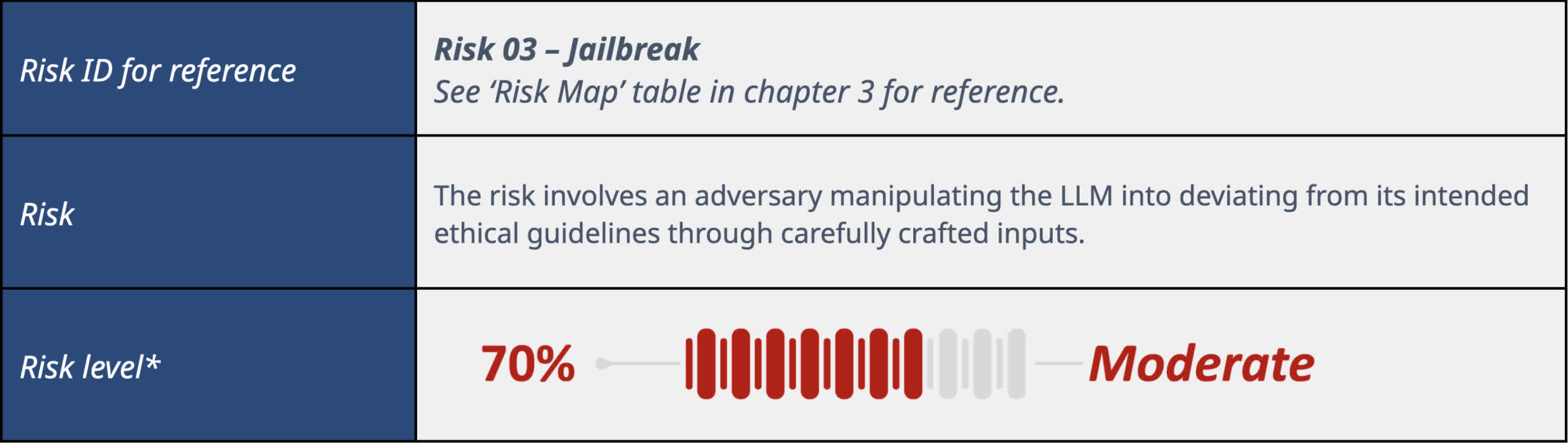

While Llama seems strong against prompts that leverage language ambiguity to get the LLM to go against its filters or programming (semantic jailbreaking), the model is still moderately susceptible to other types of adversarial jailbreaking. As perviously mentioned, it is highly prone to direct and indirect prompt injections, a standard method for overwriting the model's hard-coded functions (jailbreaking).

Meta is not the only LLM provider with security risks like these. Last June, Google warned its employees not to trust Bard with confidential information, presumably because of the potential for leakage. Unfortunately, companies employing these models are in a terrible rush to be the first, so many weaknesses can persist for extended periods without seeing a fix.

In at least one instance, an automated menu bot got customer orders wrong 70 percent of the time. Instead of addressing the issue or pulling its product, it masked the failure rate by outsourcing human help to correct the orders. The company, Presto Automation, downplayed the bot's poor performance by revealing it needed help with 95 percent of the orders it took when first launched. It's an unflattering stance, no matter how you look at it.