In brief: By integrating custom-designed hardware like Trillium with industry-leading GPUs from Nvidia, Google Cloud is positioning itself as a contender in the rapidly evolving field of AI computing. Its latest advancements promise to accelerate the development and deployment of sophisticated AI models and applications across diverse industries.

Google Cloud recently announced a major upgrade to its AI infrastructure, introducing new hardware and software solutions designed to meet the growing demands of artificial intelligence workloads. The centerpiece of these changes is the release of Trillium, Google's sixth-generation Tensor Processing Unit (TPU).

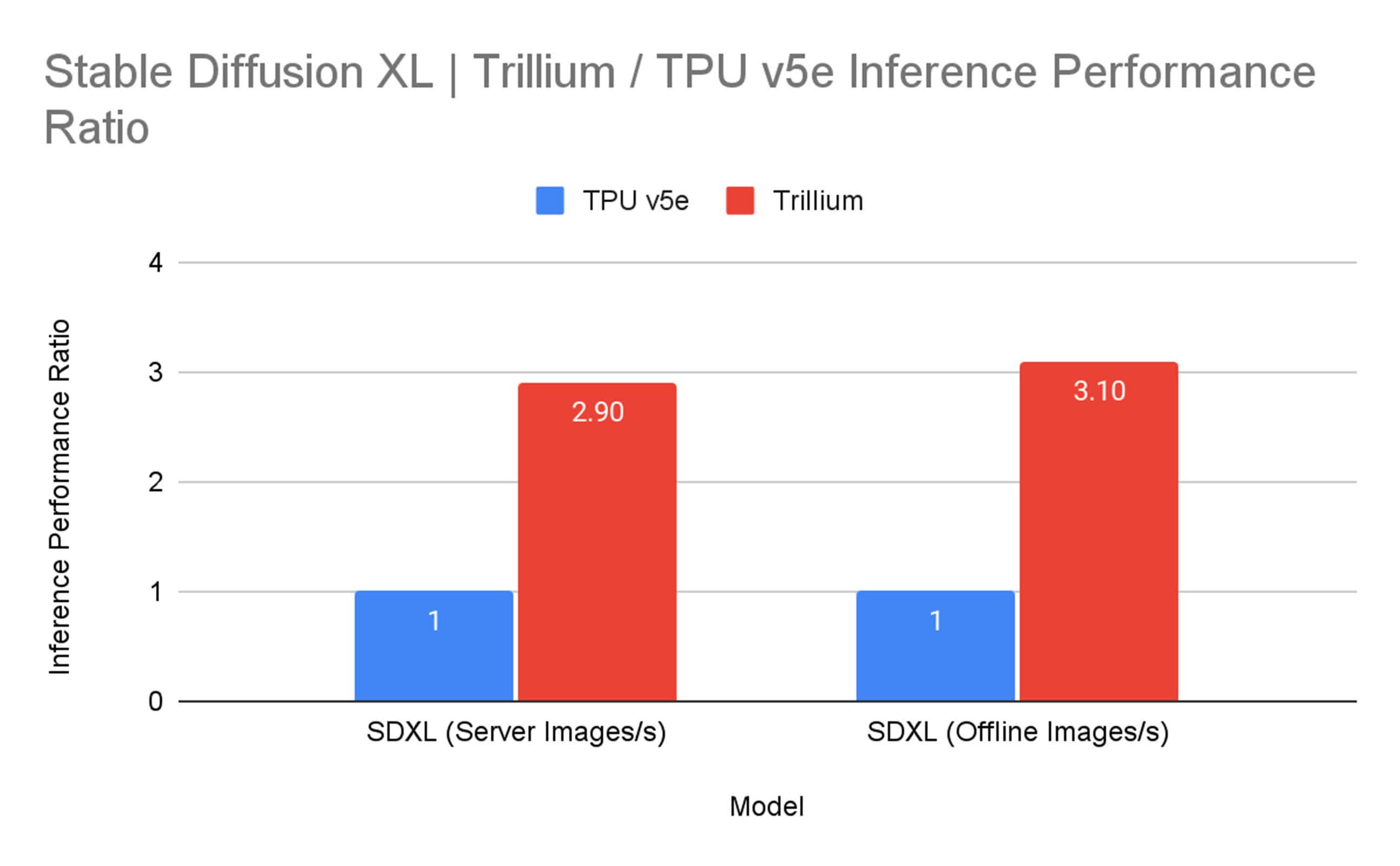

Compared to its predecessor, the TPU v5e, Trillium delivers over four times the training performance and up to three times the inference throughput. This improvement is accompanied by a 67% increase in energy efficiency.

Trillium also doubles the High Bandwidth Memory (HBM) capacity and Interchip Interconnect (ICI) bandwidth of its predecessor. This makes it particularly well suited to large language models such as Gemma 2 and Llama, as well as to compute-intensive inference workloads such as diffusion models like Stable Diffusion XL.

A standout feature of Trillium is its scalability. A single high-bandwidth, low-latency pod can hold up to 256 Trillium chips, and deployments can scale to hundreds of pods. Together, these pods form what is effectively a building-scale supercomputer, linked by Google's Jupiter data center network, which offers a capacity of 13 petabits per second. Trillium's Multislice software enables near-linear performance scaling across these large clusters.

Google's benchmark results are meant to showcase Trillium's capabilities. Compared to the TPU v5e, training performance for models like Gemma 2-27b, MaxText Default-32b, and Llama2-70B increased more than fourfold. For inference, Trillium achieved a threefold increase in throughput when running Stable Diffusion XL.

Alongside the Trillium launch, Google Cloud announced plans to introduce A3 Ultra Virtual Machines (VMs) powered by Nvidia's H200 Tensor Core GPUs. Scheduled for preview next month, these new VMs promise to deliver twice the GPU-to-GPU networking bandwidth of their predecessors, the A3 Mega VMs.

The A3 Ultra VMs are designed to offer up to twice the LLM inference performance of A3 Mega VMs, thanks to nearly double the memory capacity and 1.4 times the memory bandwidth. These VMs will also be available through Google Kubernetes Engine.

Google Cloud is also launching the Hypercompute Cluster, a highly scalable clustering system aimed at simplifying the deployment and management of large-scale AI infrastructure. This system enables customers to treat thousands of accelerators as a single, unified unit.