The big picture: AI and machine learning are often thought of as delivering capabilities and benefits in and of themselves, but they're more likely to enhance other, existing technology categories by accelerating key aspects of those technologies, just as they have for computer graphics in this particular application.

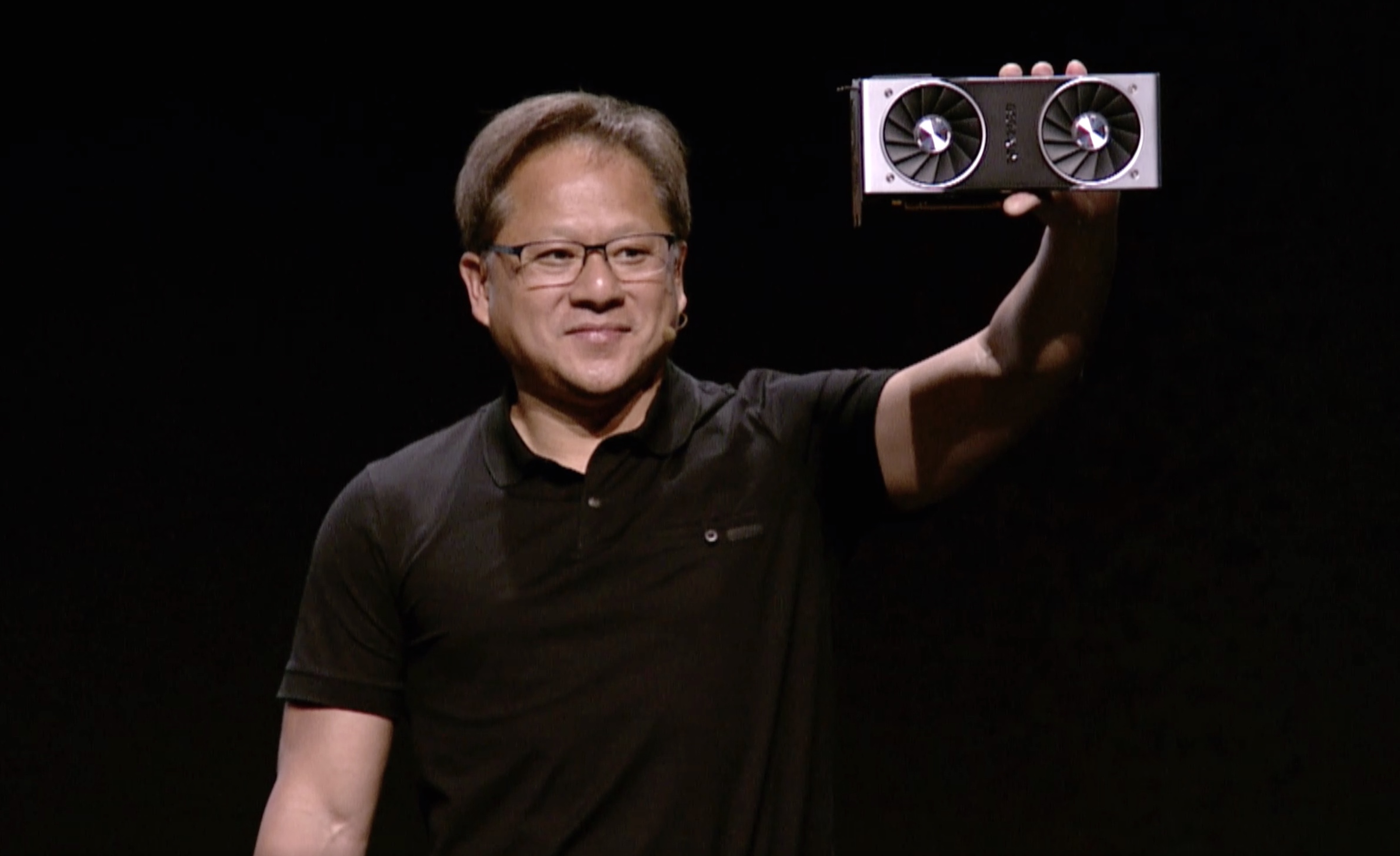

Sometimes it takes more than just brute horsepower to achieve the most challenging computing tasks. At the Gamescom 2018 press event hosted by Nvidia yesterday, the company's CEO Jensen Huang hammered this point home with the release of the new line of RTX 2070 and RTX 2080 graphics cards. Based on the company's freshly announced Turing architecture, these cards are the first consumer-priced products to offer real-time ray tracing, a long-sought-after target in the world of computer graphics and visualization. To achieve that goal, however, it took advancements in graphics technologies as well as in deep learning and AI.

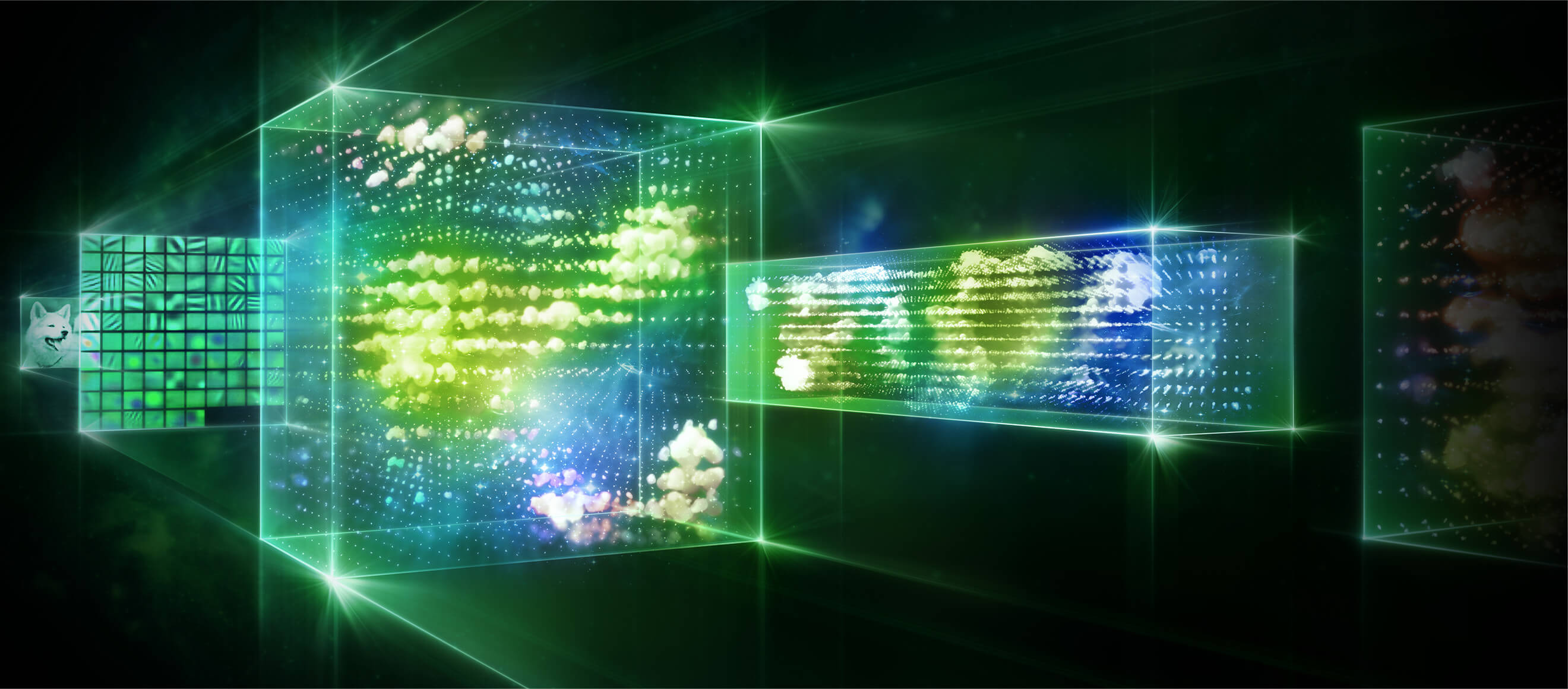

Ray tracing essentially involves the realistic creation of digital images by following, or tracing, the path that light rays would take as they hit and bounce off objects in a scene, taking into consideration the material properties of those objects, such as reflectivity, light absorption, color and much more. It's a very computationally intensive task that previously could only be done offline and not in real time.
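To make the "hit and bounce" idea concrete, here is a minimal Python sketch of a single ray striking a single mirror-like sphere. It is an illustration only, not how Nvidia's hardware or any particular renderer works; the function names (`hit_sphere`, `reflect`, `trace`) and the one-sphere scene are assumptions made for this example.

```python
import math

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit, or None."""
    oc = [o - c for o, c in zip(origin, center)]
    # Solve t^2 + b*t + c = 0 for the ray parameter t (direction is unit length).
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 0 else None  # ignore hits behind the ray origin

def reflect(direction, normal):
    """Mirror a direction about a unit surface normal."""
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    return [d - 2.0 * d_dot_n * n for d, n in zip(direction, normal)]

def trace(origin, direction, center, radius):
    """Return (hit point, bounced direction) for one ray, or None on a miss."""
    t = hit_sphere(origin, direction, center, radius)
    if t is None:
        return None
    point = [o + t * d for o, d in zip(origin, direction)]
    normal = [(p - c) / radius for p, c in zip(point, center)]
    return point, reflect(direction, normal)

# Hypothetical scene: a camera at the origin looking down -z at a unit sphere.
result = trace([0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 0.0, -5.0], 1.0)
```

A real renderer repeats this for millions of rays per frame, against many objects and with several bounces each, which is why the workload is so heavy.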

What was particularly interesting about the announcement was how Nvidia ended up solving the real-time ray tracing problem, a challenge the company says it worked on for 10 years. As part of their RTX work, the company created some new graphical compute subsystems inside their GPUs called RT Cores that are dedicated to accelerating the ray tracing process. While different in function, these are conceptually similar to programmable shaders and other more traditional graphics rendering elements that Nvidia, AMD, and others have created in the past, because they focus purely on the raw graphics aspect of the task.

Rather than simply using these new ray tracing elements, however, the company realized that they could leverage other work they had done for deep learning and artificial intelligence applications. Specifically, they incorporated several of the Tensor Cores they had originally created for neural network workloads into the new RTX boards to help speed up the process. The basic concept is that certain aspects of the ray tracing image rendering process can be sped up by applying algorithms developed through deep learning.

In other words, rather than having to use the brute-force method of rendering every pixel in an image through ray tracing, AI-inspired techniques like denoising are used to speed up the ray tracing process. Not only is this a clever implementation of machine learning, but I believe it's likely a great example of how AI is going to influence technological developments in other areas as well.
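The trade-off behind denoising can be sketched in a few lines of Python: trace only a handful of rays per pixel, accept a grainy result, then clean it up afterward. The example below is a rough illustration under stated assumptions. It uses a plain 3x3 averaging filter as a stand-in, not the learned, neural-network-based denoiser the article describes, and the function names and the flat half-lit "scene" are hypothetical.

```python
import random

def render_noisy(width, height, brightness, samples, rng):
    """Estimate each pixel by averaging a few random rays.

    Each simulated ray either reaches the light (1) or does not (0),
    so a low sample count produces a grainy image.
    """
    return [[sum(rng.random() < brightness for _ in range(samples)) / samples
             for _ in range(width)]
            for _ in range(height)]

def box_denoise(image):
    """Replace each pixel with the mean of its 3x3 neighborhood.

    A simple stand-in for a learned denoiser, used here for illustration.
    """
    height, width = len(image), len(image[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            neighbors = [image[j][i]
                         for j in range(max(0, y - 1), min(height, y + 2))
                         for i in range(max(0, x - 1), min(width, x + 2))]
            row.append(sum(neighbors) / len(neighbors))
        out.append(row)
    return out

def mean_abs_error(image, truth):
    """Average distance of every pixel from the true brightness."""
    pixels = [p for row in image for p in row]
    return sum(abs(p - truth) for p in pixels) / len(pixels)

rng = random.Random(0)  # fixed seed so the run is repeatable
noisy = render_noisy(16, 16, brightness=0.5, samples=1, rng=rng)
cleaned = box_denoise(noisy)
print(mean_abs_error(noisy, 0.5), mean_abs_error(cleaned, 0.5))
```

With one ray per pixel, every pixel in the noisy image is fully lit or fully dark, while the filtered image lands closer to the true value at a fraction of the cost of tracing more rays. A trained network aims to do the same job while preserving edges and detail that a simple blur would smear.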

It's also important to remember that ray tracing is not the only type of image creation technique used on the new family of RTX cards, which will range in price from $499 to $1,199. Like all other major graphics cards, the RTX line will also support more traditional shader-based image rasterization technologies, allowing products based on the architecture to work with existing games and other applications. To leverage the new ray tracing capabilities, in fact, games will have to be specifically designed to tap into them; the effects won't simply show up on their own. Thankfully, it appears that Nvidia has already lined up some big-name titles and game publishers to support their efforts. PC gamers will also have to think carefully about the types of systems that can support these new cards, as they are very power hungry, drawing up to 250W on their own and requiring a minimum 650W power supply for the full desktop system.

For Nvidia, the RTX line is important for several reasons. First, achieving real-time ray tracing is a significant goal for a company that's been highly focused on computer graphics for 25 years. More importantly, though, it allows the company to combine what some industry observers had started to see as two distinct business focus areas (graphics and AI/deep learning/machine learning) into a single coherent story. Finally, the fact that it's their first major gaming-focused GPU upgrade in some time can't be overlooked either.

For the tech industry as a whole, the announcement likely represents one of the first of what will be many examples of companies leveraging AI/machine learning technologies to enhance their existing products rather than creating completely new ones.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting and market research firm. You can follow him on Twitter @bobodtech. This article was originally published on Tech.pinions.