In context: Facebook is stirring more controversy around its content policies with new changes that focus on fake videos created using artificial intelligence. At the same time, misleading content created using other techniques will only be marked as "false" and prevented from going viral through the news feed.

It's no secret that Facebook is under pressure due to its controversial stance on political ads and its general policies on what content needs to go through fact-checking filters.

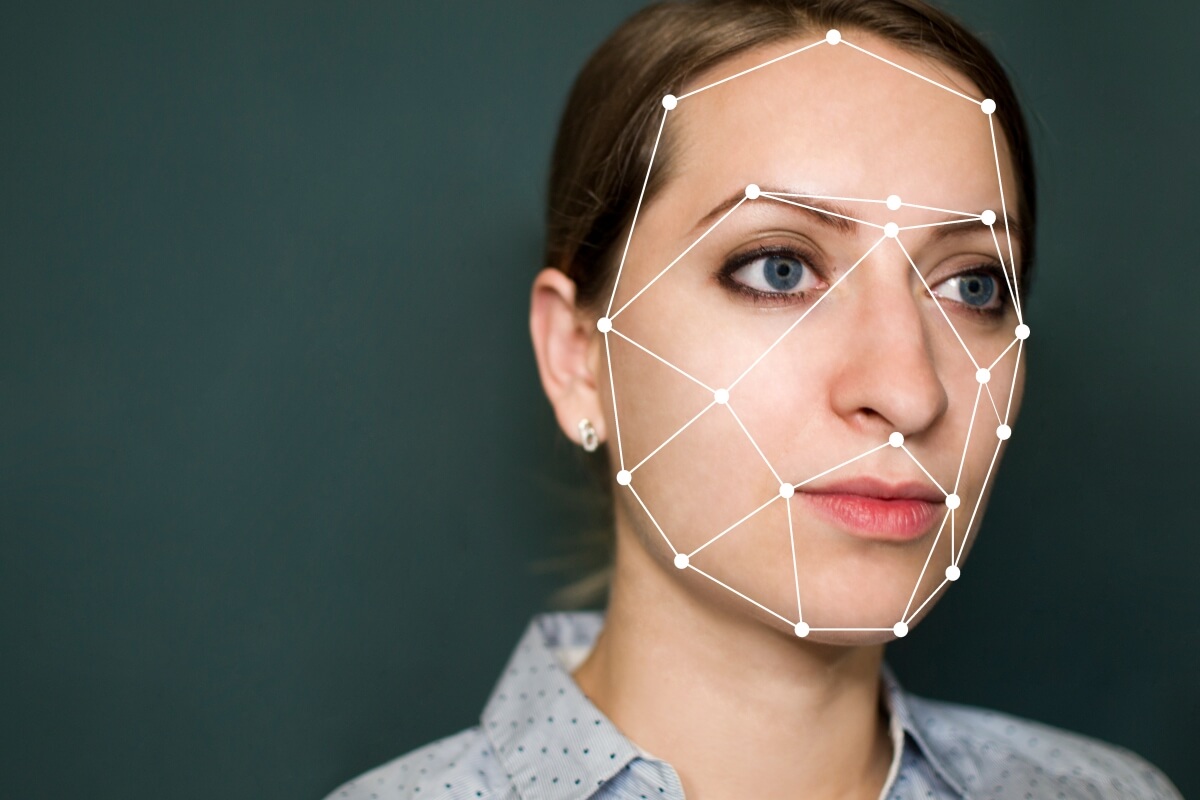

The social giant recently announced that it will ban deepfakes on its platform to keep them from distorting public perception of candidates in the 2020 presidential election campaign. The policy change was first reported by the Washington Post and confirmed by Facebook on Monday night.

The decision means Facebook will begin removing all content that has been manipulated "in ways that aren't apparent to an average person," such as through machine learning techniques, which have recently proven quite adept at producing convincing fakes. And while deepfakes aren't as widespread as other types of manipulated content, the scale of Facebook's platform means they can be weaponized against a politician's image, using content edited to make people think an official said something they didn't actually say.

The obvious problem with the policy is that it doesn't extend to content that is parody or satire, according to Facebook's Monika Bickert. That's also true for "video that has been edited solely to omit or change the order of words." The Washington Post notes that misleading videos that are created using conventional editing methods could slip through the cracks, offering the notable example of a viral video where House Speaker Nancy Pelosi's voice was altered using readily available software.

On the other hand, a video that doesn't meet the new criteria for removal but violates other Community Standards, such as those covering hate speech and graphic violence, can still get the boot from Facebook.

The company says it doesn't want to make a stricter policy where it removes all manipulated videos flagged by its fact-checking filters or one of its 50 worldwide partners that comb through content in over 40 languages. Instead, the social giant will mark some of that content as false and control its visibility in the News Feed so that people can see things in context. The approach isn't ideal, but Facebook thinks it's a good compromise since false videos would still get uploaded on other platforms, which may or may not provide any clues about their authenticity.

Back in September 2019, Facebook partnered with Microsoft and several universities to create better open-source tools for detecting deepfakes as part of the Deepfake Detection Challenge. The company put $10 million toward research grants, hoping to encourage experts to contribute their ideas and spearhead development.