A hot potato: Apple has revealed plans to scan all iPhones and iCloud accounts in the US for child sexual abuse material (CSAM). While the system could benefit criminal investigations and has been praised by child protection groups, there are concerns about the potential security and privacy implications.

The neuralMatch system will use an on-device matching process to scan every image before it is uploaded to iCloud in the US. If it detects suspected illegal imagery, a team of human reviewers will be alerted. Should the reviewers confirm child abuse material, the user's account will be disabled and the US National Center for Missing & Exploited Children (NCMEC) notified.

NeuralMatch was trained using 200,000 images from the National Center for Missing & Exploited Children. It will flag only images whose hashes match those in the database, meaning it shouldn't flag innocent material.

"Apple's method of detecting known CSAM is designed with user privacy in mind. Instead of scanning images in the cloud, the system performs on-device matching using a database of known CSAM image hashes provided by NCMEC and other child safety organizations. Apple further transforms this database into an unreadable set of hashes that is securely stored on users' devices," reads the company's website. It notes that users can appeal to have their account reinstated if they feel it was mistakenly flagged.
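The on-device matching the company describes can be illustrated with a toy example. The sketch below is not Apple's algorithm: it assumes a simple "average hash" over a tiny grayscale grid and a plain Python set standing in for the hash database, and every name in it (`average_hash`, `KNOWN_HASHES`, `is_flagged`) is hypothetical.

```python
# Toy illustration of hash-based matching. This is NOT Apple's algorithm;
# the hash function and all names are assumptions made for this sketch.

def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)

# A 4x4 grayscale "image" (values 0-255) standing in for a database entry.
known_image = [
    [200, 200, 10, 10],
    [200, 200, 10, 10],
    [10, 10, 200, 200],
    [10, 10, 200, 200],
]

# The database holds only hashes, never the images themselves.
KNOWN_HASHES = {average_hash(known_image)}

def is_flagged(pixels):
    """Flag an image only if its hash exactly matches a database entry."""
    return average_hash(pixels) in KNOWN_HASHES

# A uniformly brightened copy keeps the same hash, so it still matches.
brightened = [[min(255, value + 20) for value in row] for row in known_image]

# An unrelated pattern produces a different hash and is not flagged.
unrelated = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]

print(is_flagged(brightened))  # True
print(is_flagged(unrelated))   # False
```

The point of the sketch is that the device compares compact fingerprints rather than the pictures themselves, which is why a match says nothing about images that are not already in the database.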

Apple already checks iCloud files against known child abuse imagery, but extending this to local storage has worrying implications. Matthew Green, a cryptography researcher at Johns Hopkins University, warns that the system could be used to scan for other files, such as those that identify government dissidents. "What happens when the Chinese government says: 'Here is a list of files that we want you to scan for,'" he asked. "Does Apple say no? I hope they say no, but their technology won't say no."

It's disturbing to me that a bunch of my colleagues, people I know and respect, don't feel like those ideas are acceptable. That they want to devote their time and their training to making sure that world can never exist again.

--- Matthew Green (@matthew_d_green) August 5, 2021

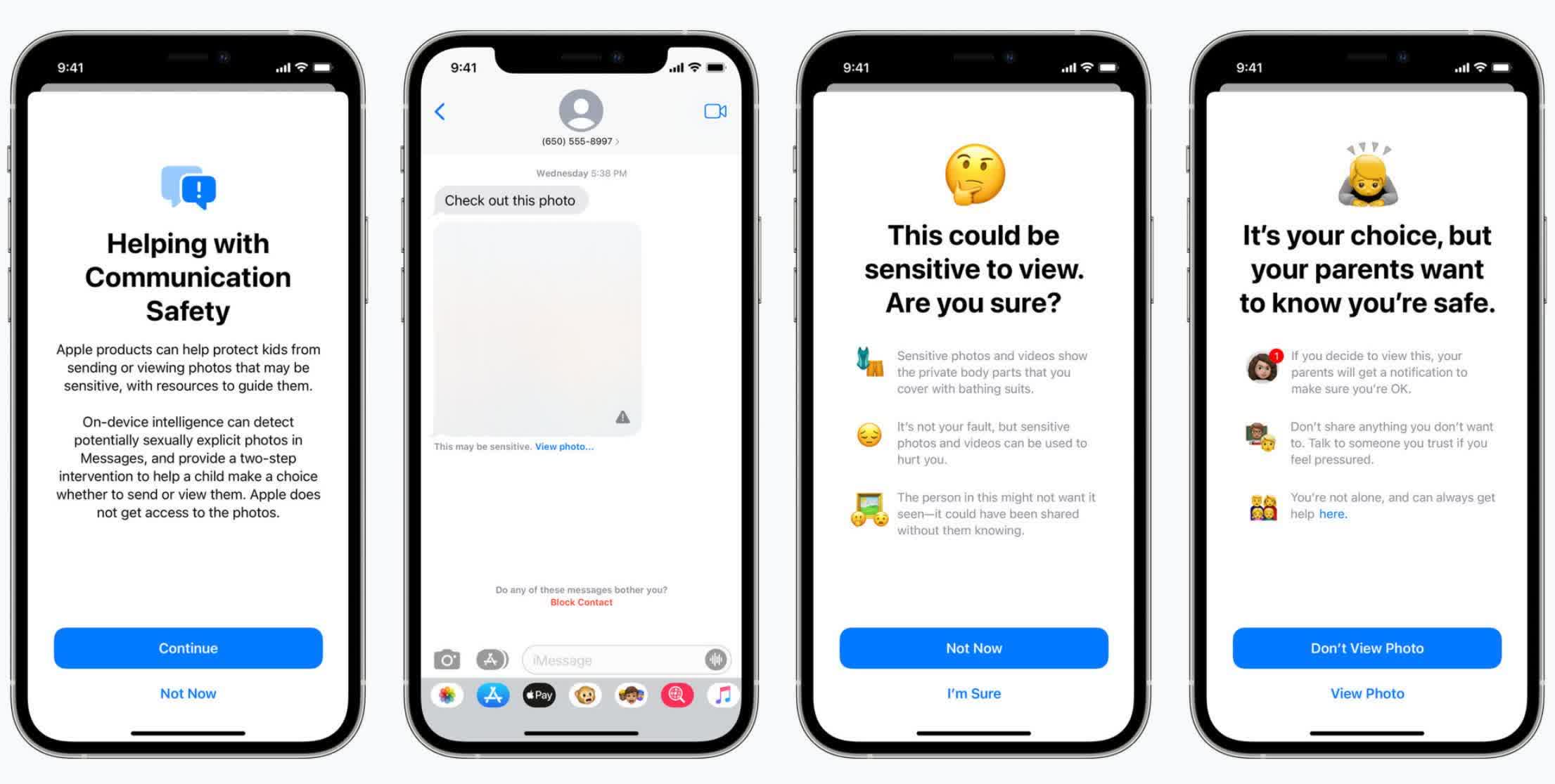

Additionally, Apple plans to scan users' encrypted messages for sexually explicit content as a child safety measure. The Messages app will add new tools to warn children and their parents when receiving or sending sexually explicit photos.

Green also said that someone could trick the system into believing an innocuous image is CSAM. "Researchers have been able to do this pretty easily," he said. This could allow a malicious actor to frame someone by sending a seemingly normal image that triggers Apple's system.
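The kind of false match Green describes can be shown with a toy hash. The sketch below assumes a simple brightness-based hash rather than Apple's actual function, and its names (`toy_hash`, `target`, `lookalike`) are hypothetical: two different pixel grids collapse to the same hash, so a matcher that compares only hashes cannot tell them apart.

```python
# Toy hash collision. This is an assumed, simplified hash, NOT Apple's.

def toy_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if value > mean else "0" for value in flat)

# A high-contrast 2x2 "image" standing in for a database entry.
target = [[250, 5], [5, 250]]

# A flat, low-contrast grid with completely different pixel values.
lookalike = [[130, 120], [90, 140]]

print(target != lookalike)                      # True: the images differ
print(toy_hash(target) == toy_hash(lookalike))  # True: the hashes collide
```

Real perceptual hashes are far more complex than this toy one; the sketch only shows why matching on hashes alone leaves room for the attack Green warns about.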

"Regardless of what Apple's long term plans are, they've sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users' phones for prohibited content," Green added. "Whether they turn out to be right or wrong on that point hardly matters. This will break the dam --- governments will demand it from everyone."

The new features arrive on iOS 15, iPadOS 15, macOS Monterey, and watchOS 8, all of which launch this fall.

Masthead credit: NYC Russ