In context: With the kind of focus that most organizations are putting on the application side of digital transformation, it's easy to forget what a critical role data plays in these efforts. Yes, the move to cloud-native, container-based applications (whether hosted in public or private clouds) is critical, but so are projects to organize and analyze the vast amounts of data that most organizations generate or have access to. In particular, the need to support data portability and analytics tools across multiple public and private clouds, the now-classic "hybrid cloud" model, is becoming increasingly important.

One important element of that work involves deciding how best to leverage the data assets that are available. Recently, many companies have started to consider what are being called data lakehouses. As the name suggests, a data lakehouse combines some of the characteristics of a data lake and a data warehouse into a single entity.

Data lakes typically hold huge amounts of unstructured and semi-structured data consisting of text, images, audio, video, etc., and are used for compiling troves of information on a given process or topic. Data warehouses, on the other hand, are commonly made up of structured data organized into tables of numbers, values, etc., and are used for traditional database query applications.

Data lakehouses extend the flexibility and capability of the data they store by allowing you to use the types of powerful analytics tools that were initially created for data warehouses on data lakes. In addition, they enable you to combine elements of the two data structure types for more sophisticated analysis, which many have discovered is extremely useful for AI and ML applications.
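To make the idea concrete, here is a minimal sketch in Python of warehouse-style SQL being run across both kinds of data. It is an illustration only, not how any particular lakehouse product works: the sample records, the table names, and the helper functions `build_store` and `events_per_customer` are all hypothetical, and an in-memory SQLite database stands in for a real analytics engine.

```python
import json
import sqlite3

# Hypothetical "lake-style" records: semi-structured JSON with uneven fields.
RAW_EVENTS = [
    '{"customer_id": 1, "type": "review", "text": "Great product", "rating": 5}',
    '{"customer_id": 2, "type": "support_call", "duration_sec": 340}',
    '{"customer_id": 1, "type": "support_call", "duration_sec": 120}',
]

# Hypothetical "warehouse-style" rows: a fixed schema of typed columns.
CUSTOMERS = [(1, "Acme Corp", "EMEA"), (2, "Globex", "APAC")]


def build_store():
    """Load both kinds of data into one in-memory store (an assumption for the demo)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT)"
    )
    conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", CUSTOMERS)

    # Keep the raw JSON payload as-is and lift out only the fields needed for joins.
    conn.execute("CREATE TABLE events (customer_id INTEGER, type TEXT, payload TEXT)")
    for line in RAW_EVENTS:
        record = json.loads(line)
        conn.execute(
            "INSERT INTO events VALUES (?, ?, ?)",
            (record["customer_id"], record["type"], line),
        )
    return conn


def events_per_customer(conn):
    """Run a warehouse-style aggregate that joins structured and semi-structured data."""
    query = """
        SELECT c.name, c.region, COUNT(e.type) AS event_count
        FROM customers AS c
        LEFT JOIN events AS e ON e.customer_id = c.id
        GROUP BY c.id
        ORDER BY c.id
    """
    return conn.execute(query).fetchall()


if __name__ == "__main__":
    for row in events_per_customer(build_store()):
        print(row)
```

The point of the sketch is the single query at the end: the free-form event records keep their original payload, yet they can still be counted and joined against the structured customer table with ordinary SQL.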

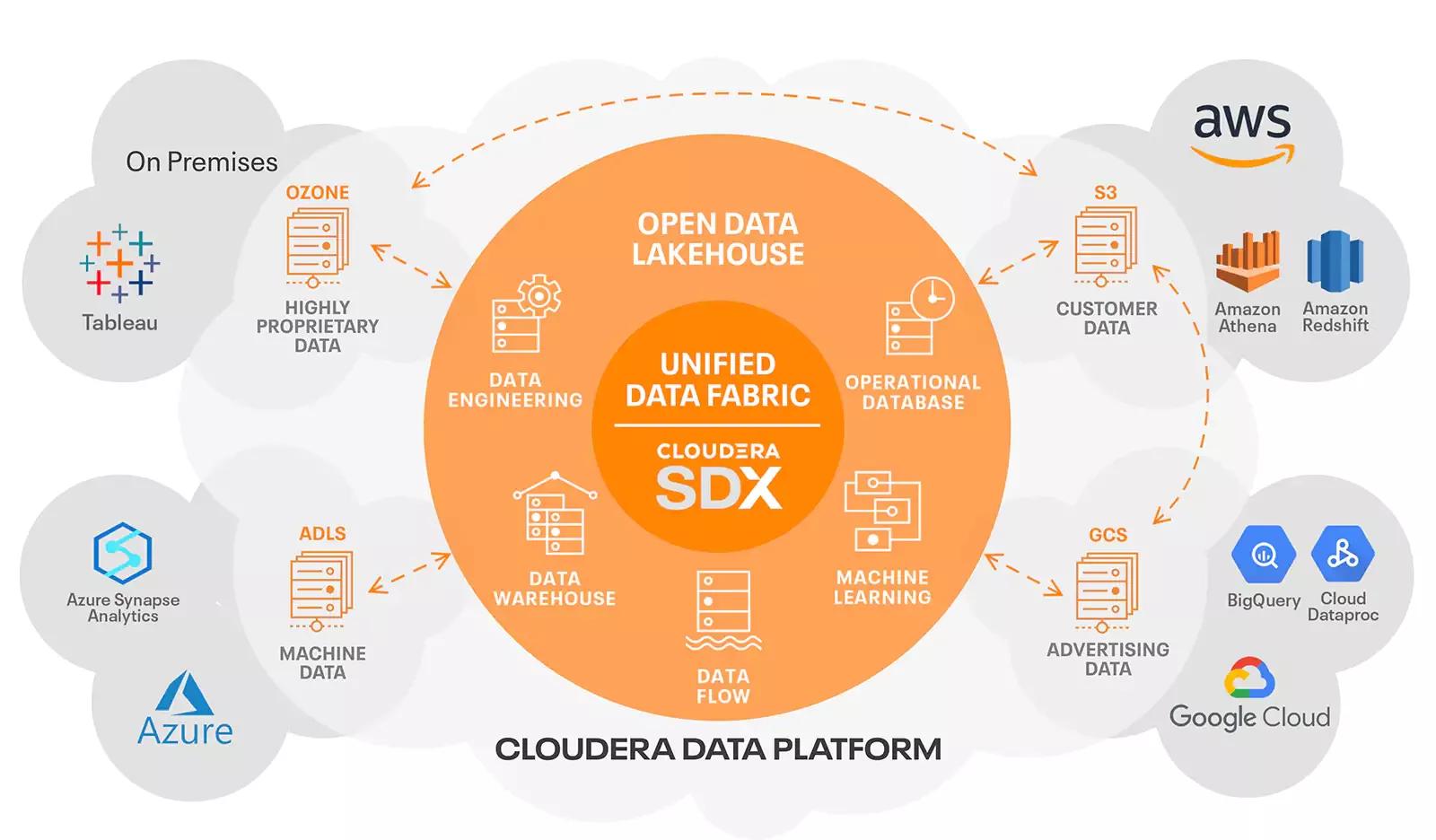

A key proponent of the data lakehouse concept is Cloudera, a company that traces some of its roots back to the open-source Hadoop big data software utilities that served as a key driver for the growth of data lakes. At Cloudera's recent Evolve 2022 event, the company unveiled some additions to its core Cloudera Data Platform (CDP) tools that should help enable the use of data lakehouses in more environments.

The company is bringing the ability to easily move data and analytics tools across multiple public clouds, including AWS and Microsoft Azure, as well as an organization's private cloud. Cloudera refers to the concept as "hybrid data," where data now takes on the flexibility and lock-in avoidance that hybrid cloud applications have begun to offer.

To bring the hybrid data concept to life, Cloudera enabled three specific new capabilities. Portable Data Services lets companies move the analytics applications and services created for a particular data set across various public and private environments without needing to change a single line of code. Secure Data Replication moves an exact copy of the data itself across the various environments, ensuring that companies have access to the data they need for a given workload. Finally, Universal Data Distribution leverages the company's new Cloudera DataFlow tool for ingesting, or importing, data into the platform, with a particular focus on data streams, so that live data feeds can be integrated into the company's enterprise data management tools as well. Like most aspects of Cloudera's solution, DataFlow is built on open-source tools, notably Apache NiFi.
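What "an exact copy" means in practice can be illustrated with a generic checksum comparison. The sketch below is an assumption-laden illustration in Python, not a description of how Secure Data Replication is implemented: the function names `sha256_of` and `is_exact_copy` are hypothetical, and the data is represented simply as an iterable of byte chunks.

```python
import hashlib


def sha256_of(chunks):
    """Return the SHA-256 hex digest of a stream of byte chunks."""
    digest = hashlib.sha256()
    for chunk in chunks:
        digest.update(chunk)
    return digest.hexdigest()


def is_exact_copy(source_chunks, replica_chunks):
    """Treat the replica as exact when both streams hash to the same digest."""
    return sha256_of(source_chunks) == sha256_of(replica_chunks)


if __name__ == "__main__":
    source = [b"order_id,amount\n", b"1001,25.00\n"]
    # Same bytes, split at different points, as might happen across environments.
    replica = [b"order_id,", b"amount\n1001,25.00\n"]
    print(is_exact_copy(source, replica))
```

Because the digest is computed over the concatenated bytes, the comparison does not depend on how each environment happens to split the data into chunks, only on the content itself.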

Collectively, the three services give organizations all the tools they need to run data analytics workloads on a wide range of data types across multiple platforms and physical locations. Not only does this give companies the flexibility they've grown to appreciate with hybrid cloud architectures, but it opens up new opportunities as well.

For example, these services can be used to try an analytics workload in different environments to better understand the unique hardware acceleration or platform benefits that different clouds (public or private) can offer. The goal is to help companies build a flexible data fabric that can extend to whatever type of environment is best suited for a given project or data set.

As has become clear with cloud computing in general, support for hybrid, multi-cloud solutions has become table stakes for modern application tools. As a result, vendors have either already created or are working to enable this type of flexible support. It therefore makes tremendous sense to extend this approach to the data and analytics tools that often power these applications. With its latest extensions to CDP, Cloudera is taking an important step in that direction.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.