Something to look forward to: Tech giants like Microsoft and Google, alongside OpenAI, have been making headlines with their AI research and advancements. Not to be outdone, Mark Zuckerberg and Meta have thrown their hat into the AI ring with the release of their new natural language model, LLaMA. According to Meta, a version of the model less than one-tenth the size of GPT-3 outperforms it on most benchmarks.

Announced in a blog post on Friday, Meta's Large Language Model Meta AI (LLaMA) is designed with research teams of all sizes in mind. At a fraction of the size of GPT-3 (the third-generation Generative Pre-trained Transformer), LLaMA offers a small but high-performing resource that even the smallest research teams can leverage, according to Meta.

This smaller footprint means that teams with limited resources can still use the model and contribute to broader AI and machine learning advances.

Today we release LLaMA, 4 foundation models ranging from 7B to 65B parameters.

LLaMA-13B outperforms OPT and GPT-3 175B on most benchmarks. LLaMA-65B is competitive with Chinchilla 70B and PaLM 540B.

The weights for all models are open and available at https://t.co/q51f2oPZlE

1/n pic.twitter.com/DPyJFBfWEq

--- Guillaume Lample (@GuillaumeLample) February 24, 2023

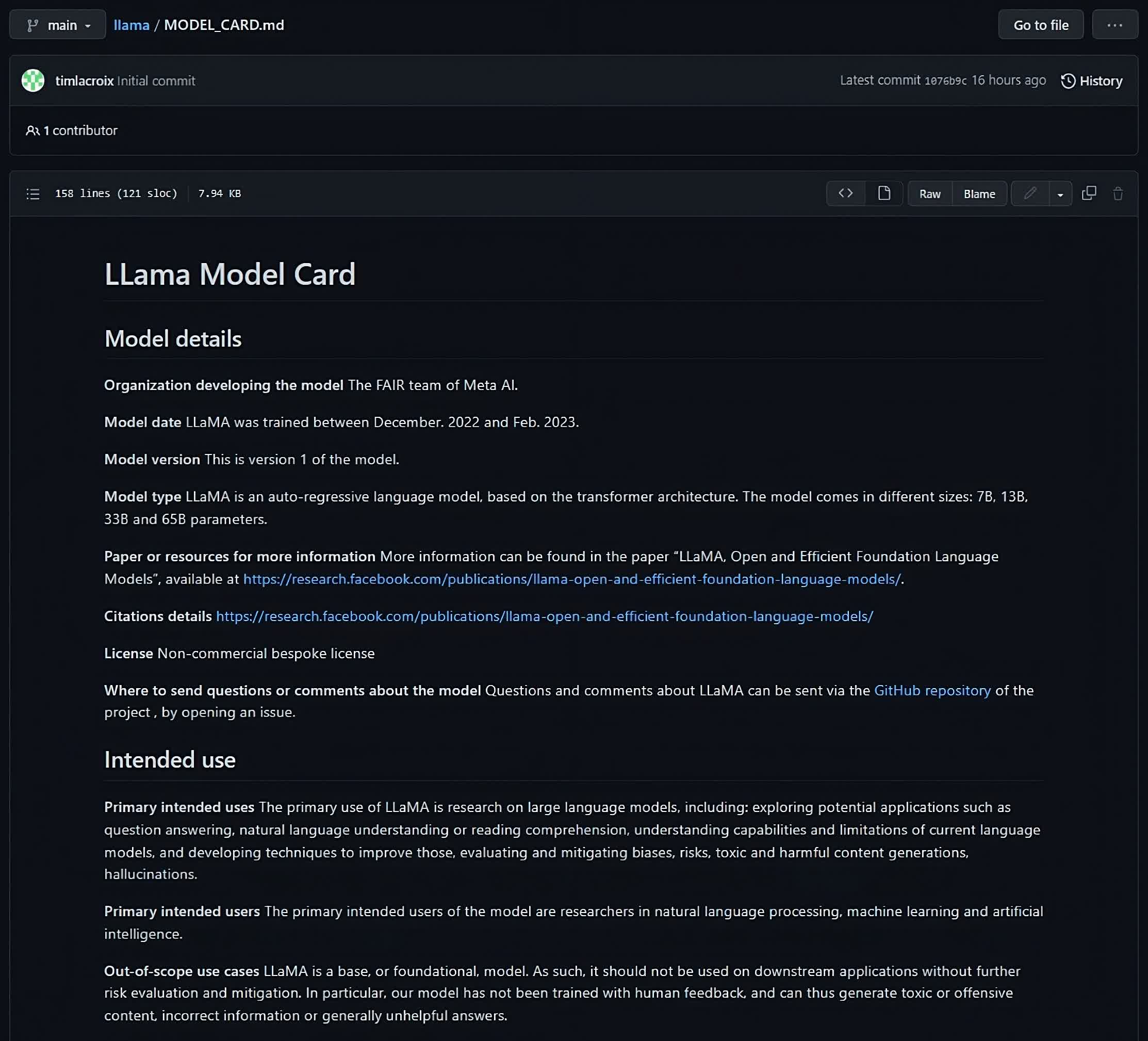

Meta's approach with LLaMA differs markedly from that of OpenAI's ChatGPT, Google's Bard, and Microsoft's Prometheus. The company is releasing the new model under a noncommercial license, reiterating its stated commitment to AI fairness and transparency. Researchers in government, academia, and industry who want to use the model will be required to apply for a license, with access granted on a case-by-case basis.

Researchers who obtain a license will gain access to LLaMA's compact, highly accessible foundation models. Meta is making LLaMA available in four sizes: 7B, 13B, 33B, and 65B parameters. The company has also published the LLaMA model card on GitHub, which provides additional details about the model itself and the public data sources Meta used to train it.

According to the card, the model was trained using CCNet (67%), C4 (15%), GitHub (4.5%), Wikipedia (4.5%), Books (4.5%), ArXiv (2.5%), and Stack Exchange (2%).

Meta was forthcoming about LLaMA's current limitations and its intent to continue developing the model. Although it is a foundation model that can be adapted to many different use cases, the company acknowledged that unresolved risks such as bias and toxic comments remain a threat that must be managed. Meta hopes that sharing this small but flexible model will lead to new approaches that limit, or in some cases eliminate, potential avenues of model exploitation.

The complete LLaMA research paper is available to download from the Meta Research blog, and those interested in access can apply through Meta's online request form.