FreeSync Impressions

It's really hard to explain how good adaptive sync technology is without a real-world demonstration. It's all well and good to describe how stutter and tearing are removed when gameplay is rendered below 60 FPS, but it's another thing entirely to see this technology in action. While I'll do my best to give my impressions of FreeSync and how it works, I'd recommend checking out a live demo of the technology if you are thinking of upgrading to an adaptive sync setup.

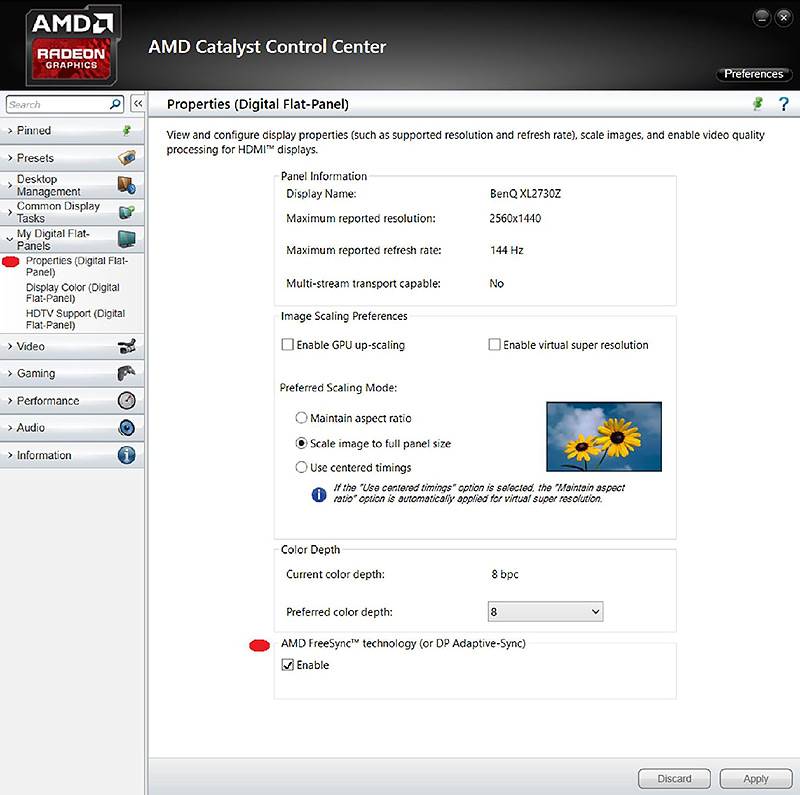

First things first: the setup. It's as easy as attaching the FreeSync display to your AMD graphics card via DisplayPort, installing the latest Catalyst driver (I tested with the 15.3.1 beta drivers for this review), and then enabling FreeSync in the display settings section. There is no need to enable FreeSync for individual games: with the setting enabled for a display, it will always be active regardless of whether v-sync is enabled or disabled. Changing v-sync settings in games only affects the method of synchronization used for render rates outside the variable refresh rate range.
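To make that last point concrete, here is a minimal sketch of the decision as I understand it from the behavior described above. The function name and the returned labels are my own illustration, not anything exposed by AMD's driver, and the 48-75 Hz window is simply the range of the monitor used in this review.

```python
def sync_method(fps, min_hz, max_hz, vsync_on):
    """Return which synchronization method applies at a given render rate.

    Hypothetical helper for illustration only: inside the variable refresh
    window adaptive sync is always used; outside it, the in-game v-sync
    setting decides what happens.
    """
    if min_hz <= fps <= max_hz:
        return "adaptive sync"       # v-sync setting is irrelevant here
    if vsync_on:
        return "v-sync"              # frames wait for a fixed refresh
    return "no sync (tearing)"       # frames are shown as soon as ready


# Example with an assumed 48-75 Hz window
for fps in (40, 55, 90):
    for vsync_on in (True, False):
        print(fps, vsync_on, sync_method(fps, 48, 75, vsync_on))
```

The v-sync flag only changes the result for the 40 FPS and 90 FPS cases, which fall outside the window.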

Note that at this stage, FreeSync is only supported on single-GPU systems: dual-GPU users will have to disable CrossFire until a driver update is provided in April to enable FreeSync and CrossFire combinations. Multiple FreeSync displays are also unsupported, so you won't be able to have Eyefinity with FreeSync just yet, though a combination of one FreeSync display with other non-FreeSync displays should work.

AMD does have a windmill demo for FreeSync that allows you to easily enable and disable FreeSync, change frame rates and animations, and test out adaptive sync in general. As expected, FreeSync on looks smoother than off, but how does it fare in games?

Well, it basically looks the same as G-Sync. If the frame rates you're achieving in game are between 40 and 60 FPS, you're in the adaptive sync sweet spot where you'll see the most benefits. With v-sync off, gameplay in this range is filled with tearing and occasional stuttering as frame rates naturally fluctuate within this range. It's a similar story with v-sync on: the repeated frames reduce tearing, but increase stuttering significantly.

With FreeSync enabled, both of these problems are solved, and gameplay looks smooth and tear-free. It may be hard to believe, but gameplay at 45 FPS really does look just as smooth as 60 FPS. Fluctuations in frame rates normally introduce stutter and jank in this FPS range, but with both adaptive sync technologies, the stutter is removed and you won't realize you're gaming below 60 FPS unless you have a counter on-screen. It really is remarkable what a difference it can make to a gaming experience.

With the Radeon R9 290 I used for this review, there were some games I couldn't play at maximum settings at 2560 x 1080 while sustaining a frame rate above the 34UM67's minimum refresh rate (48 Hz, more on that later). Dragon Age: Inquisition is one such title where I had a noticeably smoother experience gaming with FreeSync on, and I also saw big differences in Crysis 3, Shadow of Mordor and Watch Dogs, where I didn't consistently achieve 60 FPS. But it's not just these titles where you'll see the benefits: FreeSync is effective in every game, and there's no reason to turn it off when it's available to you.

The 34UM67 I received to review has a maximum refresh rate of 75 Hz, though adaptive refresh rates between 60 and 75 Hz don't have as much effect as those below 60 Hz. This is because, although you can still get stutter with v-sync on due to repeated frames, with v-sync off you don't get as much tearing above 60 Hz. FreeSync removes all stuttering and tearing as you would expect, improving the experience somewhat, but the improvement is not as noticeable as it is below 60 Hz.

And while FreeSync does allow you to choose between v-sync on and v-sync off for frame rates above the maximum refresh rate (75 Hz in my case), there isn't a huge difference between the two. While gaming I opted to have v-sync enabled so the render rate was capped at 75 FPS, and this was a fine solution identical to what you get with G-Sync in these situations. Disabling v-sync will give you faster input for competitive games, and it's great to have an option, though I don't see any downside to Nvidia's implementation outside of niche situations.

It's a different situation entirely when frame rates dip below the minimum refresh rate, which let's assume is 40 Hz. With v-sync enabled, gameplay below 40 FPS stutters notably more with FreeSync enabled than with it disabled, and this is due to some overhead with polling and having to repeat frames to 40 Hz. With FreeSync disabled, these frames are repeated to 75 Hz (or whatever the maximum refresh rate is), which creates a different and less janky experience. This is exactly the same experience as you get with G-Sync monitors dipping below 30 FPS.
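A rough way to see why repeating frames to 40 Hz feels worse than repeating them to 75 Hz is to model how long each frame stays on screen. The sketch below is my own simplified model, not a description of what the driver actually does: it assumes frames finish at a perfectly steady rate and each one appears at the next fixed refresh tick, and it ignores the polling overhead mentioned above. The function name is hypothetical.

```python
from fractions import Fraction
from math import ceil


def hold_times_ms(fps, refresh_hz, frames=16):
    """On-screen time of successive frames under fixed-refresh v-sync.

    Simplified model: frame k is ready at k / fps seconds and is shown at
    the first refresh tick at or after that moment. Fractions keep the
    tick arithmetic exact.
    """
    frame = Fraction(1000, fps)           # render time per frame, ms
    refresh = Fraction(1000, refresh_hz)  # time between refreshes, ms
    shown_at = [ceil(k * frame / refresh) * refresh
                for k in range(1, frames + 1)]
    return [float(b - a) for a, b in zip(shown_at, shown_at[1:])]


for hz in (40, 75):
    times = hold_times_ms(35, hz)
    print(hz, "Hz:", sorted(set(round(t, 1) for t in times)))
```

Under these assumptions, a 35 FPS game on a 40 Hz refresh shows most frames for 25 ms with an occasional 50 ms hold, while on a 75 Hz refresh the holds are roughly 26.7 ms with an occasional 40 ms. The smaller jump between hold times at the higher refresh rate is consistent with the less janky result I saw.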

Choosing to disable v-sync is a better option. You do introduce tearing in some circumstances, but performance feels better due to less stuttering. And unlike with v-sync enabled, there is no noticeable difference in smoothness in these low frame rate situations with FreeSync enabled compared to disabled.

It's also important to note that as soon as in-game frame rates dip below the minimum refresh rate, the smoothness immediately vanishes. This is understandable, and is the case for both G-Sync and FreeSync displays.

This brings me to my one main complaint about FreeSync, which is really a complaint directed towards FreeSync display manufacturers: a 48 Hz minimum refresh rate, which is the case for LG's FreeSync monitors like the one I used for this review, is too high to get the full benefits out of the technology. The minimum refresh rate should be 40 Hz at the very highest, and ideally 30 Hz to match G-Sync.

Again, this is not a downside with FreeSync itself: the specification allows refresh rates of 9 to 240 Hz. But when you have a monitor that supports just 48-75 Hz, you're cutting out nearly half of that ideal variable refresh zone (40-60 Hz), which leads to a noticeable transition from smooth gameplay to stuttering gameplay at the variable refresh boundary of 48 Hz. For the best experience, you want this transition to occur gradually, which is what you get from a lower minimum refresh.
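To put a number on that, here is a quick back-of-the-envelope calculation of how much of the 40-60 Hz zone a given monitor range covers. The helper name is my own, and the 40-60 Hz defaults are just the sweet spot described in this review.

```python
def zone_coverage(monitor_min, monitor_max, zone_min=40, zone_max=60):
    """Fraction of the ideal variable refresh zone a monitor's range covers."""
    overlap = min(monitor_max, zone_max) - max(monitor_min, zone_min)
    return max(0, overlap) / (zone_max - zone_min)


for low, high in ((48, 75), (40, 75), (30, 75)):
    print(f"{low}-{high} Hz covers {zone_coverage(low, high):.0%} of the zone")
```

A 48-75 Hz panel covers 12 of the zone's 20 Hz, or 60%, whereas a panel with a 40 Hz or 30 Hz minimum covers all of it.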

A minimum refresh of 48 Hz also increases the frame rate you'll want to target in games. When I was playing Metro: Last Light on the 34UM67, for example, I targeted 55 FPS to keep the usual frame rate dips during gameplay from taking me below 48 FPS. But that's just 5 FPS shy of 60 FPS, the typical refresh rate of monitors. If the monitor supported a minimum of 40 Hz or 30 Hz, I could have targeted 45-50 FPS, allowing me to increase the graphical fidelity for a largely identical experience. On a 4K monitor, having the ability to target 45 FPS without dipping outside the smooth variable refresh zone is golden, especially considering the performance toll that 4K takes.

Most other OEMs have implemented 40 Hz minimum refresh rates, which, going on my brief experience with some of these monitors at events, makes for a much nicer transition from variable refresh to fixed refresh. These monitors include the full ideal refresh zone, which in my eyes is the bare minimum for an adaptive sync display. Lowering the minimum refresh to 30 Hz would improve the transition even further, as well as giving refresh rate parity with G-Sync.

That said, I should make it clear that even at 48-75 Hz, FreeSync does deliver a noticeably smoother, better gaming experience. Opting for a monitor with a 40 Hz minimum delivers an even better experience, while shifting down to 30 Hz with G-Sync sees small gains. As far as this technology is concerned, the larger the refresh range, the better.