With technology advancing so rapidly around us, sometimes misconceptions can work their way into our common understanding. In this article, we'll take a step back and go over some of the most common things people get wrong when talking about computer hardware. For each one, we'll list the common fallacy as well as why it is not accurate.

#1 You can compare CPUs by core count and clock speed

If you've been around technology for a while, at some point you may have heard someone make the following comparison: "CPU A has 4 cores and runs at 4 GHz. CPU B has 6 cores and runs at 3 GHz. Since 4*4 = 16 is less than 6*3 = 18, CPU B must be better". This is one of the cardinal sins of computer hardware. There are so many variations and parameters that it is impossible to compare CPUs this way.

Taken on their own and all else being equal, a processor with 6 cores will be faster than the same design with 4 cores. Likewise, a processor running at 4 GHz will be faster than the same chip running at 3 GHz. However, once you start adding in the complexity of real chips, the comparison becomes meaningless.

There are workloads that prefer higher frequency and others that benefit from more cores. One CPU may consume so much more power that the performance improvement is worthless. One CPU may have more cache than the other, or a more optimized pipeline. The list of traits that the original comparison misses is endless. Please never compare CPUs this way.
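To see how the naive cores-times-GHz product can mislead, here is a minimal sketch that adds just one missing factor: instructions per clock (IPC). The IPC values are made-up, hypothetical numbers chosen only for illustration. The model also assumes a workload that can keep a given number of cores perfectly busy, which real programs rarely do.

```python
def naive_score(cores: int, ghz: float) -> float:
    """The flawed 'cores x GHz' comparison."""
    return cores * ghz


def throughput(cores: int, ghz: float, ipc: float, threads: int) -> float:
    """Rough throughput in billions of instructions per second.

    Assumes each active core retires `ipc` instructions per clock and
    that the workload can keep at most `threads` cores busy.
    """
    active = min(cores, threads)
    return active * ghz * ipc


# Hypothetical chips: A uses a wider core design with higher IPC than B.
cpu_a = dict(cores=4, ghz=4.0, ipc=1.5)
cpu_b = dict(cores=6, ghz=3.0, ipc=1.0)

print(naive_score(cpu_a["cores"], cpu_a["ghz"]))  # 16.0
print(naive_score(cpu_b["cores"], cpu_b["ghz"]))  # 18.0

# Compare a single-threaded workload with one that can use six threads.
for threads in (1, 6):
    a = throughput(threads=threads, **cpu_a)
    b = throughput(threads=threads, **cpu_b)
    print(f"{threads} thread(s): A={a}, B={b}")
```

With these assumed numbers, the chip the naive formula ranks lower wins in both cases. Change the IPC values and the ranking can flip again, which is exactly why the single product is not meaningful.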

#2 Clock speed is the most important indicator of performance

Building on the first misconception, it's important to understand that clock speed isn't everything. Two CPUs in the same price category running at the same frequency can have widely varying performance.

Clock speed certainly has an impact, but beyond a certain point other factors play a much bigger role. CPUs can spend a lot of time waiting on other parts of the system, such as main memory, so cache size and architecture are extremely important. A well-designed cache reduces that wasted time and increases the effective performance of the processor.
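One standard way to quantify why cache matters is the average memory access time (AMAT) formula: hit time plus miss rate times miss penalty. The sketch below uses hypothetical latencies measured in clock cycles, not figures for any real CPU.

```python
def amat(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Average memory access time, in the same units as the inputs (cycles here)."""
    return hit_time + miss_rate * miss_penalty


# Hypothetical numbers: a 4-cycle cache hit and a 200-cycle trip to main memory.
small_cache = amat(hit_time=4, miss_rate=0.10, miss_penalty=200)  # 10% of accesses miss
large_cache = amat(hit_time=4, miss_rate=0.02, miss_penalty=200)  # 2% of accesses miss

print(f"small cache: {small_cache:.0f} cycles per access")
print(f"large cache: {large_cache:.0f} cycles per access")
```

Under these assumptions, cutting the miss rate from 10% to 2% makes the average memory access three times faster, even though the clock speed never changed.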

The broader system architecture can also play a huge part. A CPU with a lower clock speed can process more data than a faster-clocked one if its internal architecture is better optimized. If anything, performance per watt is becoming the dominant metric for evaluating newer designs.

#3 The main chip powering your device is a CPU

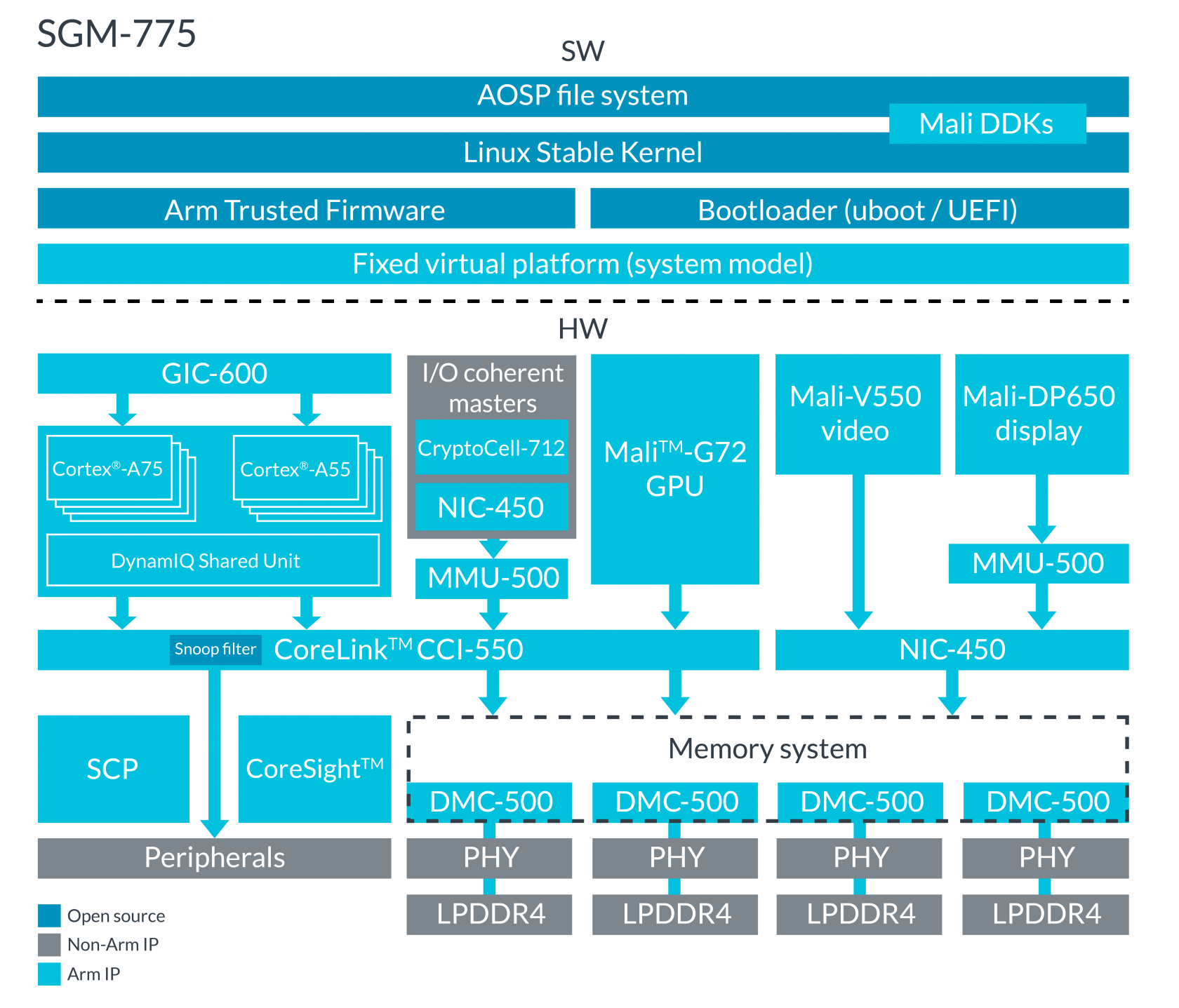

This used to be absolutely true, but it is becoming less true every year. We tend to group a lot of functionality under the terms "CPU" or "processor" when, in reality, the CPU is just one part of a bigger picture. The current trend, known as heterogeneous computing, involves combining many different computing elements into a single chip.

Generally speaking, the chips on most desktops and laptops are CPUs. For almost any other electronic device though, you're more than likely looking at a system on a chip (SoC).

A desktop PC motherboard can afford the space to spread out dozens of discrete chips, each serving a specific functionality, but that's just not possible on most other platforms. Companies are increasingly trying to pack as much functionality as they can onto a single chip to achieve better performance and power efficiency.

In addition to a CPU, the SoC in your phone likely also has a GPU, RAM, media encoders/decoders, networking, power management, and dozens more parts. While you can think of it as a processor in the general sense, the actual CPU is just one of the many components that make up a modern SoC.

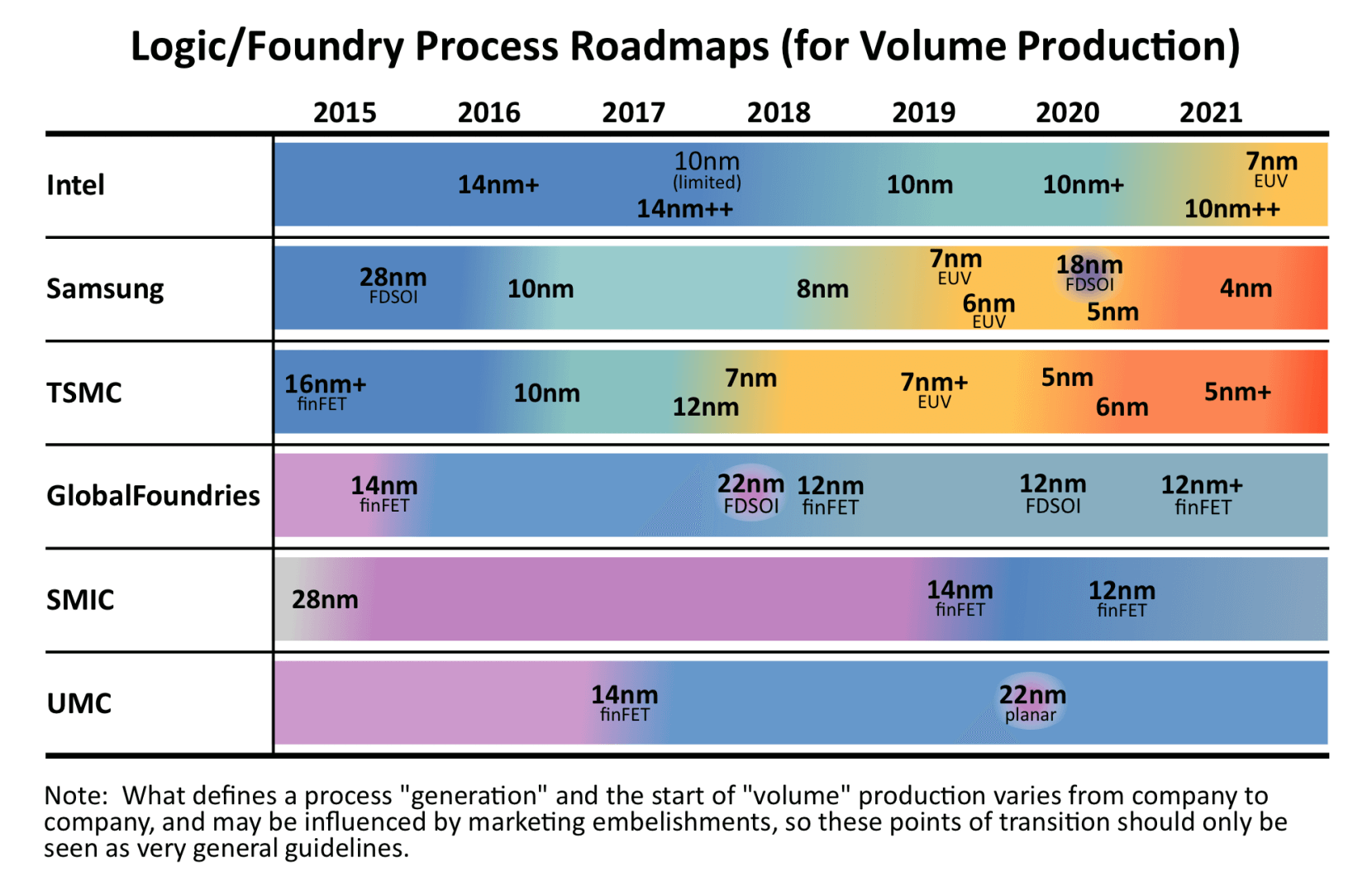

#4 Technology node and feature size are useful for comparing chips

There's been a lot of buzz recently about Intel's delay in rolling out its next technology node. When a chip maker like Intel or AMD designs a product, it is manufactured using a specific technology process. The most common metric used to describe a process is its node size, which traditionally referred to the size of the tiny transistors that make up the product.

This measurement is given in nanometers, and some common process sizes are 14nm, 10nm, and 7nm, with 3nm on the horizon. Since a transistor shrinks in both dimensions, you might expect to fit four transistors on a 7nm process in the same area as one transistor on a 14nm process, but that's not how it works out in practice. There is a lot of overhead, so the number of transistors, and therefore processing power, doesn't scale directly with the node size.

Source: IC Insights

Another, potentially larger, caveat is that there is no standardized way to measure node size. All the major companies used to measure the same way, but they have since diverged, and each now measures slightly differently. In short, the feature size of a chip shouldn't be a primary metric when comparing chips. As long as two chips are roughly within a generation of each other, the one built on the smaller node isn't going to have much of an advantage.
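To put the naive expectation into numbers: if node names were literal feature sizes, a transistor would shrink in both dimensions, so density would scale with the square of the ratio between two nodes. The sketch below computes only that idealized, on-paper figure; real processes fall well short of it.

```python
def ideal_density_gain(old_nm: float, new_nm: float) -> float:
    """Idealized transistor-density gain if node names were literal feature sizes.

    Area scales with the square of the linear dimension, so the gain is
    (old / new) ** 2. Real processes do not achieve this.
    """
    return (old_nm / new_nm) ** 2


for old, new in [(14, 10), (14, 7), (10, 7)]:
    print(f"{old}nm -> {new}nm: up to {ideal_density_gain(old, new):.2f}x on paper")
```

Because modern node names no longer correspond to any single physical dimension, treat these results as theoretical upper bounds rather than predictions.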

#5 Comparing GPU core counts is a useful way to gauge performance

The biggest difference between CPUs and GPUs is the number of cores they have. CPUs have a few very powerful cores, while GPUs have hundreds or thousands of less powerful cores, which lets GPUs process more work in parallel.

Just like how a quad core CPU from one company can have very different performance than a quad core CPU from another company, the same is true for GPUs. There is no good way to compare GPU core counts across different vendors. Each manufacturer will have a vastly different architecture which makes this type of metric almost meaningless.

For example, one company may choose fewer cores but add more functionality, while another may prefer more cores each with reduced functionality. However, as with CPUs, a comparison between GPUs from the same vendor and in the same product family is perfectly valid.

#6 Comparing FLOPs is a useful way to gauge performance

When a new high-performance chip or supercomputer is launched, one of the first things advertised is how many FLOPs it can achieve. The acronym stands for floating point operations per second, and it measures how many floating point calculations a system can perform each second.

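This appears straightforward enough, but of course, vendors can play with the numbers to make their product seem faster than it is. The biggest lever is precision: many chips, especially GPUs and AI accelerators, can perform far more 16-bit (half-precision) operations per second than 64-bit (double-precision) ones, so quoting the lower-precision figure makes the headline number look much larger. Companies can pick the type of calculation and its associated precision to inflate their numbers.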
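Peak FLOPs figures are usually theoretical: cores × clock speed × floating point operations per core per clock cycle. The per-cycle figure depends heavily on precision, because the same vector hardware can often process more narrow values per instruction than wide 64-bit ones. The chip below is entirely hypothetical, and its per-cycle numbers are assumptions.

```python
def peak_flops(cores: int, ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak floating point operations per second."""
    return cores * ghz * 1e9 * flops_per_cycle


# Hypothetical chip: 8 cores at 3 GHz. Assume its vector units complete
# 16 FP64, 32 FP32 or 64 FP16 operations per core per cycle.
per_cycle = {"FP64": 16, "FP32": 32, "FP16": 64}

for precision, ops in per_cycle.items():
    tflops = peak_flops(cores=8, ghz=3.0, flops_per_cycle=ops) / 1e12
    print(f"{precision}: {tflops:.3f} TFLOPS")
```

Same silicon and same clock, yet under these assumptions the headline number quadruples depending on which precision the vendor quotes. None of these figures says anything about memory bandwidth.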

FLOPs also measure only raw CPU/GPU computational throughput and disregard several other important factors, like memory bandwidth. Companies can also optimize the benchmarks they run to unfairly favor their own parts.

#7 ARM makes chips

Almost all low-power and embedded systems are powered by some form of ARM processor. What's important to note though is that ARM doesn't actually make physical chips. Rather, they design the blueprints for how these chips should operate, and let other companies build them.

For example, the A13 SoC in the latest iPhone uses the ARM architecture, but was designed by Apple. It's like giving an author a dictionary and having them write something. The author has the building blocks and has to adhere to guidelines about how the words can be used, but they are free to write whatever they want.

By licensing out their intellectual property (IP), ARM allows Apple, Qualcomm, Samsung, and many others to create their own chips best suited to their own needs. This allows a chip designed for a TV to focus on media encoding and decoding, while a chip designed to go into a wireless mouse will focus on low power consumption.

The ARM chip in the mouse doesn't need a GPU or a very powerful CPU. Because ARM-based processors share the same instruction set, software compiled for ARM can generally run on any of them, given a compatible operating system. This makes developers' jobs easier and increases compatibility.

#8 ARM vs. x86

ARM and x86 are the two dominant instruction set architectures, which define how software communicates with the hardware. ARM is the king of mobile and embedded systems, while x86 dominates the laptop, desktop, and server markets. There are other architectures, but they serve more niche applications.

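An instruction set architecture defines the instructions a processor understands: in effect, the language that software uses to talk to the hardware. How a processor is actually designed on the inside (its microarchitecture) can vary widely between chips that share the same instruction set. Switching between instruction sets is like translating a book into another language. You can convey the same ideas, but you write them out differently. It's entirely possible to write a program once and compile it one way to run on an x86 processor and another way to run on ARM.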

ARM has differentiated itself from x86 in several key ways that have allowed it to dominate the mobile market. The most important is its flexibility and broad range of technology offerings. When building an ARM-based chip, it's almost as if the engineer is playing with Legos: they can pick and choose whatever components they want to build the perfect chip for their application. Need a chip to process lots of video? You can add a more powerful GPU. Need to run lots of security and encryption? You can add dedicated accelerators.

ARM's focus on licensing its technology rather than selling physical chips is one of the main reasons its architecture is the most widely produced. Intel and AMD, on the other hand, stagnated in this area, which created the vacuum that ARM filled.

Intel is most commonly associated with x86, and while it did create the architecture, AMD processors run the same instruction set. If you see x86-64 mentioned somewhere, that's just the 64-bit version of x86. If you're running Windows, you may have wondered why there is both a "Program Files" and a "Program Files (x86)" folder. Programs in the first folder still use x86; they are simply 64-bit programs, while those in "Program Files (x86)" are 32-bit.

Another area that can cause confusion between ARM and x86 is their relative performance. It's easy to think that x86 processors are simply always faster than ARM processors, and that's why we don't see ARM processors in higher-end systems. While that has usually been true so far, it's not really a fair comparison, and it misses the point. ARM's whole design philosophy focuses on efficiency and low power consumption, and it has historically left the high-end market to x86 rather than compete there head-on. While Intel and AMD focus on maximum performance with x86, ARM is maximizing performance per watt.
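A minimal sketch of that trade-off, using two hypothetical chips with made-up numbers (they are not real ARM or x86 parts): the faster chip wins on raw score, while the slower one delivers far more work for each watt it consumes.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    score: float  # benchmark score, higher is better (hypothetical)
    watts: float  # average power draw during the benchmark (hypothetical)

    @property
    def perf_per_watt(self) -> float:
        """Benchmark points delivered per watt of power drawn."""
        return self.score / self.watts


chips = [
    Chip("high-performance design", score=1000, watts=100),
    Chip("low-power design", score=600, watts=15),
]

for chip in chips:
    print(f"{chip.name}: score {chip.score}, {chip.perf_per_watt:.1f} points per watt")
```

For a phone running on a battery, the second number is usually the one that matters.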

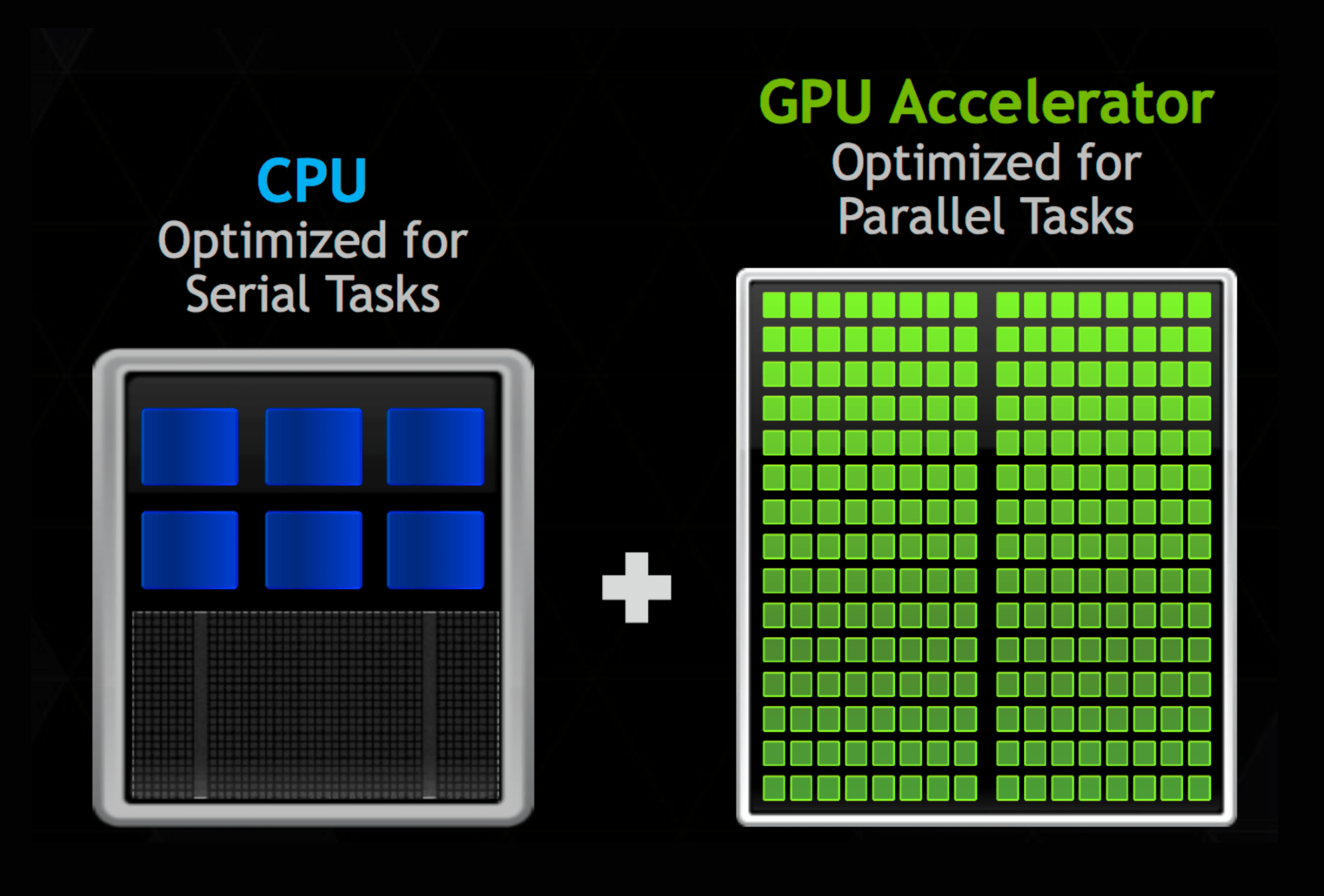

#9 GPUs are faster processors than CPUs

Over the past few years, we have seen a huge rise in GPU performance and prevalence. Many workloads that were traditionally run on a CPU have moved to GPUs to take advantage of their parallelism. For tasks made up of many small parts that can be computed at the same time, GPUs are much faster than CPUs. That's not always the case, though, which is why we still need CPUs.

To make proper use of a GPU, developers generally have to write their code with special compilers and programming interfaces optimized for the platform, such as CUDA or OpenCL. The internal processing cores on a GPU, of which there can be thousands, are very basic compared to those in a CPU. They are designed for small operations that are repeated over and over.

The cores in a CPU, on the other hand, are designed for a very wide variety of complex operations. For programs that can't be parallelized, a CPU will almost always be much faster. With the right compiler, it's technically possible to run CPU code on a GPU and vice versa, but the real benefit only comes if the program was optimized for the specific platform. Price doesn't settle the question either: the most expensive CPUs can cost $50,000 each, while top-of-the-line GPUs cost less than half of that. In summary, CPUs and GPUs both excel in their own areas, and neither is outright faster than the other.
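Amdahl's law, a standard formula not mentioned above, makes the point about non-parallel code concrete: if only a fraction p of a program can run in parallel, n processors give a speedup of at most 1 / ((1 - p) + p / n). The sketch treats every core as equally fast, which is a simplification; GPU cores are individually much weaker than CPU cores, which makes the serial part even more costly on a GPU.

```python
def amdahl_speedup(parallel_fraction: float, n: int) -> float:
    """Upper bound on speedup from n equally fast processors (Amdahl's law)."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n)


for p in (0.5, 0.9, 0.99):
    print(f"p={p}: 8 cores -> {amdahl_speedup(p, 8):.2f}x, "
          f"4096 cores -> {amdahl_speedup(p, 4096):.2f}x")
```

With half of the program stuck on a single thread, even thousands of cores cannot double its speed, which is where a fast CPU core earns its keep.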

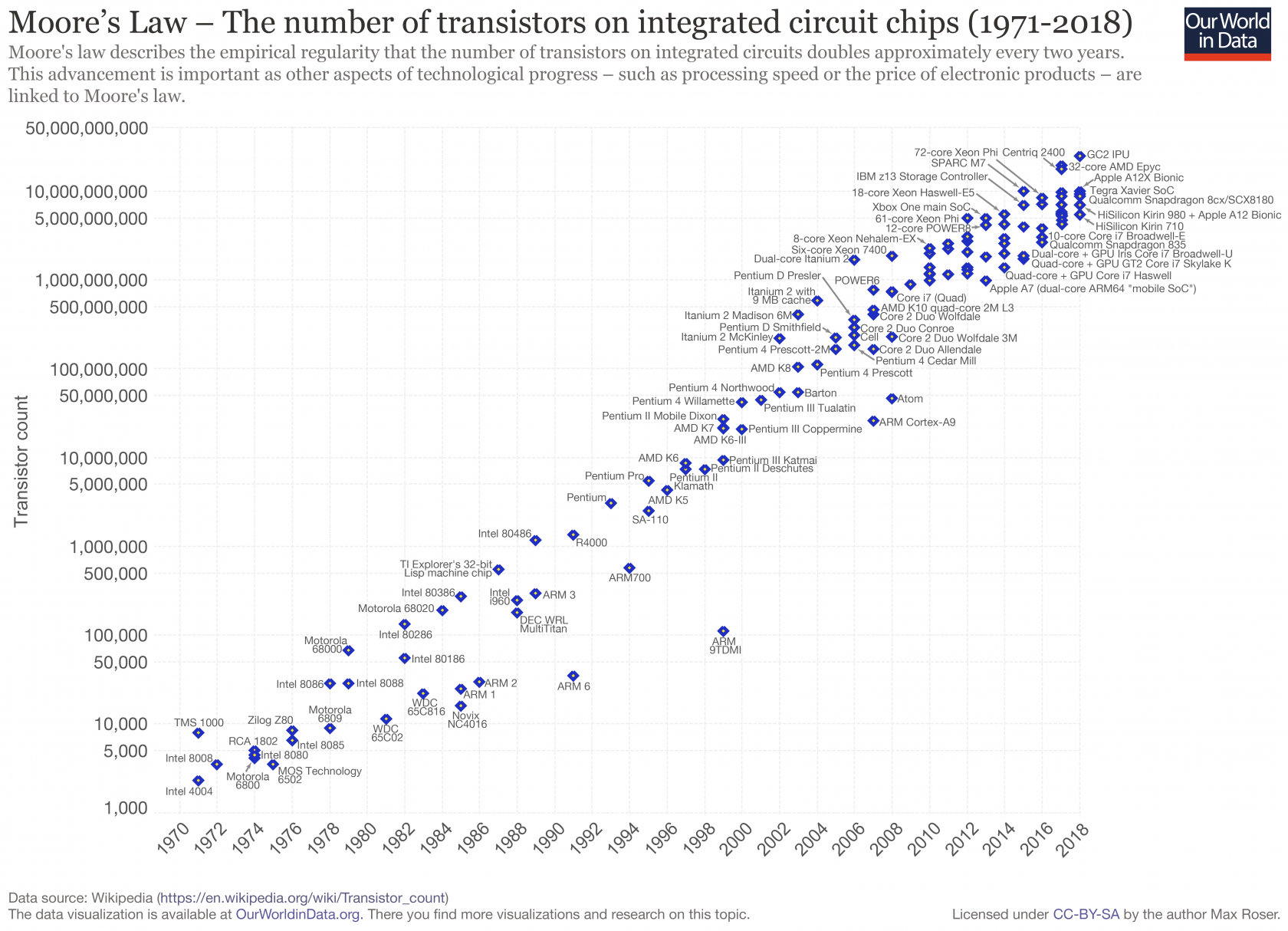

#10 Processors will always keep getting faster

One of the most famous representations of the technology industry is Moore's Law. It is the observation that the number of transistors on a chip roughly doubles every two years. It has been roughly accurate for the past 40 years, but it is approaching its end, and scaling isn't happening like it used to.
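The arithmetic behind the observation is simple compounding: doubling every two years for 40 years is 20 doublings. The sketch below assumes a clean, constant doubling period, which real history only roughly followed, and starts from a normalized count of 1 so the result is a growth factor.

```python
def projected_transistors(start: float, years: float, doubling_period: float = 2.0) -> float:
    """Transistor count after `years` if it doubles every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)


growth = projected_transistors(start=1, years=40)
print(f"Growth over 40 years: {growth:,.0f}x")  # Growth over 40 years: 1,048,576x
```

That is roughly a millionfold increase, which is why even a modest slowdown in the doubling period matters so much.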

If we can't keep fitting more transistors into the same area, one thought is that we could just make chips bigger. The limitation here is getting enough power to the chip and then removing the heat it generates. Modern chips can draw hundreds of amps of current and generate hundreds of watts of heat.
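The link between current and heat is just electrical power, P = V × I. CPU cores run at a supply voltage of roughly 1 volt, so delivering hundreds of watts means pushing hundreds of amps. The power levels and the 1.0 V figure below are illustrative assumptions, not the specs of any particular chip.

```python
def current_needed(power_watts: float, core_voltage: float) -> float:
    """Supply current in amps for a given power draw, from P = V * I."""
    return power_watts / core_voltage


for watts in (65, 150, 250):
    amps = current_needed(watts, core_voltage=1.0)  # assumed ~1 V core supply
    print(f"{watts} W at 1.0 V -> {amps:.0f} A")
```

Every one of those amps has to pass through the socket and the board's power delivery circuitry, and nearly all of that power ends up as heat the cooler must remove.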

Today's cooling and power delivery systems are struggling to keep up and are close to the limit of what can be powered and cooled. That's why we can't simply make a bigger chip.

If we can't make a bigger chip, couldn't we just make the transistors on the chip smaller to add more performance? That concept has been valid for the past several decades, but we are approaching a fundamental limit of how small transistors can get.

With new 7nm and future 3nm processes, quantum effects such as electron tunneling start to become a huge issue, and transistors stop behaving properly. There's still a little more room to shrink, but without serious innovation, we won't be able to go much smaller. So if we can't make chips much bigger and we can't make transistors much smaller, can't we just make the existing transistors run faster? This is yet another area that has delivered benefits in the past but isn't likely to continue.

While processor clock speeds increased every generation for years, they have been stuck in the 3-5 GHz range for the past decade. This is due to a combination of factors. Higher clocks obviously increase power usage, but the main issue again comes down to the limitations of smaller transistors and the laws of physics.
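A commonly used first-order model for the switching (dynamic) power of CMOS logic is P ≈ α·C·V²·f: activity factor, switched capacitance, supply voltage squared, and clock frequency. Higher clocks usually also need a higher voltage, so power grows much faster than frequency. The sketch assumes, purely for illustration, that voltage must rise in proportion to frequency, and it works with ratios so that α and C cancel out.

```python
def relative_dynamic_power(freq_ratio: float, voltage_ratio: float) -> float:
    """Dynamic power relative to a baseline, from P ~ alpha * C * V^2 * f.

    The activity factor (alpha) and switched capacitance (C) are assumed
    unchanged, so they cancel out of the ratio.
    """
    return voltage_ratio ** 2 * freq_ratio


base_ghz = 4.0
for ghz in (4.0, 5.0, 6.0):
    f_ratio = ghz / base_ghz
    same_voltage = relative_dynamic_power(f_ratio, 1.0)
    # Illustrative assumption: voltage must rise in step with frequency.
    scaled_voltage = relative_dynamic_power(f_ratio, f_ratio)
    print(f"{ghz} GHz: {same_voltage:.2f}x at fixed voltage, "
          f"{scaled_voltage:.2f}x if voltage scales too")
```

Under that assumption, a 50% clock increase costs roughly 3.4 times the dynamic power, which helps explain why clock speeds stalled.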

As we make transistors smaller, we also have to make the wires that connect them smaller, which increases their resistance. We have traditionally made transistors faster by bringing their internal components closer together, but some of those components are already separated by only a few atoms. There's no easy way to do better.
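The resistance problem follows from R = ρL / A: a wire's resistance is proportional to its length and inversely proportional to its cross-sectional area. The sketch below treats an interconnect as a simple rectangular copper wire with hypothetical dimensions, and it ignores effects such as surface scattering that make very thin wires even worse in reality.

```python
COPPER_RESISTIVITY = 1.68e-8  # ohm-metres, bulk copper at room temperature


def wire_resistance(length_m: float, width_m: float, height_m: float,
                    resistivity: float = COPPER_RESISTIVITY) -> float:
    """Resistance of a rectangular wire in ohms: R = rho * L / (w * h)."""
    return resistivity * length_m / (width_m * height_m)


length = 1e-6  # a hypothetical 1-micrometre wire segment
wide = wire_resistance(length, 40e-9, 80e-9)
narrow = wire_resistance(length, 20e-9, 40e-9)  # width and height both halved
print(f"shrunk wire has {narrow / wide:.1f}x the resistance")
```

Halving both the width and height of a wire quadruples its resistance per unit length, and higher resistance means slower signals and more power lost in the wiring.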

Putting all of these reasons together, it's clear that we won't be seeing the kind of generational performance upgrades from the past, but rest assured there are lots of smart people working on these issues.