In the beginning, it was just one. Years went by before it became two, and then four. Now you can have 8, 12, 16, or more. Modern PCs have CPUs that can handle lots of threads, all at the same time, thanks to developments in chip design and manufacturing.

But what exactly are threads and why is it so important that CPUs can crunch through more than just one? In this article, we'll answer these questions and more.

A stitch in time: What is a thread?

We can begin to delve into the world of processor threads by jumping straight in and answering the opening question: just what is a thread?

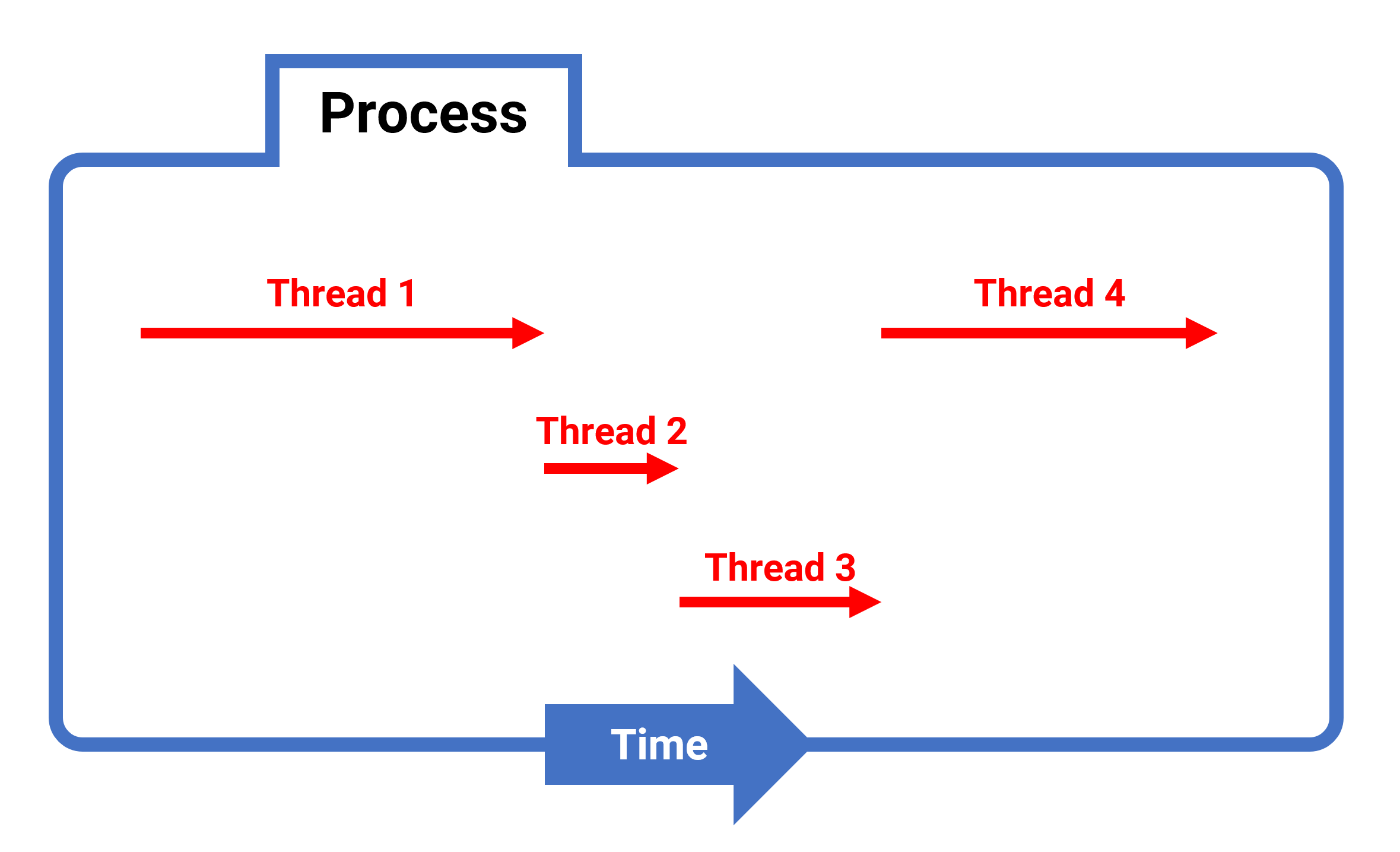

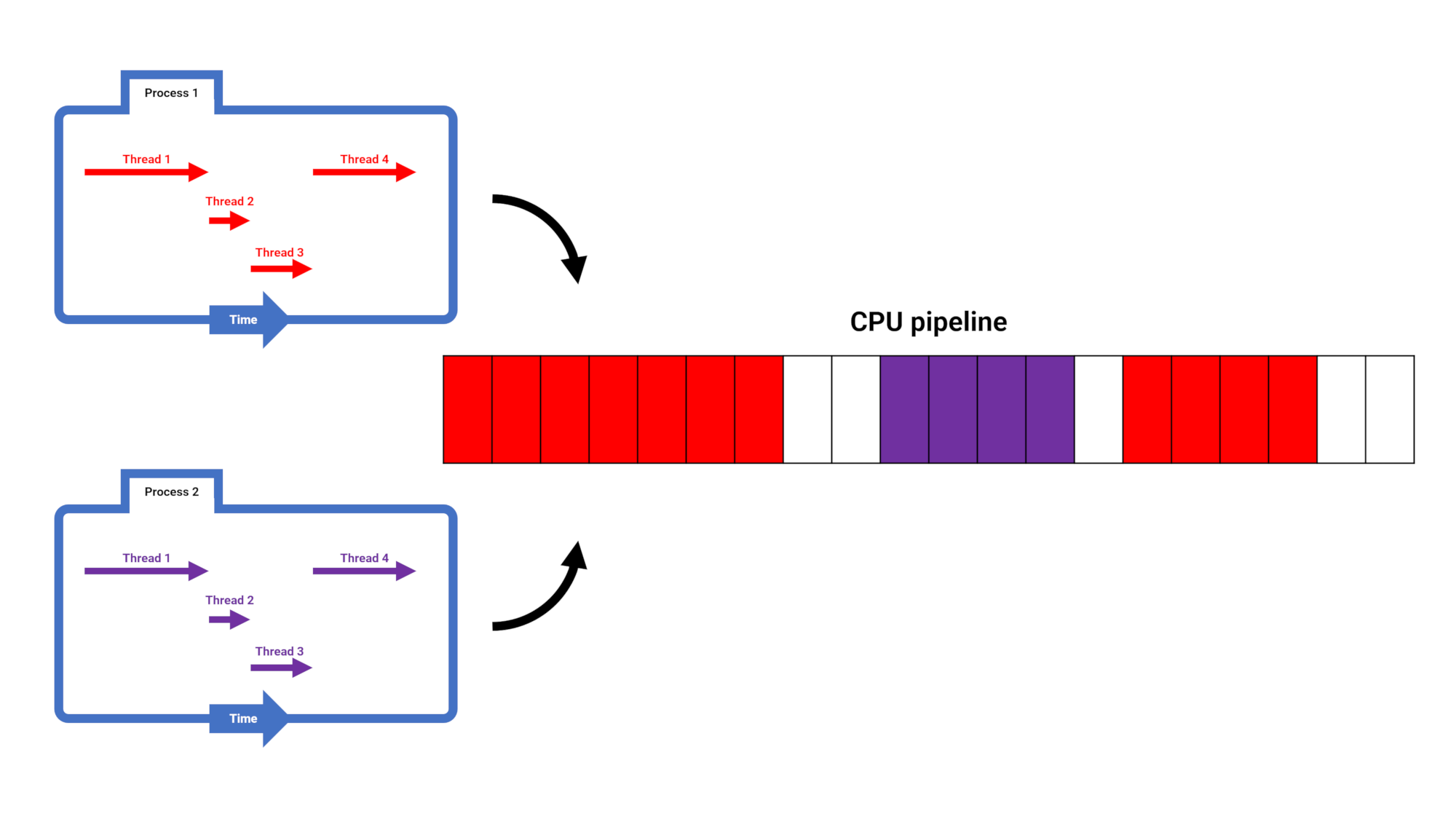

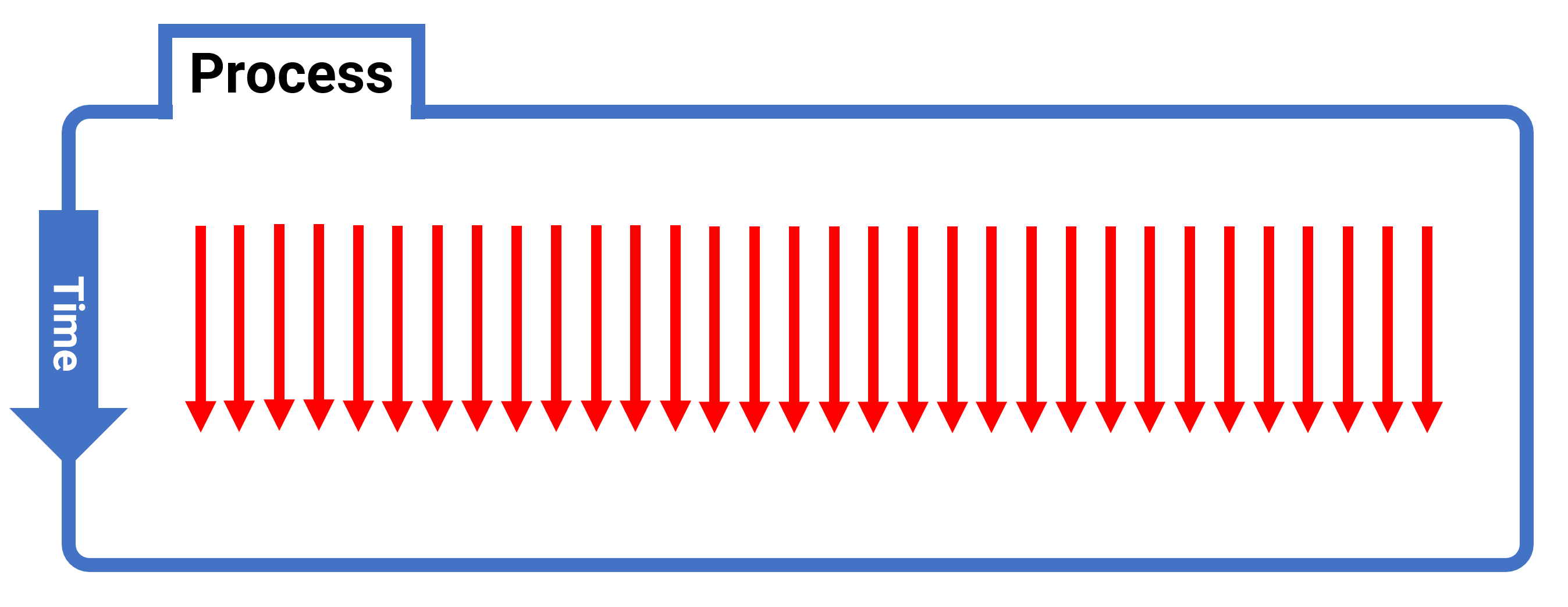

In the simplest of terms, a processor thread is the shortest sequence of instructions required to do a computing task. It might be a very short list, but it could also be enormous in length. What affects this is the process that the thread is part of (as illustrated below).

So now we have a new question to answer (i.e. what is a process?) but thankfully, that's just as easy to tackle. If you're running Windows on your computer, press the Windows key and X, and select Task Manager from the list that appears.

By default, it will open up on the Processes tab and you should see a long list of processes currently running on your machine. Some of these will be individual programs, running by themselves with no interaction from the user.

Others will be applications that you can directly control, and some of those may generate additional background processes – tasks that work away behind the scenes, at the bidding of the main program.

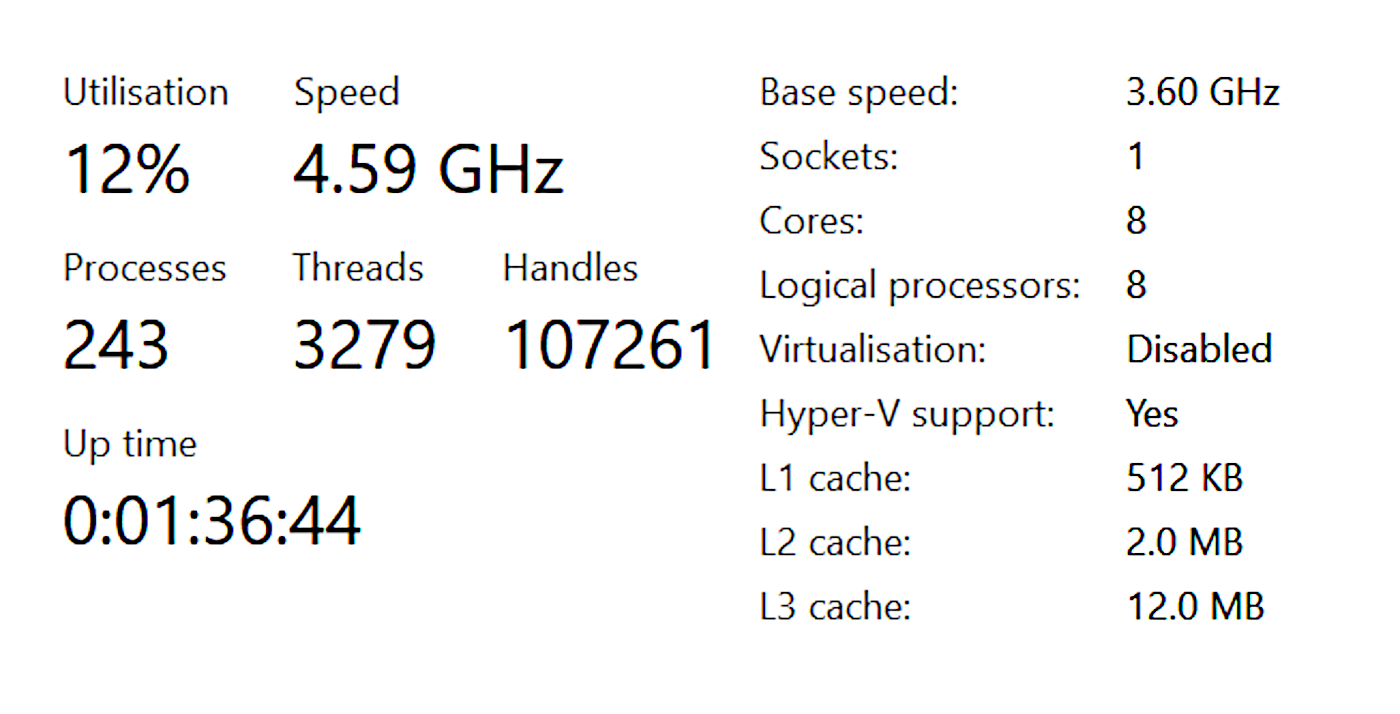

If you switch over to the Performance tab, in the Task Manager, and then select the CPU section, you can see how many processes are currently on the go, along with the total number of active threads.

The Handles number refers to the number of handles currently in use. Every time a process wants to access a system resource, such as a file, a registry key, or another thread, a handle is created. Each one is unique to the process that created it, so one file can actually have lots of handles.

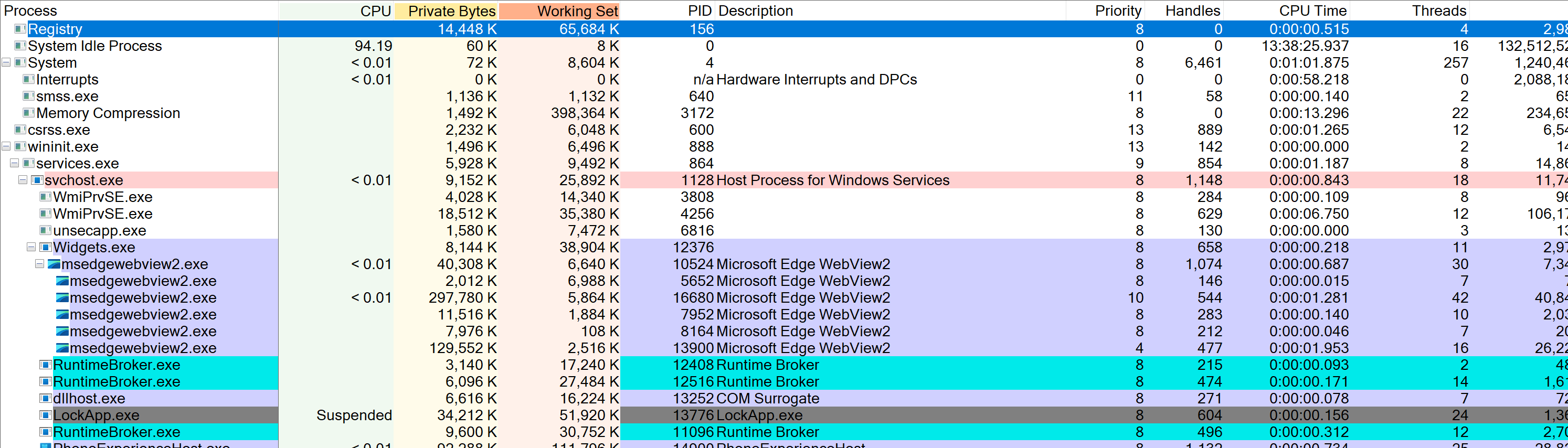

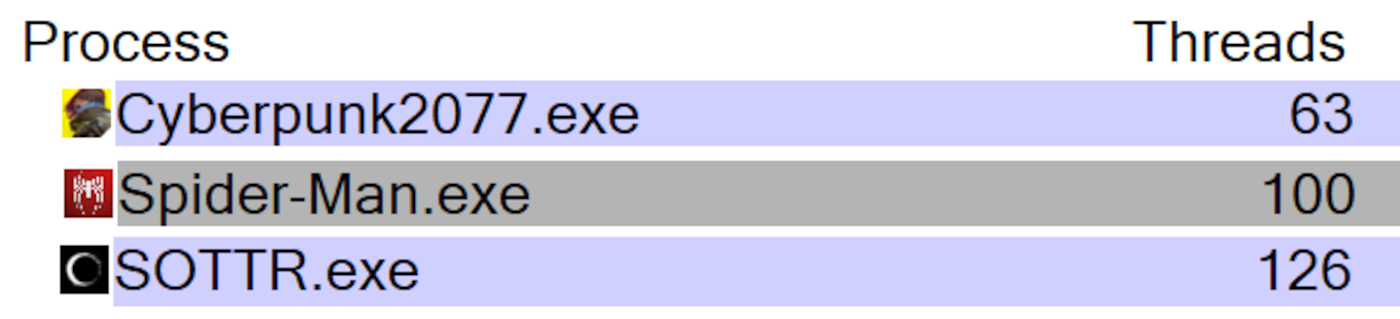

Returning to threads, Task Manager doesn't tell you much about them – for example, the number of threads associated with each process isn't shown. Fortunately, Microsoft has another program called Process Explorer to help us out.

Here we can see a far more detailed overview of the various processes and their threads.

Note how some programs generate relatively few instruction sequences (e.g. the Corsair iCUE plugin host just has one), whereas others run into the hundreds, such as the System process. There's a little more to the information that explains matters in more detail, but we'll return to looking at this later on.

Now, strictly speaking, it's actually the operating system that generates the majority of these threads – the process itself usually just has the one, to start it all off. The OS then goes about the task of creating and managing them all by itself. But that software can't actually process the instructions in the threads themselves; hardware is required for that job.
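To make the relationship between a process and its threads a little more concrete, here is a minimal sketch using Python's standard `threading` module. The function names `worker` and `run_workers` are made up for this illustration. The program only asks for extra threads; creating and scheduling them is still left to the operating system.

```python
import threading


def worker(results, index):
    # Each thread runs its own short sequence of instructions.
    results[index] = sum(range(index * 1000))


def run_workers(count):
    # Ask for `count` extra threads, then wait for all of them to finish.
    results = [None] * count
    threads = [
        threading.Thread(target=worker, args=(results, i)) for i in range(count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


if __name__ == "__main__":
    # A plain Python script normally starts with a single main thread,
    # though some environments add helper threads of their own.
    print("Threads before:", threading.active_count())
    print("Results:", run_workers(4))
```

Running this under Process Explorer should show the Python process briefly gaining a few threads, although the exact counts depend on the interpreter and environment.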

Enter the threaderizer, a.k.a. the CPU

The ultimate destination, for any thread, is the central processing unit (CPU). Well, not always, but we'll come to that in a bit. This chip takes the list of instructions, translates them into a "language" it understands, and then carries out the prescribed tasks.

Deep in the bowels of the processor, dedicated hardware stores threads to analyze them, and then sorts their instruction lists in a way that best suits what the processor is doing at that moment in time.

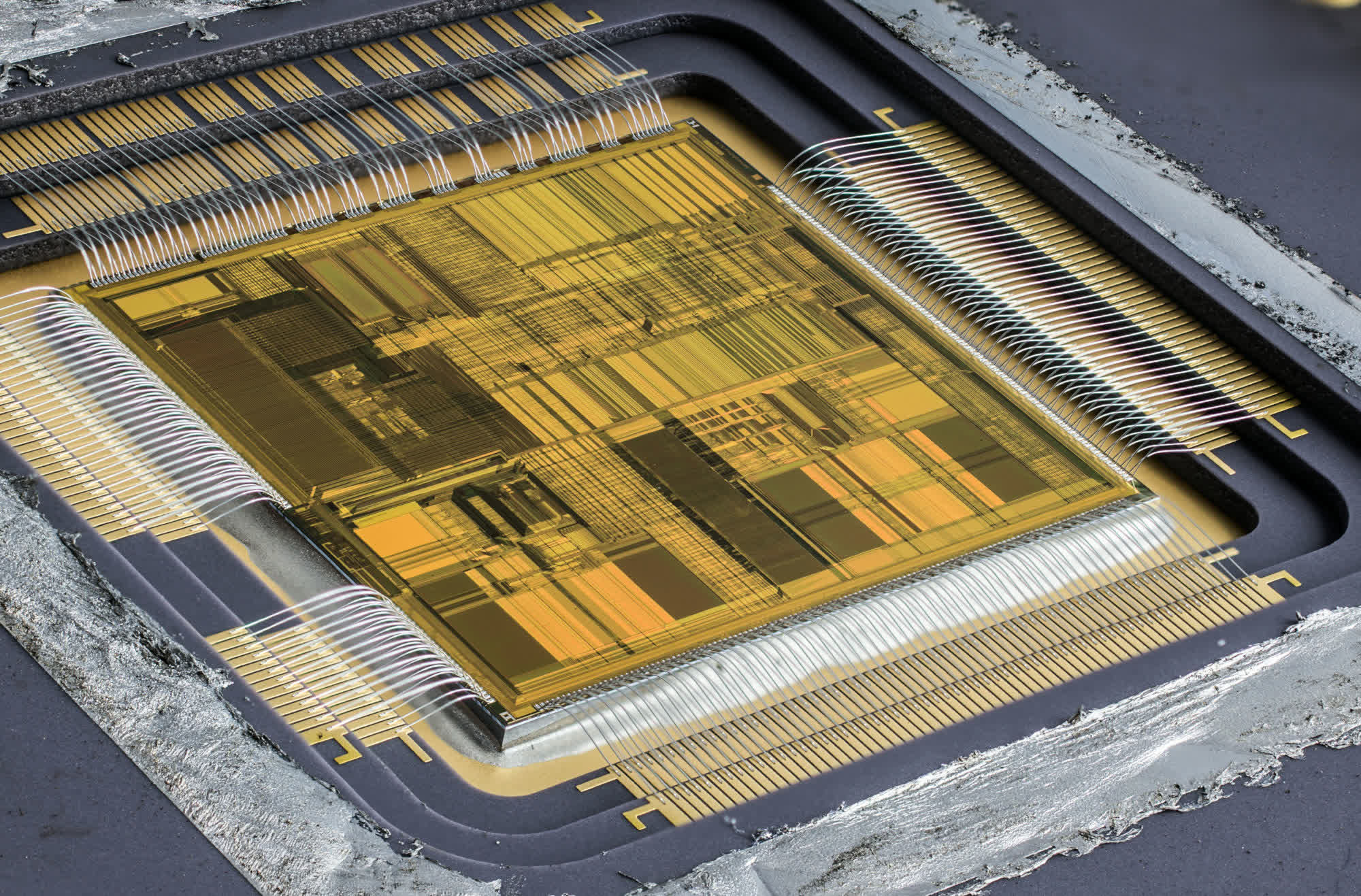

Even with the likes of Intel's original Pentium, as shown above, thread instructions could be slightly reordered to maximize performance. Today's CPUs contain extremely complex thread management tools, not just because of the sheer number of threads that they have to juggle, but also to calculate the future.

Branch prediction has been around for a long time now, and it's an essential part of a CPU's armory. If a thread contains a sequence of 'If...then...else' instructions, the prediction circuitry estimates what's the most likely outcome.

The answer from this guesstimate then makes the CPU rummage about in its instruction store and then execute the ones that the logic decision requires.

If the prediction was correct, then a notable amount of time is saved from having to wait for the whole thread to be processed. If not, then that's not so good – this is why CPU designers work hard on their branch predictors!
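As a rough illustration of the idea, the sketch below simulates a two-bit saturating counter, a classic textbook branch predictor. It is not how any particular CPU mentioned here works (modern predictors are far more elaborate), and the function name and the loop pattern are assumptions chosen purely for the example.

```python
def simulate_two_bit_predictor(outcomes, initial_state=2):
    """Count correct predictions for a sequence of branch outcomes.

    The counter holds a state from 0 to 3. States 0 and 1 predict
    "not taken"; states 2 and 3 predict "taken".
    """
    state = initial_state
    correct = 0
    for taken in outcomes:
        predicted_taken = state >= 2
        if predicted_taken == taken:
            correct += 1
        # Nudge the counter toward the real outcome, saturating at 0 and 3.
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return correct


# Hypothetical loop branch: taken 9 times, then not taken once, repeated 10 times.
loop_branch = ([True] * 9 + [False]) * 10

if __name__ == "__main__":
    hits = simulate_two_bit_predictor(loop_branch)
    print(f"{hits} of {len(loop_branch)} predictions correct")
```

With this pattern, the predictor only gets caught out when the loop exits, which is why predictable branches cost so little and erratic ones cost so much.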

Central processors from the 1990s, whether in desktop or server form, just had one core, so could only work on one thread at a time, although they could do several instructions simultaneously (known as being superscalar).

Servers and top-end workstations have to deal with a huge number of threads, and machines of the Pentium era usually had two CPUs to help with the workload. However, the idea that a processor could handle several threads at the same time had been around for a good while.

For decades, various projects came and went, exploring the possibility of a processor working on multiple threads at once, but these implementations were still only executing the instructions from one thread at any one time.

The idea of a CPU crunching instructions from more than one thread at the same time in a single core, aka simultaneous multithreading (SMT), would have to wait until the capabilities of the hardware caught up.

This was achieved by 2002, when Intel launched a new version of the Pentium 4 processor. It was the first desktop CPU to be fully SMT-capable, with the feature coming under the moniker of Intel Hyper-Threading technology.

One potato, two potatoes...

So how exactly does a single core in a CPU work on two threads at the same time?

Think of a CPU as being a complex factory, with multiple stages to it – fetching and then organizing its raw materials (i.e. data), then sorting out its orders (threads), by breaking them down into lots of smaller tasks.

Just like a high-volume car production line will work on various parts, one or two at a time, a CPU needs to do various tasks in a set sequence in order to complete a given set of instructions.

Better known as a pipeline, the different stages won't always be busy; some have to wait for a while until the previous steps are completed.

This is where SMT comes into play. Hardware dedicated to keeping track of the status of every part in a pipeline is used to determine if a different thread could utilize idle stages, without stalling the thread currently being worked on.
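The toy model below gives a feel for why filling idle stages helps. It is a heavy simplification and an assumption on our part: each thread is a string where `X` means "needs the shared execution unit for one cycle" and `-` means "stalled for one cycle, waiting on something else". Real pipelines have many stages and units, and the function names are invented for this sketch.

```python
def run_back_to_back(threads):
    # Without SMT, each thread occupies the core for its full length,
    # stalls included, before the next one starts.
    return sum(len(thread) for thread in threads)


def run_smt(threads):
    # With SMT, a thread may use the execution unit in any cycle where
    # the other thread is stalled. Earlier threads get priority.
    positions = [0] * len(threads)
    cycles = 0
    while any(pos < len(t) for pos, t in zip(positions, threads)):
        unit_busy = False
        for i, thread in enumerate(threads):
            if positions[i] >= len(thread):
                continue  # this thread has finished
            step = thread[positions[i]]
            if step == "-":
                positions[i] += 1  # stalls elapse in the background
            elif not unit_busy:
                unit_busy = True  # claim the unit for this cycle
                positions[i] += 1
        cycles += 1
    return cycles


if __name__ == "__main__":
    stall_heavy = ["X--X--X", "XX-X-XX"]
    print("Back to back:", run_back_to_back(stall_heavy), "cycles")
    print("With SMT:    ", run_smt(stall_heavy), "cycles")
```

In this model, two threads with no stalls at all (`"XXX"` and `"XXX"`) gain nothing from sharing the core, since there are no idle cycles to fill.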

The fact that desktop CPUs became multi-threaded long before they became multi-core shows that SMT is far easier to implement. In the case of Intel's Northwood architecture, less than 5% of the total die was involved in managing the two threads.

CPU cores that are SMT-capable are organized in such a way that, to the operating system, they appear as separate logical cores. Physically, they're sharing much of the same resources, but they act independently.
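You can see this from software, too. As a small sketch, Python's standard `os.cpu_count()` reports the number of logical CPUs the operating system exposes, so an 8-core chip with 2-way SMT would typically show up as 16. The helper name `describe_logical_cpus` is our own, and the standard library doesn't report the physical core count.

```python
import os


def describe_logical_cpus():
    # os.cpu_count() returns the number of logical CPUs, or None if
    # the count cannot be determined on this system.
    count = os.cpu_count()
    if count is None:
        return "logical CPU count could not be determined"
    return f"{count} logical CPUs visible to the operating system"


if __name__ == "__main__":
    print(describe_logical_cpus())
```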

Desktop CPUs only ever handle two threads per CPU core at most, because their pipelines are relatively short and simple, and analysis by designers would have shown that two is the optimal limit.

At the opposite end of the spectrum, huge server processors, such as Intel's old Xeon Phi chips or IBM's latest POWER processors, handle 4 and 8 threads per core, respectively. That's because their cores contain a lot of pipelines, with shared resources.

These different approaches to CPU design come about because of the very different workloads the chips have to deal with.

Central processors aren't the only chips in a computer that have to deal with lots of threads. There's one chip, with a very specific role, that deals with thousands of threads, all at the same time.

All your threads are belong to us

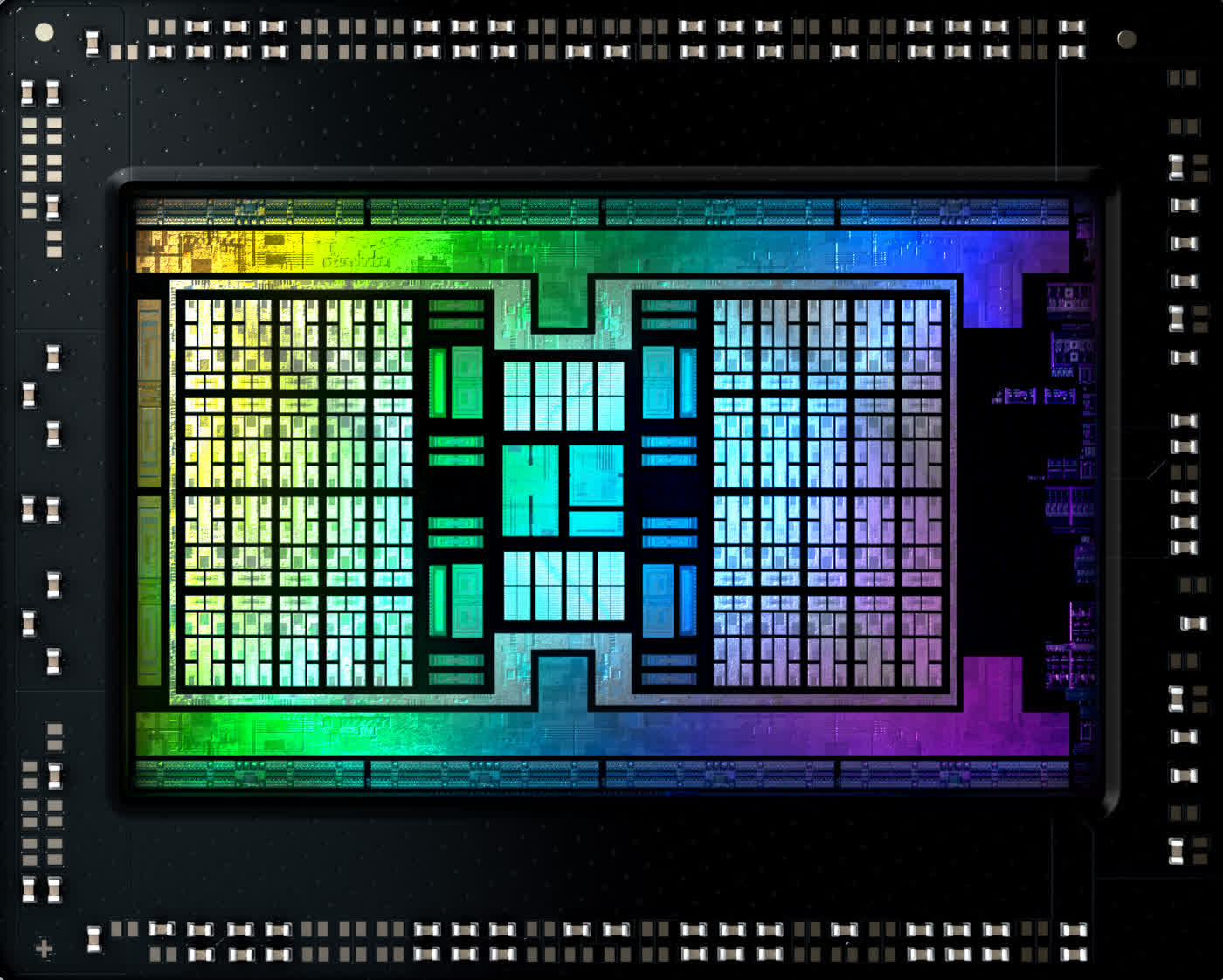

When it comes to boasting excessive numbers, GPUs have CPUs absolutely beaten. They're physically bigger, have way more transistors, use more power, and process vastly more threads than any server CPU could aim for.

Let's take AMD's Radeon RX 6800 graphics card, sporting the Navi 21 chip, as an example. That processor comprises 60 Compute Units (CU), with each one being able to crunch through 64 separate threads at any one time.

That's 3,840 threads on the go!

So how does a GPU handle so many more than a central processor?

Each CU has two sets of SIMD (single instruction, multiple data) units and each one of those can work on 32 separate data elements at the same time. They can all be from different threads but the catch is, the unit has to be doing the exact same instruction in each thread.

This is the key difference to a CPU – a desktop processor core will be handling no more than two threads, but their instructions can be totally different, from entirely unrelated processes.
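The numbers and the lockstep behavior described above can be sketched in a few lines of Python. The constants come straight from the RX 6800 figures in this article, while `simd_execute` is a made-up stand-in for what one SIMD unit does: apply a single operation to every lane at once (here, simply one after another in a list).

```python
COMPUTE_UNITS = 60       # CUs in the Radeon RX 6800
SIMD_UNITS_PER_CU = 2    # SIMD units in each CU
LANES_PER_SIMD = 32      # data elements each SIMD unit handles at once


def threads_in_flight():
    # Total number of threads the whole GPU works on simultaneously.
    return COMPUTE_UNITS * SIMD_UNITS_PER_CU * LANES_PER_SIMD


def simd_execute(operation, lane_values):
    # One instruction, many data elements: every lane gets the same operation.
    return [operation(value) for value in lane_values]


if __name__ == "__main__":
    print("Threads in flight:", threads_in_flight())
    lanes = list(range(LANES_PER_SIMD))
    print("Doubled lanes:", simd_execute(lambda v: v * 2, lanes))
```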

GPUs are designed to carry out the same operations over and over, usually from similar processes (technically they're known as kernels, but we'll leave that aside), but all massively in parallel.

Just as with the IBM POWER10, a CPU that's only for enterprise servers, graphics processing chips are built to do a very specialized task.

Today's biggest games, with their complex 3D images, require an incredible amount of math to be processed, all in just a few milliseconds. And that requires threads – lots of them!

Threads! Lights! Action!

If you take a look at any of our CPU reviews, you'll nearly always see two results from Cinebench, a benchmark that carries out a challenging CPU-based rendering task.

One result is for the test using just one thread, whereas the other will use as many threads as the CPU can handle in total. The results from the latter are always far faster than the single-threaded test. Why is this the case?

Cinebench is rendering 3D graphics, just like in a game, albeit a single highly-detailed frame. And if you remember how GPUs do lots of threads in parallel to create 3D graphics, it becomes obvious why CPUs with lots of cores, especially with SMT, do the workload so quickly.
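Rendering splits up so well because each part of the frame can be worked on independently. The sketch below is not how Cinebench works internally; it's a hypothetical miniature renderer that cuts a frame into bands of rows and hands them to a pool of worker threads. Note that in standard CPython, the global interpreter lock means these threads won't actually speed up pure-Python math, so treat this as a demonstration of how the work is divided rather than a benchmark.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT, BAND_ROWS = 64, 64, 8  # made-up frame and band sizes


def shade_pixel(x, y):
    # Stand-in for the real rendering math of one pixel.
    return (x * x + y * y) % 256


def render_band(start_row):
    # Render one horizontal band; it needs nothing from any other band.
    end_row = min(start_row + BAND_ROWS, HEIGHT)
    return [[shade_pixel(x, y) for x in range(WIDTH)]
            for y in range(start_row, end_row)]


def render_frame(worker_count):
    band_starts = range(0, HEIGHT, BAND_ROWS)
    with ThreadPoolExecutor(max_workers=worker_count) as pool:
        # map() returns results in submission order, so bands stay sorted.
        bands = list(pool.map(render_band, band_starts))
    return [row for band in bands for row in band]


if __name__ == "__main__":
    single = render_frame(1)
    multi = render_frame(8)
    print("Same image from 1 and 8 workers:", single == multi)
```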

Unfortunately, adding more cores makes the processor larger and therefore more expensive, so it might seem like SMT is always going to be a good thing to have. However, it depends very much on the situation.

For example, when we tested AMD's Ryzen 9 3950X (a 16-core, 32-thread CPU) across 36 different games, with and without SMT enabled, the results varied widely. Some titles gained as much as 16% more performance with SMT enabled, whereas others lost as much as 12%.

The mean difference, though, was only 1% so it's certainly not the case that SMT should always be disabled when gaming, but it does raise a few more questions.

The first of which is, why would a game run 12% slower when the CPU cores are handling two threads simultaneously? The key phrase here is "resource contention."

If a program is making a lot of demands on the CPU's memory system (cache, bandwidth, and RAM), having two threads on a core requesting access to the memory can induce a thread to stall, while it has to wait.

The more threads a CPU can handle, the more important the cache system in the processor becomes. This becomes evident when examining CPUs that have a fixed L3 cache size, no matter how many cores are activated.

The more cores and threads a chip has, the greater the number of cache requests the system will have to deal with. And this brings us nicely to the next question: is this why games don't use lots of threads?

Why don't games use lots of threads?

Let's go back to Process Explorer and check out a few titles, namely Cyberpunk 2077, Spider-Man Remastered, and Shadow of the Tomb Raider. All three were developed for PC and console, so you'd expect them to be using somewhere between 4 and 8 threads.

At first glance, games certainly do use lots of threads!

It also seems like this can't possibly be correct, as the CPU used in the computer running the games only supports 8 threads maximum.

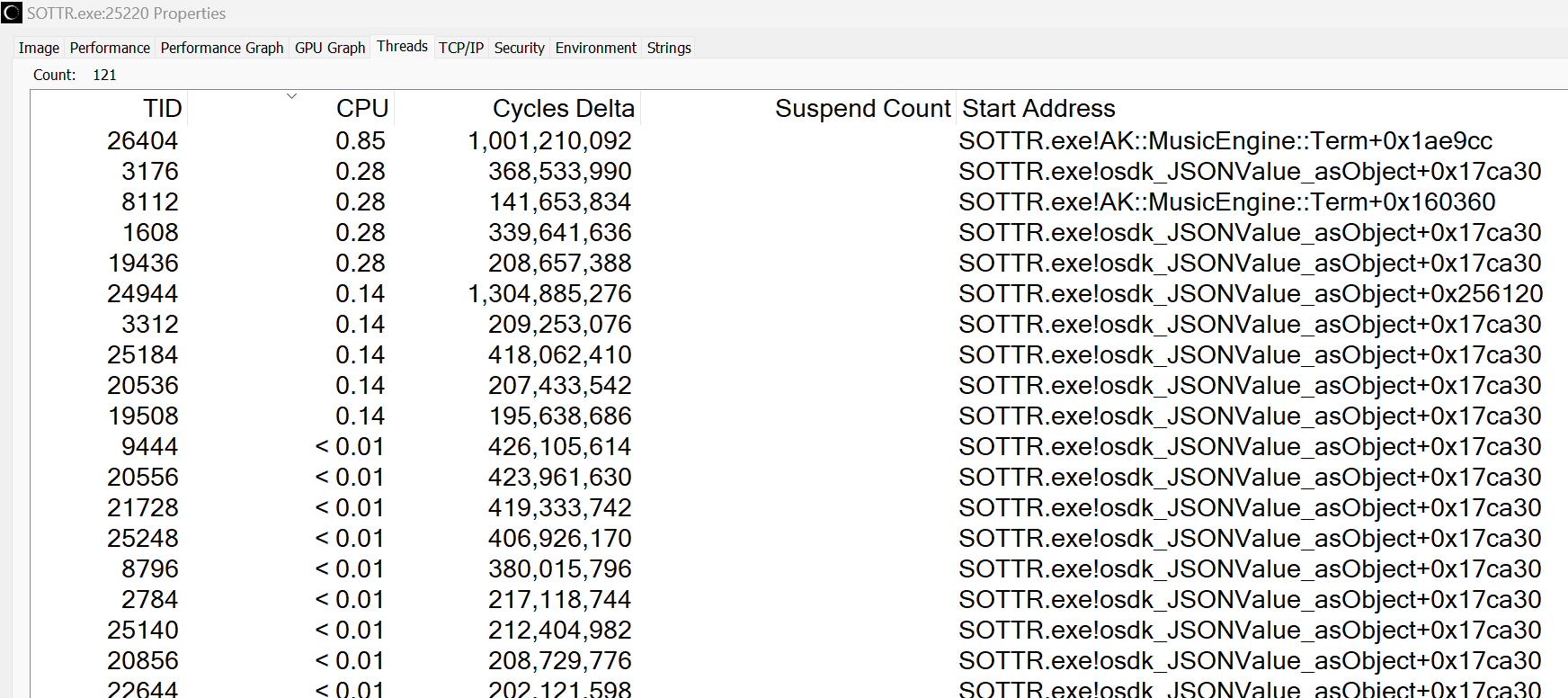

But if we delve deeper into the process threads, we get a much clearer picture. Let's look at Shadow of the Tomb Raider.

Below we can see that the vast majority of these threads take up almost none of the CPU's runtime (second column, displayed in seconds). Although the process and OS have generated over a hundred threads, most run too briefly to even register.

The Cycles Delta count is the number of CPU cycles accrued by each thread in the process since Process Explorer last refreshed its display, and in the case of this game, it's dominated by just two threads. That said, others are still making use of all the available CPU cores.

It might seem like the number of cycles is a ridiculous number, but if the processor has a clock rate of, say, 4.5 GHz, then one cycle takes just 0.22 nanoseconds. So 1.3 billion cycles only equate to a little under 300 milliseconds.
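The arithmetic is easy to check for yourself. This short sketch uses the same 4.5 GHz clock rate and 1.3 billion cycles quoted above; the function name is just for illustration.

```python
def cycles_to_milliseconds(cycles, clock_hz):
    # Time = cycles / frequency, converted from seconds to milliseconds.
    return cycles / clock_hz * 1000


CLOCK_HZ = 4.5e9                    # 4.5 GHz
CYCLE_TIME_NS = 1 / CLOCK_HZ * 1e9  # duration of one cycle in nanoseconds

if __name__ == "__main__":
    print(f"One cycle: {CYCLE_TIME_NS:.2f} ns")
    print(f"1.3 billion cycles: {cycles_to_milliseconds(1.3e9, CLOCK_HZ):.0f} ms")
```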

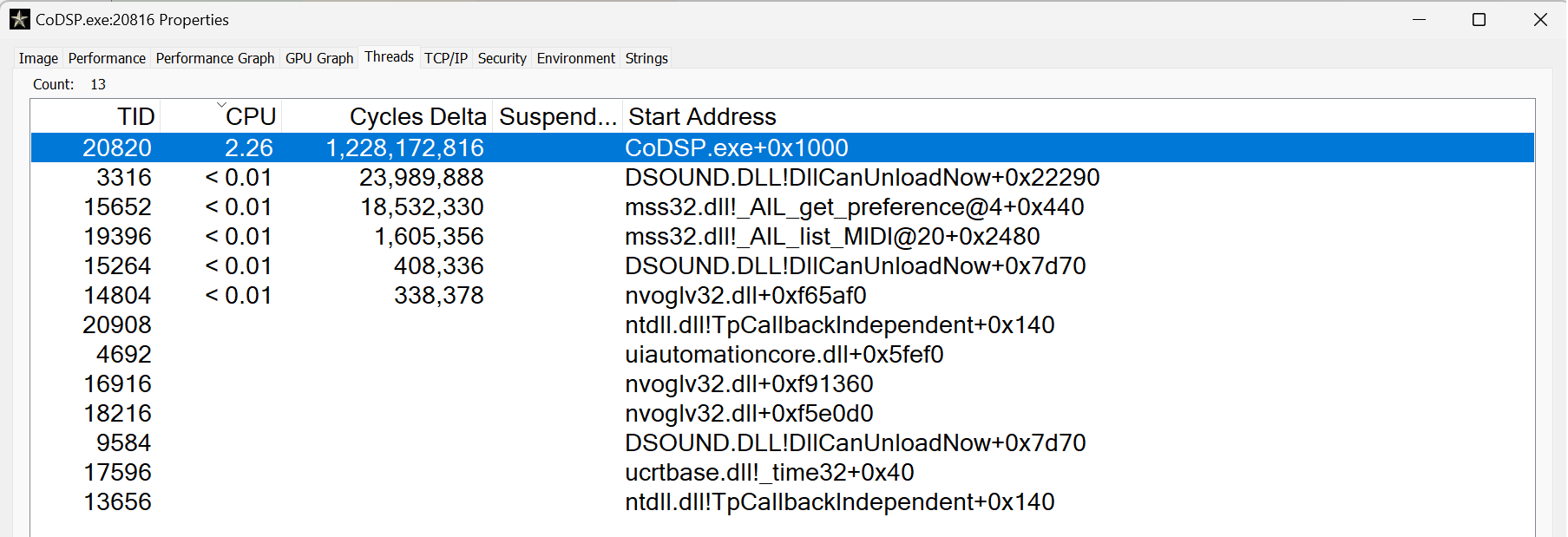

Not all games do it like this, of course, and the older the title, the fewer the number of threads. If we look at the original Call of Duty, from 2003, we see a very different picture.

Games from this era were all like this – just one primary thread for everything. This is because CPUs back then just had one core and relatively few of them supported SMT.

Where the Call of Duty process and operating system generate one thread to do almost everything, Shadow of the Tomb Raider is properly multi-threaded, spreading its work across as many threads as the CPU supports.

Initially, hardware outpaced software when it came to fully utilizing all of the cores (with or without SMT) on offer and we had to wait quite some years before games were thoroughly multi-threaded.

Now that the latest consoles have an 8-core CPU that is 2-way SMT capable, future titles will certainly get busier with threads.

The future will be very thready

Right now, funds and availability aside, you can get a desktop PC that has a CPU capable of handling 32 threads (AMD's Ryzen 9 7950X) and a GPU that can chomp through 4,096 (Nvidia's GeForce RTX 4090).

This hardware is, of course, right at the cutting edge of technology, cost, and power and certainly isn't representative of what most computers have to offer. But around 10 years ago, it was a very different picture.

The best CPUs were supporting 8 threads via SMT but the average PC typically had to get by with about 4 threads. Now, you can buy sub-$100 budget CPUs that handle the same number of threads as the best chips from a decade ago.

We can thank AMD for this, as they were the first to offer lots of cores/threads at an affordable price, and today both CPU vendors routinely battle over who can offer the most cores/threads per dollar.

And we're finally at a stage where recent and new games are taking full advantage of all the thread-crunching power that's available to them, when they're not being limited by the GPU.

So what's next? If we could fast forward a decade into the future, will we see the average PC gamer using a 128-thread CPU? Possibly, but unlikely, simply because there are diminishing returns as the core count increases. However, professional content creators are already using such processors (e.g. Threadripper Pro 5995WX) so it's anybody's guess as to what they'll be using circa 2032.
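One common way to put numbers on diminishing returns is Amdahl's law, which isn't something we've measured here but serves as a handy illustration. It estimates the best-case speedup when only part of a workload can be spread across threads. The 90% parallel fraction below is purely an assumption for the example; real games and applications vary enormously.

```python
def amdahl_speedup(parallel_fraction, thread_count):
    # Amdahl's law: the serial part of the work never gets any faster,
    # no matter how many threads share the parallel part.
    serial_fraction = 1 - parallel_fraction
    return 1 / (serial_fraction + parallel_fraction / thread_count)


if __name__ == "__main__":
    assumed_parallel_fraction = 0.9  # hypothetical workload
    for threads in (8, 32, 128):
        speedup = amdahl_speedup(assumed_parallel_fraction, threads)
        print(f"{threads:>3} threads: {speedup:.2f}x faster than one thread")
```

Under that assumption, going from 32 to 128 threads adds far less than going from 8 to 32 did, and the speedup can never exceed 10x however many threads are thrown at it.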

But whatever the future holds, one thing will remain true: threads are awesome little things!

Masthead credit: Ryan