In context: AMD launched the Ryzen 9 7950X3D at the end of last month and welcomed an enthusiastic response to its second-gen 3D V-Cache despite some mixed opinions about its usefulness in a 16-core CPU. Now they've shared some of the technical details that explain its performance.

AMD started mixing nodes in 2019 when it used the 7 nm node for the core complex die (CCD) and the 12 nm node for the IO die of the Zen 2 microarchitecture. AMD recently confirmed to Tom's Hardware that Zen 4 steps it up to three nodes: the 5 nm node for the CCD, the 6 nm node for the IO die, and the 7 nm node for the V-Cache.

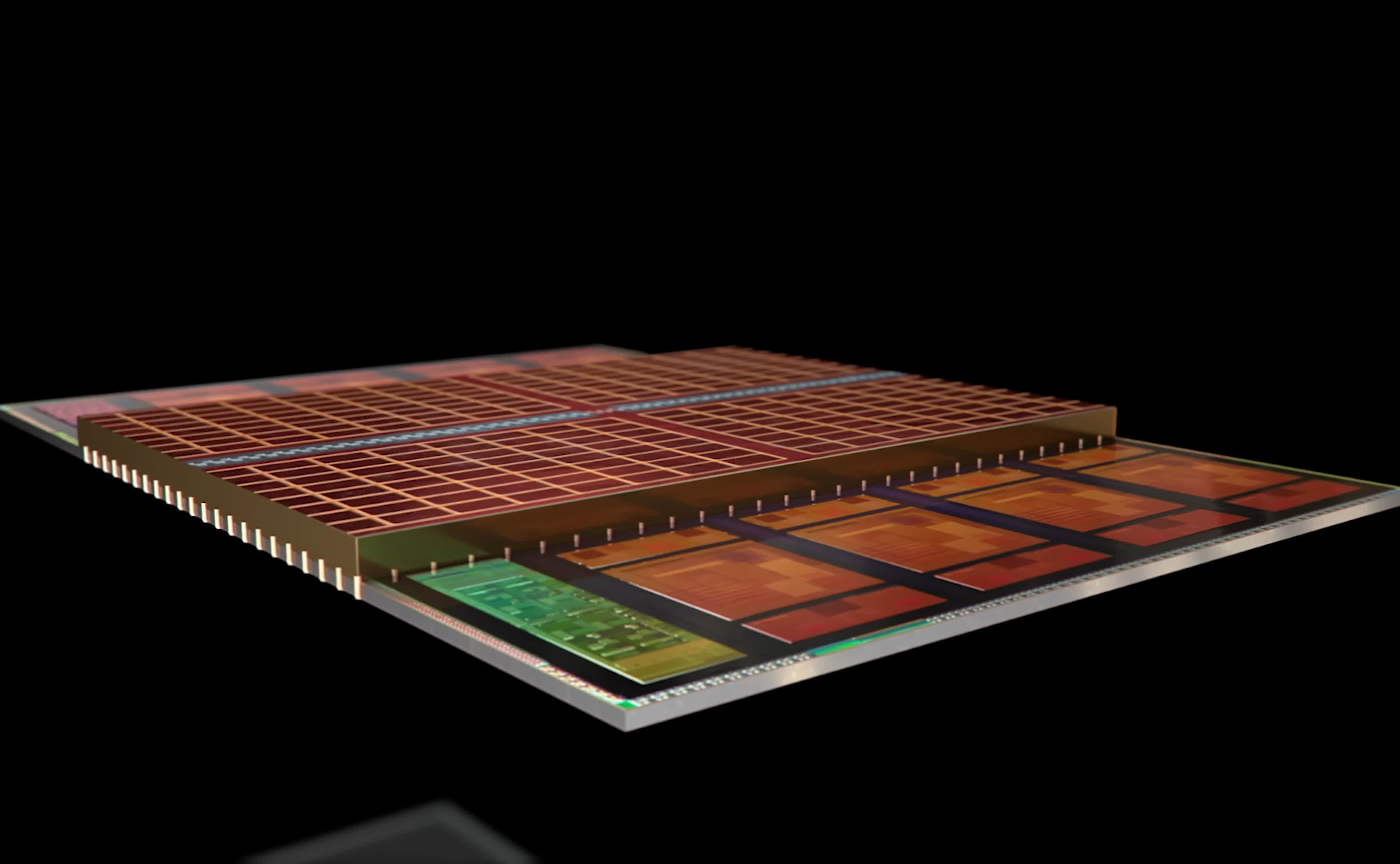

AMD explained some of the challenges it faced stacking one node onto another during its recent ISSCC presentation. Both the 7950X3D and the original 5800X3D have their V-Caches positioned over their regular L3 caches to allow them to be connected. The arrangement also keeps the V-Cache away from the heat produced by the cores. However, while the V-Cache fits neatly over the L3 cache in the 5800X3D, it overlaps with the L2 caches on the edges of the cores in the 7950X3D.

Also read: AMD Ryzen 9 7950X3D Memory Scaling Benchmark

The V-Cache sits over the middle of the CCD and the eight cores flank the sides.

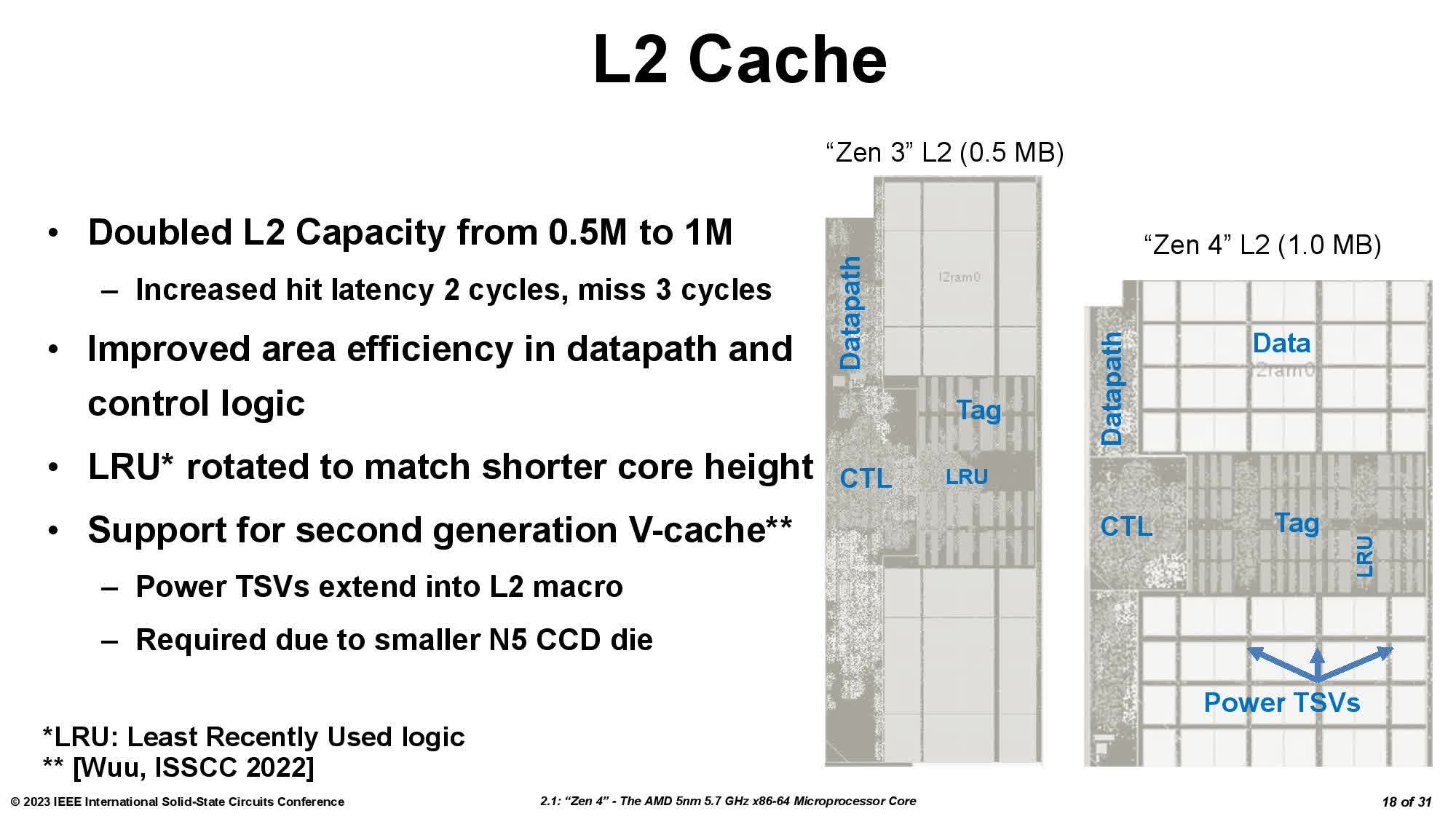

Part of the problem was that AMD doubled the amount of L2 cache in each core from 0.5 MB in Zen 3 to 1 MB in Zen 4. But it worked around the additional space constraints by punching holes through the L2 caches for the through-silicon vias (TSVs) that deliver power to the V-Cache. The signal TSVs still come from the controller in the center of the CCD but AMD tweaked them too to reduce their footprint by 50%.

The Zen 4 L2 cache is larger because of its larger capacity, but also because it has TSVs passing through it.

AMD shrunk the V-Cache down from 41 mm2 to 36 mm2 but maintained the same 4.7 B transistors. TSMC fabricates the cache on a new version of the 7 nm node that it developed especially for SRAM. As a result, the V-Cache has 32% more transistors per square millimeter than the CCD despite the CCD being manufactured on the much smaller 5 nm node.

All of the refinements and workarounds AMD implemented add up to a 25% increase in bandwidth to 2.5 TB/s and an unspecified increase in efficiency. Not bad for nine months between the first and second generations of a supplemental chiplet. Hopefully it shows its value when the Ryzen 7 7800X3D arrives in a month's time.

https://www.techspot.com/news/97818-amd-explains-how-new-3d-v-cache-improves.html