In context: Smart Access Memory, or SAM, is a software/hardware trick that takes advantage of a PCI Express feature called Resizable Base Address Register (Resizable BAR). So far, only AMD is using it, with their new Radeon RX 6000 GPUs, to eke out a little extra performance. It lets the CPU feed information directly into the entire video memory buffer, instead of just a small portion of it. The result is about a 5-10% performance improvement depending on the title, which isn't insignificant.
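To make the "small portion" concrete: without Resizable BAR, the CPU-visible window into video memory is conventionally 256 MB. On Linux, each PCI device exposes its regions in a sysfs `resource` file, one line per region with start address, end address, and flags in hex. The sketch below, a rough illustration rather than an official tool, computes region sizes from that file. The helper names and the sample text are hypothetical, and the sample addresses are made up for illustration.

```python
from pathlib import Path
from typing import List


def bar_sizes(resource_text: str) -> List[int]:
    """Return the size in bytes of each populated region in a sysfs PCI `resource` file."""
    sizes = []
    for line in resource_text.splitlines():
        fields = line.split()
        if len(fields) != 3:
            continue  # skip anything that is not "start end flags"
        start, end, _flags = (int(field, 16) for field in fields)
        if start == 0 and end == 0:
            continue  # unused region slot
        sizes.append(end - start + 1)
    return sizes


def read_bar_sizes(pci_address: str) -> List[int]:
    """Read region sizes for a device such as '0000:0b:00.0' (hypothetical address)."""
    path = Path("/sys/bus/pci/devices") / pci_address / "resource"
    return bar_sizes(path.read_text())


# Hypothetical sample: a 256 MiB window plus a 2 MiB register region.
SAMPLE = """\
0x00000000e0000000 0x00000000efffffff 0x000000000014220c
0x0000000000000000 0x0000000000000000 0x0000000000000000
0x00000000f0000000 0x00000000f01fffff 0x0000000000140204
"""

if __name__ == "__main__":
    for size in bar_sizes(SAMPLE):
        print(f"{size / 2**20:.0f} MiB")
```

With a SAM-style feature active, the largest region would be expected to grow to roughly the size of the card's full video memory instead of staying at 256 MiB.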

When AMD announced Smart Access Memory on its latest GPUs, it sounded like SAM required close cooperation between the CPU and GPU. Consequently, it was launched as a new feature that only works when AMD RX 6000 GPUs are paired with AMD Ryzen 5000 CPUs.

Nvidia, however, believes that a form of SAM could be implemented universally, as long as all manufacturers can agree on some standards, since it relies on a PCI Express feature. PC World asked AMD's Scott Herkelman if AMD thinks that's possible, and to what extent AMD would support standardization. This is an edited transcript of the interview:

Q: Will competitors need to push out BIOS updates for their whole ecosystem of products?

A: I think you'll have to ask them. But I believe that they'll need to work on their own drivers. Intel will have to work with their own motherboard manufacturers, and work on their own chipsets. I think there's some work to do for our competitors.

And, just to be clear, our Radeon group will work with Intel to get them ready. And I know that our Ryzen group will work with Nvidia. There's already conversations underway. If they're interested in enabling this feature on AMD platforms, we're not going to stop them.

As a matter of fact, I hope they do. At the end of the day, the gamer wins, and that's all that matters. We're just the company that could do it the fastest because we're the only company in the world (finally) with enthusiast GPUs and enthusiast CPUs.

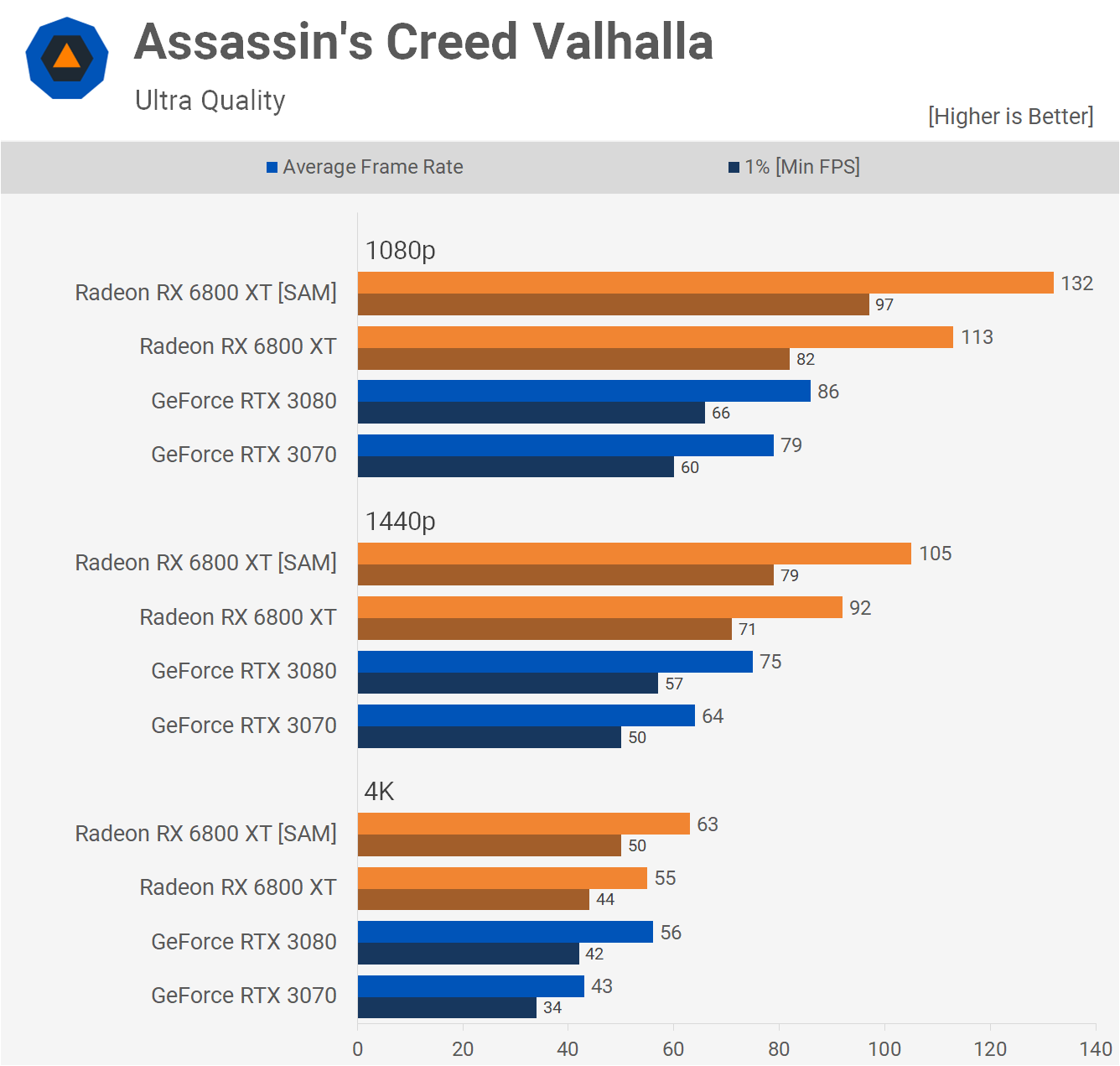

The standardization of a SAM-like feature could have an impact on the way games are developed. Although the feature doesn't require developer support, some games utilize it much more effectively than others: at 1440p, Assassin's Creed Valhalla sees a 14% improvement, but Shadow of the Tomb Raider only sees a 6% improvement. If developers are willing, future titles could be designed to utilize SAM to the greatest extent possible.

From our AMD Radeon RX 6800 XT Review. Also, check out our AMD Radeon RX 6800 Review for more SAM testing.

For now, however, SAM is a nice little incentive to keep your system all-AMD. In our 1440p 18-game average, the RX 6800 XT was just 5% slower than the RTX 3090, a difference that SAM might be able to eliminate.
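As a rough back-of-the-envelope check on that claim, the sketch below combines the 5% deficit with the 5-10% uplift range quoted earlier. It assumes the uplift applies uniformly to the average, which the per-game results suggest is not strictly true, and the function names are only illustrative.

```python
def relative_after_uplift(deficit: float, uplift: float) -> float:
    """Performance relative to the faster card after applying a fractional uplift."""
    return (1 - deficit) * (1 + uplift)


def uplift_to_match(deficit: float) -> float:
    """Fractional uplift needed to close a given fractional deficit."""
    return 1 / (1 - deficit) - 1


if __name__ == "__main__":
    for uplift in (0.05, 0.10):
        ratio = relative_after_uplift(0.05, uplift)
        print(f"{uplift:.0%} uplift: {ratio:.2%} of the faster card")
    print(f"uplift needed for parity: {uplift_to_match(0.05):.2%}")
```

Under these assumptions, a 5% uplift lands just short of parity and anything above roughly 5.3% moves the RX 6800 XT ahead.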

Also, as we noted in our RX 6800 XT review, PCIe 4.0 doesn’t appear to be required for SAM. We ran a few limited tests in Assassin’s Creed Valhalla while forcing PCIe 3.0 on our X570 test system and didn’t see a decline in performance when compared to PCIe 4.0, so that’s interesting.

https://www.techspot.com/news/87697-amd-happy-help-intel-nvidia-enable-smart-access.html