We first saw the upcoming Radeon HD 6990's specifications last November when an internal slide leaked online, but many details remained unknown -- until now. More slides have appeared on DonanimHaber, revealing nearly everything eager shoppers might want to know, except pricing.

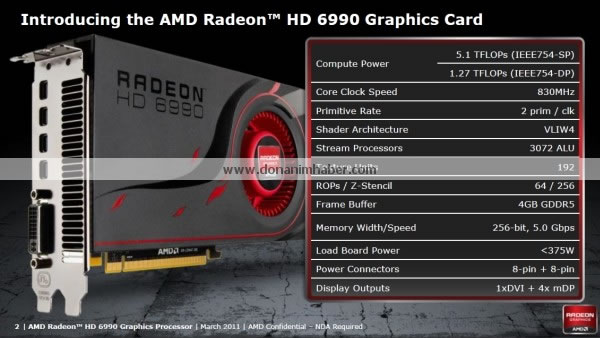

Rumored to be released next Tuesday, the Radeon HD 6990 features two Cayman GPUs clocked at 830MHz, 3072 stream processors, 192 texture units, two 256-bit memory channels, and 4GB of GDDR5 running at 5000MHz. It consumes a maximum of 375W (37W at idle).

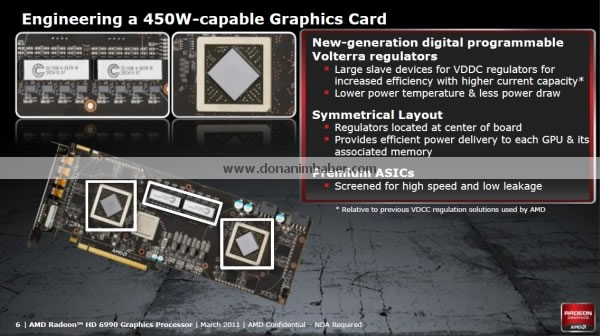

By flipping a switch on top of the card, you can enable a built-in overclocking mode that boosts the clock frequency to 880MHz and increases the Radeon HD 6990's compute power from 5.10 TFLOPS to 5.40 TFLOPS. The max TDP also rises to 450W, but idle draw remains the same.
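Those compute figures line up with the usual back-of-the-envelope formula for peak single-precision throughput: stream processors times operations per clock times clock speed. The sketch below assumes each stream processor retires two floating-point operations per cycle (one fused multiply-add), which is the conventional way such peak numbers are quoted but is not stated on the leaked slides. The function name `peak_tflops` is ours, purely for illustration.

```python
def peak_tflops(stream_processors: int, clock_mhz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak throughput in TFLOPS.

    flops_per_cycle=2 is an assumption (one multiply-add per stream
    processor per clock); the leaked slides do not spell it out.
    """
    return stream_processors * flops_per_cycle * clock_mhz * 1e6 / 1e12


# Figures quoted in the article: 3072 stream processors, 830MHz default, 880MHz OC
default_mode = peak_tflops(3072, 830)
oc_mode = peak_tflops(3072, 880)
uplift_pct = (oc_mode / default_mode - 1) * 100

print(f"Default: {default_mode:.2f} TFLOPS")  # 5.10
print(f"OC mode: {oc_mode:.2f} TFLOPS")       # 5.41
print(f"Uplift:  {uplift_pct:.1f}%")          # 6.0
```

Under that assumption the OC result works out to about 5.407 TFLOPS, so the 5.40 figure on the slide looks truncated rather than rounded. Either way, the switch buys roughly a 6% increase in theoretical throughput for a 20% higher power ceiling.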

To keep temperatures in check, AMD is outfitting its latest flagship card with an updated dual-slot cooler featuring two vapor chambers and one center fan. The Radeon HD 6990's cooler offers 20% more airflow and 8% better thermal performance in the same form factor as the HD 5970.

The Radeon HD 6990 receives power via two 8-pin PCIe connectors, and the rear I/O plate houses four Mini DisplayPort outputs alongside one DL-DVI port. Additionally, AMD will include three adapters with every card: Mini DisplayPort to SL-DVI Passive, SL-DVI Active, and HDMI Passive.

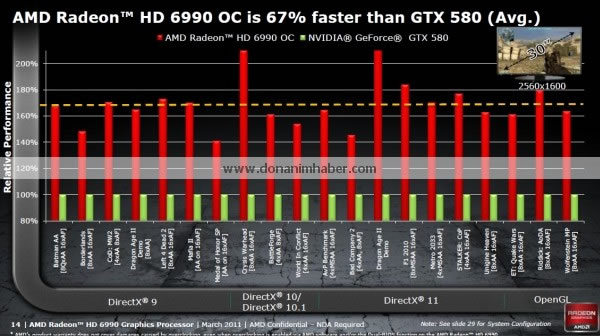

AMD's performance slides show that the Radeon HD 6990 running in OC mode is on average 67% faster than the single-GPU GeForce GTX 580 when playing games at 2560x1600. The lead is especially dramatic in Crysis Warhead and the Dragon Age II demo, but again, these are AMD's numbers.

According to AMD's charts, you can expect a 60% to 100% performance increase in extremely high-resolution applications when two of the dual-GPU cards are configured in Quad CrossFire. As usual, we'll provide a full review when the Radeon HD 6990 arrives.

https://www.techspot.com/news/42630-amd-radeon-hd-6990-specs-leaked-rumored-for-march-8.html