In the same wiki article:

> RAM on the video card:
>
> Video cards always have a certain amount of RAM. This RAM is where the bitmap of image data is "buffered" for display. The term frame buffer is thus often used interchangeably when referring to this RAM.
>
> The CPU sends image updates to the video card. The video processor on the card forms a picture of the screen image and stores it in the frame buffer as a large bitmap in RAM. The bitmap in RAM is used by the card to continually refresh the screen image.
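For a sense of scale, here is a minimal sketch that computes the size of one uncompressed frame buffer bitmap. It assumes 4 bytes per pixel (e.g. 8-bit RGBA) and no row padding. Real drivers may pad rows and usually keep more than one buffer (double or triple buffering), so actual allocations are larger.

```python
def frame_buffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one uncompressed frame buffer in bytes.

    Assumes 4 bytes per pixel by default and no row padding.
    """
    return width * height * bytes_per_pixel


def to_mib(n_bytes: int) -> float:
    """Convert bytes to mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return n_bytes / (1024 * 1024)


if __name__ == "__main__":
    for w, h in [(1920, 1080), (2560, 1440), (3840, 2160)]:
        size = frame_buffer_bytes(w, h)
        print(f"{w}x{h}: {size} bytes = {to_mib(size):.1f} MiB")
```

At 1920x1080 this works out to 8,294,400 bytes, roughly 7.9 MiB per frame.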

As the word "compression" implies, Nvidia stores compressed images in VRAM, which reduces the amount of data transferred between the VRAM and the GPU and boosts the overall effective bandwidth. The Nvidia white paper even says: "the combination of raw bandwidth increases, and traffic reduction translates to a 50% increase in effective bandwidth on Turing compared to Pascal".
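To illustrate how lossless compression can cut memory traffic and raise effective bandwidth, here is a toy sketch. Nvidia's actual compression format is not described in the quote above, so the scheme below is hypothetical: each tile of 8-bit values is stored as one 8-bit base plus a per-pixel delta using the smallest signed bit width that fits, falling back to raw storage when that is not smaller. The function names and the example bandwidth figure are made up for illustration, and the toy ratio is not meant to reproduce the 50% figure from the white paper.

```python
from typing import List


def tile_bits(tile: List[int]) -> int:
    """Bits needed to store a tile of 8-bit values with a toy base+delta scheme.

    Hypothetical scheme (not Nvidia's real format): an 8-bit base (the first
    value) followed by every pixel's delta from the base at a fixed signed
    width. Per-tile metadata (e.g. a raw/compressed flag) is ignored.
    """
    raw = 8 * len(tile)
    base = tile[0]
    max_abs = max(abs(v - base) for v in tile)
    # Smallest width w whose signed range covers +/- max_abs (conservative).
    width = 1
    while 2 ** (width - 1) - 1 < max_abs:
        width += 1
    compressed = 8 + width * len(tile)
    # Fall back to raw storage if compression does not help.
    return min(raw, compressed)


def traffic_ratio(tiles: List[List[int]]) -> float:
    """Fraction of the uncompressed bits that actually cross the memory bus."""
    raw = sum(8 * len(t) for t in tiles)
    packed = sum(tile_bits(t) for t in tiles)
    return packed / raw


def effective_bandwidth(raw_gbps: float, ratio: float) -> float:
    """If only `ratio` of the data must be moved, the same bus delivers
    1 / ratio times as much uncompressed data per second."""
    return raw_gbps / ratio


if __name__ == "__main__":
    flat = [100] * 16                 # uniform area, e.g. a sky: compresses well
    gradient = list(range(100, 116))  # smooth gradient: compresses somewhat
    noisy = [0, 255] * 8              # high-contrast noise: stored raw
    ratio = traffic_ratio([flat, gradient, noisy])
    print(f"traffic ratio: {ratio:.3f}")
    # 100.0 GB/s is an arbitrary example figure, not a real card's spec.
    print(f"effective bandwidth: {effective_bandwidth(100.0, ratio):.1f} GB/s")
```

The design point is that compression only pays off on tiles with little variation; noisy tiles gain nothing, which is why any real-world gain depends on the content being rendered.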

This becomes clear if you look at any side-by-side comparison between AMD and Nvidia cards: the Nvidia card uses less VRAM, sometimes only a few MB less, but sometimes as much as 1 GB less. Could this be the reason why some people say AMD has better colors than Nvidia?

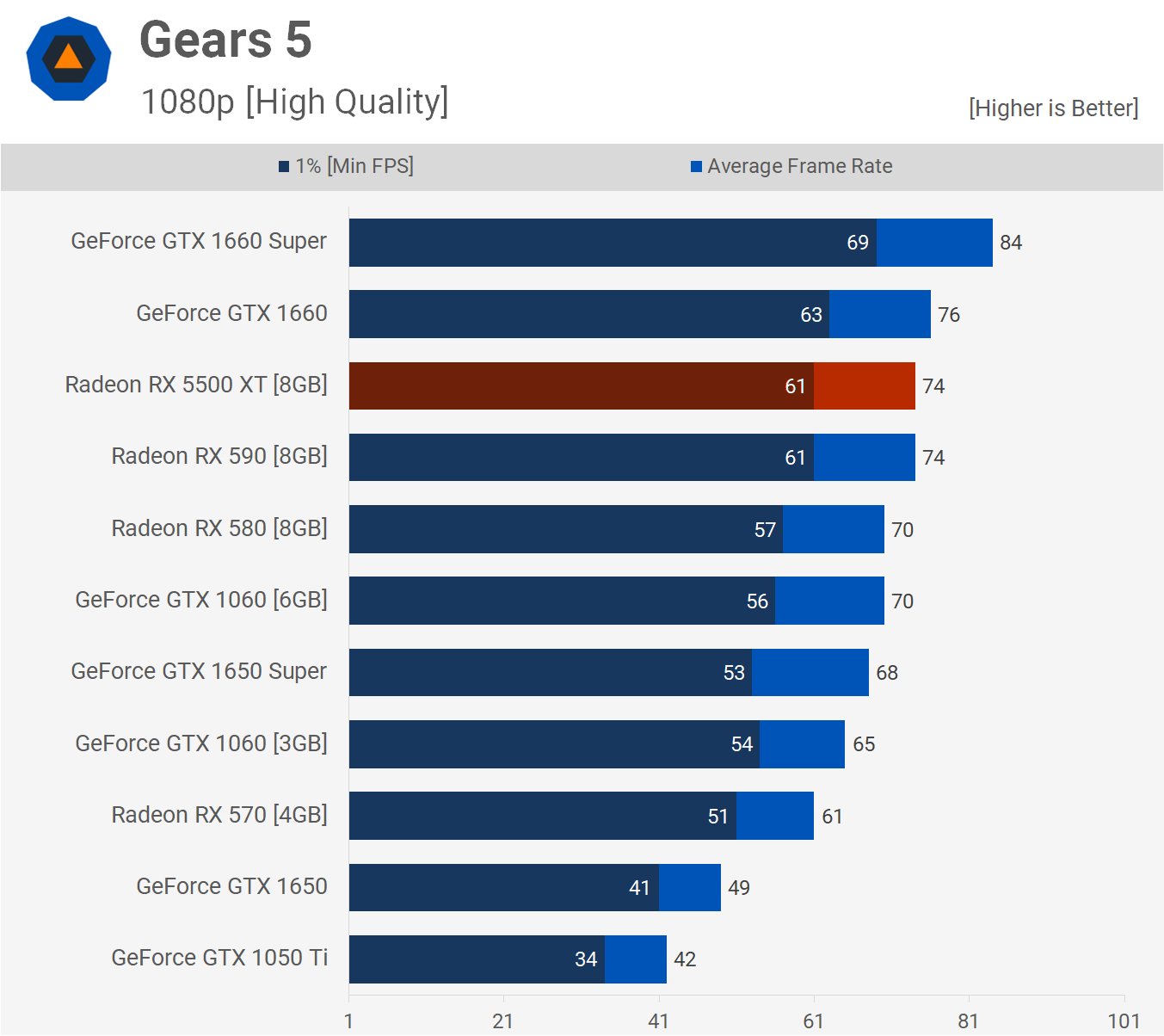

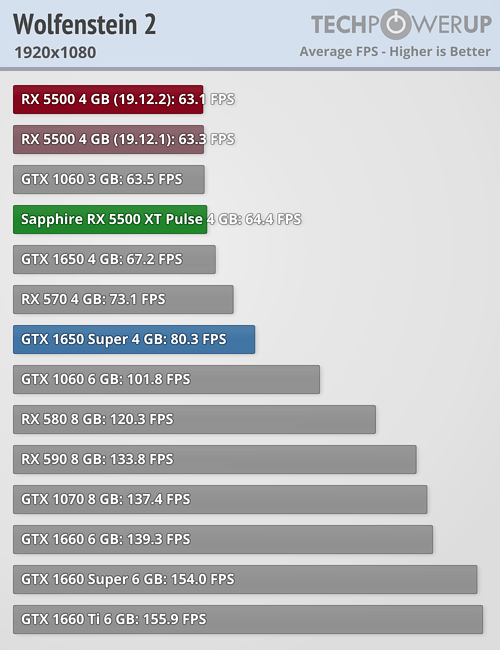

So yeah, some games can choke the hell out of the RX 5500 XT 4GB while the GTX 1650 Super performs just fine.